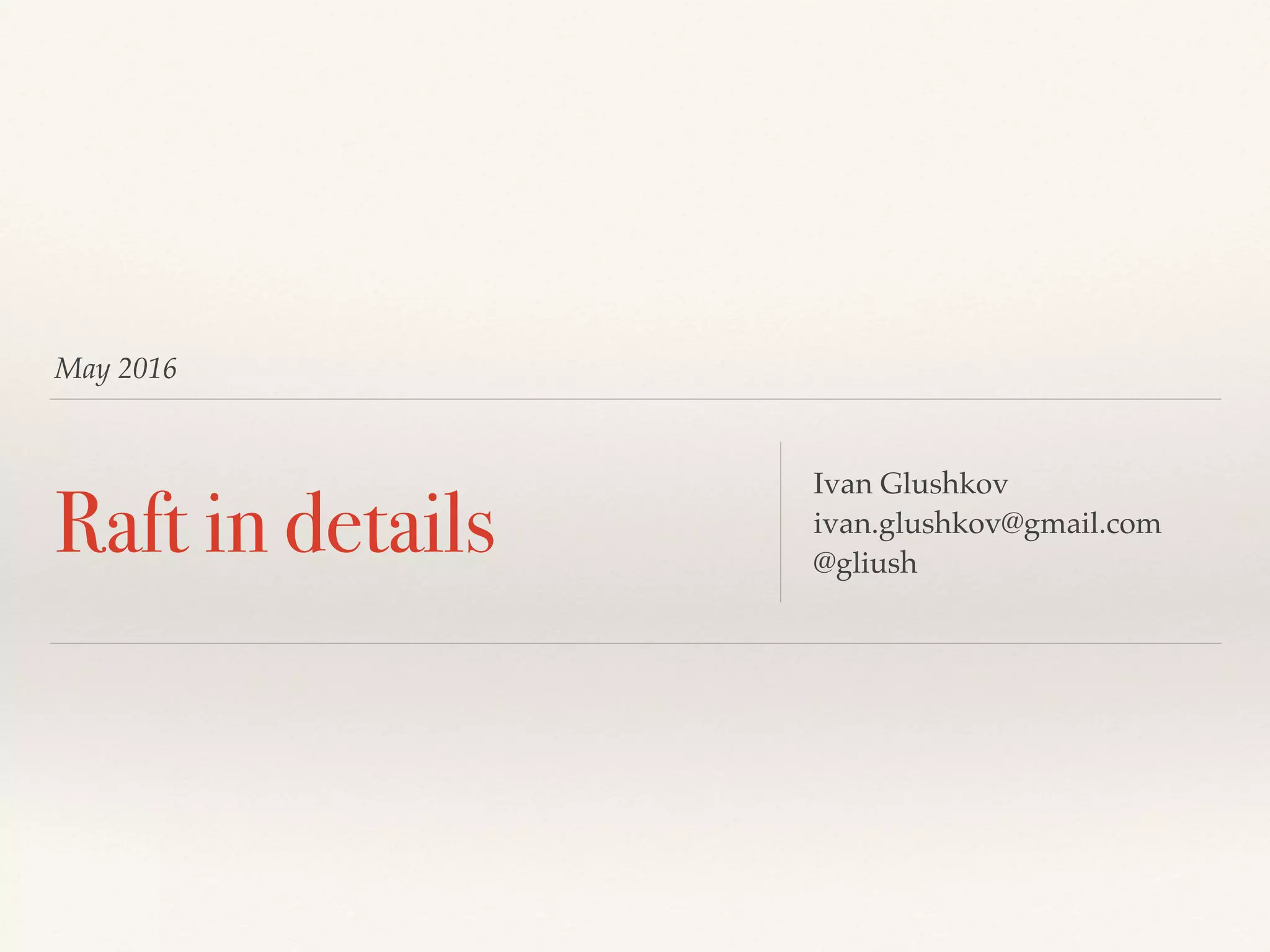

Raft is a consensus algorithm that keeps a replicated log consistent across multiple servers. It elects a single leader that is responsible for log replication. The leader appends entries to its own log and replicates them to the other servers; an entry is committed once it is stored on a majority of servers. This supports safety properties such as state machine safety and leader completeness. Raft aims to be more understandable than alternatives like Paxos by decomposing the problem into separate subproblems and reducing the state space.
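The majority-commit rule described above can be illustrated with a minimal sketch. It is not taken from the slides: the function names, the `match_index` list (the highest log index known to be stored on each server, leader included), and the `log_terms` list are all hypothetical names chosen for illustration. The check that the entry belongs to the leader's current term follows the rule in the Raft paper and is not stated in the summary above.

```python
from typing import List


def majority_match_index(match_index: List[int]) -> int:
    """Return the highest log index stored on a majority of servers.

    match_index holds one entry per server (leader included); the name
    is a hypothetical stand-in for the leader's replication bookkeeping.
    """
    ranked = sorted(match_index, reverse=True)
    majority = len(ranked) // 2 + 1
    # The majority-th largest value is held by at least `majority` servers.
    return ranked[majority - 1]


def advance_commit_index(
    commit_index: int,
    match_index: List[int],
    log_terms: List[int],
    current_term: int,
) -> int:
    """Return the new commit index for the leader.

    log_terms[i - 1] is the term of the entry at 1-based log index i.
    Assumption: the leader only commits an entry from its own term by
    counting replicas, as described in the Raft paper.
    """
    candidate = majority_match_index(match_index)
    if candidate > commit_index and log_terms[candidate - 1] == current_term:
        return candidate
    return commit_index


if __name__ == "__main__":
    # Five servers: three of them hold entries up to index 4.
    print(advance_commit_index(2, [5, 5, 4, 2, 1], [1, 1, 2, 2, 2], 2))
```

Because terms never decrease along a log, checking only the majority index is enough in this sketch: if that entry is from an older term, every earlier entry is too, so nothing new can be committed by counting replicas.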

![Why](https://image.slidesharecdn.com/raftindetails-160519125002/75/Raft-in-details-3-2048.jpg)

Why

❖ Very important topic

❖ [Multi-]Paxos, the key player, is very difficult to understand

❖ Paxos -> Multi-Paxos -> different approaches

❖ Different optimization, closed-source implementation