This document summarizes the Google File System (GFS) and covers the key points of its design:

- Files are divided into fixed-size 64 MB chunks. The large chunk size reduces how often clients must contact the master and keeps the amount of metadata small.

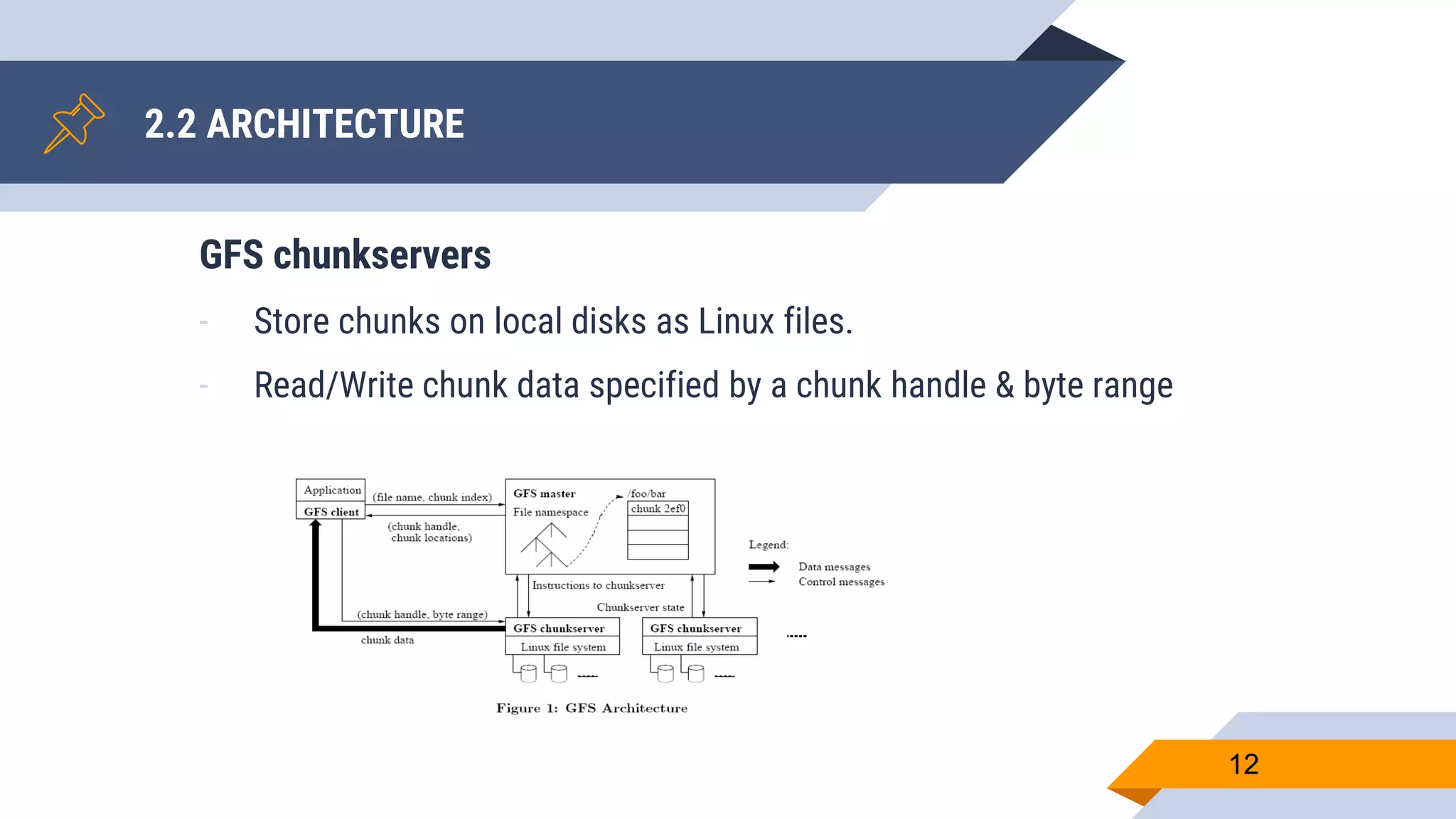

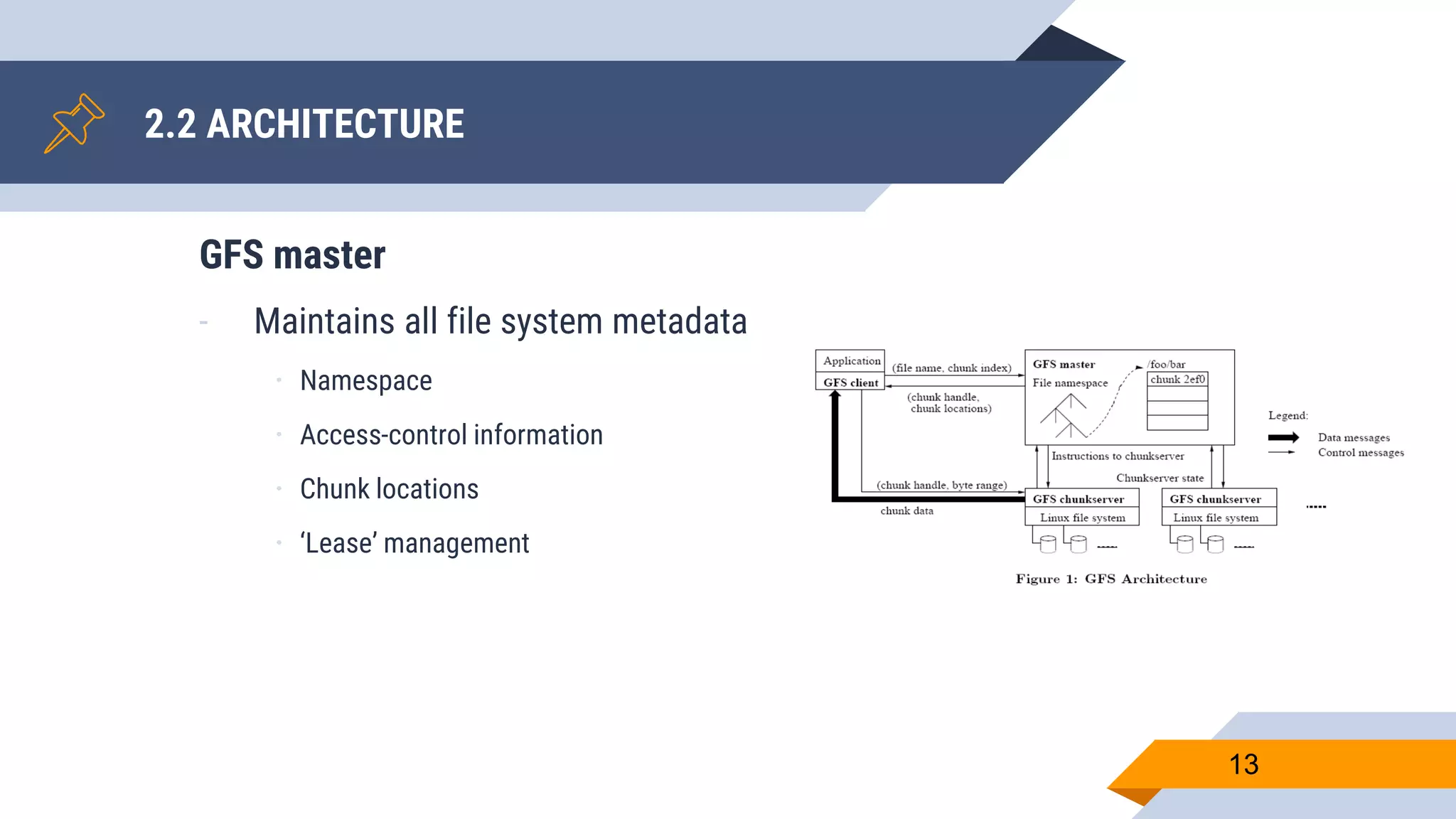

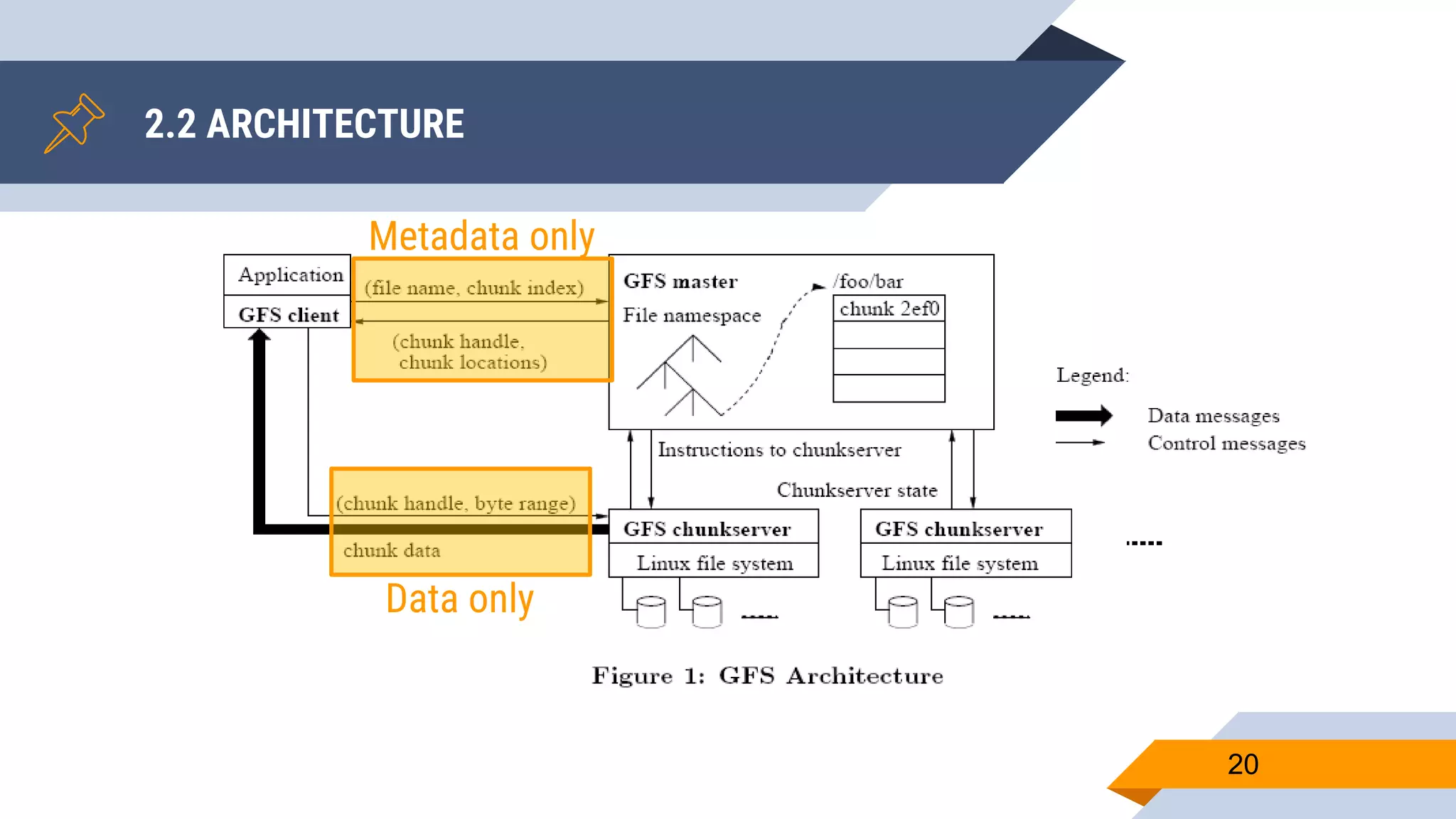

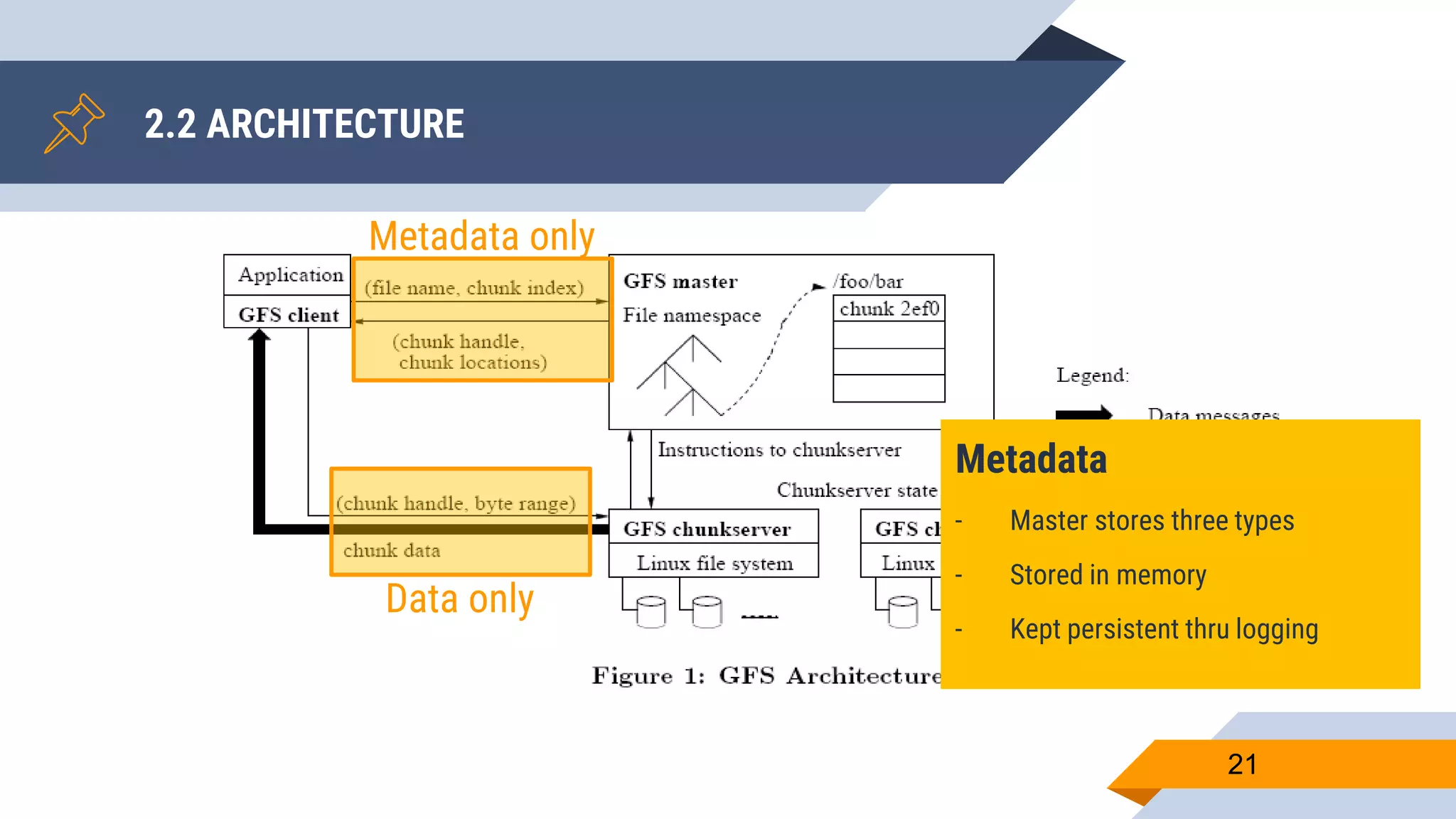

- Metadata is stored on a single master server, while the data chunks themselves are stored on chunkservers.

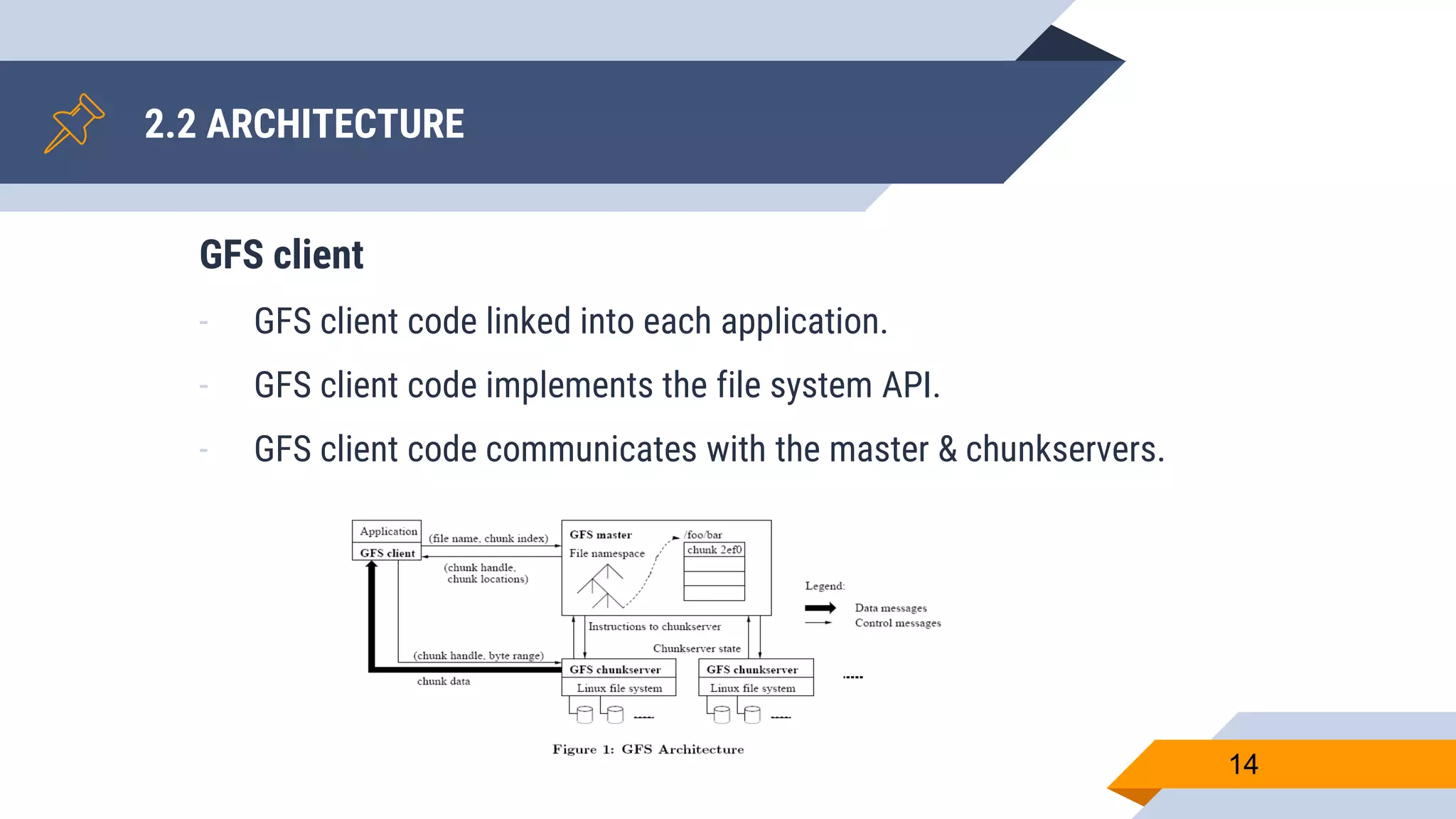

- The master manages file system metadata and chunk locations. Clients ask the master only for metadata, then read and write chunk data directly with the chunkservers.

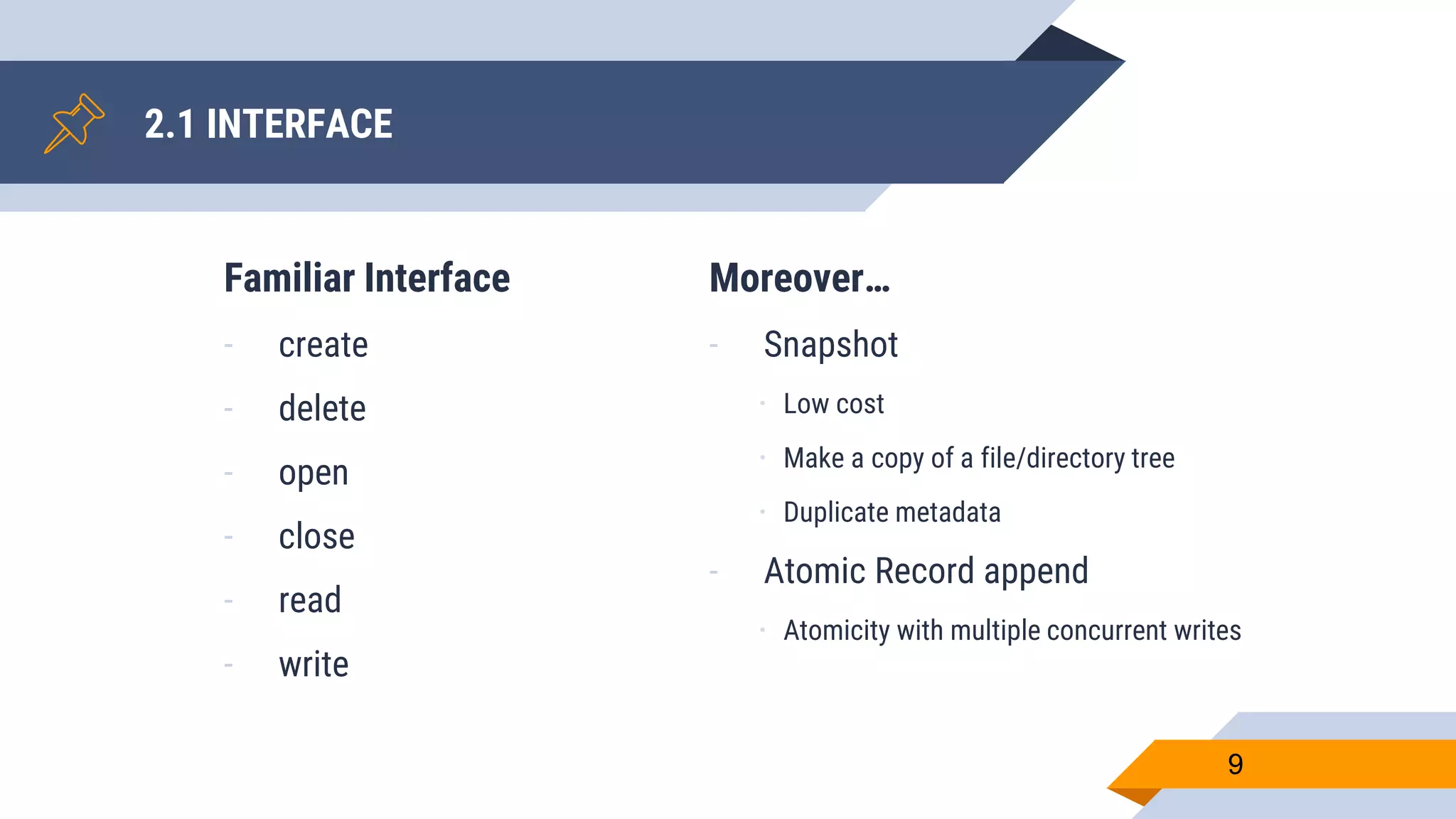

- GFS uses leases to coordinate updates across chunk replicas, and it supports atomic appends and snapshots. Together these features provide consistency and fault tolerance.
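
The read path implied by the points above can be sketched in a few lines of Python. This is a toy model, not GFS code: the names `ToyMaster`, `ToyChunkserver`, `chunk_spans`, and `read_file` are hypothetical, everything lives in one process, and the client simply picks the first listed replica. It only illustrates how a byte offset maps to a 64 MB chunk, and how the master answers metadata lookups while chunkservers return the actual bytes.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB, the fixed chunk size described above


def chunk_spans(offset, length, chunk_size=CHUNK_SIZE):
    """Split a byte range into (chunk_index, offset_in_chunk, nbytes) pieces."""
    spans = []
    end = offset + length
    while offset < end:
        index = offset // chunk_size           # which chunk holds this byte
        start = offset % chunk_size            # position inside that chunk
        nbytes = min(chunk_size - start, end - offset)
        spans.append((index, start, nbytes))
        offset += nbytes
    return spans


class ToyMaster:
    """Hypothetical master: holds metadata only, never file contents."""

    def __init__(self):
        # (file name, chunk index) -> (chunk handle, list of chunkserver names)
        self.chunks = {}

    def lookup(self, filename, chunk_index):
        return self.chunks[(filename, chunk_index)]


class ToyChunkserver:
    """Hypothetical chunkserver: stores chunk bytes keyed by chunk handle."""

    def __init__(self):
        self.data = {}

    def read(self, handle, start, nbytes):
        return self.data[handle][start:start + nbytes]


def read_file(master, servers, filename, offset, length, chunk_size=CHUNK_SIZE):
    """Client read: metadata from the master, data from a chunkserver."""
    out = bytearray()
    for index, start, nbytes in chunk_spans(offset, length, chunk_size):
        handle, locations = master.lookup(filename, index)
        # Simplification: always use the first replica in the list.
        out += servers[locations[0]].read(handle, start, nbytes)
    return bytes(out)
```

A read that crosses a chunk boundary touches two chunks, which may live on different chunkservers. With a tiny `chunk_size` of 4 bytes for illustration, reading 4 bytes at offset 2 of a file stored as `b"abcd"` and `b"efgh"` yields `b"cdef"`, and the master never handles any of the file data.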