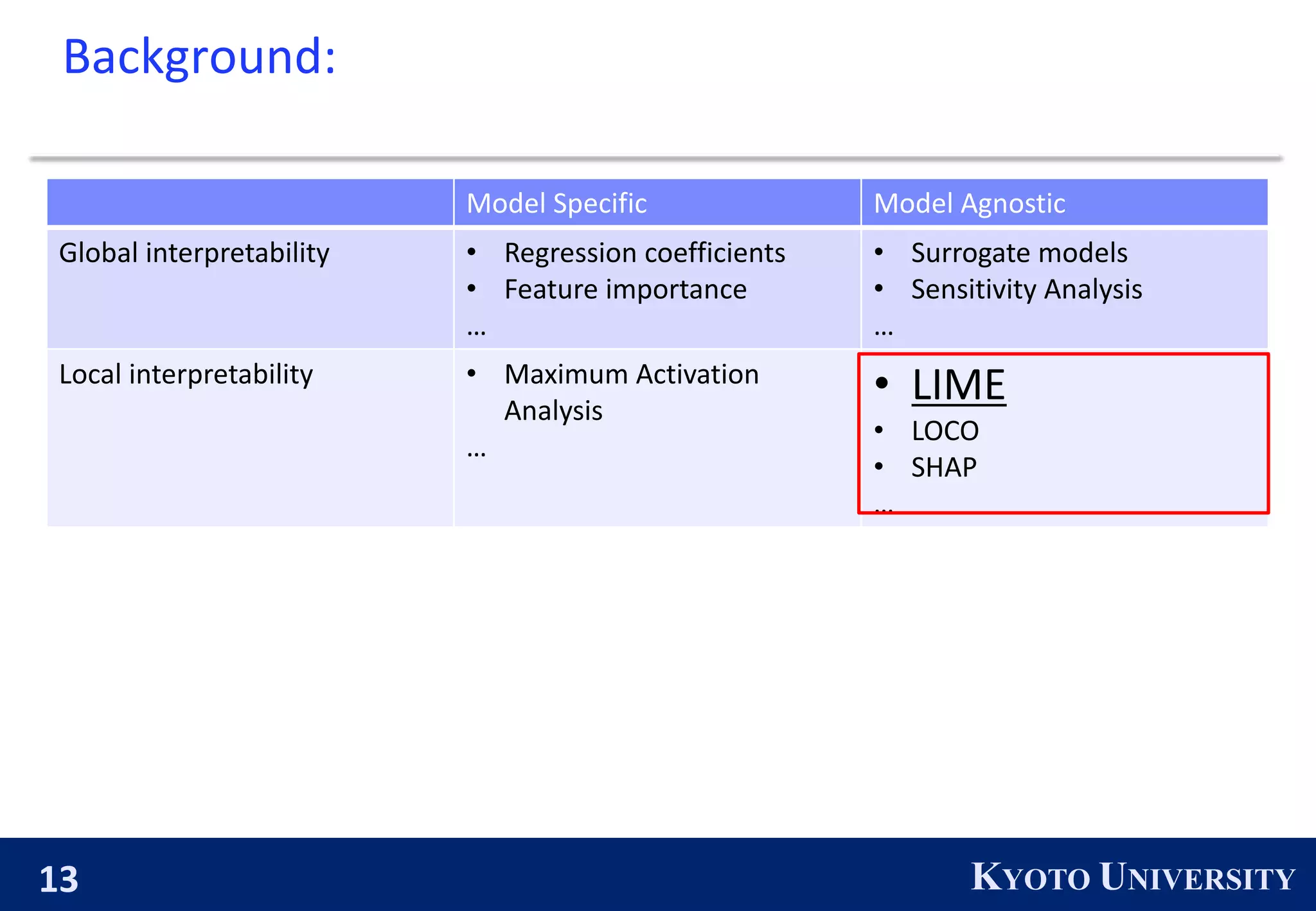

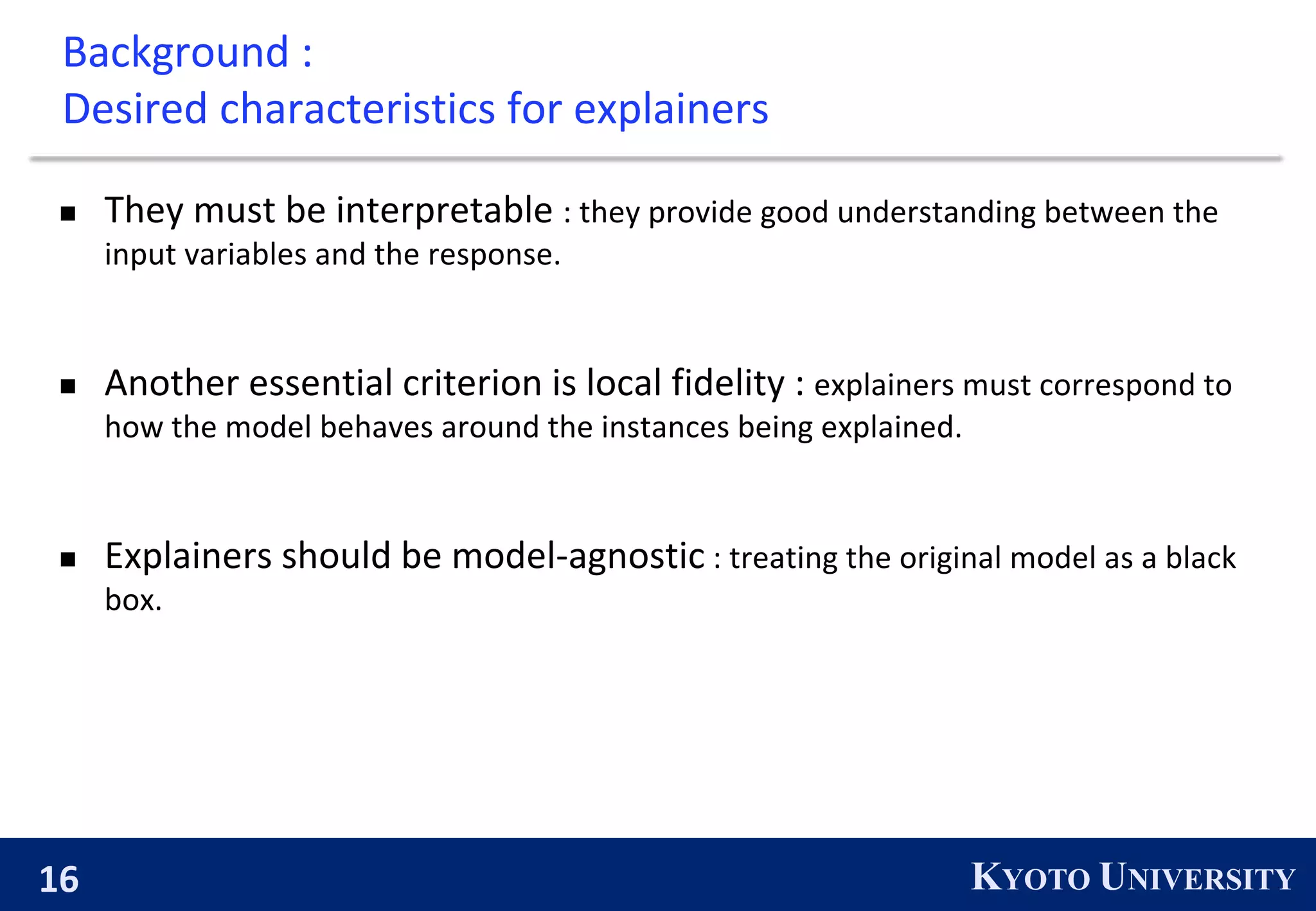

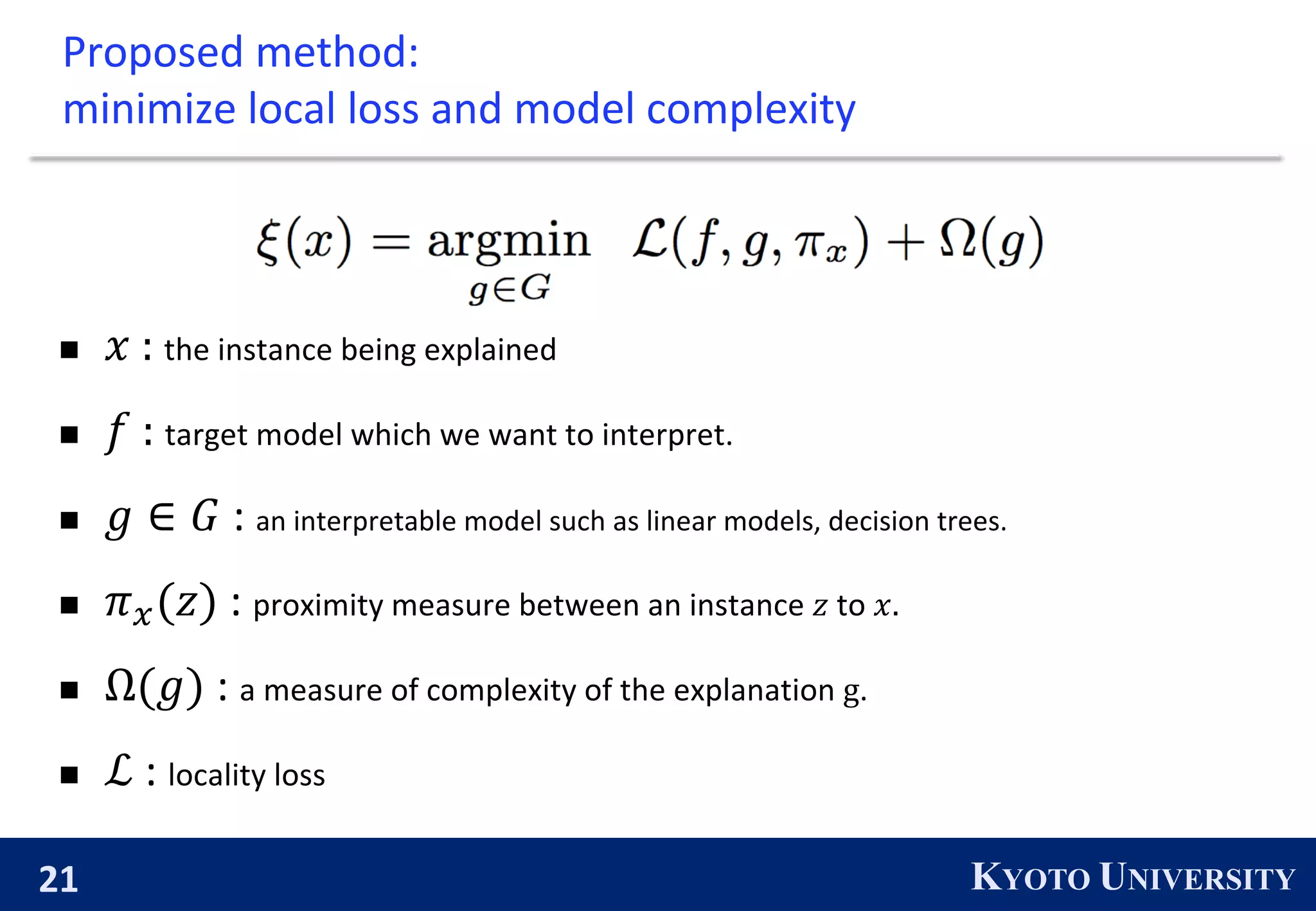

1) The document discusses LIME (Local Interpretable Model-Agnostic Explanations), a method for explaining individual predictions of any machine learning model. LIME fits an interpretable model locally around the prediction being explained, so that it approximates the original model's behavior in that neighborhood.
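The local-approximation idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: it perturbs the instance with Gaussian noise, weights samples with an exponential proximity kernel, and fits a weighted ridge regression as the interpretable model. The function name `explain_locally` and the parameters `num_samples`, `kernel_width`, and `ridge` are hypothetical choices made for this sketch.

```python
import numpy as np

def explain_locally(predict_fn, x, num_samples=1000, kernel_width=0.75,
                    ridge=1e-3, seed=0):
    """Fit a weighted linear surrogate to predict_fn around the instance x.

    Assumptions of this sketch: Gaussian perturbations, an exponential
    proximity kernel, and ridge regression as the interpretable model.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]

    # 1. Sample perturbed points in the neighborhood of x.
    Z = x + rng.normal(scale=1.0, size=(num_samples, d))

    # 2. Query the black-box model on the perturbed points.
    y = predict_fn(Z)

    # 3. Weight each sample by its proximity to x.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / (kernel_width ** 2))

    # 4. Solve the weighted ridge problem on centered features,
    #    leaving the intercept unregularized.
    A = np.hstack([np.ones((num_samples, 1)), Z - x])
    reg = ridge * np.eye(d + 1)
    reg[0, 0] = 0.0
    coef = np.linalg.solve(A.T @ (w[:, None] * A) + reg, A.T @ (w * y))

    # coef[1:] are the local feature weights; coef[0] approximates f(x).
    return coef[1:], coef[0]


# Usage with a hypothetical black-box model (a logistic function of two features).
black_box = lambda Z: 1.0 / (1.0 + np.exp(-(3.0 * Z[:, 0] - 2.0 * Z[:, 1])))
weights, intercept = explain_locally(black_box, np.array([0.0, 0.0, 0.0]))
print(weights, intercept)
```

The returned weights play the role of the explanation: their signs and magnitudes indicate how each feature pushes the prediction near `x`. Because the surrogate is fit only on weighted nearby samples, it says nothing about the model's behavior far from `x`.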

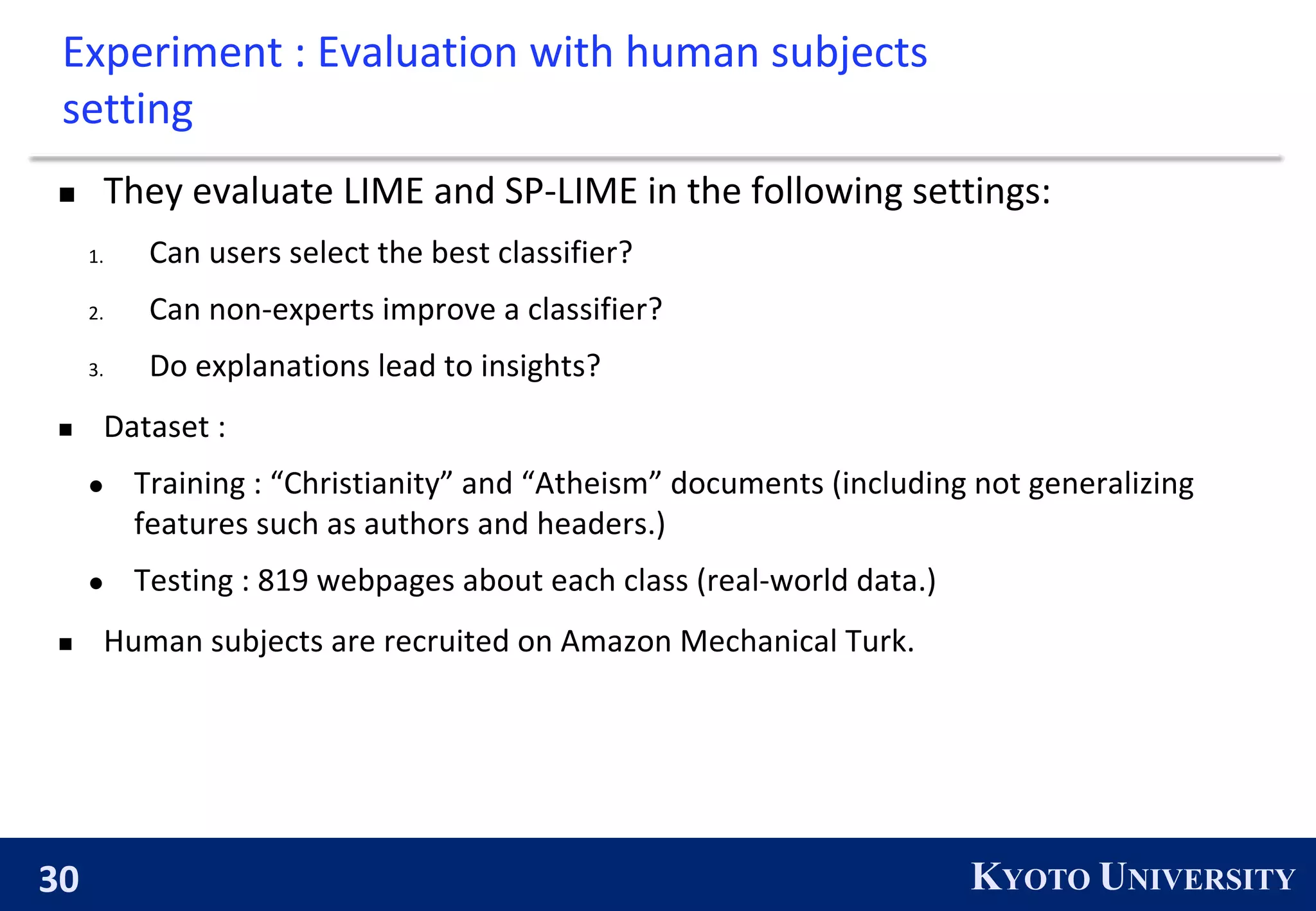

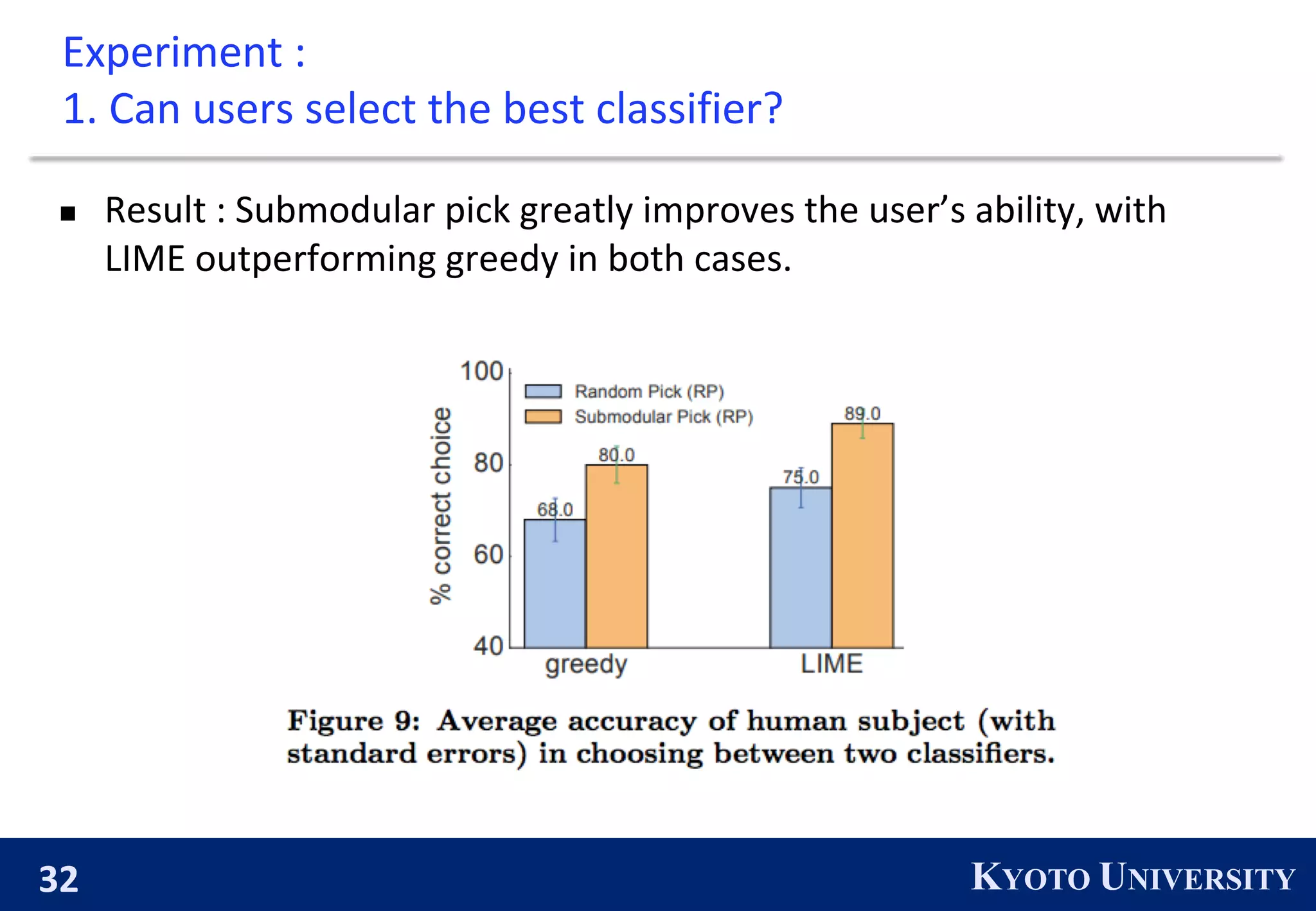

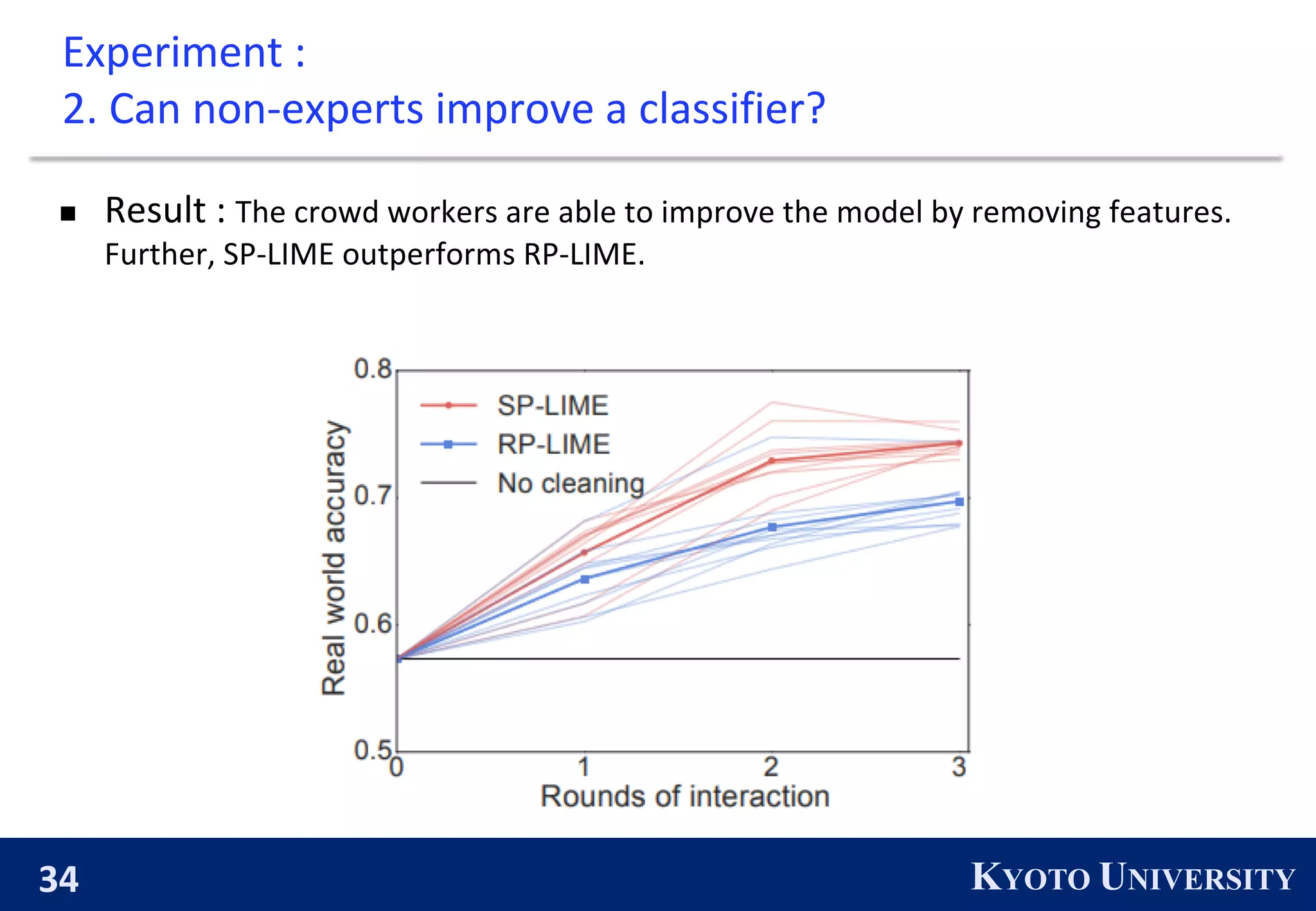

2) Experiments show that LIME explanations help human subjects select better-performing classifiers, identify features whose removal improves a classifier, and gain insight into how classifiers work.

3) SP-LIME is introduced to give a global view of a model: it selects a representative set of predictions, together with their explanations, by maximizing coverage of the important features.
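The coverage-maximizing selection in point 3 can be sketched as a greedy pick over a matrix of explanation weights. This is a hedged illustration rather than the reference implementation: `W` is assumed to hold one row of explanation weights per instance, the global importance of a feature is assumed to be the square root of its summed absolute weights, and the function name `submodular_pick` and the `budget` parameter are hypothetical.

```python
import numpy as np

def submodular_pick(W, budget):
    """Greedily choose up to `budget` instances whose explanations
    together cover the most globally important features.

    W: (n_instances, n_features) array of explanation weights.
    Assumption of this sketch: importance_j = sqrt(sum_i |W_ij|).
    """
    W = np.abs(np.asarray(W, dtype=float))
    importance = np.sqrt(W.sum(axis=0))
    present = W > 0                      # which features each explanation uses

    covered = np.zeros(W.shape[1], dtype=bool)
    picked = np.zeros(W.shape[0], dtype=bool)
    chosen = []

    for _ in range(min(budget, W.shape[0])):
        # Marginal coverage gain: importance of features not yet covered.
        gains = (present & ~covered) @ importance
        gains[picked] = -1.0             # never pick the same instance twice
        best = int(np.argmax(gains))
        if gains[best] <= 0:             # nothing new left to cover
            break
        chosen.append(best)
        picked[best] = True
        covered |= present[best]

    return chosen


# Usage with a small made-up weight matrix (4 explanations, 4 features).
W = [[1, 1, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 1, 1],
     [1, 0, 0, 0]]
print(submodular_pick(W, budget=2))
```

Greedy selection is a natural fit here because a coverage objective of this kind has diminishing returns: each additional instance can only add features that earlier picks have not already covered.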