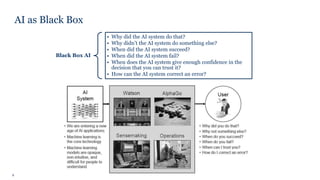

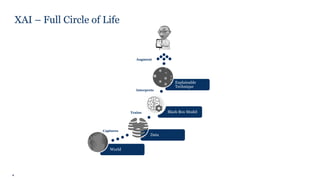

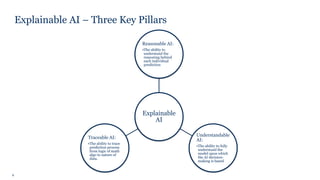

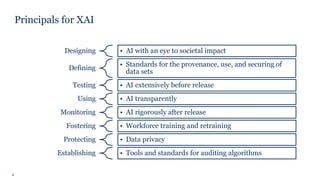

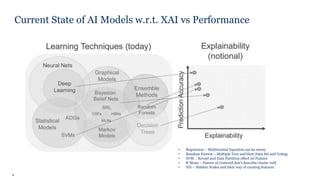

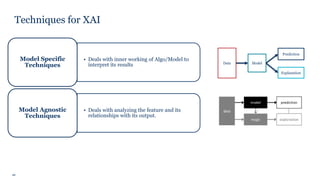

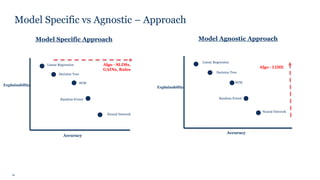

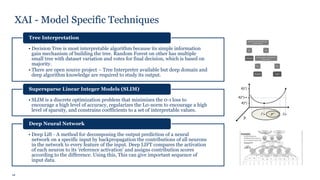

The document discusses explainable AI (XAI) and its role in understanding how AI systems reach decisions, organized around three pillars: reasonable AI, understandable AI, and traceable AI. It outlines the limitations and challenges of XAI, including those related to feature importance, domain nuances, and the complexities of different model types. It also highlights the business benefits of XAI, such as improved performance, better decision-making, and regulatory compliance.