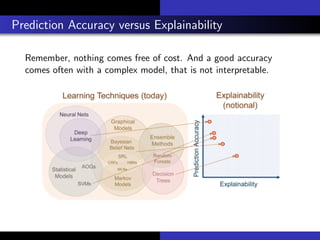

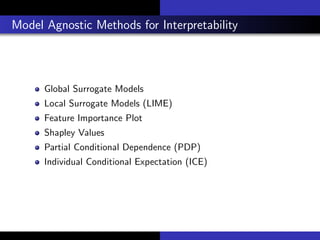

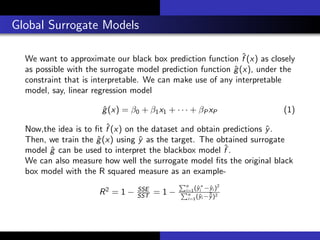

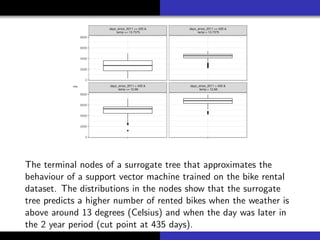

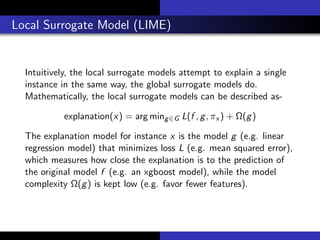

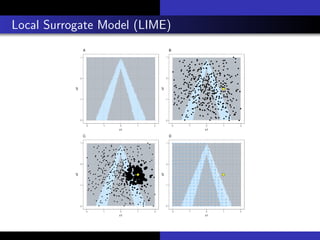

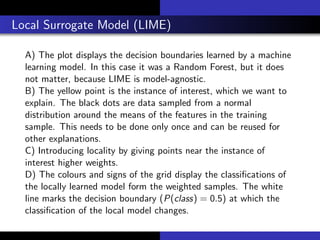

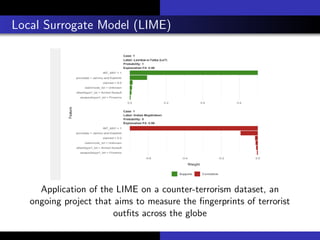

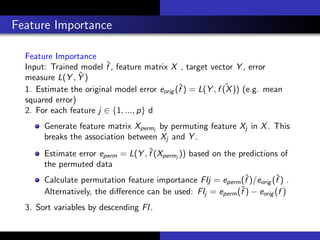

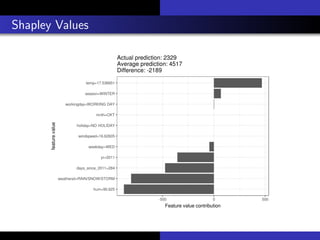

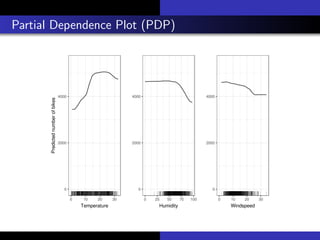

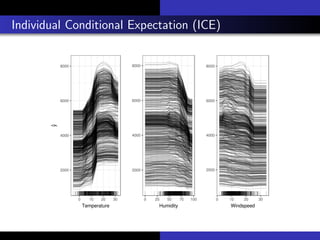

1) The document surveys methods for interpreting machine learning models: global and local surrogate models, feature importance plots, Shapley values, partial dependence plots (PDPs), and individual conditional expectation (ICE) plots.

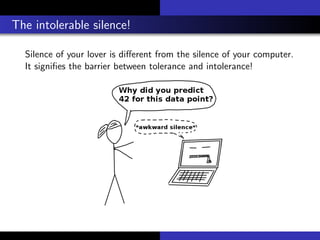

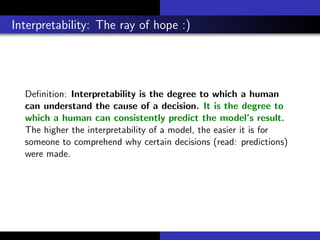

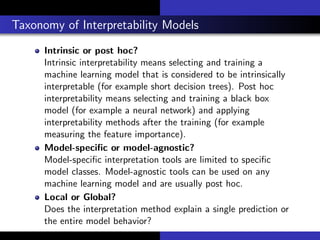

2) It defines interpretability as the degree to which humans can understand the reasons behind a model's predictions. Interpretability methods provide either global explanations of an entire model or local explanations of individual predictions.

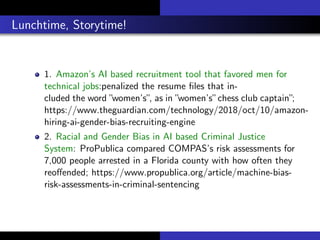

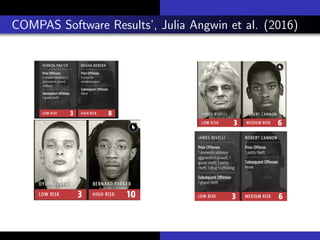

3) The document argues that improving interpretability is important for addressing problems such as bias in machine learning systems and for increasing trust in applications that inform high-stakes decisions, such as those in criminal justice.
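
To make the distinction between global and local explanations from the summary more concrete, here is a minimal sketch of three of the methods it names: ICE curves (local, one curve per instance), a partial dependence curve (global, the average of the ICE curves), and permutation feature importance (global). It is not taken from the slides. The `black_box` function is a hypothetical stand-in for a trained model, the data is synthetic, and the helper names are illustrative assumptions. Only the Python standard library is used.

```python
import random
from statistics import fmean


def black_box(row):
    """Hypothetical stand-in for a trained model; it ignores feature 2."""
    return 3.0 * row[0] + row[1] ** 2


def ice_curves(predict, rows, feature, grid):
    """Local view: for each row, sweep one feature over the grid, others fixed."""
    curves = []
    for row in rows:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def partial_dependence(predict, rows, feature, grid):
    """Global view: the pointwise average of all ICE curves."""
    curves = ice_curves(predict, rows, feature, grid)
    return [fmean(curve[j] for curve in curves) for j in range(len(grid))]


def mse(predict, rows, targets):
    """Mean squared error of the model on the given rows."""
    return fmean((predict(r) - t) ** 2 for r, t in zip(rows, targets))


def permutation_importance(predict, rows, targets, n_repeats=10, seed=0):
    """Global view: mean increase in MSE when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(predict, rows, targets)
    scores = []
    for f in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:f] + [v] + r[f + 1:] for r, v in zip(rows, column)]
            increases.append(mse(predict, shuffled, targets) - baseline)
        scores.append(fmean(increases))
    return scores


# Synthetic data (an assumption for illustration): 300 rows, 3 features.
data_rng = random.Random(42)
rows = [[data_rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(300)]
targets = [black_box(r) for r in rows]
grid = [-2.0, -1.0, 0.0, 1.0, 2.0]

ice = ice_curves(black_box, rows, feature=0, grid=grid)
pdp = partial_dependence(black_box, rows, feature=0, grid=grid)
importance = permutation_importance(black_box, rows, targets)
```

Because the stand-in model is linear in feature 0, every ICE curve and the partial dependence curve rise by 3 per unit step of the grid. Feature 2 receives zero importance because the model never reads it. With a real model, `black_box` would be replaced by that model's prediction function.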