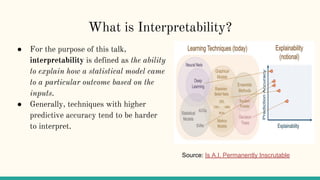

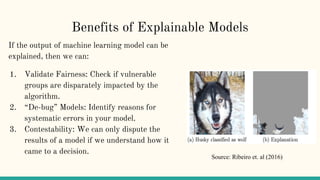

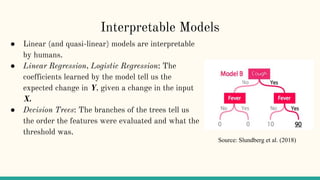

1. Interpretability helps us understand complex machine learning models by explaining how their outputs depend on their inputs. There is often a trade-off: models with higher predictive accuracy tend to be harder to interpret.

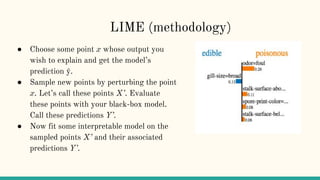

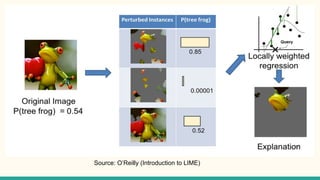

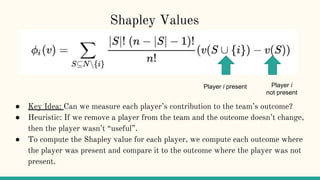

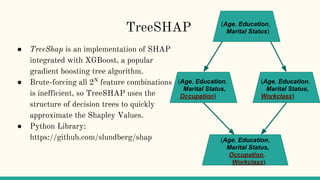

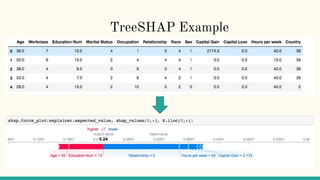

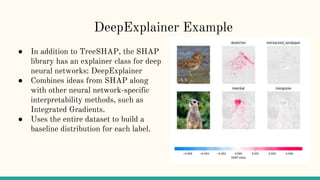

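2. Methods like LIME and SHAP attribute a model's outcome to its input features. LIME fits a simple local surrogate model around an individual prediction, while SHAP assigns each feature a Shapley value from cooperative game theory.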
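
To make the game-theoretic idea concrete, here is a minimal sketch that computes exact Shapley values for a tiny model by enumerating every feature coalition. It is not the SHAP library's implementation: the names `shapley_values` and `toy_model` are hypothetical, and "removing" a feature is approximated by replacing it with a fixed baseline value, which is a simplifying assumption. Exact enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attribution of predict(x) - predict(baseline).

    Assumption: a feature that is "absent" from a coalition takes its
    value from `baseline` instead of from `x`.
    """
    n = len(x)

    def value(coalition):
        # Features in the coalition come from x, the rest from baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical model: two linear terms plus one interaction term.
    return 2.0 * z[0] + 1.0 * z[1] + 0.5 * z[0] * z[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The attributions add up to the difference between the prediction for `x` and the prediction for the baseline, and the interaction term is shared equally between the two features involved in it.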

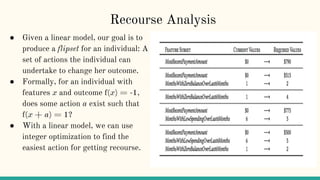

3. Recourse analysis identifies the actions an individual could take to turn an unfavorable automated decision into a favorable one.
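
As an illustration of recourse, the sketch below assumes a linear scoring model that approves an applicant when the score reaches a threshold, and asks which single actionable feature could be changed, and by how much, to reach approval. The function name, the weights, and the feature meanings are all hypothetical, and the cost of an action is taken to be the absolute change in raw feature units, which is a simplification; practical recourse methods use richer cost functions and feasibility constraints.

```python
def single_feature_recourse(weights, bias, x, actionable, threshold=0.0):
    """List single-feature changes that lift a linear score to the threshold.

    Returns (feature_index, required_change) pairs, smallest absolute
    change first. Returns an empty list if x is already approved.
    """
    score = bias + sum(w * v for w, v in zip(weights, x))
    if score >= threshold:
        return []

    gap = threshold - score
    options = []
    for i in actionable:
        if weights[i] != 0:
            # Change in feature i that closes the gap on its own.
            delta = gap / weights[i]
            options.append((abs(delta), i, delta))

    options.sort()
    return [(i, delta) for _, i, delta in options]


# Hypothetical features: [income, missed payments, age].
weights = [0.5, -1.0, 0.125]
bias = -6.0
applicant = [4.0, 2.0, 32.0]

# Age is treated as non-actionable, so only features 0 and 1 are considered.
actions = single_feature_recourse(weights, bias, applicant, actionable=[0, 1])
print(actions)
```

Here the applicant's score is below the threshold, and the result lists two alternatives: reduce missed payments by 2, or raise income by 4 units. Restricting `actionable` is what distinguishes recourse from a plain counterfactual: it excludes changes the individual cannot make.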