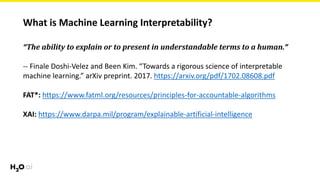

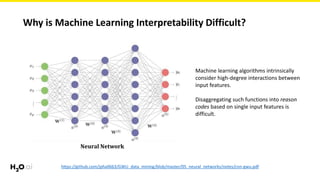

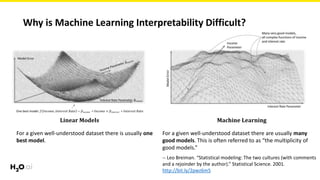

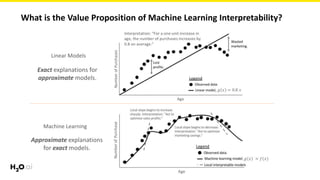

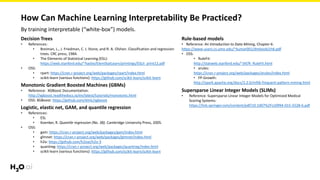

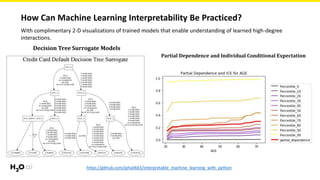

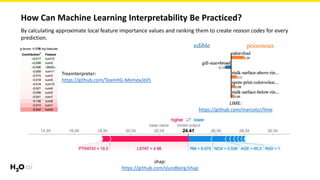

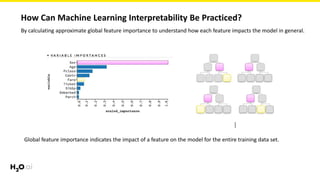

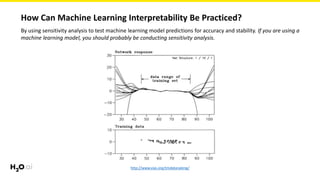

The document discusses machine learning interpretability, which it defines as the ability to explain a model's behavior in terms a human can understand, and argues that it matters for both social and commercial reasons. It notes that the complexity of machine learning models makes interpretability hard to achieve, and it suggests three practices for improving it: using inherently interpretable models, visualizing model behavior, and running sensitivity analysis. It also covers methods and tools such as SHAP and LIME for assessing model explanations, and gives recommendations for applying them.
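To make the sensitivity analysis idea concrete, here is a minimal sketch in plain Python. It nudges one input feature at a time and records how much the prediction changes. The names `toy_model`, `sensitivity`, and `delta` are hypothetical and chosen only for illustration; they do not come from the document, and this is not how SHAP or LIME compute their explanations.

```python
from typing import Callable, List, Sequence


def toy_model(x: Sequence[float]) -> float:
    """Hypothetical black-box model used only for illustration.

    It depends linearly on x[0], quadratically on x[1],
    and ignores x[2] entirely.
    """
    return 3.0 * x[0] + 0.5 * x[1] ** 2


def sensitivity(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    delta: float = 1e-3,
) -> List[float]:
    """One-at-a-time sensitivity: change in prediction per unit change
    in each feature, estimated with a forward finite difference at x."""
    base = predict(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)      # copy so the original point is untouched
        perturbed[i] += delta    # nudge only feature i
        scores.append((predict(perturbed) - base) / delta)
    return scores


point = [1.0, 2.0, 5.0]
scores = sensitivity(toy_model, point)
for i, s in enumerate(scores):
    print(f"feature {i}: sensitivity {s:.4f}")
```

A larger absolute score suggests the prediction reacts more strongly to that feature near the chosen point. The result is local: a different `point` can give a different ranking, which is one reason a tool such as SHAP or LIME may be preferable for a fuller explanation.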