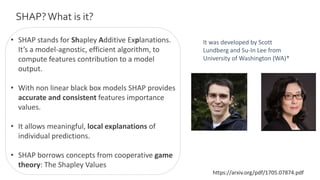

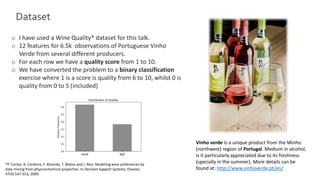

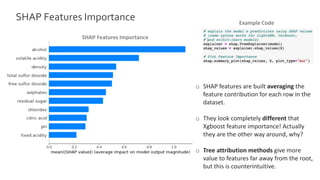

The document discusses the importance of interpretability in machine learning models, emphasizing that trust in a model's predictions is crucial for social acceptance. It highlights SHAP (SHapley Additive exPlanations), a method grounded in Shapley values from cooperative game theory, as a way to obtain consistent feature attributions. The text also includes practical examples of applying SHAP to understand model outputs in terms of how much each feature contributes to a prediction.
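To make the game-theoretic idea concrete, here is a minimal sketch of exact Shapley value computation for a single prediction. It is an illustration only, not the document's method: `shapley_values`, `toy_model`, the input `x`, and the all-zero `baseline` are hypothetical names and values, and "absent" features are assumed to be replaced by the baseline value. Practical SHAP implementations typically rely on approximations or model-specific algorithms, since this brute-force enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input by enumerating all feature subsets.

    Assumption: a feature that is "absent" from a coalition takes its value
    from `baseline` instead of from `x`.
    """
    n = len(x)

    def value(subset):
        # Model output when only the features in `subset` take their real values.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = set(coalition)
                # Marginal contribution of feature i when it joins coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical model: one additive term plus one interaction term.
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

In this toy case the additive feature receives its full effect, while the two interacting features split their joint effect equally. The attributions also sum to the difference between the prediction for `x` and the prediction for `baseline`, which is the additivity property that gives SHAP its name.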