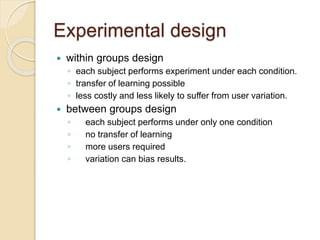

Chapter 9 covers evaluation techniques for Human-Computer Interaction (HCI), including cognitive walkthroughs, heuristic evaluation, and laboratory and field studies. It stresses that usability and functionality should be assessed throughout the design life cycle, and it describes methods for collecting and analyzing data, covering both experimental conditions and participant observation. The chapter also addresses interviews, questionnaires, and physiological measurements as ways to gather insight into how users interact with a system.