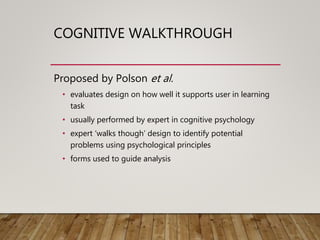

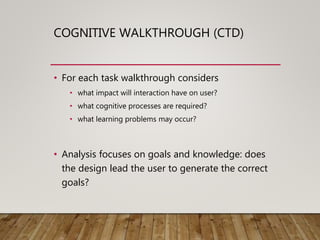

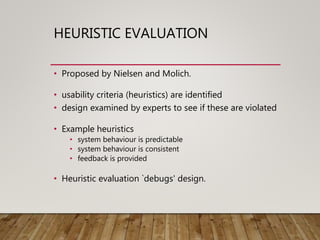

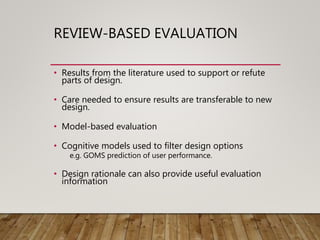

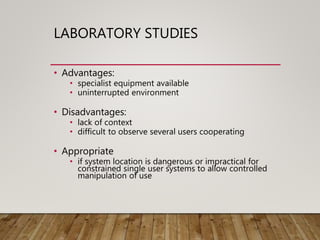

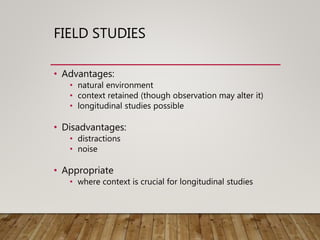

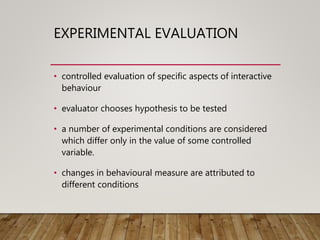

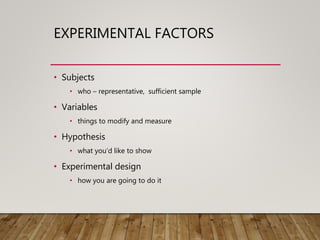

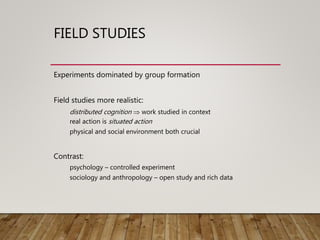

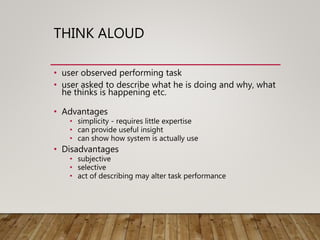

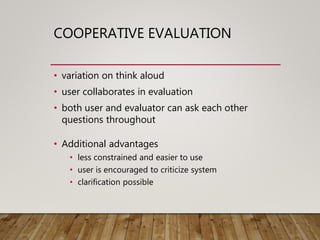

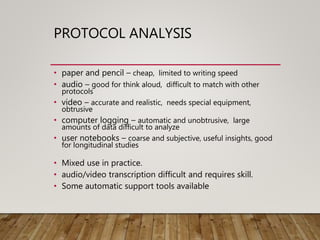

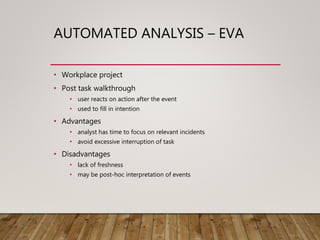

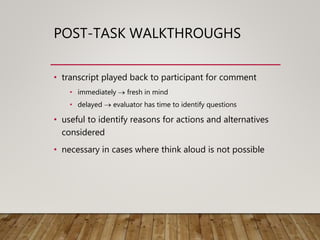

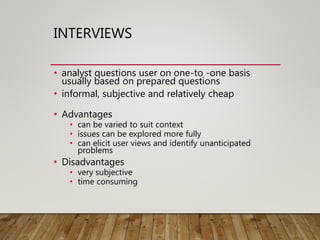

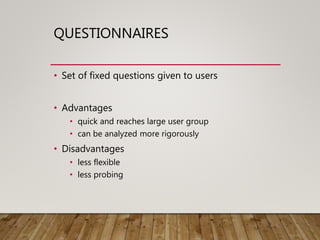

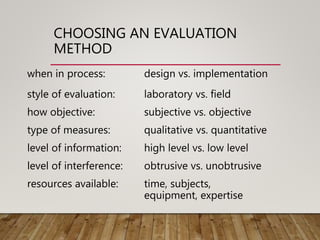

This document discusses techniques for evaluating user interface designs and implementations, including cognitive walkthroughs, heuristic evaluations, laboratory and field studies, and physiological methods. It describes three goals of evaluation: assessing system functionality, assessing the effect of the interface on users, and identifying specific problems. Each technique is examined in terms of its advantages, disadvantages, and appropriateness for different evaluation purposes and stages of the design process.