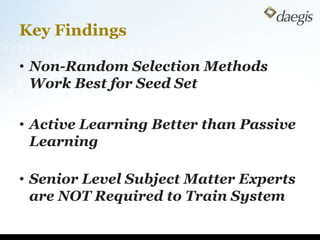

This document provides an overview of predictive coding technology for prioritizing or coding electronic documents. It defines predictive coding as a process in which human judgments on a small seed set of documents train a computer system, which then extrapolates those judgments to the remaining documents. It then outlines the key steps in a predictive coding process: creating a seed set, scoring relatedness to build a model, keyword searching, predicting responsiveness, assessing results, training the system, and producing the final responsive documents. It also discusses metrics for measuring a system's accuracy.
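The core idea, training on a human-coded seed set and extrapolating to the remaining documents, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the summary does not say which model or metrics the document uses, so this example swaps in a simple smoothed log-odds term weighting (in the style of naive Bayes) and uses precision and recall as the accuracy measures. The function names and the sample texts are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer (a deliberately simple assumption)."""
    return text.lower().split()


def train(seed_set):
    """Build per-term log-odds weights from human-coded (text, is_responsive) pairs."""
    counts = {True: Counter(), False: Counter()}
    for text, is_responsive in seed_set:
        counts[is_responsive].update(tokenize(text))
    vocab = set(counts[True]) | set(counts[False])
    totals = {label: sum(c.values()) for label, c in counts.items()}
    weights = {}
    for term in vocab:
        # Add-one smoothing keeps terms seen in only one class finite.
        p_resp = (counts[True][term] + 1) / (totals[True] + len(vocab))
        p_non = (counts[False][term] + 1) / (totals[False] + len(vocab))
        weights[term] = math.log(p_resp / p_non)
    return weights


def score(weights, text):
    """Sum the weights of known terms; unseen terms contribute nothing."""
    return sum(weights.get(term, 0.0) for term in tokenize(text))


def predict(weights, text, threshold=0.0):
    """Predict responsiveness when the score exceeds the threshold."""
    return score(weights, text) > threshold


def precision_recall(predicted, actual):
    """Compare predictions against human judgments on a reviewed sample."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical seed set coded by reviewers.
seed_set = [
    ("merger pricing agreement draft", True),
    ("pricing agreement with supplier", True),
    ("lunch menu for friday", False),
    ("office party friday schedule", False),
]
# Hypothetical uncoded documents to which the judgments are extrapolated.
remaining = ["draft pricing agreement attached", "friday lunch schedule"]

weights = train(seed_set)
predictions = [predict(weights, doc) for doc in remaining]
print(predictions)
```

In a setup like this, the assessment and training steps would amount to having reviewers check a sample of the predictions, computing `precision_recall` on that sample, and adding the corrected documents back into the seed set before retraining.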