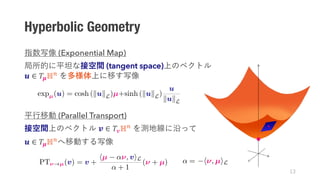

1. The document presents a novel distribution called the pseudo-hyperbolic Gaussian, a Gaussian-like distribution on hyperbolic space whose density can be evaluated analytically and differentiated with respect to its parameters.

2. This distribution enables gradient-based learning of probabilistic models on hyperbolic space. It also allows sampling from the hyperbolic distribution directly, without auxiliary methods such as rejection sampling.

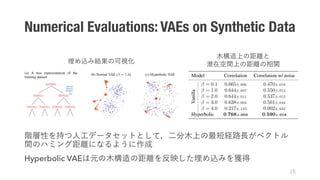

3. As applications of the distribution, the authors develop a hyperbolic variational autoencoder and a method for probabilistic word embedding on hyperbolic space. They demonstrate the efficacy of the distribution on datasets including MNIST, Atari 2600 Breakout, and WordNet.
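The sampling and density evaluation summarized in the points above can be sketched in NumPy. This is a minimal illustration rather than the authors' implementation: it assumes the Lorentz (hyperboloid) model, a diagonal covariance, and the usual recipe of sampling in the tangent space at the origin, parallel-transporting to the mean, and applying the exponential map. All function names (`lorentz_product`, `parallel_transport`, `exp_map`, `sample_wrapped_normal`) are hypothetical.

```python
import numpy as np

def lorentz_product(x, y):
    """Lorentzian product -x0*y0 + sum_i xi*yi; keeps a trailing axis of size 1."""
    return np.sum(x[..., 1:] * y[..., 1:], axis=-1, keepdims=True) - x[..., :1] * y[..., :1]

def parallel_transport(v, nu, mu):
    """Move tangent vectors v from the tangent space at nu to the one at mu."""
    alpha = -lorentz_product(nu, mu)
    coef = lorentz_product(mu - alpha * nu, v) / (alpha + 1.0)
    return v + coef * (nu + mu)

def exp_map(mu, u):
    """Project tangent vectors u at mu onto the hyperboloid."""
    r = np.sqrt(np.clip(lorentz_product(u, u), 1e-12, None))
    return np.cosh(r) * mu + np.sinh(r) * u / r

def sample_wrapped_normal(mu, sigma, n_samples, rng):
    """Draw samples around mu and return them with their log-densities.

    mu:    point on the hyperboloid, shape (n + 1,)
    sigma: per-dimension standard deviations, shape (n,) (assumed diagonal)
    """
    n = mu.shape[-1] - 1
    origin = np.zeros(n + 1)
    origin[0] = 1.0
    # 1. Ordinary Gaussian sample in R^n, embedded in the tangent space at the origin.
    v_tilde = rng.normal(size=(n_samples, n)) * sigma
    v = np.concatenate([np.zeros((n_samples, 1)), v_tilde], axis=-1)
    # 2. Parallel transport to the tangent space at mu, then 3. exponential map.
    u = parallel_transport(v, origin, mu)
    z = exp_map(mu, u)
    # Log-density: Gaussian log-density minus the log-determinant of the projection.
    r = np.sqrt(np.clip(lorentz_product(u, u), 1e-12, None))[..., 0]
    log_normal = (-0.5 * np.sum((v_tilde / sigma) ** 2, axis=-1)
                  - np.sum(np.log(sigma)) - 0.5 * n * np.log(2.0 * np.pi))
    log_det = (n - 1) * (np.log(np.sinh(r)) - np.log(r))
    return z, log_normal - log_det

# Usage: 2-dimensional hyperbolic space, mean at distance 1 from the origin.
rng = np.random.default_rng(0)
mu = np.array([np.cosh(1.0), np.sinh(1.0), 0.0])
z, log_prob = sample_wrapped_normal(mu, np.array([0.5, 0.5]), 100, rng)
```

Every step is a differentiable, closed-form transformation of a Gaussian sample, which is why no rejection step is needed and why the same code would support reparameterized gradients if ported to an autodiff framework.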

![Motivation: hierarchical data, e.g. Monte Carlo tree search in AlphaGo [Silver+2016] and a taxonomy tree (Mammal, Primate, Human, Monkey, Rodent)](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-3-320.jpg)

![Motivation: hierarchical datasets (taxonomy tree; Monte Carlo tree search in AlphaGo [Silver+2016]) and hyperbolic space [Nickel & Kiela, 2017] [Image: wikipedia.org]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-4-320.jpg)

![Motivation: hierarchical datasets (taxonomy tree; Monte Carlo tree search in AlphaGo [Silver+2016]) and hyperbolic space, whose volume increases exponentially with its radius](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-5-320.jpg)

![Motivation: hierarchical datasets (taxonomy tree; Monte Carlo tree search in AlphaGo [Silver+2016]) and hyperbolic space [Nickel+2017]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-6-320.jpg)

![Motivation: hierarchical datasets (taxonomy tree; Monte Carlo tree search in AlphaGo [Silver+2016]) and hyperbolic space [Nickel+2017]. How can we extend these works to probabilistic inference?](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-7-320.jpg)

![Difficulty: Probabilistic Distribution on Curved Space (manifold M) [Image: wikipedia.org]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-8-320.jpg)

![Difficulty: Probabilistic Distribution on Curved Space (manifold M) [Image: wikipedia.org]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-9-320.jpg)

![Difficulty: Probabilistic Distribution on Curved Space (manifold M) [Image: wikipedia.org]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-10-320.jpg)

![Difficulty: Probabilistic Distribution on Curved Space (manifold M) [Image: wikipedia.org]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-11-320.jpg)

![Hyperbolic Geometry: n-dimensional space of curvature -1, with several models (e.g. Poincaré disk, Lorentz model, …). Lorentz Model: ℝ^{n+1} with the Lorentzian product [ja.wikipedia.org]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-12-320.jpg)
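For reference, the Lorentz model named on this slide is usually written as follows. The formulas on the slide itself did not survive extraction, so this is the standard textbook definition, and the notation ($\mathbb{H}^n$, $\langle \cdot, \cdot \rangle_{\mathcal{L}}$, $d_{\ell}$) is an assumption rather than a transcription.

```latex
% Lorentzian product on R^{n+1}
\langle x, y \rangle_{\mathcal{L}} = -x_0 y_0 + \sum_{i=1}^{n} x_i y_i

% n-dimensional hyperbolic space of curvature -1 (upper sheet of the hyperboloid)
\mathbb{H}^n = \left\{ x \in \mathbb{R}^{n+1} : \langle x, x \rangle_{\mathcal{L}} = -1,\; x_0 > 0 \right\}

% Geodesic distance between two points of H^n
d_{\ell}(x, y) = \operatorname{arccosh}\!\left( -\langle x, y \rangle_{\mathcal{L}} \right)
```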

![Numerical Evaluations: VAEs on Breakout. Atari 2600 Breakout-v4, DQN [Mnih+ 2015]; VAE panels: Vanilla; Vanilla, |v|_2 = 200; Hyperbolic](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-17-320.jpg)

![Numerical Evaluations: Word Embeddings. WordNet Nouns word embedding; Euclid [Vilnis & McCallum 2015]](https://image.slidesharecdn.com/slidenaganoicmlyomiv2-190721042557/85/ICLR-ICML2019-A-Wrapped-Normal-Distribution-on-Hyperbolic-Space-for-Gradient-Based-Learning-ICML2019-18-320.jpg)