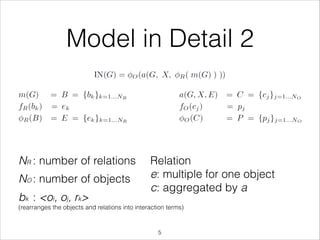

These slides summarize a study that develops a general-purpose learnable physics engine, the interaction network, which reasons about objects and their relations to predict physical dynamics. In simulated scenarios, the model outperformed the alternative approaches it was compared against at learning physical interactions. The findings suggest the approach could extend to larger systems, though its efficiency and its advantages over existing models remain open questions.

![Implementation 2 (slide 7): marshalling m(G) = [ORr; ORs; Ra] = B = [b1, b2, ..., bk]; relational model fR maps B to effects E = [e1, e2, ..., ek]; dimension labels Ds, DR, NR](https://image.slidesharecdn.com/20170119nips-170125085027/85/Interaction-Networks-for-Learning-about-Objects-Relations-and-Physics-7-320.jpg)
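The marshalling step on this slide can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the matrix names (O, Rr, Rs, Ra, B) follow the slide, while the helper names `one_hot_columns` and `marshal`, the toy sizes, and the example relations are hypothetical.

```python
import numpy as np

def one_hot_columns(indices, n_objects):
    """Return an (n_objects, n_relations) binary matrix with a single 1 per column."""
    mat = np.zeros((n_objects, len(indices)))
    mat[indices, np.arange(len(indices))] = 1.0
    return mat

def marshal(O, Rr, Rs, Ra):
    """Build B = [O Rr; O Rs; Ra], one column b_k per relation."""
    return np.vstack([O @ Rr, O @ Rs, Ra])

# Toy system (sizes are assumptions): 3 objects with state dimension D_S = 4,
# 2 directed relations with attribute dimension D_R = 1.
O = np.arange(12, dtype=float).reshape(4, 3)   # D_S x N_O, column j = object j
receivers = [0, 2]                             # relation k acts on object receivers[k]
senders = [1, 0]                               # and originates from object senders[k]
Rr = one_hot_columns(receivers, n_objects=3)   # N_O x N_R
Rs = one_hot_columns(senders, n_objects=3)     # N_O x N_R
Ra = np.ones((1, 2))                           # D_R x N_R

B = marshal(O, Rr, Rs, Ra)
print(B.shape)  # (9, 2), i.e. (2 * D_S + D_R, N_R)
```

Each column of B stacks the receiver state, the sender state, and the relation attributes, so a relational model such as fR can be applied to every relation independently.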

![Implementation 3 (slide 8): aggregation a(G, X, E) with Ē = E Rr^T and C = [O; X; Ē]; object model fA maps C to P = Ot+1 (slide note: Free energy); dimension labels Ds, DR, NR, DA](https://image.slidesharecdn.com/20170119nips-170125085027/85/Interaction-Networks-for-Learning-about-Objects-Relations-and-Physics-8-320.jpg)
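The aggregation and object-model steps on this slide can be sketched in NumPy. This is a rough illustration under stated assumptions: the effects E are random placeholders rather than the output of a trained fR, `f_A` is a single linear map standing in for the learned network, and all sizes, weights, and the helper name `aggregate` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy sizes: 3 objects, 2 relations.
D_S, D_X, D_E, D_P = 4, 1, 5, 2
N_O, N_R = 3, 2

O = rng.normal(size=(D_S, N_O))    # object states, one column per object
X = rng.normal(size=(D_X, N_O))    # external effects, one column per object
E = rng.normal(size=(D_E, N_R))    # effects; in the full model these come from fR

# Receiver matrix: relation 0 acts on object 0, relation 1 acts on object 2.
Rr = np.zeros((N_O, N_R))
Rr[0, 0] = 1.0
Rr[2, 1] = 1.0

def aggregate(O, X, E, Rr):
    """a(G, X, E): sum effects per receiver, then stack C = [O; X; E_bar]."""
    E_bar = E @ Rr.T               # D_E x N_O
    return np.vstack([O, X, E_bar])

def f_A(C, W):
    """Stand-in object model: one linear map applied to each column of C."""
    return W @ C

C = aggregate(O, X, E, Rr)
W = rng.normal(size=(D_P, D_S + D_X + D_E))   # hypothetical weights
P = f_A(C, W)
print(C.shape, P.shape)  # (10, 3) (2, 3)
```

Multiplying by the transposed receiver matrix sums every effect that targets the same object, so an object that receives no relation (object 1 here) gets a zero effect vector.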