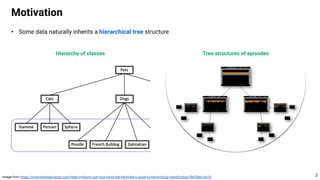

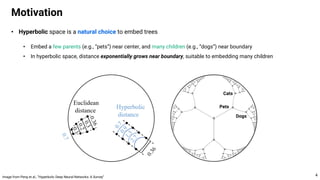

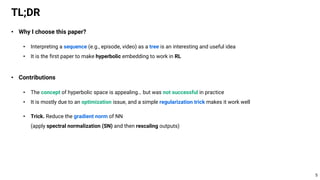

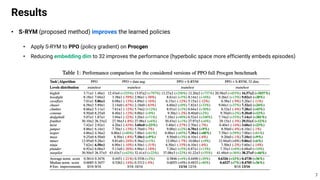

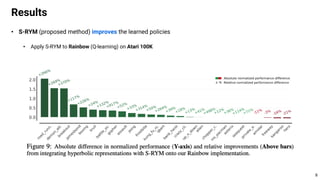

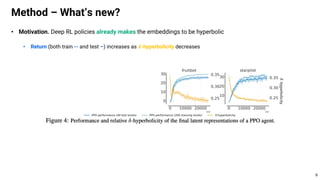

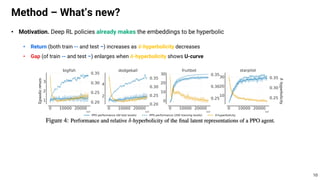

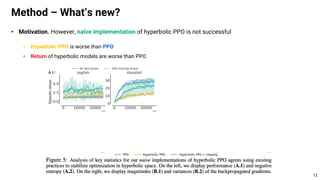

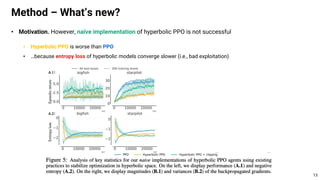

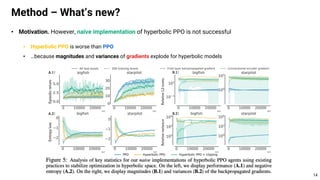

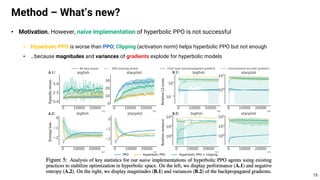

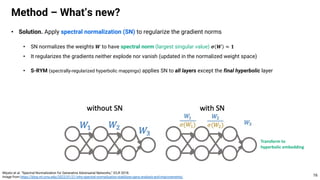

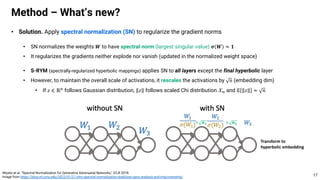

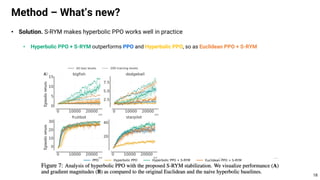

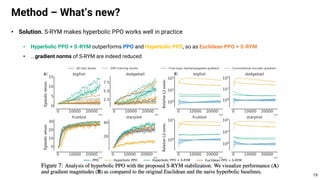

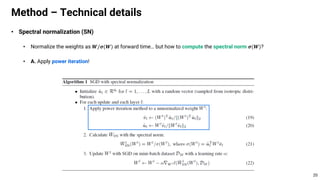

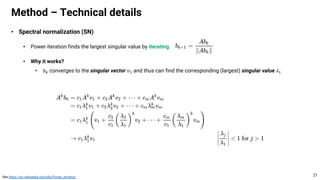

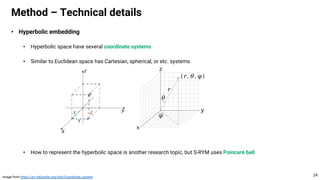

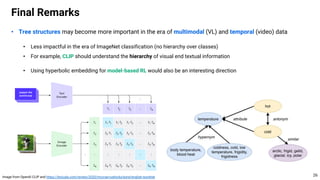

This document proposes using hyperbolic space to embed hierarchical, tree-like structures, such as the branching sequences of events that arise in reinforcement learning problems. Specifically, it introduces a method called S-RYM, which applies spectral normalization to regularize gradients when training deep reinforcement learning agents with hyperbolic embeddings. Naive hyperbolic embeddings suffer from exploding gradient norms during training. By suppressing these explosions, S-RYM lets the entropy loss converge properly, and the stabilized hyperbolic embeddings then outperform standard Euclidean embeddings. The document provides technical details on spectral normalization, on representations in hyperbolic space, and on how S-RYM trains deep reinforcement learning agents with stabilized hyperbolic embeddings.
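The summary names two ingredients: spectral normalization and hyperbolic embeddings. The sketch below illustrates both in pure Python under stated assumptions. It estimates a weight matrix's largest singular value with power iteration, which is the usual way spectral normalization is computed. It then divides the matrix by that value and maps the resulting Euclidean feature onto the Poincaré ball with the exponential map at the origin. The choice of the Poincaré ball model, the curvature parameter `c`, and all function names (`spectral_norm`, `spectrally_normalize`, `expmap0`, `poincare_dist`) are illustrative assumptions. This is not the S-RYM implementation, which applies these ideas inside a neural network encoder rather than to a single matrix.

```python
import math
import random

# Illustrative sketch only (hypothetical helpers, not the S-RYM code):
# spectral normalization via power iteration, plus the exponential map
# at the origin of the Poincare ball.

def matvec(W, v):
    """Compute W @ v for a matrix stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rmatvec(W, u):
    """Compute W^T @ u."""
    return [sum(W[i][j] * u[i] for i in range(len(W))) for j in range(len(W[0]))]

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def spectral_norm(W, n_iters=100, seed=0):
    """Estimate the largest singular value of W with power iteration."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(len(W[0]))]
    v_len = norm(v)
    v = [x / v_len for x in v]
    sigma = 0.0
    for _ in range(n_iters):
        u = matvec(W, v)
        u_len = norm(u)
        u = [x / u_len for x in u]
        w = rmatvec(W, u)
        sigma = norm(w)  # converges to the top singular value
        v = [x / sigma for x in w]
    return sigma

def spectrally_normalize(W):
    """Divide W by its spectral norm so the linear map is (about) 1-Lipschitz."""
    sigma = spectral_norm(W)
    return [[w / sigma for w in row] for row in W]

def expmap0(v, c=1.0):
    """Exponential map at the origin of the Poincare ball with curvature -c."""
    n = norm(v)
    if n == 0.0:
        return list(v)
    scale = math.tanh(math.sqrt(c) * n) / (math.sqrt(c) * n)
    return [scale * x for x in v]

def poincare_dist(x, y):
    """Geodesic distance on the unit Poincare ball (c = 1)."""
    diff = sum((a - b) ** 2 for a, b in zip(x, y))
    denom = (1 - sum(a * a for a in x)) * (1 - sum(b * b for b in y))
    return math.acosh(1 + 2 * diff / denom)

if __name__ == "__main__":
    W = [[3.0, 0.0], [0.0, 1.0]]
    print(spectral_norm(W))  # approximately 3.0
    W_sn = spectrally_normalize(W)
    h = matvec(W_sn, [0.5, -0.2])  # Euclidean feature after the normalized layer
    z = expmap0(h)
    print(norm(z) < 1.0)  # True: z lies inside the ball
    print(poincare_dist([0.0, 0.0], z))  # equals 2 * norm(h) up to rounding
```

Because `tanh` saturates, features with a large Euclidean norm land close to the boundary of the ball, where the denominator in `poincare_dist` approaches zero and distances grow without bound. Bounding each layer's Lipschitz constant with spectral normalization is one plausible way to keep the inputs to `expmap0` from growing during training, which matches the intuition of using it as a stabilizer.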