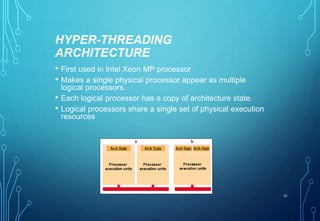

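This document discusses hyper-threading technology, Intel's implementation of simultaneous multithreading (SMT). Hyper-threading lets a single physical processor core run two threads at once. The core appears to the operating system as two logical processors, so execution resources left idle by one thread can be used by the other. The document begins with an introduction to hyper-threading and then covers how it works, which processor resources are replicated for each thread and which are shared between them, and which applications benefit from it. It also outlines the advantages, such as improved performance and support for more concurrent users, and the disadvantages, such as added overhead and contention between the two threads for shared resources.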