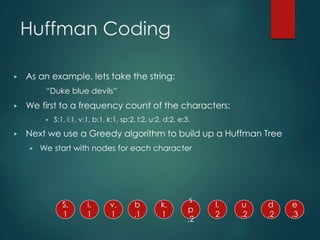

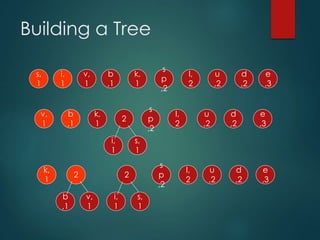

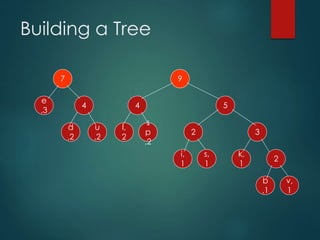

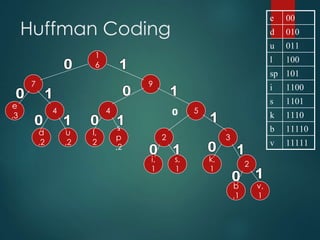

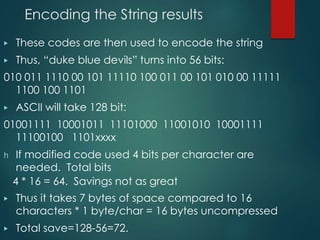

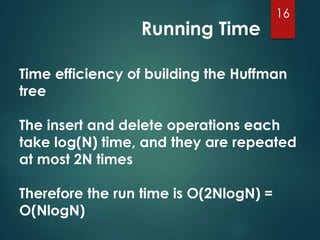

This presentation summarizes Huffman coding. It opens with an outline covering the definition, history, tree construction, implementation, algorithm, and examples. It then explains how Huffman coding encodes data: it builds a binary tree from character frequencies and assigns each character the bit string given by its path from the root to its leaf, so frequent characters receive shorter codes. An example shows how the string "Duke blue devils" is encoded. Building the Huffman tree with a priority queue takes O(N log N) time, where N is the number of distinct characters. Real-life applications of Huffman coding include data compression in fax machines, text files, and other forms of data transmission.
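To make the tree-building and code-assignment steps concrete, here is a minimal Python sketch applied to the "Duke blue devils" string. It is not taken from the slides: the function names `build_codes`, `encode`, and `decode` are hypothetical, the standard-library `heapq` module stands in for the priority queue, and bits are kept as a text string of `0`/`1` characters for readability rather than packed into bytes.

```python
import heapq
from collections import Counter
from itertools import count


def build_codes(text):
    """Return a {symbol: bit string} table for the characters in text."""
    freq = Counter(text)
    if not freq:
        return {}
    tie = count()  # tie-breaker so the heap never has to compare subtrees
    # Heap entry: (weight, tie-breaker, tree).
    # A tree is either a single character (leaf) or a (left, right) tuple.
    heap = [(weight, next(tie), sym) for sym, weight in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # two lightest subtrees
        w2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next(tie), (left, right)))

    codes = {}

    def walk(tree, prefix):
        if isinstance(tree, tuple):         # internal node: 0 = left, 1 = right
            walk(tree[0], prefix + "0")
            walk(tree[1], prefix + "1")
        else:                               # leaf: record the path as the code
            codes[tree] = prefix or "0"     # single-symbol input still gets 1 bit

    walk(heap[0][2], "")
    return codes


def encode(text, codes):
    """Concatenate the code of every character in text."""
    return "".join(codes[ch] for ch in text)


def decode(bits, codes):
    """Invert encode(); relies on the codes being prefix-free."""
    inverse = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return "".join(out)
```

Calling `encode("Duke blue devils", build_codes("Duke blue devils"))` produces a 54-bit string, compared with 128 bits at 8 bits per character. The individual codes depend on how ties between equal frequencies are broken, so they may differ from the ones on the slides even though the total length is the same.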

![Huffman Coding: Create New Node m](https://image.slidesharecdn.com/huffman-coding-170911184817/85/Huffman-coding-5-320.jpg)

Create New Node m

1. frequencies[m] ← frequency1 + frequency2 // new node
2. frequencies[node1] ← m // left link
3. frequencies[node2] ← -m // right link
4. insert in PQueue (m, frequency1 + frequency2)
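The node-creation steps on this slide reuse the `frequencies` array to store parent links once a node has been merged, with the sign of the link marking a left or right child. The Python sketch below is one possible reading of that scheme, under several assumptions not stated on the slide: node ids are 1-based (so that `-m` is distinguishable from `m`), `heapq` plays the role of `PQueue`, and the helper names `build_links` and `code_for` are hypothetical. The slide does not show how codes are read back, so `code_for` is an illustrative guess that follows links from a leaf up to the root.

```python
import heapq


def build_links(weights):
    """Merge nodes as on the slide; return (frequencies, root).

    Leaves are nodes 1..n, internal nodes are n+1..2n-1 (index 0 is unused).
    After a node is merged, its slot holds +parent (left) or -parent (right).
    The root's slot keeps the total weight.
    """
    n = len(weights)
    frequencies = [0] + list(weights) + [0] * max(n - 1, 0)
    pqueue = [(w, i) for i, w in enumerate(weights, start=1)]
    heapq.heapify(pqueue)
    m = n
    while len(pqueue) > 1:
        frequency1, node1 = heapq.heappop(pqueue)
        frequency2, node2 = heapq.heappop(pqueue)
        m += 1                                        # create new node m
        frequencies[m] = frequency1 + frequency2      # 1. weight of new node
        frequencies[node1] = m                        # 2. left link
        frequencies[node2] = -m                       # 3. right link
        heapq.heappush(pqueue, (frequencies[m], m))   # 4. insert in PQueue
    return frequencies, m


def code_for(leaf, frequencies, root):
    """Assumed read-back: climb parent links, 0 for left and 1 for right."""
    bits = []
    node = leaf
    while node != root:
        link = frequencies[node]
        bits.append("0" if link > 0 else "1")
        node = abs(link)
    return "".join(reversed(bits))  # collected leaf-to-root, so reverse


# Example with six leaf weights (illustrative data, not from the slides).
weights = [5, 9, 12, 13, 16, 45]
links, root = build_links(weights)
lengths = [len(code_for(i, links, root)) for i in range(1, len(weights) + 1)]
```

With these weights the heaviest leaf gets a 1-bit code and the two lightest get 4-bit codes. Storing links in place saves a second array, at the cost of losing each node's weight once it has been merged.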