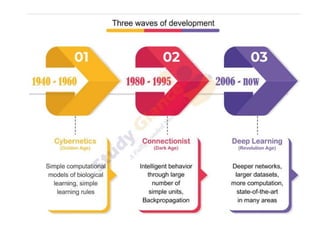

The document outlines historical trends in deep learning and identifies three waves of development. The first wave (1940s to 1960s) established foundational theories and models. The second wave (1980 to 1995) popularized backpropagation for training multilayer neural networks. The current wave, beginning in 2006, is marked by rapid advances in training deep neural networks. Key milestones include the perceptron, the neocognitron, and more recent achievements such as AlexNet and GPT-3. Breakthroughs in neural network architectures and training techniques have enabled transformative applications across many domains, particularly natural language processing and computer vision.