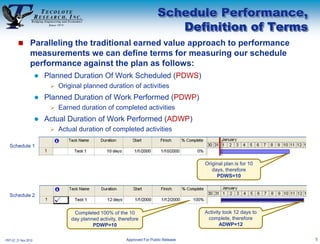

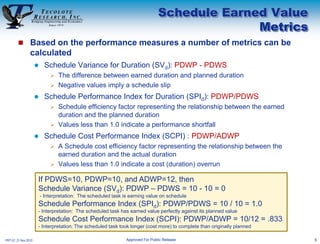

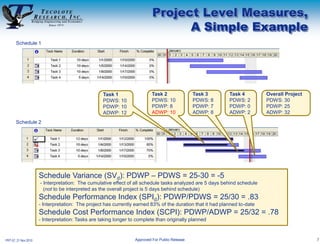

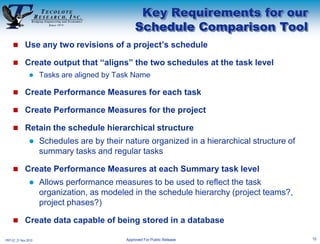

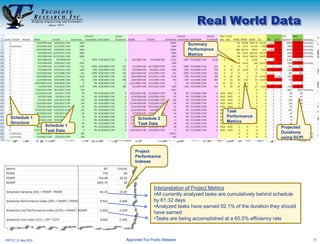

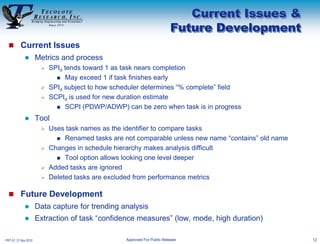

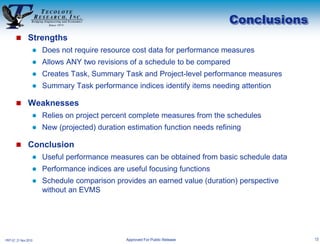

The document describes a tool developed by NASA that measures schedule performance by comparing two project schedules. The tool extracts schedule data from MS Project files and calculates schedule variance (SV), the schedule performance index (SPI), and the cost performance index (CPI) at both the task and project levels. These metrics are intended to show whether the project is earning its planned duration and completing tasks within their planned timeframes. The tool is still being refined, but it already provides a way to measure schedule performance without requiring detailed resource cost data.
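The source does not spell out the formulas. The following is a minimal sketch that assumes the tool applies the standard earned-value definitions with baseline (planned) duration in place of budgeted cost. Under that assumption, planned value (PV) is the baseline duration scheduled to be complete by the status date, earned value (EV) is baseline duration times percent complete, SV = EV - PV, and SPI = EV / PV. The `Task` record, the linear accrual of planned value, and the use of calendar days are all hypothetical simplifications. They are not the NASA tool's actual logic or the MS Project data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple


@dataclass
class Task:
    """Hypothetical record for one task matched across the two schedules."""
    name: str
    baseline_start: date      # from the baseline schedule
    baseline_finish: date     # from the baseline schedule
    percent_complete: float   # 0.0-1.0, from the current schedule


def baseline_days(task: Task) -> int:
    # Planned duration in calendar days (assumption; MS Project tracks working time).
    return (task.baseline_finish - task.baseline_start).days


def planned_value(task: Task, status: date) -> float:
    """Baseline days scheduled to be done by the status date, accrued linearly (assumption)."""
    total = baseline_days(task)
    if status <= task.baseline_start:
        return 0.0
    if status >= task.baseline_finish:
        return float(total)
    return float((status - task.baseline_start).days)


def earned_value(task: Task) -> float:
    """Baseline days earned: planned duration times fraction complete."""
    return baseline_days(task) * task.percent_complete


def metrics(pv: float, ev: float) -> Tuple[float, Optional[float]]:
    """Return (SV, SPI); SPI is undefined when no work was planned yet."""
    sv = ev - pv
    spi = ev / pv if pv > 0 else None
    return sv, spi


# Usage with made-up illustrative data.
status = date(2024, 3, 11)
tasks = [
    Task("Design", date(2024, 3, 1), date(2024, 3, 11), 1.0),
    Task("Build", date(2024, 3, 6), date(2024, 3, 16), 0.25),
]

# Task level: SV and SPI for each task.
for t in tasks:
    print(t.name, metrics(planned_value(t, status), earned_value(t)))

# Project level: sum PV and EV first, then take the ratio.
pv_total = sum(planned_value(t, status) for t in tasks)
ev_total = sum(earned_value(t) for t in tasks)
print("Project", metrics(pv_total, ev_total))
```

Summing PV and EV before dividing weights each task by its planned duration, so long tasks dominate the project-level SPI. Averaging per-task SPIs would instead give every task equal weight.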