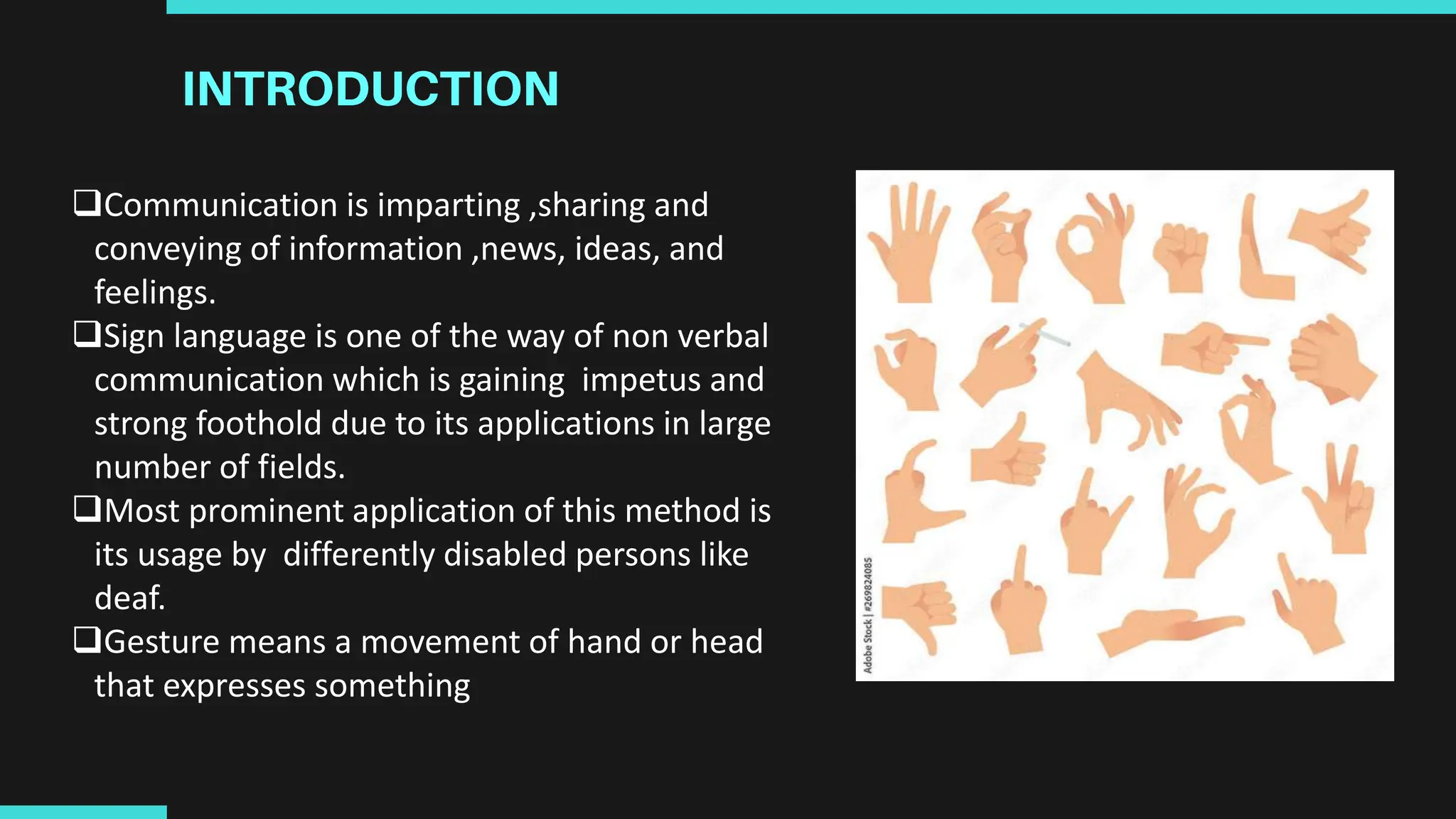

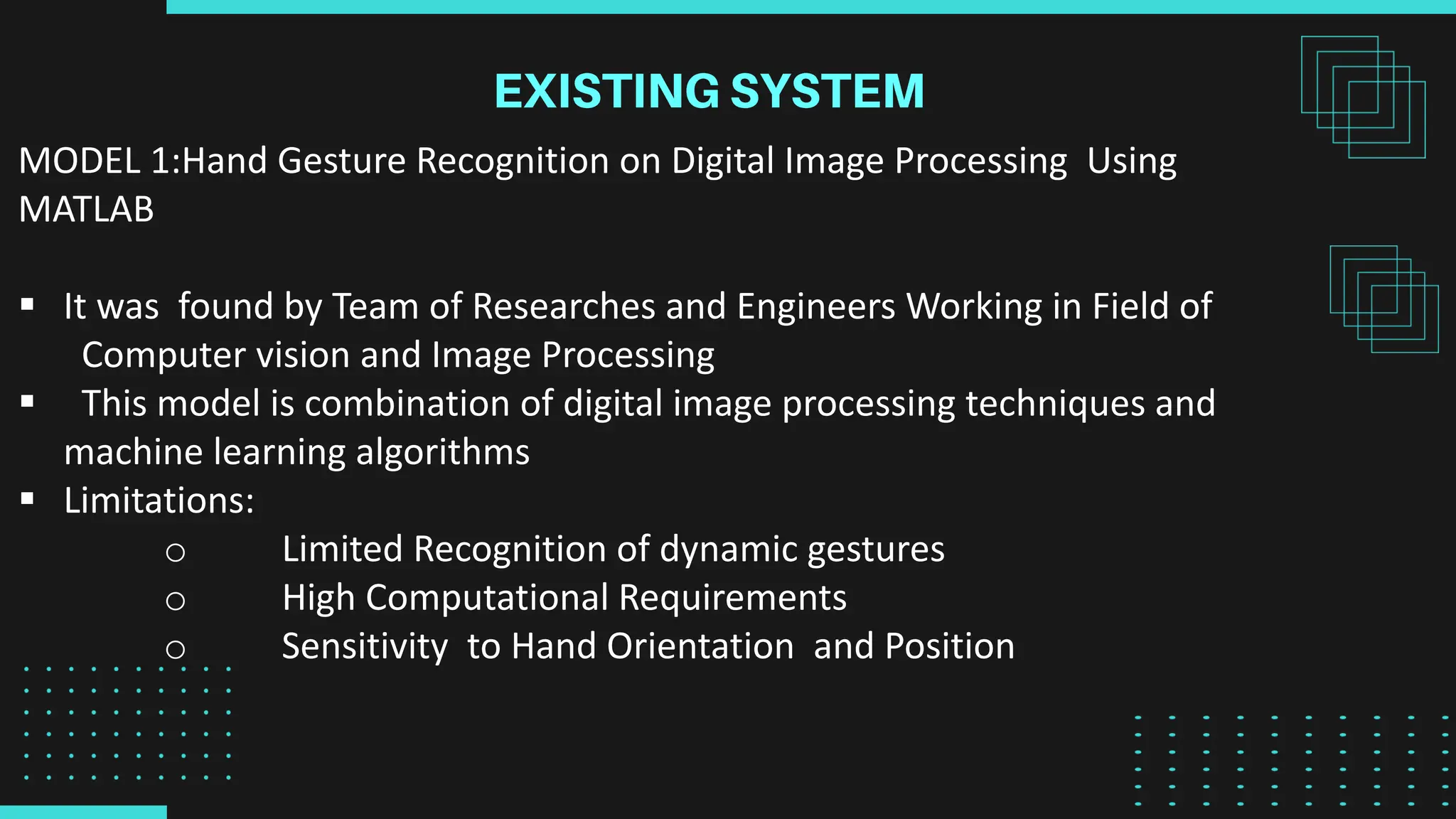

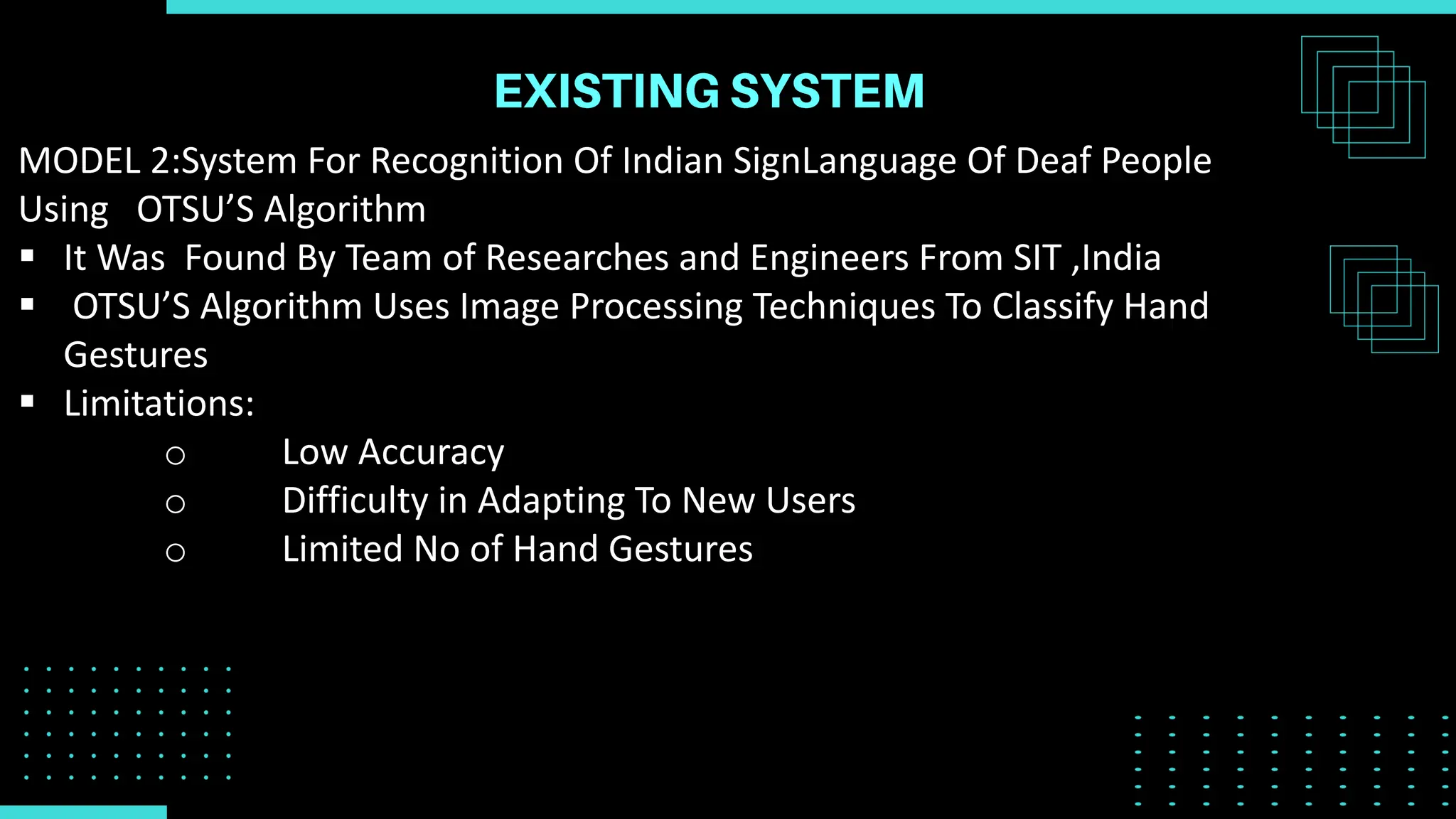

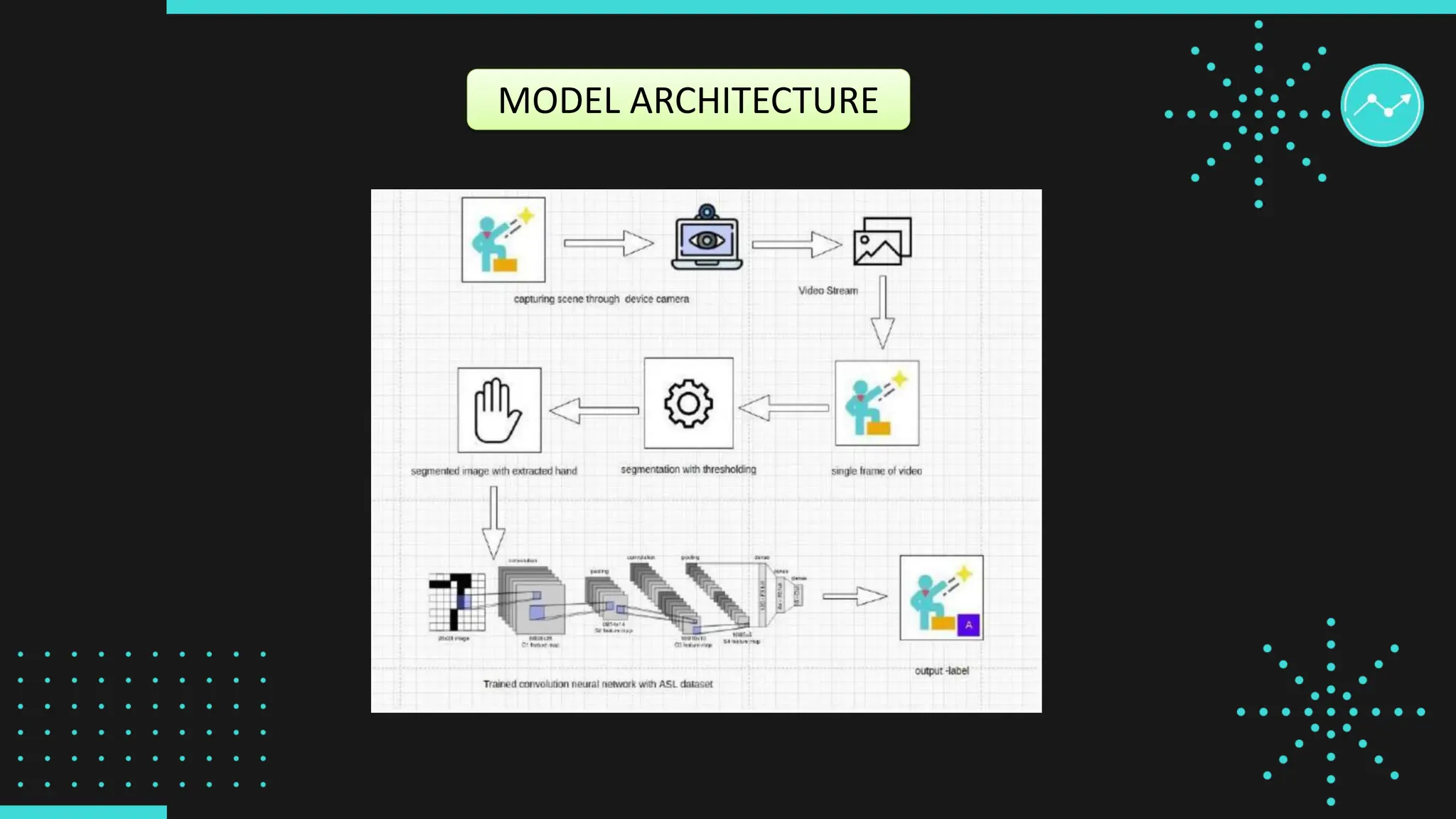

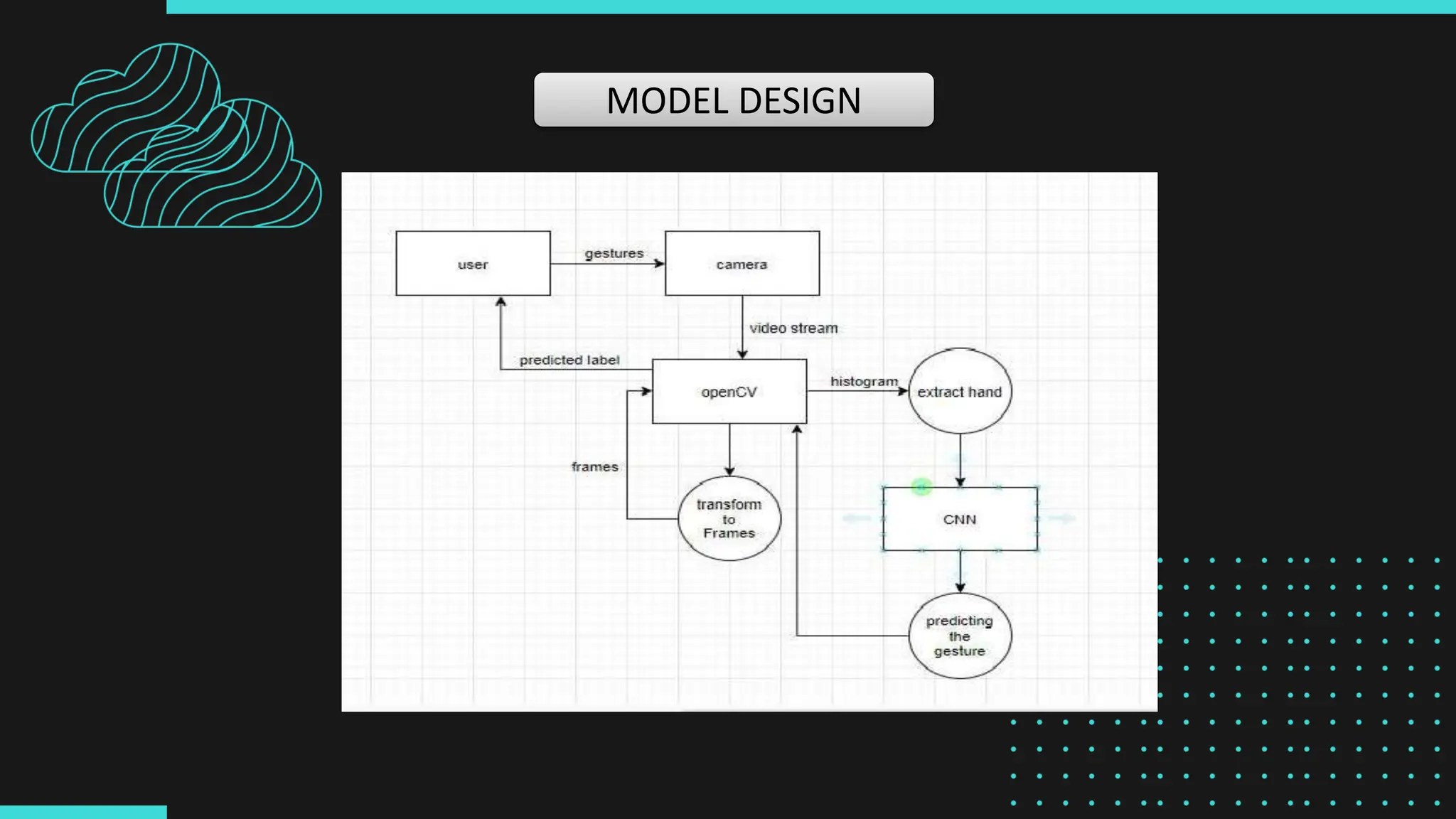

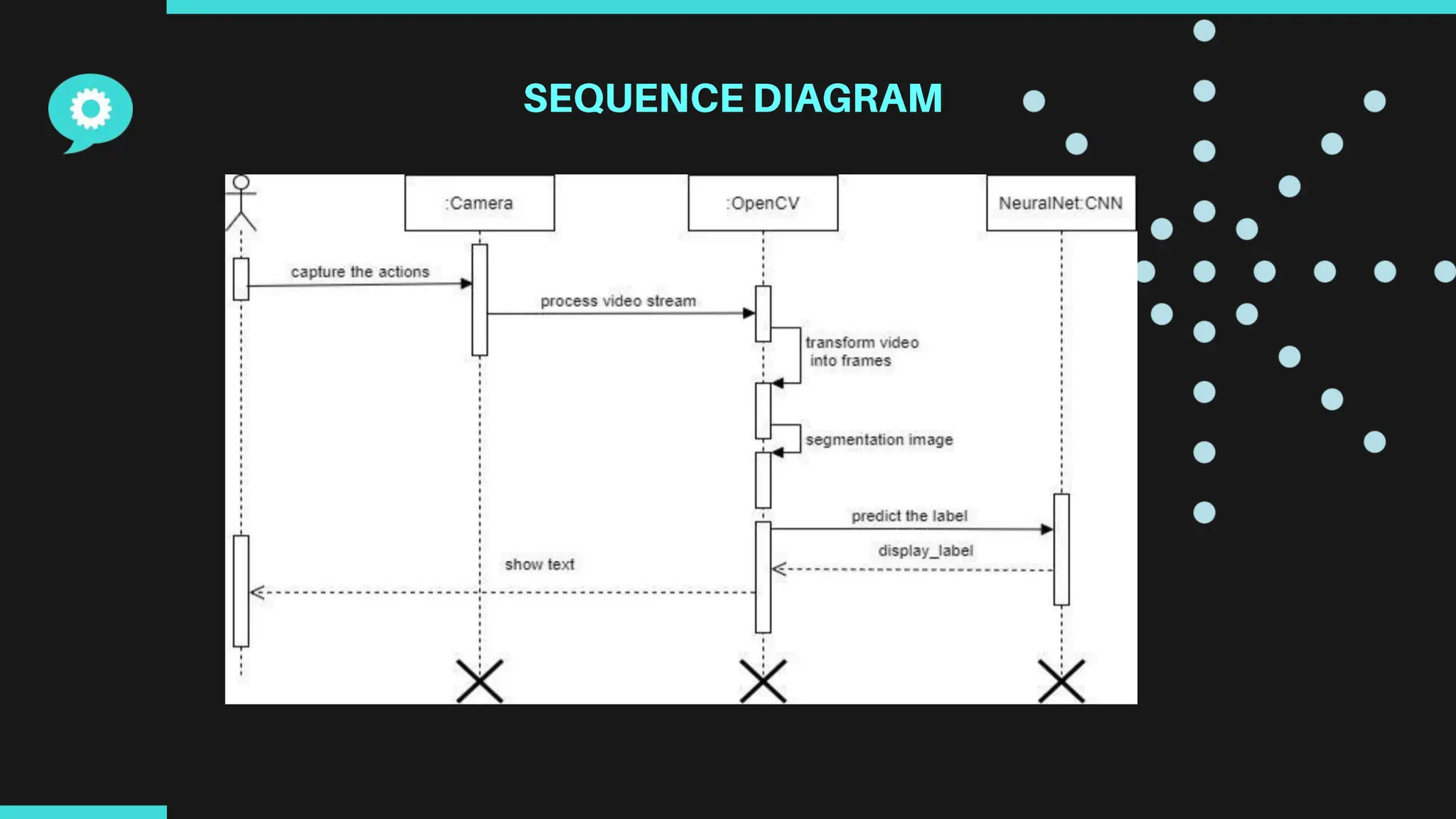

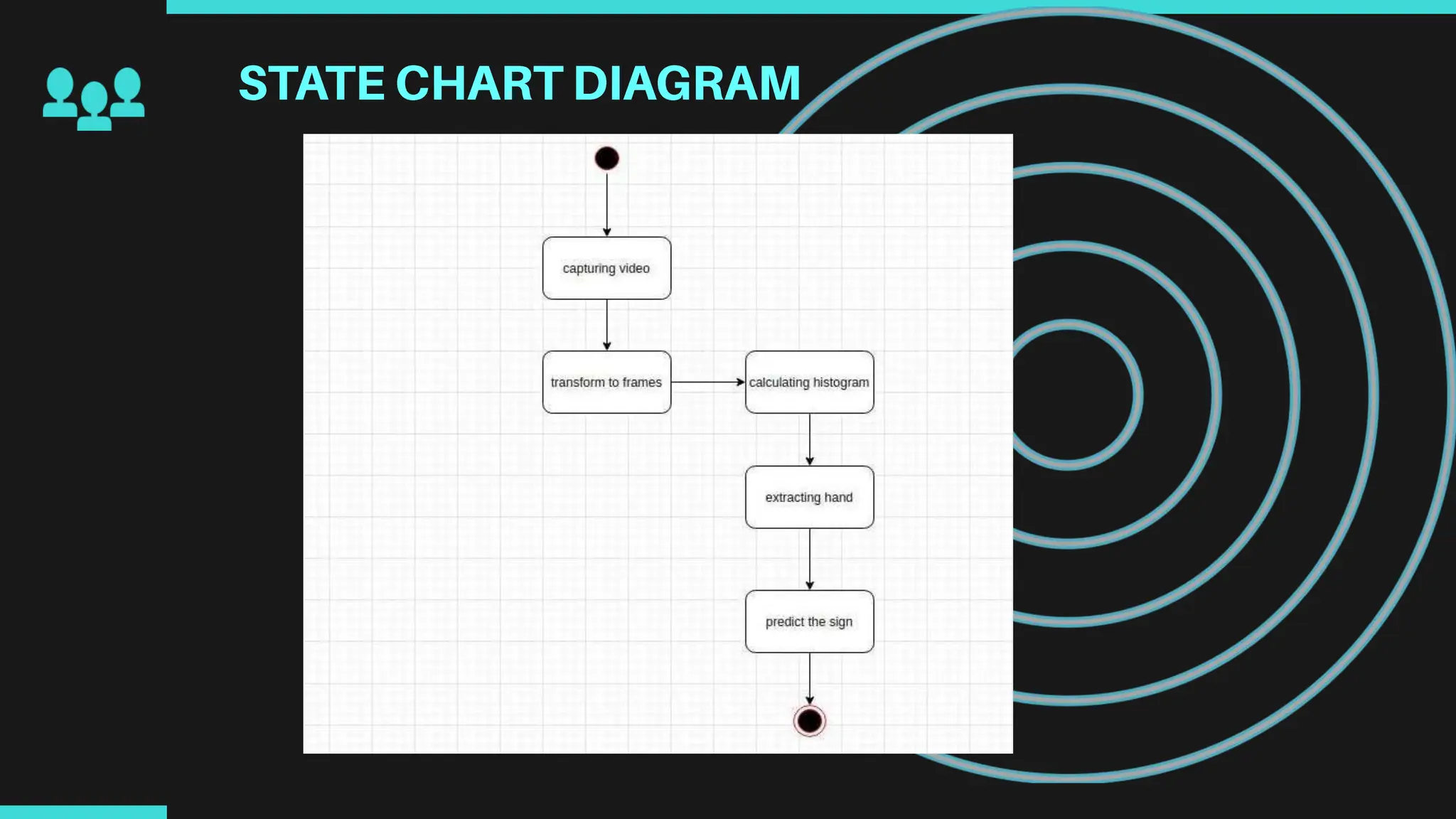

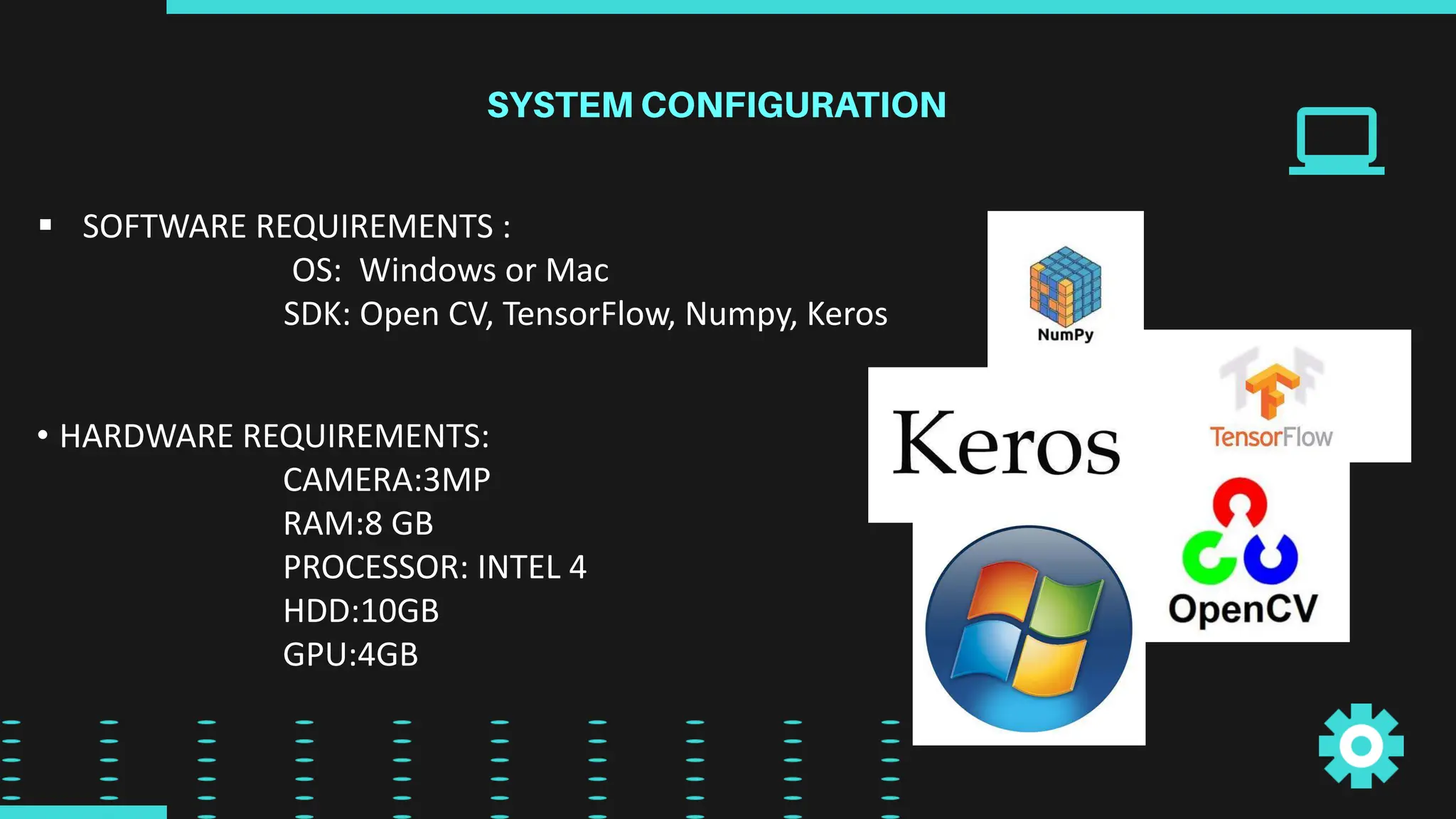

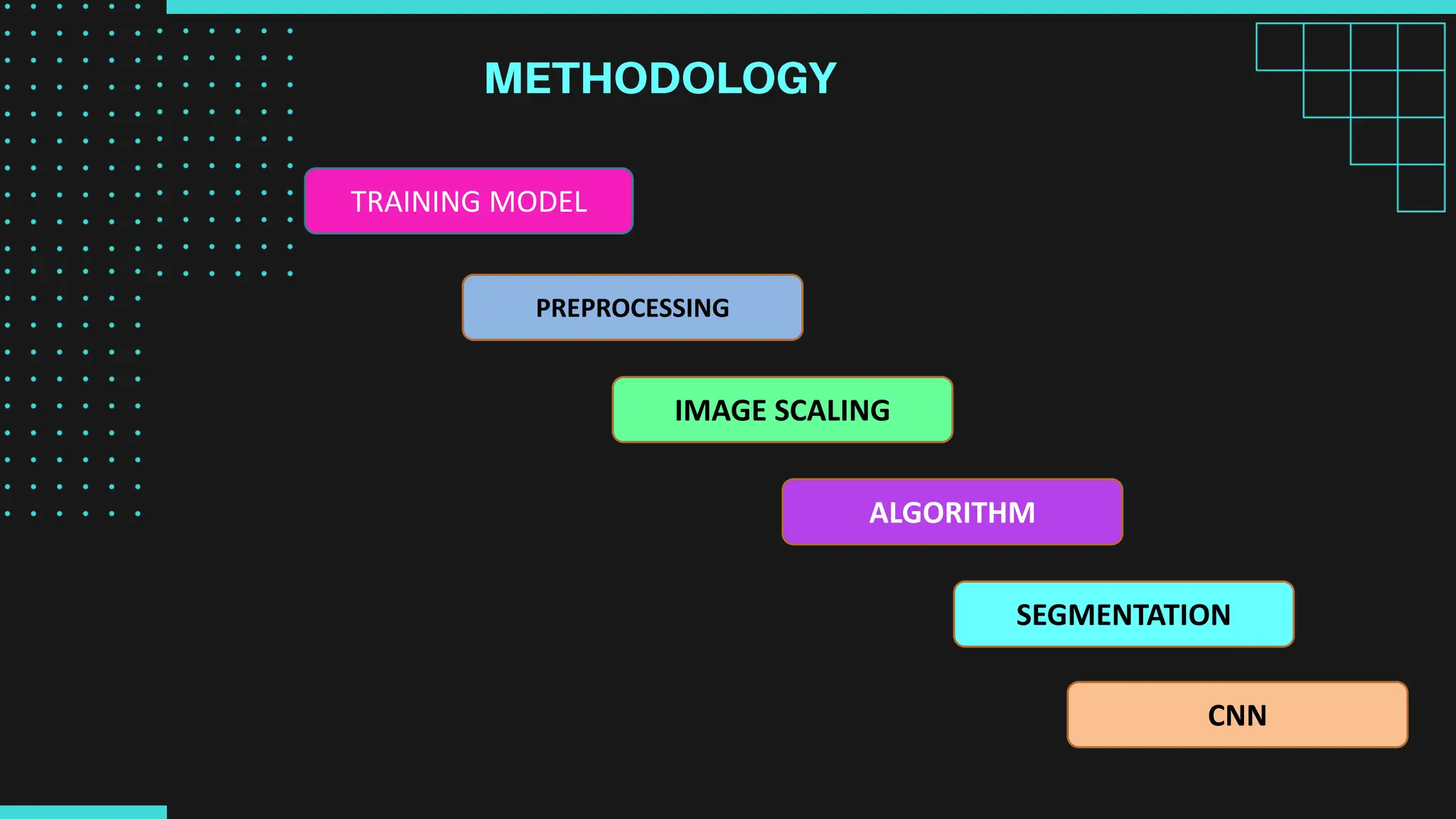

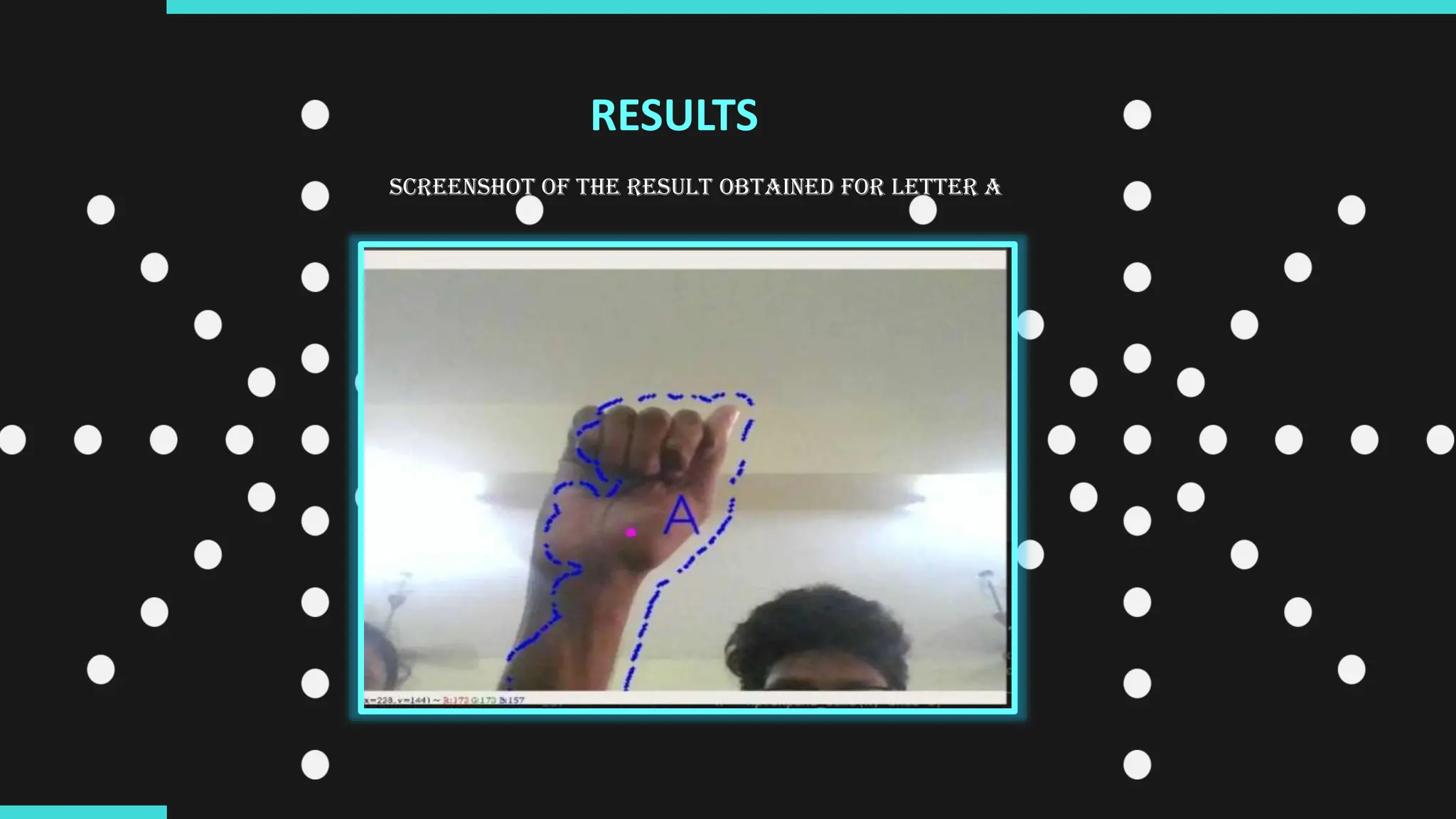

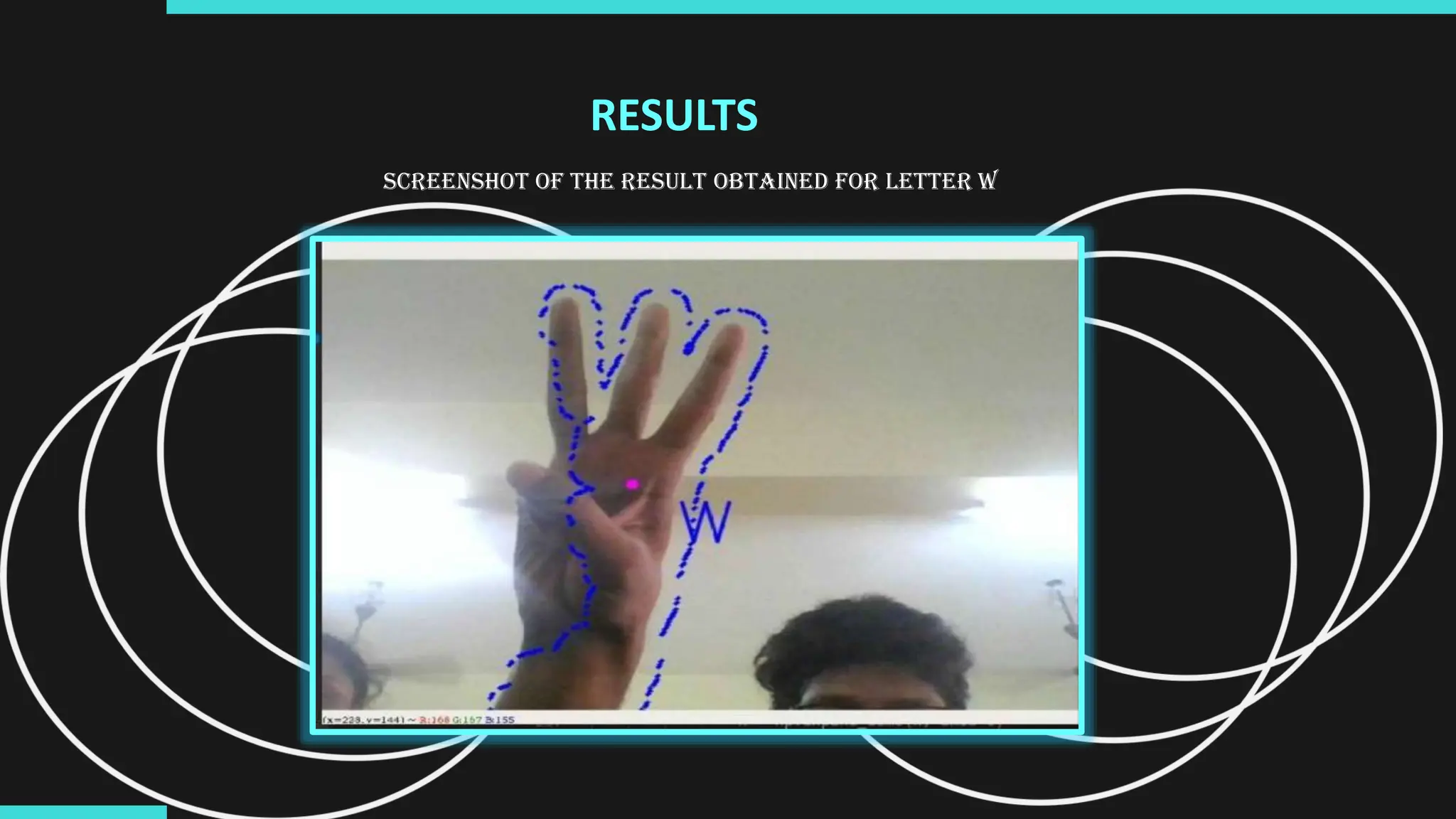

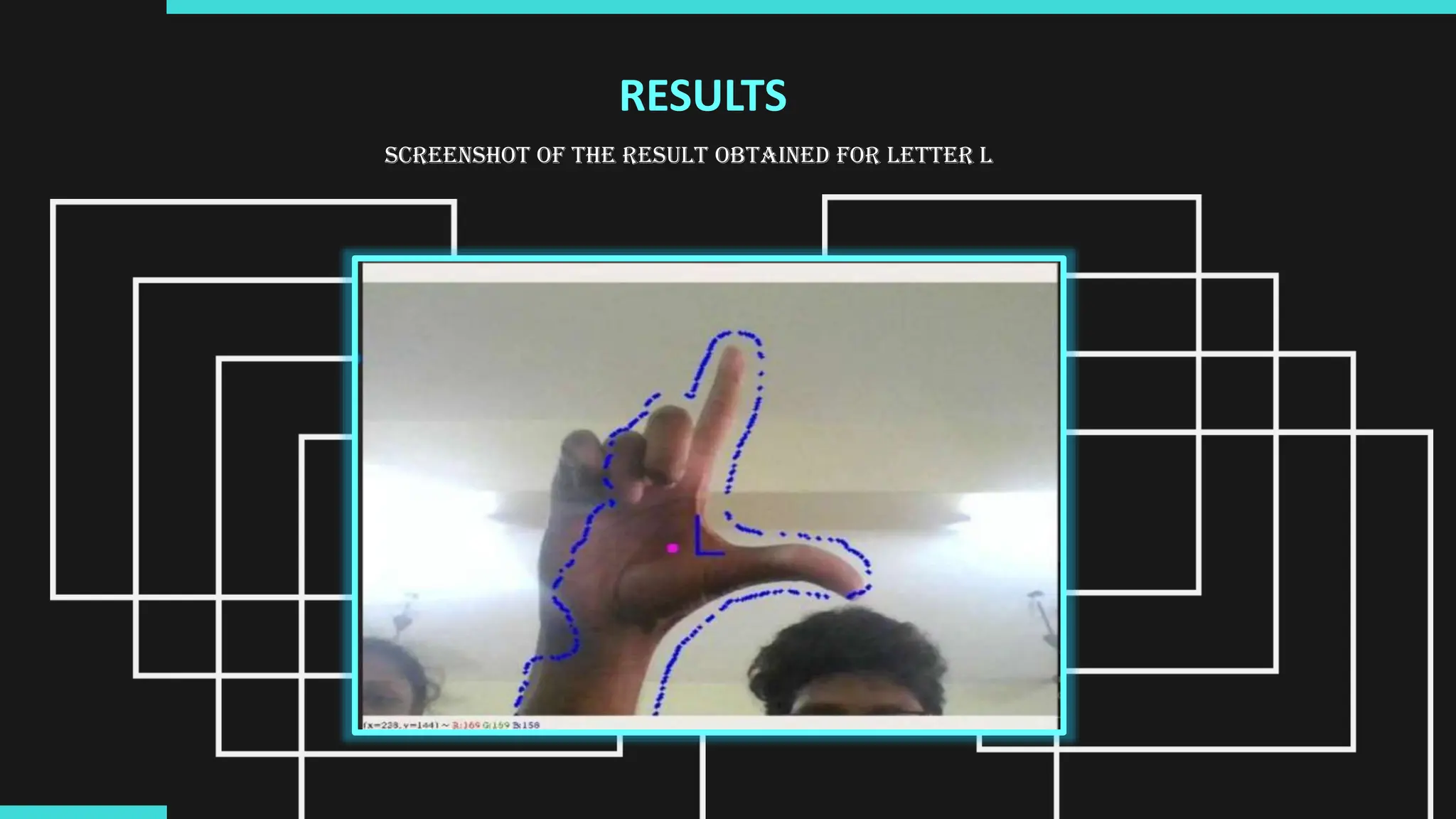

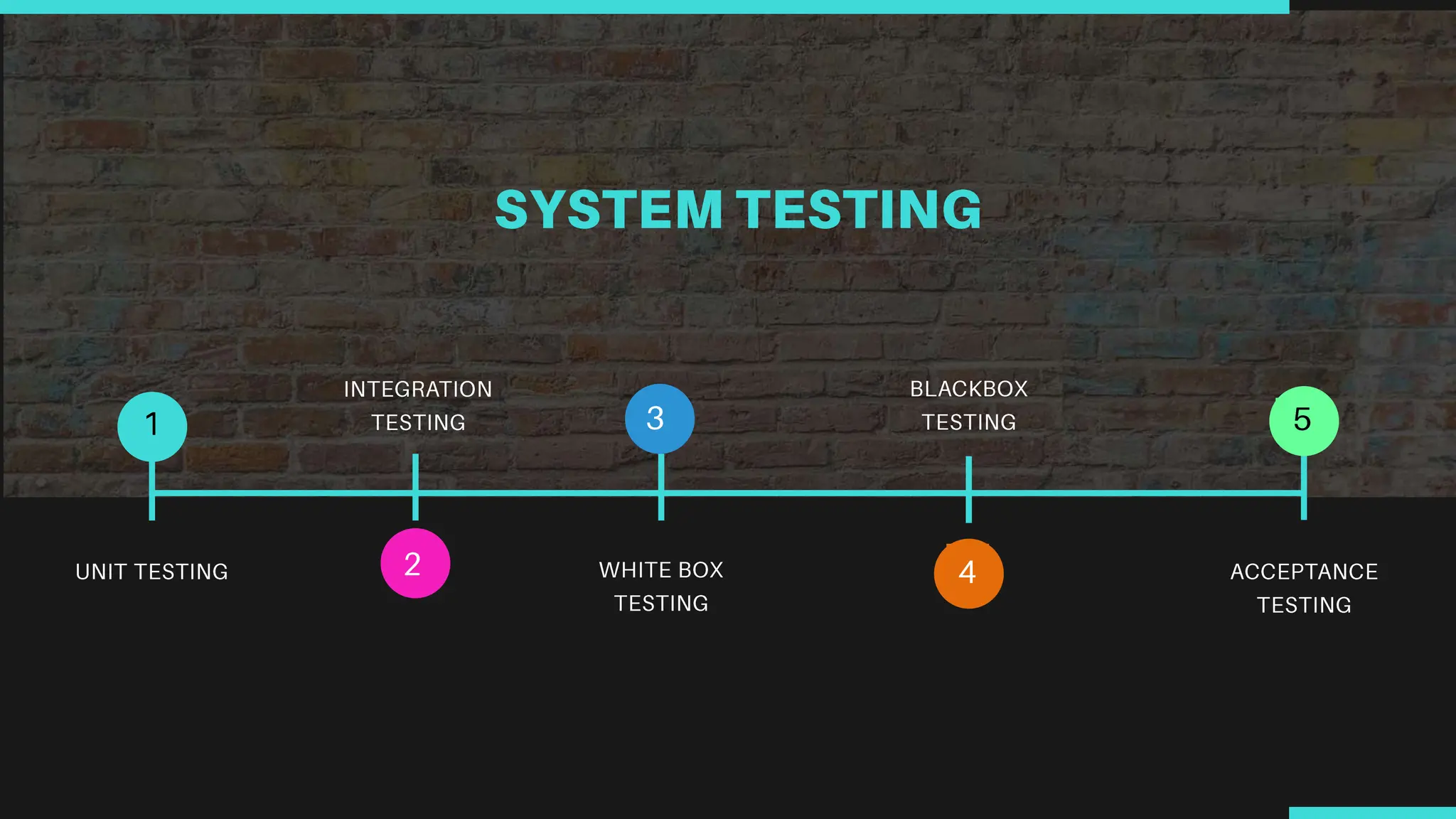

The document discusses the development of a sign language recognition system that uses convolutional neural networks (CNNs) to help speech-impaired individuals communicate through hand gestures. The proposed system captures video, segments the hand pixels in each frame, and compares them against a trained model, addressing limitations of existing methods. Future enhancements include improving gesture recognition accuracy, translating recognized signs into full sentences, and extending the system to other sign languages and to facial expressions.
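The pipeline summarized above (segment the hand pixels in a captured frame, then pass them through a trained CNN) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the presented system. A single frame is given as a nested list of RGB tuples rather than a live video capture. Skin pixels are found with fixed YCrCb chroma thresholds, a common heuristic assumed here. The kernel, dense weights, biases, and the two labels (`LABELS`, `KERNEL`, `WEIGHTS`, `BIASES`) are hypothetical hand-set values standing in for a trained model.

```python
# Minimal sketch (assumptions, not the presented system): one RGB frame as a
# nested list, fixed YCrCb skin thresholds, and hand-set "trained" weights.
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255
Grid = List[List[float]]

# Hypothetical stand-ins for a trained model: one 3x3 averaging filter and a
# 2-class dense layer over the 4 pooled features of a 6x6 frame.
LABELS = ["hand sign", "no hand"]
KERNEL: Grid = [[1 / 9] * 3 for _ in range(3)]
WEIGHTS: Grid = [[1.0, 1.0, 1.0, 1.0], [-1.0, -1.0, -1.0, -1.0]]
BIASES: List[float] = [0.0, 1.0]


def rgb_to_crcb(r: int, g: int, b: int) -> Tuple[float, float]:
    """Chroma components (Cr, Cb) of full-range BT.601 (JPEG) YCbCr."""
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    return cr, cb


def segment_hand(frame: Sequence[Sequence[Pixel]]) -> Grid:
    """Binary mask: 1.0 where a pixel falls in an assumed skin-color range."""
    mask = []
    for row in frame:
        out = []
        for r, g, b in row:
            cr, cb = rgb_to_crcb(r, g, b)
            # Commonly cited skin ranges; real systems tune or learn these.
            out.append(1.0 if 133 <= cr <= 173 and 77 <= cb <= 127 else 0.0)
        mask.append(out)
    return mask


def conv2d_valid(img: Grid, kernel: Grid) -> Grid:
    """'Valid' 2-D cross-correlation, which CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(img[i + u][j + v] * kernel[u][v]
                for u in range(kh) for v in range(kw))
            for j in range(len(img[0]) - kw + 1)
        ]
        for i in range(len(img) - kh + 1)
    ]


def relu(x: Grid) -> Grid:
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2(x: Grid) -> Grid:
    """2x2 max pooling, stride 2; a trailing odd row/column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def classify(mask: Grid, kernel: Grid, weights: Grid,
             biases: List[float]) -> List[float]:
    """Conv -> ReLU -> max-pool -> flatten -> dense -> softmax probabilities."""
    pooled = max_pool2(relu(conv2d_valid(mask, kernel)))
    features = [v for row in pooled for v in row]
    logits = []
    for ws, b in zip(weights, biases):
        if len(ws) != len(features):
            raise ValueError("dense weights do not match feature count")
        logits.append(sum(w * f for w, f in zip(ws, features)) + b)
    return softmax(logits)


if __name__ == "__main__":
    skin, background = (224, 172, 138), (0, 0, 255)
    frame = [[skin if 1 <= i <= 4 and 1 <= j <= 4 else background
              for j in range(6)] for i in range(6)]
    probs = classify(segment_hand(frame), KERNEL, WEIGHTS, BIASES)
    print(LABELS[probs.index(max(probs))], [round(p, 3) for p in probs])
```

In a real capture loop, each video frame would be segmented and classified in turn, and the CNN weights would come from training on labeled gesture images. Fixed color thresholds are the weakest part of this sketch, since skin-color segmentation is sensitive to lighting and skin tone.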

![References slide](https://image.slidesharecdn.com/saiprojectppt-240428105520-1684de66/75/Hand-gesture-recognition-PROJECT-PPT-pptx-22-2048.jpg)