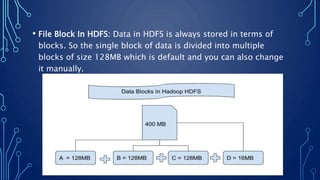

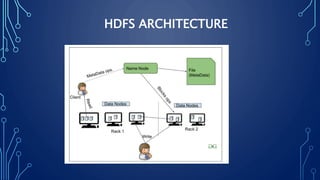

Hadoop is an open-source framework that stores and processes big data on clusters of commodity hardware using the MapReduce programming model. It has four main components: HDFS for storage, MapReduce for distributed processing, YARN for resource management and scheduling, and Hadoop Common, a set of shared utilities. HDFS splits large files into blocks and replicates those blocks across nodes, so the data survives the failure of an individual node. A MapReduce job is divided into a map phase and a reduce phase so that data can be processed in parallel. YARN allocates cluster resources and manages job execution. Hadoop Common provides the libraries and scripts used by all the other components. Major companies use Hadoop to analyze large amounts of data.
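To make the map and reduce phases concrete, here is a minimal in-memory sketch of a word count written in plain Python. It does not use Hadoop or any Hadoop API. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, chosen for illustration, and the grouping step stands in for the shuffle that the framework performs between the two phases on a real cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group all emitted values by key (illustrative stand-in for the
    framework's shuffle between the map and reduce phases)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: combine all values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce sequentially over a list of lines.
    On a cluster, map calls run in parallel on different input blocks."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "big cluster"]))
```

Because each call to `map_phase` depends only on its own line, and each call to `reduce_phase` depends only on the values for its own key, both steps could run on different nodes at the same time. That independence is what lets the model process data in parallel.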