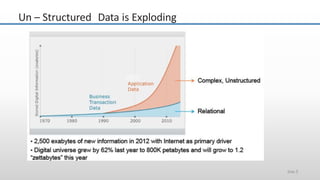

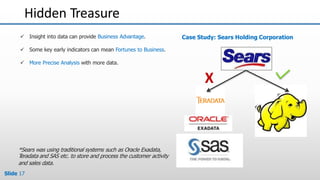

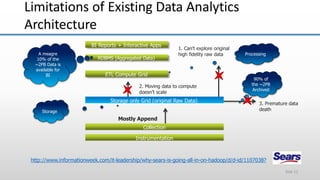

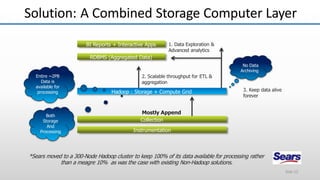

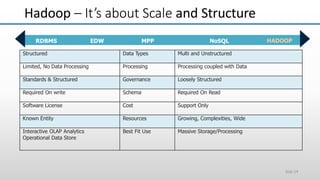

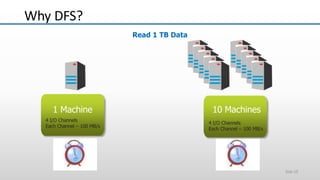

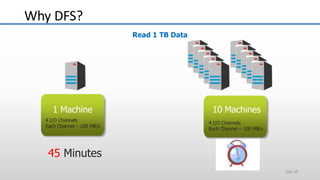

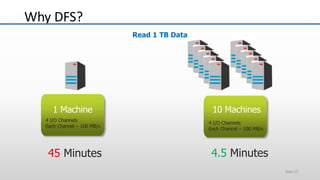

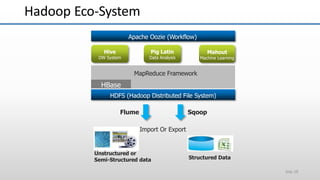

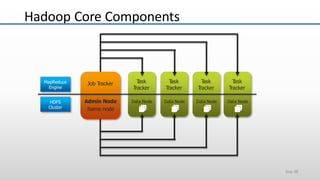

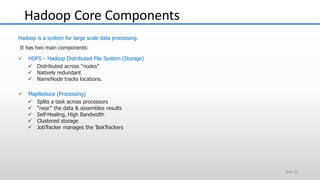

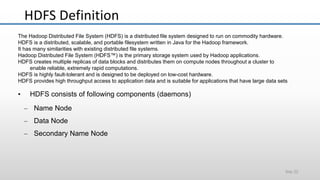

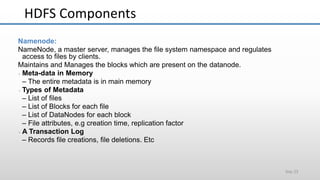

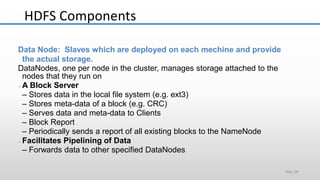

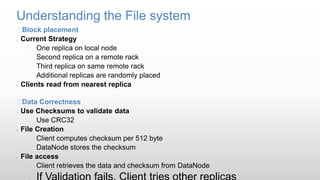

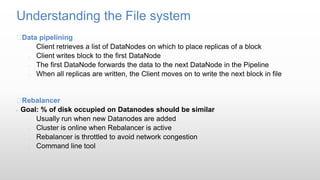

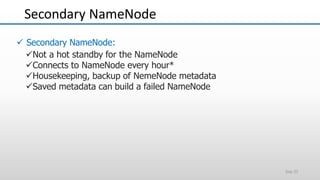

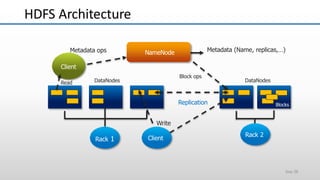

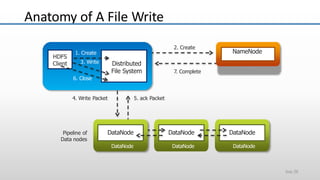

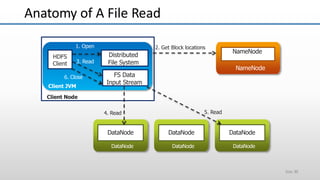

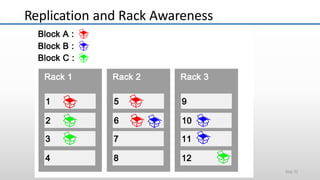

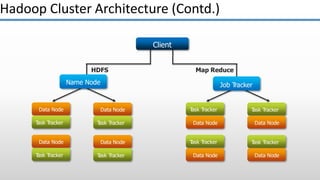

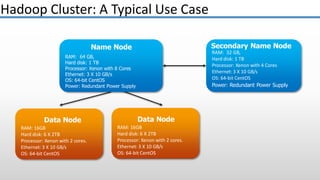

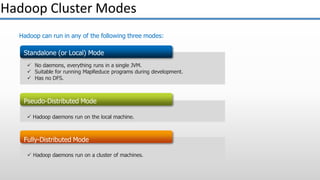

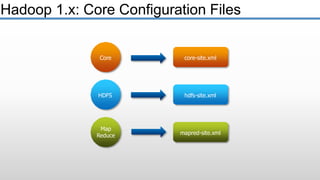

This document gives an overview of big data and its characteristics, the challenges it poses, and the limitations of traditional data processing tools. It introduces Hadoop as a solution and details its core components: HDFS (Hadoop Distributed File System) for storage and MapReduce for large-scale data processing. It also discusses use cases and customer scenarios across different industries. Finally, it describes the Hadoop architecture, including the roles of the NameNode and the DataNodes in managing and storing large datasets, and shows how this distributed design makes processing efficient.
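To make the MapReduce model mentioned above more concrete, here is a minimal single-process sketch of the map, shuffle, and reduce phases applied to a word count. It is plain Python and does not use Hadoop itself. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are illustrative assumptions, not part of any Hadoop API. In a real cluster the map and reduce steps would run in parallel on different nodes over blocks stored in HDFS.

```python
from collections import defaultdict


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: combine all values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases sequentially (hypothetical driver function)."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "Big Hadoop"]))
```

The sketch keeps each phase as a separate function so the data flow between them stays visible. It says nothing about fault tolerance, data locality, or scheduling, which are what the Hadoop framework adds on top of this programming model.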