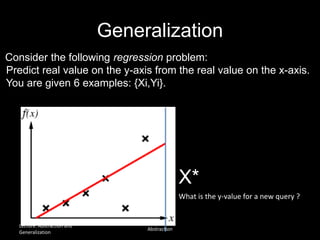

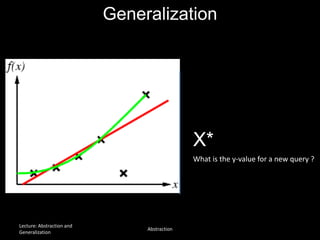

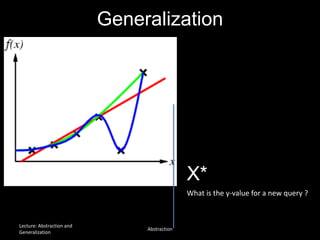

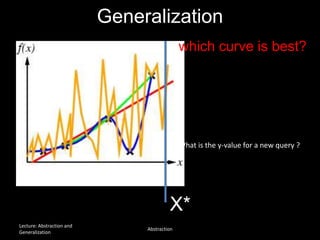

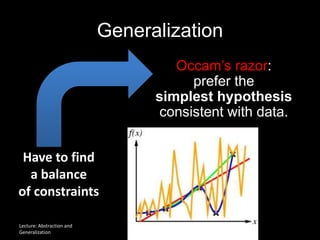

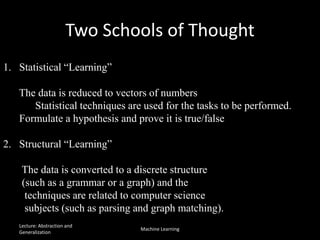

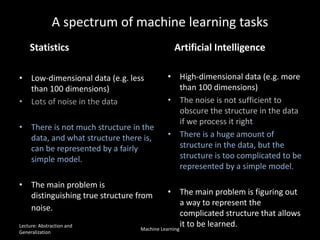

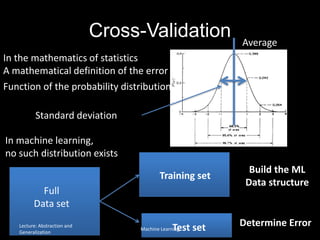

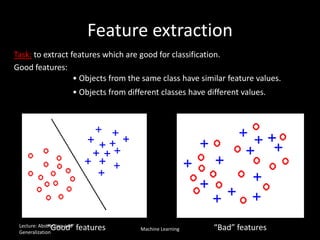

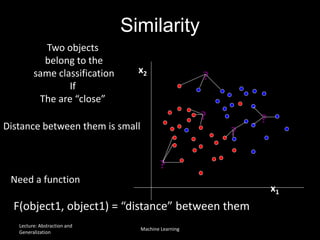

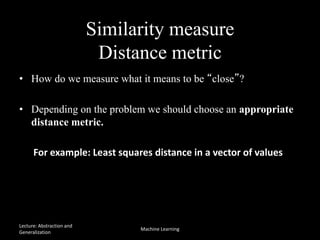

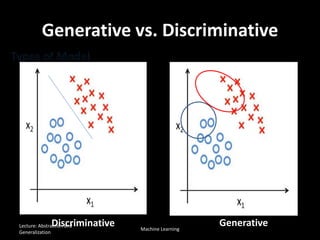

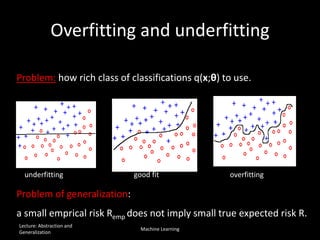

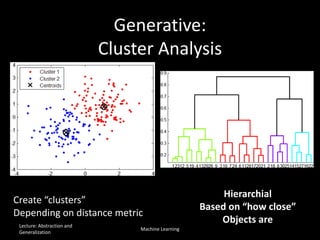

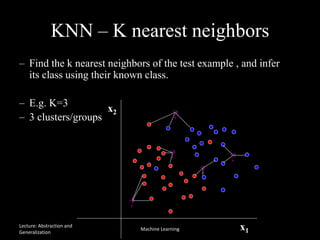

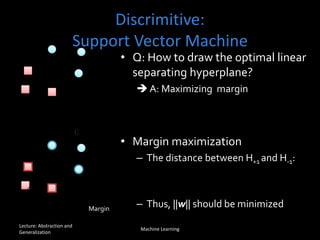

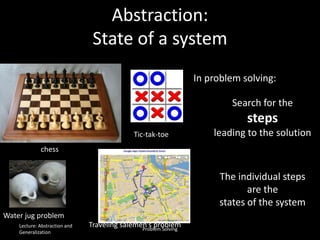

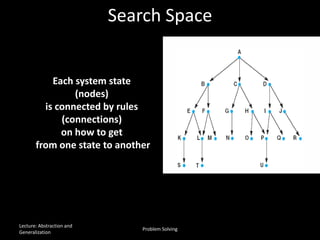

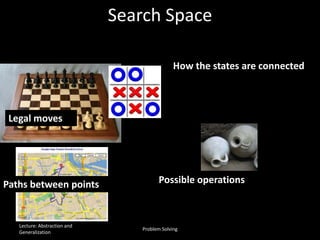

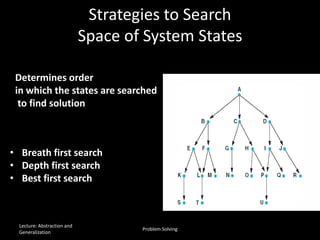

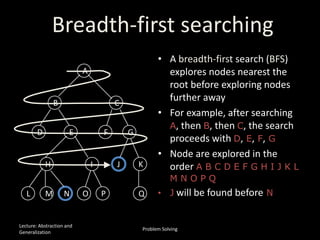

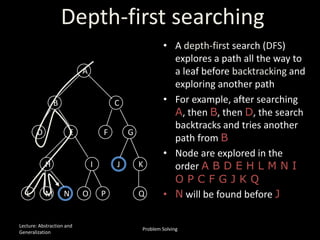

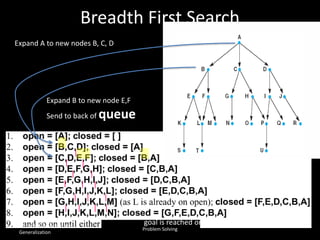

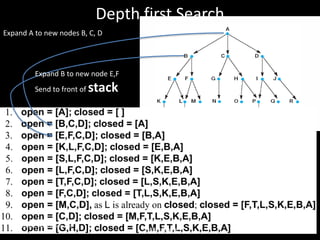

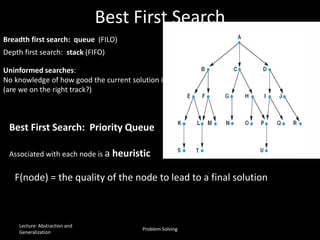

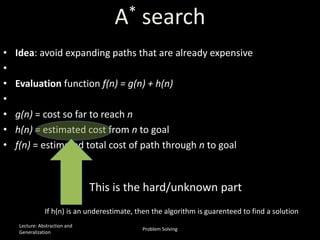

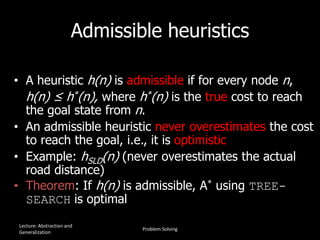

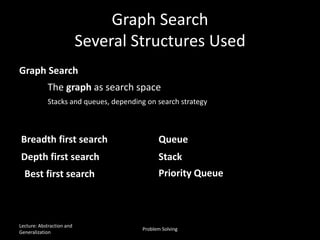

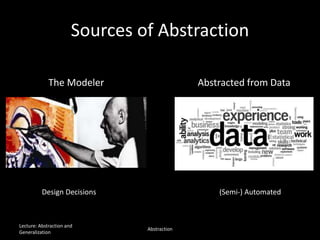

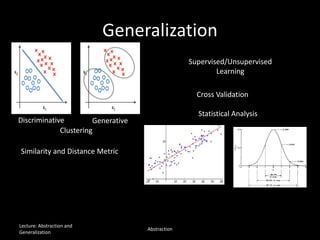

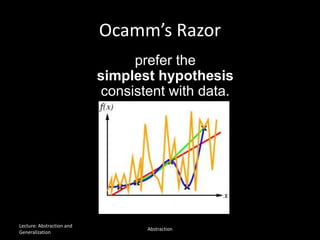

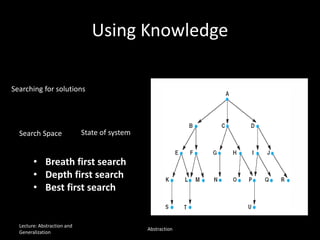

The document discusses abstraction and generalization in knowledge representation and problem solving, and emphasizes that the right representation of reality depends on which aspects are to be modeled. It covers machine learning techniques, including supervised and unsupervised learning, pattern recognition, and classification algorithms, and touches on statistical methods and the problems of overfitting and underfitting. It also outlines problem-solving strategies over search spaces, namely breadth-first, depth-first, and best-first search, and highlights the role of heuristics in guiding search toward a goal more efficiently.
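The three search strategies differ mainly in how the frontier of unexplored states is ordered. The sketch below illustrates that difference: breadth-first uses a FIFO queue, depth-first uses a LIFO stack, and greedy best-first uses a priority queue ordered only by a heuristic estimate h(n). It is a minimal illustration, not the method used in the slides. The toy graph `GRAPH`, the heuristic table `H`, and the function names are hypothetical, chosen only for this example. Each function returns the path found together with the number of nodes removed from the frontier, which makes the effect of the heuristic visible.

```python
import heapq
import itertools
from collections import deque

# Hypothetical toy state space: an undirected graph given as adjacency lists.
GRAPH = {
    "S": ["A", "B"],
    "A": ["S", "C"],
    "B": ["S", "G"],
    "C": ["A", "D"],
    "D": ["C", "G"],
    "G": ["B", "D"],
}

# Hypothetical heuristic h(n): estimated number of steps from n to the goal "G".
H = {"S": 2, "A": 3, "B": 1, "C": 2, "D": 1, "G": 0}


def bfs(graph, start, goal):
    """Breadth-first search: FIFO frontier, shallowest node first.
    Returns (path, number of nodes removed from the frontier)."""
    frontier = deque([[start]])
    seen = {start}
    pops = 0
    while frontier:
        path = frontier.popleft()
        pops += 1
        if path[-1] == goal:
            return path, pops
        for nxt in graph[path[-1]]:
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(path + [nxt])
    return None, pops


def dfs(graph, start, goal):
    """Depth-first search: LIFO frontier, deepest node first."""
    frontier = [[start]]
    visited = set()
    pops = 0
    while frontier:
        path = frontier.pop()
        pops += 1
        node = path[-1]
        if node == goal:
            return path, pops
        if node in visited:
            continue
        visited.add(node)
        # Push neighbours in reverse so they are explored in listed order.
        for nxt in reversed(graph[node]):
            if nxt not in visited:
                frontier.append(path + [nxt])
    return None, pops


def greedy_best_first(graph, h, start, goal):
    """Greedy best-first search: priority queue ordered by h(n) alone."""
    tie = itertools.count()  # tie-breaker so heapq never has to compare paths
    frontier = [(h[start], next(tie), [start])]
    visited = set()
    pops = 0
    while frontier:
        _, _, path = heapq.heappop(frontier)
        pops += 1
        node = path[-1]
        if node == goal:
            return path, pops
        if node in visited:
            continue
        visited.add(node)
        for nxt in graph[node]:
            if nxt not in visited:
                heapq.heappush(frontier, (h[nxt], next(tie), path + [nxt]))
    return None, pops


if __name__ == "__main__":
    print("BFS   ", bfs(GRAPH, "S", "G"))
    print("DFS   ", dfs(GRAPH, "S", "G"))
    print("Greedy", greedy_best_first(GRAPH, H, "S", "G"))
```

On this toy graph, breadth-first returns the shortest path `S, B, G` after 5 removals; depth-first commits to the `A` branch and returns the longer path `S, A, C, D, G`, also after 5 removals; greedy best-first follows the low h-values and reaches `S, B, G` after only 3. Greedy best-first ignores the cost already paid, so it is not guaranteed to find the shortest path in general; A* addresses this by ordering the frontier by path cost plus h(n).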