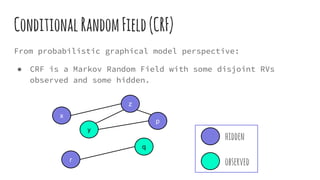

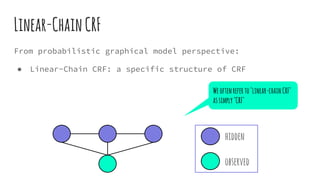

This document provides an overview of linear-chain conditional random fields (CRFs), including how they relate to logistic regression and how they can be used for tasks like part-of-speech tagging and speech disfluency detection. It explains that linear-chain CRFs are a type of log-linear model that uses a graph structure to represent relationships between input features and output labels. Feature functions in CRFs can capture dependencies between neighboring output labels. The document provides examples of how CRFs are trained and tested for sequence labeling tasks.

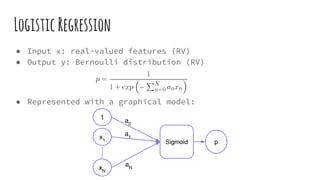

![Log-Linear Model

Note:

1. “Feature” vs. “feature function”

○ Feature: corresponds only to the input x

○ Feature function: corresponds to both the input x and the output y

2. The denominator must sum over all possible labels y', which normalizes the probability into [0, 1].

General form:

● F_j(x, y): the j-th feature function](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-14-320.jpg)
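
The slide's equation did not survive extraction, so the following is a minimal sketch of the standard log-linear form, p(y | x; w) = exp(Σ_j w_j F_j(x, y)) / Σ_{y'} exp(Σ_j w_j F_j(x, y')), and not necessarily the exact notation on the slide. The label set, the two feature functions, and the weights are hypothetical and chosen only for illustration.

```python
import math

def log_linear_prob(x, y, labels, feature_functions, weights):
    """Return p(y | x; w) for a log-linear model over a finite label set."""
    def score(label):
        # Weighted sum of feature functions F_j(x, label).
        return sum(w * F(x, label) for w, F in zip(weights, feature_functions))

    scores = {label: score(label) for label in labels}
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores.values())
    # The denominator sums over every candidate label y'.
    z = sum(math.exp(s - m) for s in scores.values())
    return math.exp(scores[y] - m) / z

# Hypothetical toy setup: x is a single word, y is one of two POS tags.
LABELS = ["NOUN", "VERB"]
FEATURES = [
    lambda x, y: 1.0 if x.endswith("ing") and y == "VERB" else 0.0,
    lambda x, y: 1.0 if x[0].isupper() and y == "NOUN" else 0.0,
]
WEIGHTS = [2.0, 1.5]

probs = {y: log_linear_prob("running", y, LABELS, FEATURES, WEIGHTS) for y in LABELS}
print(probs)
```

Because each feature function looks at both x and y, the same input can score differently under different candidate labels, which is what distinguishes a feature function from a plain input feature.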

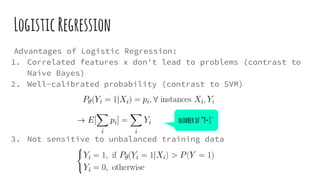

![Linear-Chain CRF

From the log-linear model point of view, a linear-chain CRF is a log-linear model in which:

1. The length L of the output y can vary.

2. Each feature function F_j is a sum of “low-level feature functions” f_j, one term per position in the sequence.

“We can have a fixed set of feature-functions F_j for log-linear training, even though the training examples are not fixed-length.” [1]](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-19-320.jpg)
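
To illustrate how one fixed feature function can cover sequences of any length, here is a small sketch that computes F_j(x, y) as the sum over positions i of f_j(x, y_i, y_{i-1}, i). The `START` placeholder for the tag before the first position, the tag names, and the example feature are assumptions made for this sketch, not part of the slides.

```python
START = "<START>"  # assumed placeholder for the tag preceding position 0

def global_feature(f, x, y):
    """F_j(x, y): sum of the low-level f_j(x, y_i, y_prev, i) over all positions."""
    total = 0.0
    prev = START
    for i, tag in enumerate(y):
        total += f(x, tag, prev, i)
        prev = tag
    return total

def f_det_then_noun(x, y_i, y_prev, i):
    """Hypothetical low-level feature: a determiner tag followed by a noun tag."""
    return 1.0 if y_prev == "DET" and y_i == "NOUN" else 0.0

short_value = global_feature(f_det_then_noun, ["the", "dog"], ["DET", "NOUN"])
long_value = global_feature(
    f_det_then_noun,
    ["the", "dog", "saw", "a", "cat"],
    ["DET", "NOUN", "VERB", "DET", "NOUN"],
)
print(short_value, long_value)
```

The same `f_det_then_noun` is applied to a two-word and a five-word sentence, so the number of weights to learn stays fixed regardless of sequence length.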

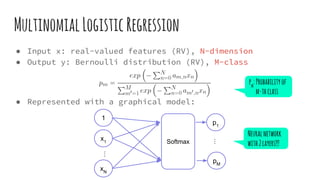

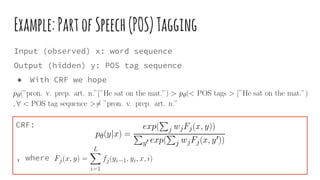

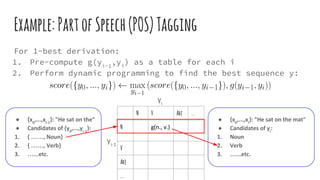

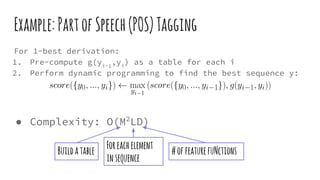

![Example: Part-of-Speech (POS) Tagging

An example of a low-level feature function f_j(x, y_i, y_{i-1}, i):

● "The i-th word in x is capitalized, and POS tag y_i = proper noun." [TRUE (1) or FALSE (0)]

If w_j is large and positive: with x and everything else held fixed, y is more probable when f_j(x, y_i, y_{i-1}, i) is activated.

CRF: $p(y \mid x; w) = \frac{\exp\left(\sum_j w_j F_j(x, y)\right)}{\sum_{y'} \exp\left(\sum_j w_j F_j(x, y')\right)}$, where $F_j(x, y) = \sum_{i=1}^{L} f_j(x, y_i, y_{i-1}, i)$

Note: a feature function need not use all of the given information.](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-22-320.jpg)
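
The effect of a large positive weight can be checked numerically. The sketch below implements the capitalization feature from the slide and computes the sequence probability by brute-force enumeration of every tag sequence, which is only feasible for tiny examples; the three-tag set, the sentence, and the weight values are assumptions for illustration.

```python
import itertools
import math

TAGS = ["PROPN", "NOUN", "VERB"]  # assumed tiny tag set

def f_cap_propn(x, y_i, y_prev, i):
    """1 if the i-th word is capitalized and tagged as a proper noun, else 0.

    Note that y_prev is ignored: a feature function need not use every argument.
    """
    return 1.0 if x[i][0].isupper() and y_i == "PROPN" else 0.0

def sequence_score(x, y, feats, weights):
    """Sum over positions i and features j of w_j * f_j(x, y_i, y_{i-1}, i)."""
    total = 0.0
    for i in range(len(x)):
        y_prev = y[i - 1] if i > 0 else "<START>"
        for w, f in zip(weights, feats):
            total += w * f(x, y[i], y_prev, i)
    return total

def crf_prob(x, y, feats, weights):
    """p(y | x; w), normalizing over all len(TAGS) ** len(x) tag sequences."""
    all_scores = [
        sequence_score(x, list(candidate), feats, weights)
        for candidate in itertools.product(TAGS, repeat=len(x))
    ]
    log_z = math.log(sum(math.exp(s) for s in all_scores))
    return math.exp(sequence_score(x, y, feats, weights) - log_z)

x = ["Alice", "runs"]
y = ["PROPN", "VERB"]
p_zero_weight = crf_prob(x, y, [f_cap_propn], [0.0])
p_large_weight = crf_prob(x, y, [f_cap_propn], [3.0])
print(p_zero_weight, p_large_weight)
```

With a zero weight every tag sequence is equally likely; raising the weight shifts probability toward sequences that tag the capitalized word as a proper noun. Real implementations avoid the exponential enumeration by computing the normalizer with dynamic programming over the chain.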

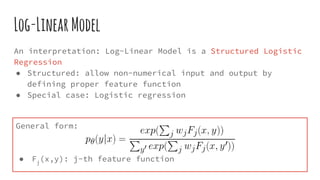

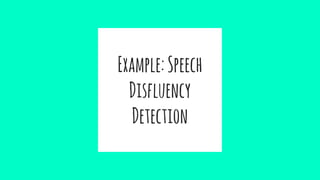

![Example: Speech Disfluency Detection

One application of CRFs in speech recognition: boundary/disfluency detection [5]

● Repetition: “It is is Tuesday.”

● Hesitation: “It is uh… Tuesday.”

● Correction: “It is Monday, I mean, Tuesday.”

● etc.

Possible clues: prosody

● Pitch

● Duration

● Energy

● Pause

● etc.

“It is uh… Tuesday.”

● Pitch reset?

● Long duration?

● Low energy?

● Pause present?](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-31-320.jpg)
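
The four yes/no questions above map naturally onto binary low-level feature functions. The following is a rough sketch of that idea only: the `Word` record, the label names, the thresholds, and every measurement value are made up for illustration and are not taken from [5].

```python
from dataclasses import dataclass

@dataclass
class Word:
    text: str
    duration_s: float     # hypothetical word duration in seconds
    energy_db: float      # hypothetical average energy
    pause_after_s: float  # hypothetical silence after the word
    pitch_reset: bool     # hypothetical flag: pitch resets at this word

# Illustrative thresholds, not values from the literature.
LONG_DURATION_S, LOW_ENERGY_DB, MIN_PAUSE_S = 0.4, 50.0, 0.2

def f_long_duration(x, y_i, y_prev, i):
    return 1.0 if x[i].duration_s > LONG_DURATION_S and y_i == "DISFLUENT" else 0.0

def f_low_energy(x, y_i, y_prev, i):
    return 1.0 if x[i].energy_db < LOW_ENERGY_DB and y_i == "DISFLUENT" else 0.0

def f_pause(x, y_i, y_prev, i):
    return 1.0 if x[i].pause_after_s > MIN_PAUSE_S and y_i == "DISFLUENT" else 0.0

def f_pitch_reset(x, y_i, y_prev, i):
    # Fires on the word that follows a disfluency and carries a pitch reset.
    return 1.0 if x[i].pitch_reset and y_prev == "DISFLUENT" else 0.0

FEATURES = {
    "long_duration": f_long_duration,
    "low_energy": f_low_energy,
    "pause": f_pause,
    "pitch_reset": f_pitch_reset,
}

# "It is uh... Tuesday." with invented prosodic measurements.
x = [
    Word("It", 0.15, 62.0, 0.0, False),
    Word("is", 0.18, 60.0, 0.0, False),
    Word("uh", 0.55, 48.0, 0.30, False),
    Word("Tuesday", 0.50, 63.0, 0.0, True),
]
y = ["FLUENT", "FLUENT", "DISFLUENT", "FLUENT"]

# Count how often each feature fires along the labeled sequence.
activations = {
    name: sum(f(x, y[i], y[i - 1] if i > 0 else "<START>", i) for i in range(len(x)))
    for name, f in FEATURES.items()
}
print(activations)
```

Each feature pairs a prosodic cue with a label, so training can learn how strongly each cue indicates a disfluency rather than relying on hand-set rules.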

![Example: Speech Disfluency Detection

One application of CRFs in speech recognition: boundary/disfluency detection [5]

● CRF input x: prosodic features

● CRF output y:

(Diagram labels: Speech Recognition, Rescoring)](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-32-320.jpg)

![Reference

[1] Charles Elkan, “Log-linear Models and Conditional Random Fields”

○ Tutorial at CIKM08 (ACM International Conference on Information and Knowledge Management)

○ Video: http://videolectures.net/cikm08_elkan_llmacrf/

○ Lecture notes: http://cseweb.ucsd.edu/~elkan/250B/cikmtutorial.pdf

[2] Hanna M. Wallach, “Conditional Random Fields: An Introduction”

[3] Jeremy Morris, “Conditional Random Fields: An Overview”

○ Presented at OSU Clippers 2008, January 11, 2008](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-33-320.jpg)

![Reference

[4] J. Lafferty, A. McCallum, and F. Pereira, “Conditional random fields: Probabilistic models for segmenting and labeling sequence data”, 2001.

[5] Y. Liu, E. Shriberg, A. Stolcke, D. Hillard, M. Ostendorf, and M. Harper, “Enriching speech recognition with automatic detection of sentence boundaries and disfluencies”, IEEE Transactions on Audio, Speech, and Language Processing, 2006.](https://image.slidesharecdn.com/fromlogisticregressiontolinear-chaincrf-160717222729/85/From-logistic-regression-to-linear-chain-CRF-34-320.jpg)