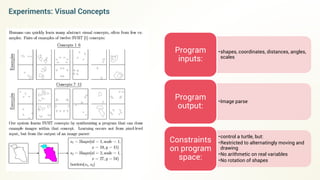

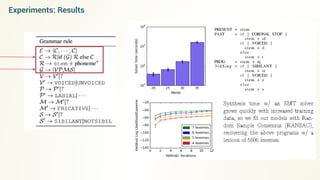

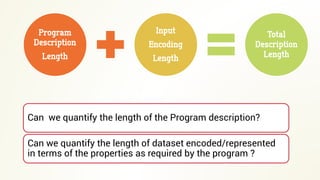

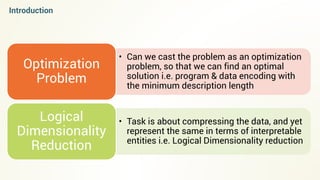

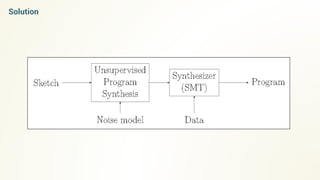

- The document frames program synthesis as an optimization problem: find the shortest program that fits the given observations and satisfies the given constraints.

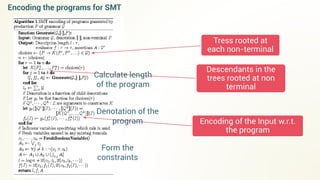

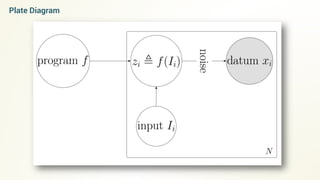

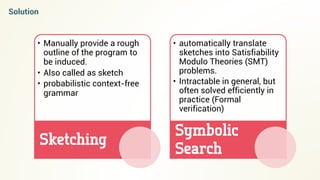

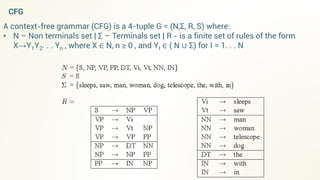

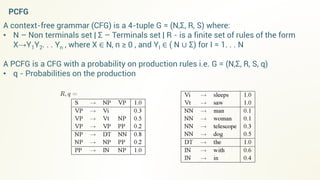

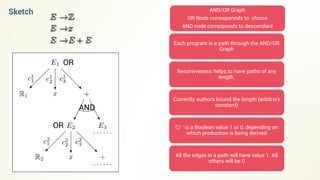

- It proposes using a probabilistic context-free grammar to define the search space of possible programs, and casts synthesis as finding a satisfying assignment to a set of constraints over the program variables.

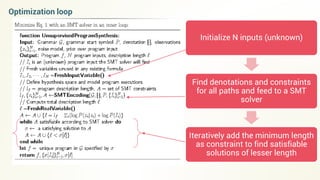

- An iterative algorithm is described: find a program that satisfies the constraints, add a constraint that the next program must be shorter, and repeat until no shorter satisfying program exists.
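The iterative shortening loop can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy operation set `OPS`, the helper names, and the brute-force `find_program`, which stands in for a real constraint solver and deliberately returns long solutions first so that the tightening loop has work to do.

```python
from itertools import product

# Hypothetical toy language: a program is a tuple of operation names
# applied left to right to an integer input.
OPS = {
    "inc": lambda x: x + 1,
    "dbl": lambda x: x * 2,
    "dec": lambda x: x - 1,
}

def run(program, x):
    """Execute a program on input x."""
    for op in program:
        x = OPS[op](x)
    return x

def find_program(observations, max_len):
    """Stand-in for a solver call: return some program of length <= max_len
    that reproduces every (input, output) pair, or None if there is none.
    Longer programs are tried first, mimicking a solver that returns an
    arbitrary (not necessarily minimal) solution."""
    for length in range(max_len, -1, -1):
        for program in product(OPS, repeat=length):
            if all(run(program, i) == o for i, o in observations):
                return program
    return None

def shortest_program(observations, initial_bound=4):
    """Repeatedly ask for a solution, then require the next one to be
    strictly shorter, until the constraints become unsatisfiable."""
    best = None
    bound = initial_bound
    while bound >= 0:
        candidate = find_program(observations, bound)
        if candidate is None:
            break
        best = candidate
        bound = len(candidate) - 1  # the added length constraint
    return best

print(shortest_program([(1, 4), (2, 6), (3, 8)]))
```

In a real system the enumeration would be replaced by a solver query, but the outer loop keeps the same shape: the last program found before the query fails is the shortest one within the initial bound.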

![Motivation – 2

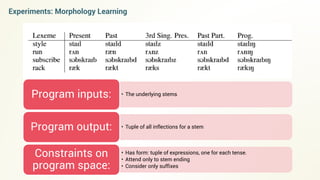

Learning Morphological Rules

Style, styled

hatch,hatched

Articulate,articulated

Pay,paid

Lay,laid

Need,needed

Program

if [ property1.value1 == True ]

(stem + d)

elseif [ property3.value2 > 5 ]

(stem +ed)

Elseif [property4.value5 == “y”]

(stem + id)

Synthesize Execute

Style, styled

hatch,hatched

Articulate,articulated

Pay,paid

Lay,laid

Need,needed

Run,ran

Noise

<stem, word in past tense>

Program(snatch) = snatched](https://image.slidesharecdn.com/rg-160603044935/85/Unsupervised-program-synthesis-3-320.jpg)
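The rule program on the Motivation slide uses placeholder conditions (`property1.value1` and so on), so the following is only a sketch with made-up concrete conditions. The suffix tests in `past_tense` are assumptions chosen to fit the listed pairs; they are not the conditions from the slides and they do not generalize to English as a whole.

```python
def past_tense(stem):
    """Hypothetical three-branch rule program in the shape of the slide's
    if / elseif chain. The conditions are illustrative assumptions."""
    if stem.endswith("e"):
        return stem + "d"          # style -> styled
    elif stem.endswith("ay"):
        return stem[:-1] + "id"    # pay -> paid (the final "y" becomes "id")
    else:
        return stem + "ed"         # hatch -> hatched

# Observations from the slide, lowercased: <stem, word in past tense>.
OBSERVATIONS = [
    ("style", "styled"),
    ("hatch", "hatched"),
    ("articulate", "articulated"),
    ("pay", "paid"),
    ("lay", "laid"),
    ("need", "needed"),
    ("run", "ran"),
]

# Pairs the program reproduces, and pairs it must treat as noise.
explained = [(s, w) for s, w in OBSERVATIONS if past_tense(s) == w]
noise = [(s, w) for s, w in OBSERVATIONS if past_tense(s) != w]

print(past_tense("snatch"))
print(noise)
```

A short program that explains six of the seven pairs and leaves one as noise is the kind of trade-off the slide points at: covering the irregular pair would need an extra special-case branch.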

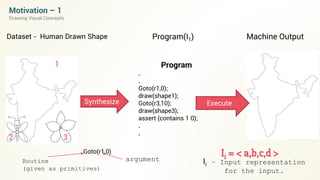

![Denotations](https://image.slidesharecdn.com/rg-160603044935/85/Unsupervised-program-synthesis-15-320.jpg)

Denotations

- Denotations are mathematical objects that describe the meaning of entities in a language.
- Every node in the selected path has a denotation.
- In a denotation, each non-terminal is an expression (or a routine) that takes an input I and whose output range is known.
- The path gives the sequence of routines, with the appropriate argument values obtained from the input.
- [Expression] (Input) = Output, that is, the output depends on the input.
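One way to read `[Expression] (Input) = Output` is that each expression node denotes a function from the input to an output. The sketch below assumes a tiny hypothetical expression language with three node kinds (`stem`, `const`, `append`); these names and the tuple encoding are illustrative and do not come from the slides.

```python
def denote(expr):
    """Map an expression tree to its denotation: a function of the input.
    Expressions are tuples whose first element names the node kind."""
    kind = expr[0]
    if kind == "stem":
        # Denotes the identity function on the input.
        return lambda inp: inp
    if kind == "const":
        # Denotes a constant function that ignores the input.
        text = expr[1]
        return lambda inp: text
    if kind == "append":
        # Denotes the concatenation of the two sub-denotations.
        left, right = denote(expr[1]), denote(expr[2])
        return lambda inp: left(inp) + right(inp)
    raise ValueError(f"unknown expression kind: {kind!r}")

# The expression (stem + ed) as a tree, then applied to an input.
add_ed = ("append", ("stem",), ("const", "ed"))
print(denote(add_ed)("hatch"))
```

Because every node has its own denotation, a sub-expression can be evaluated in isolation, which is what allows constraints to be stated on each node along a path.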