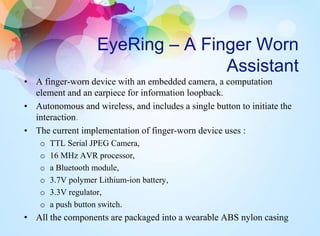

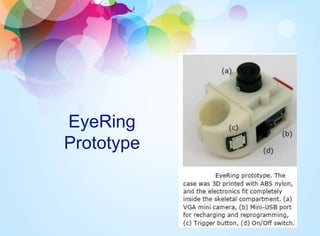

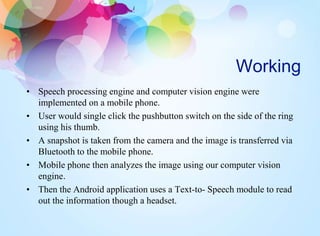

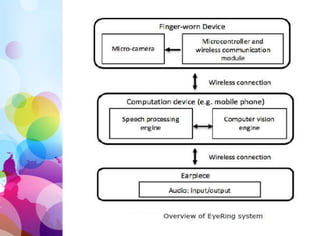

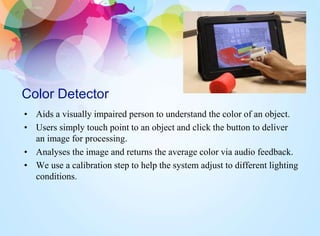

The document presents the 'Eyering', a finger-worn device that assists visually impaired users with tasks such as navigation, currency detection, and color recognition. The user operates it with touch and free-air pointing gestures; an integrated camera captures the scene, and a companion mobile application handles the processing and returns audio feedback. Proposed future applications include enabling users to read printed materials and improving navigation for tourists.
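The summary does not say how the color recognition is implemented, so the following is only a minimal sketch of one plausible approach: average the pixels in the pointed-at region and report the closest named color, which the mobile application could then speak aloud. The palette, its RGB values, and the function names (`average_color`, `nearest_color_name`, `describe_patch`) are all assumptions made for illustration, not part of the original system.

```python
# Hypothetical palette: the names and RGB values are illustrative assumptions,
# not taken from the device described in the document.
PALETTE = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
}


def average_color(pixels):
    """Return the mean (R, G, B) of a non-empty list of RGB tuples."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def nearest_color_name(rgb):
    """Return the palette name with the smallest squared RGB distance to rgb."""
    def squared_distance(name):
        return sum((a - b) ** 2 for a, b in zip(rgb, PALETTE[name]))

    return min(PALETTE, key=squared_distance)


def describe_patch(pixels):
    """Name the dominant color of an image patch given as RGB tuples."""
    return nearest_color_name(average_color(pixels))


if __name__ == "__main__":
    # A small reddish patch, standing in for pixels cropped from a camera frame.
    patch = [(250, 10, 5), (240, 20, 15)]
    print(describe_patch(patch))
```

Plain Euclidean distance in RGB is used here only because it is simple; a real system would likely need to account for lighting and perceptual color differences.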