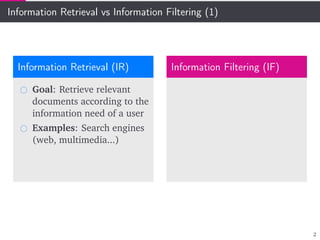

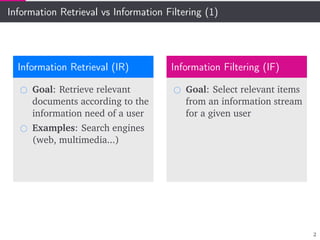

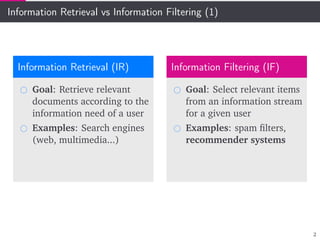

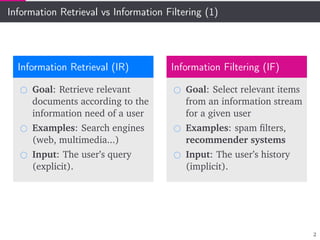

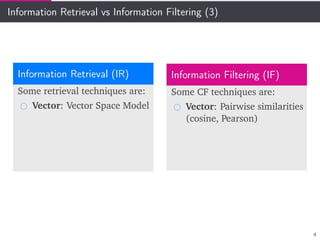

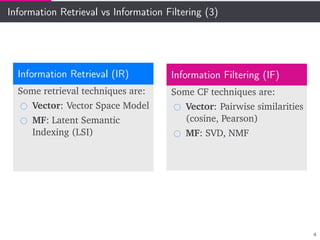

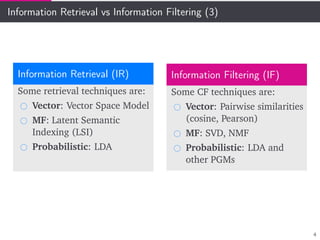

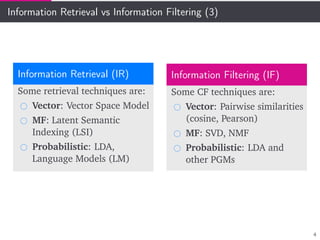

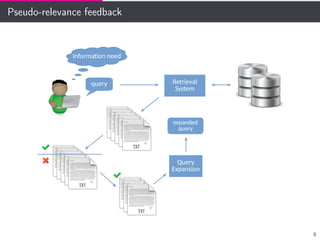

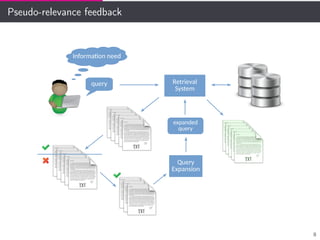

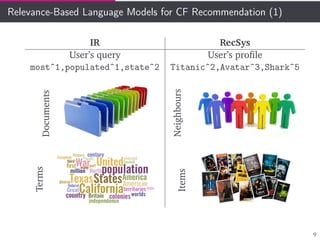

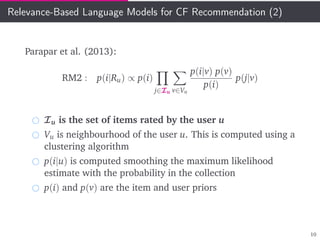

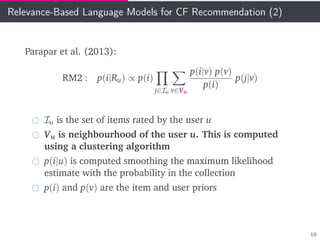

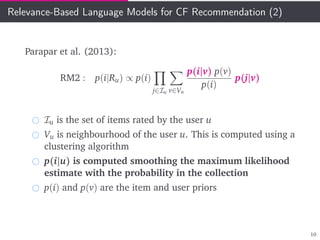

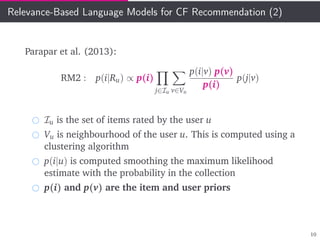

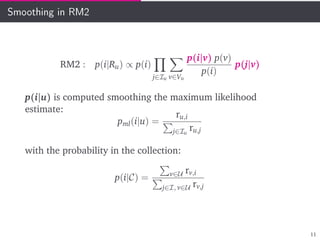

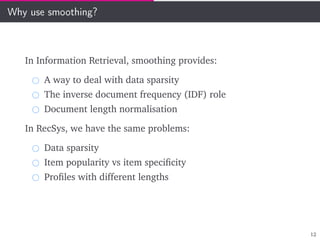

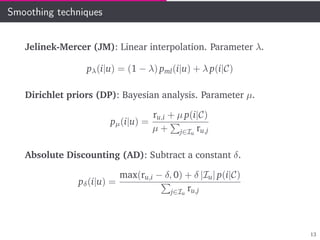

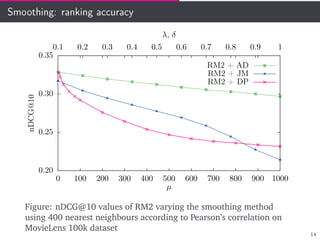

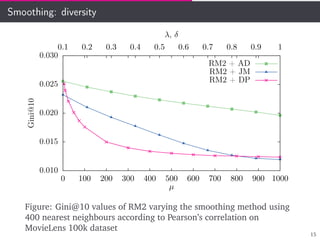

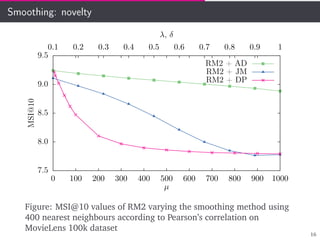

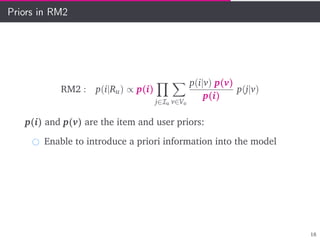

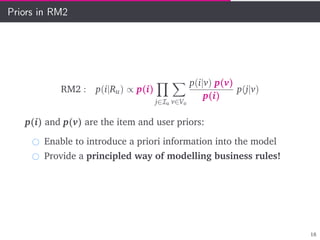

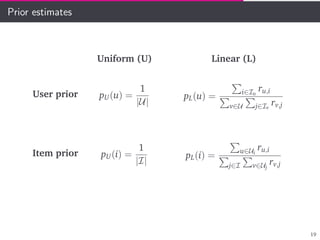

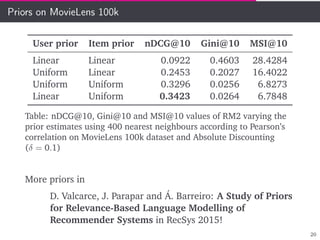

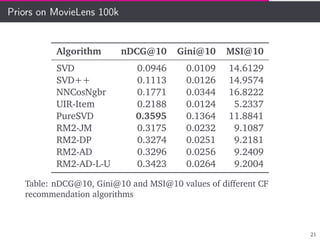

The document discusses how statistical language models can be applied to recommender systems, contrasting information retrieval (IR) with information filtering (IF). It highlights the potential of language models for collaborative filtering and explores query expansion techniques and the integration of user and item priors. It also reports results for several smoothing methods and their effect on recommendation accuracy, and compares the approach with other collaborative filtering algorithms.
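The sketch below is a hedged illustration of what "smoothing" means here, not the method shown in the slides. It assumes the common user-as-document convention: a user's ratings act as term frequencies, so $p(i \mid u)$ is a rating-weighted maximum-likelihood estimate combined with a collection model $p(i \mid C)$. Two standard IR smoothing methods are shown. Jelinek-Mercer uses $p_\lambda(i \mid u) = (1-\lambda)\,\frac{r(u,i)}{\sum_j r(u,j)} + \lambda\, p(i \mid C)$. Dirichlet uses $p_\mu(i \mid u) = \frac{r(u,i) + \mu\, p(i \mid C)}{\sum_j r(u,j) + \mu}$. Whether these are the exact variants evaluated in the document is an assumption. The function names `collection_model`, `jelinek_mercer` and `dirichlet`, the toy ratings, and the values of `lam` and `mu` are all hypothetical.

```python
from collections import defaultdict
from typing import Dict

# Hypothetical data layout: user -> {item: rating}
Ratings = Dict[str, Dict[str, float]]


def collection_model(ratings: Ratings) -> Dict[str, float]:
    """p(i|C): each item's share of the total rating mass across all users."""
    totals: Dict[str, float] = defaultdict(float)
    for profile in ratings.values():
        for item, r in profile.items():
            totals[item] += r
    grand = sum(totals.values())
    return {item: t / grand for item, t in totals.items()}


def jelinek_mercer(ratings: Ratings, coll: Dict[str, float],
                   user: str, item: str, lam: float = 0.5) -> float:
    """Linear interpolation of the user's ML estimate with p(i|C)."""
    profile = ratings[user]
    length = sum(profile.values())
    ml = profile.get(item, 0.0) / length if length > 0 else 0.0
    return (1 - lam) * ml + lam * coll.get(item, 0.0)


def dirichlet(ratings: Ratings, coll: Dict[str, float],
              user: str, item: str, mu: float = 10.0) -> float:
    """Bayesian smoothing with a Dirichlet prior centred on p(i|C)."""
    profile = ratings[user]
    length = sum(profile.values())
    return (profile.get(item, 0.0) + mu * coll.get(item, 0.0)) / (length + mu)


if __name__ == "__main__":
    # Toy ratings, purely illustrative
    ratings: Ratings = {
        "alice": {"a": 5, "b": 3},
        "bob": {"a": 4, "c": 2},
        "carol": {"b": 1, "c": 5},
    }
    coll = collection_model(ratings)
    for item in sorted(coll):
        print(item,
              round(jelinek_mercer(ratings, coll, "alice", item), 4),
              round(dirichlet(ratings, coll, "alice", item), 4))
```

For each user, both estimates sum to 1 over the catalogue. For an item the user has not rated, each one reduces to a scaled collection probability. Used alone, the estimate therefore ranks unseen items by popularity. This suggests why additional evidence, such as neighbours, expanded queries, or user and item priors, matters in a recommendation setting.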