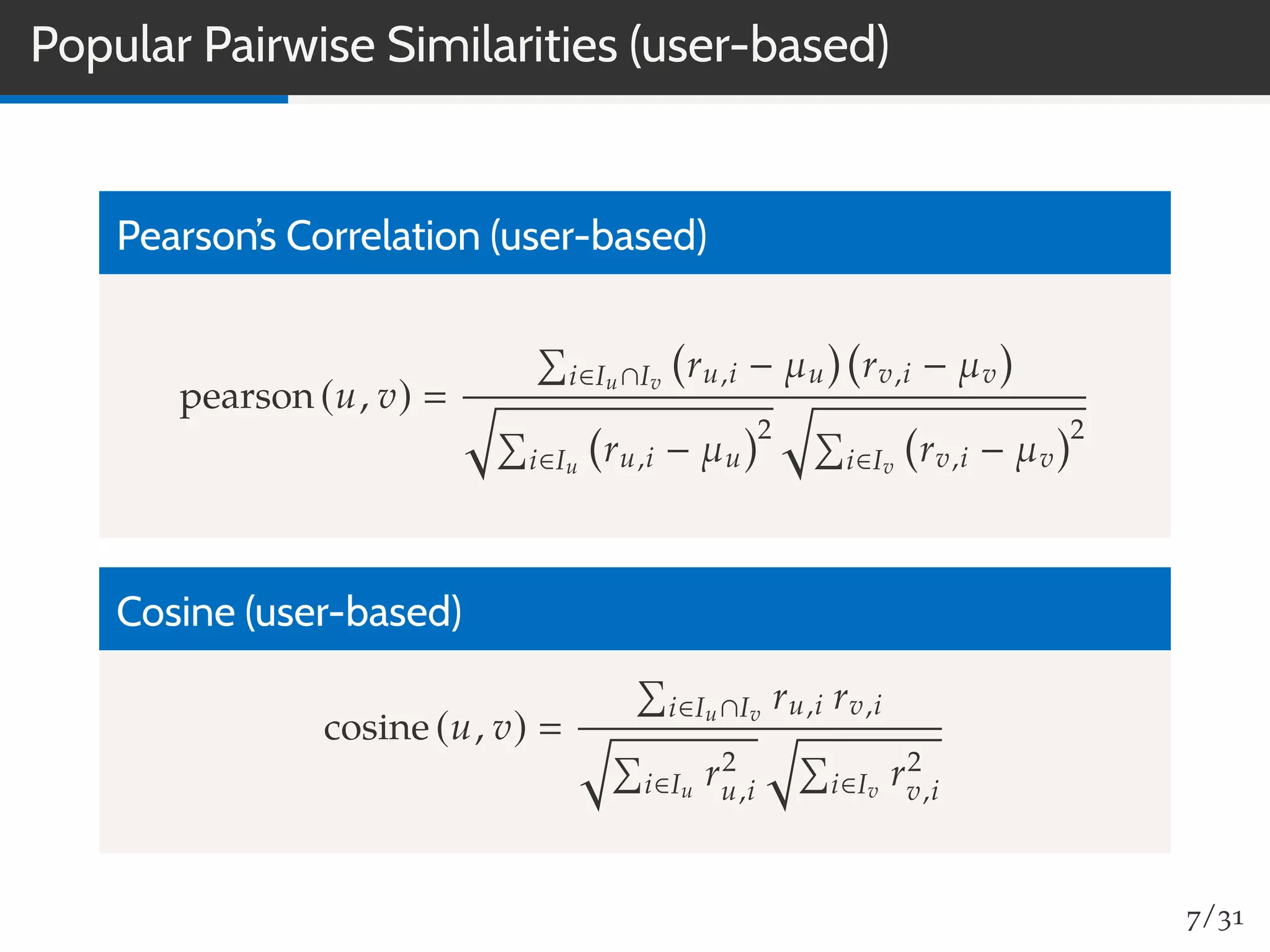

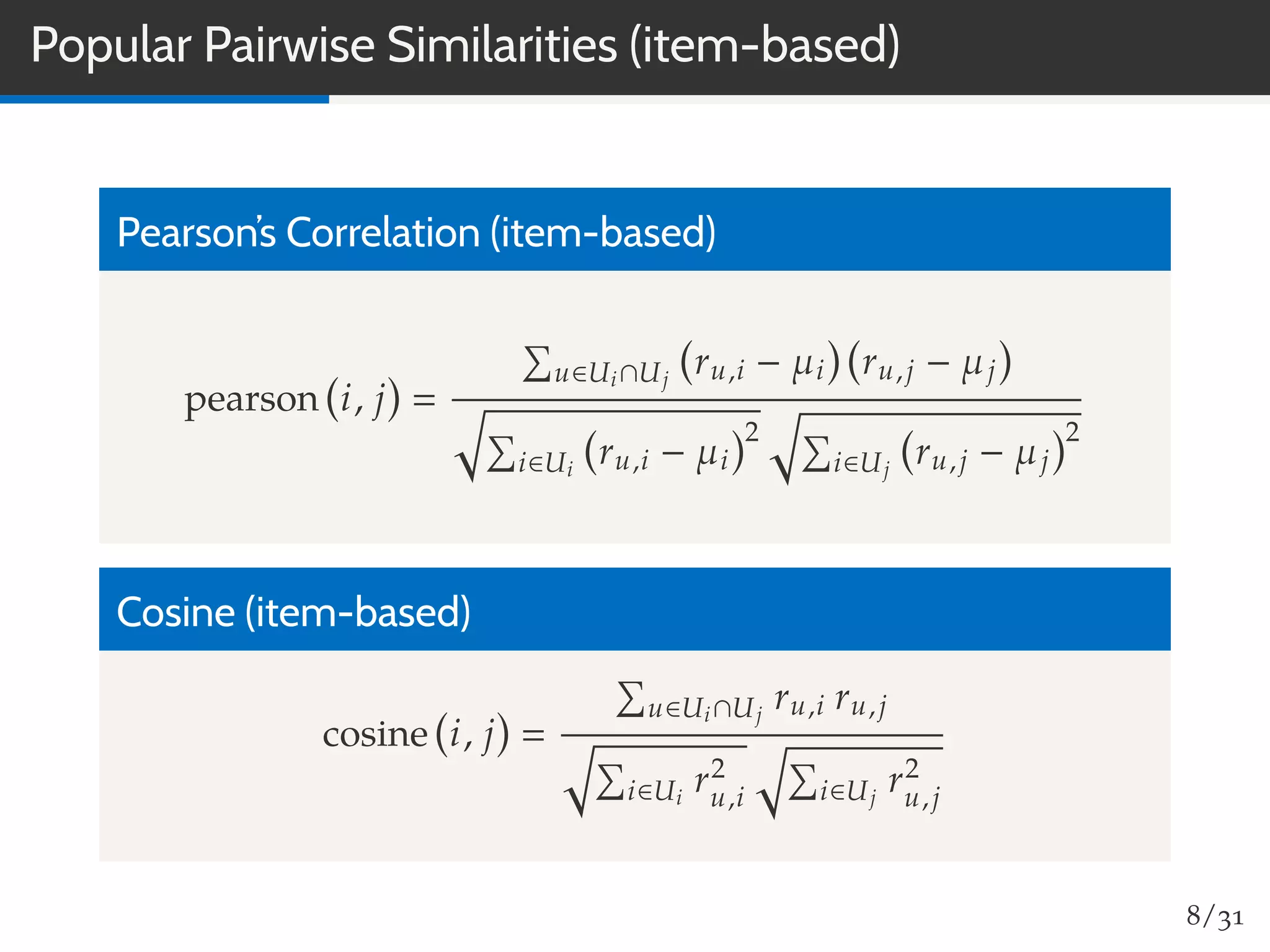

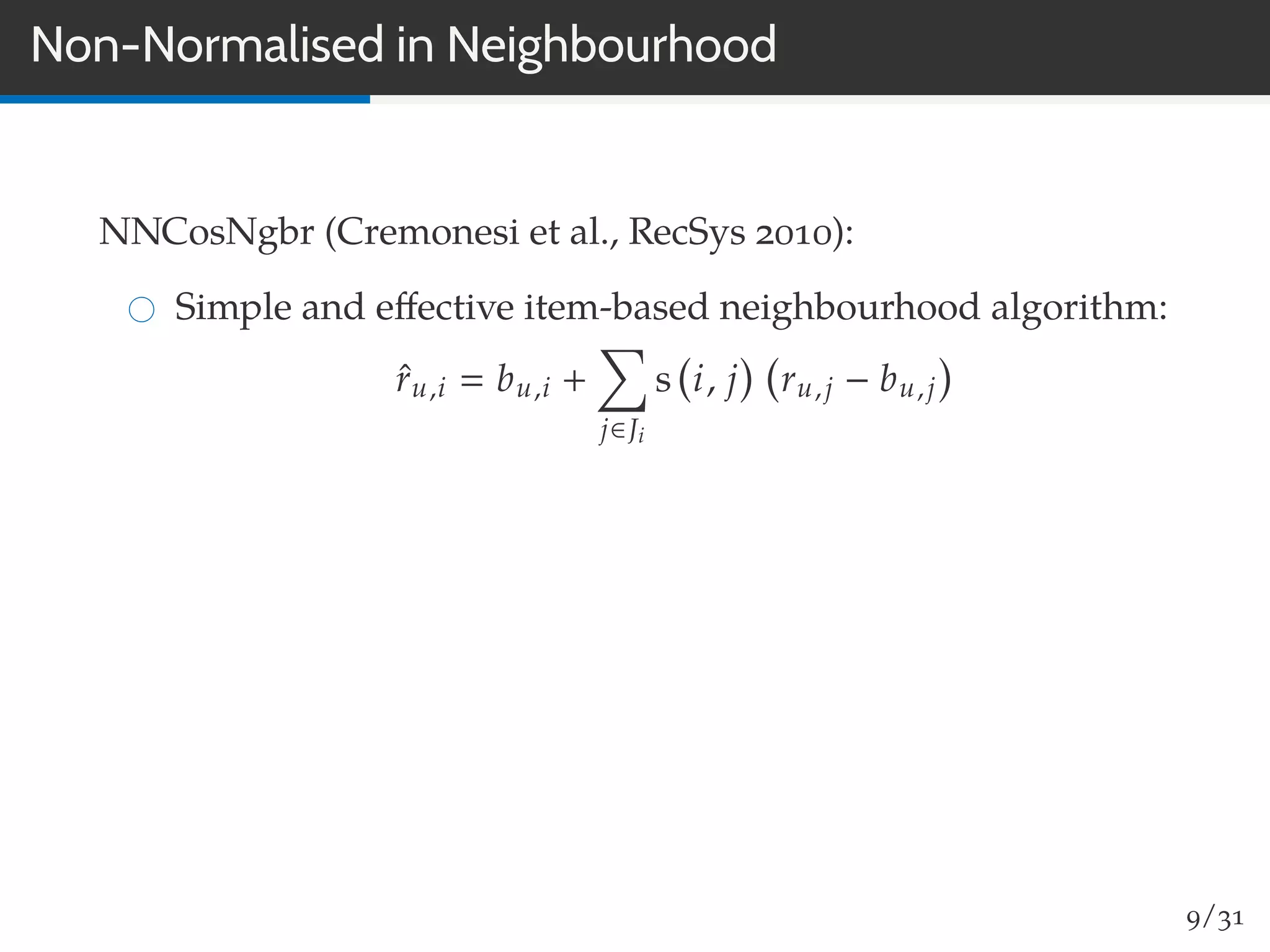

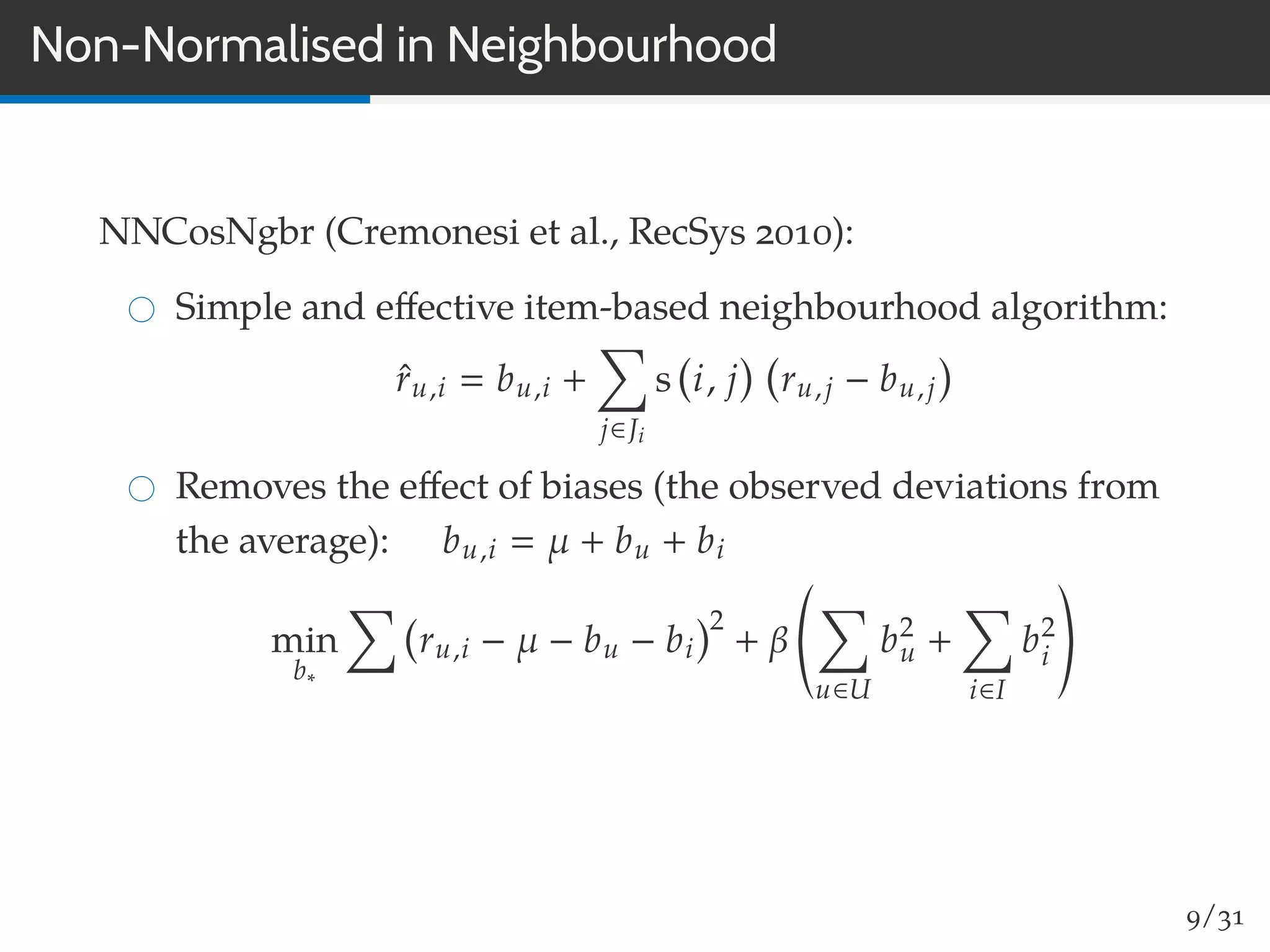

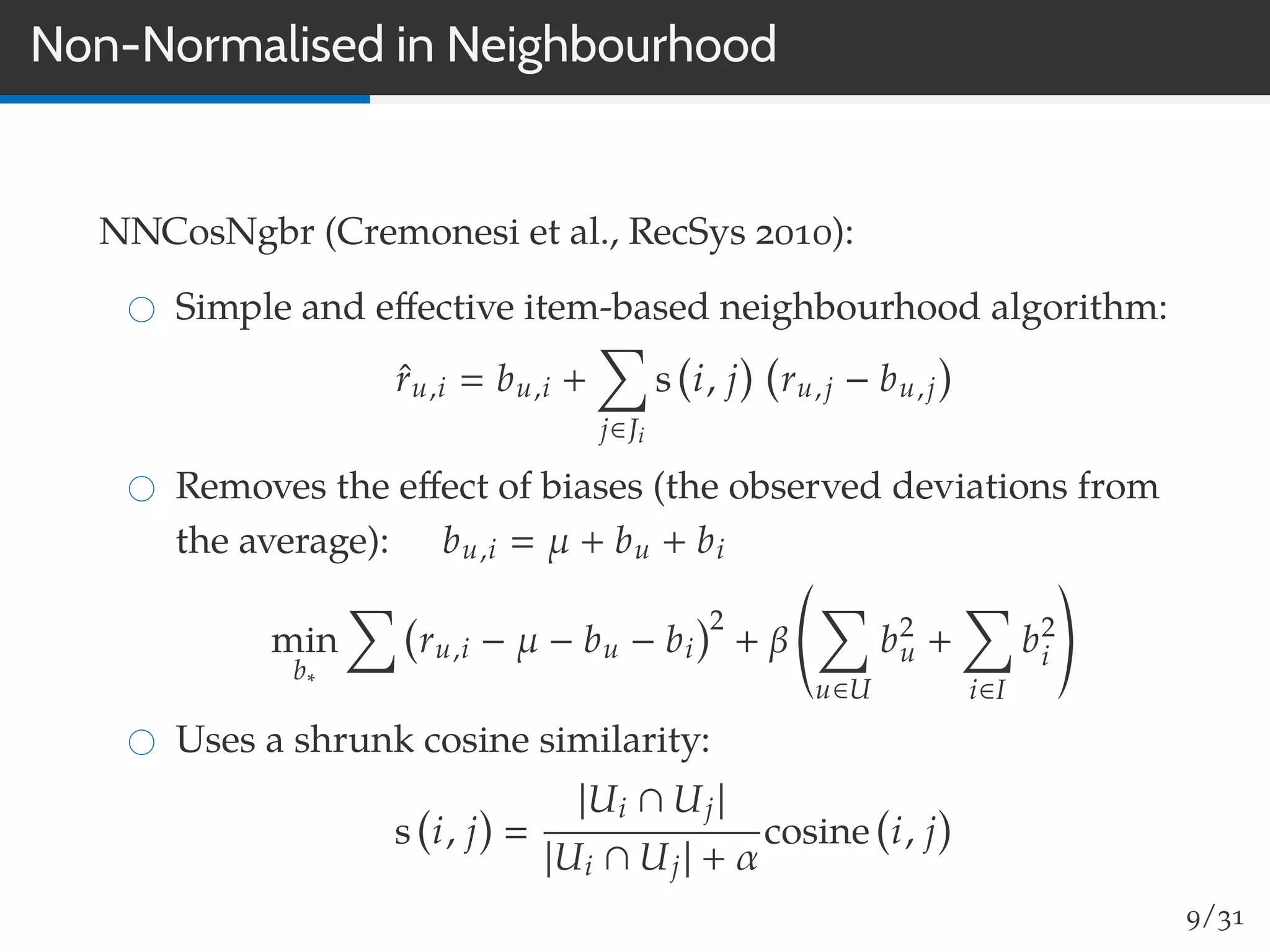

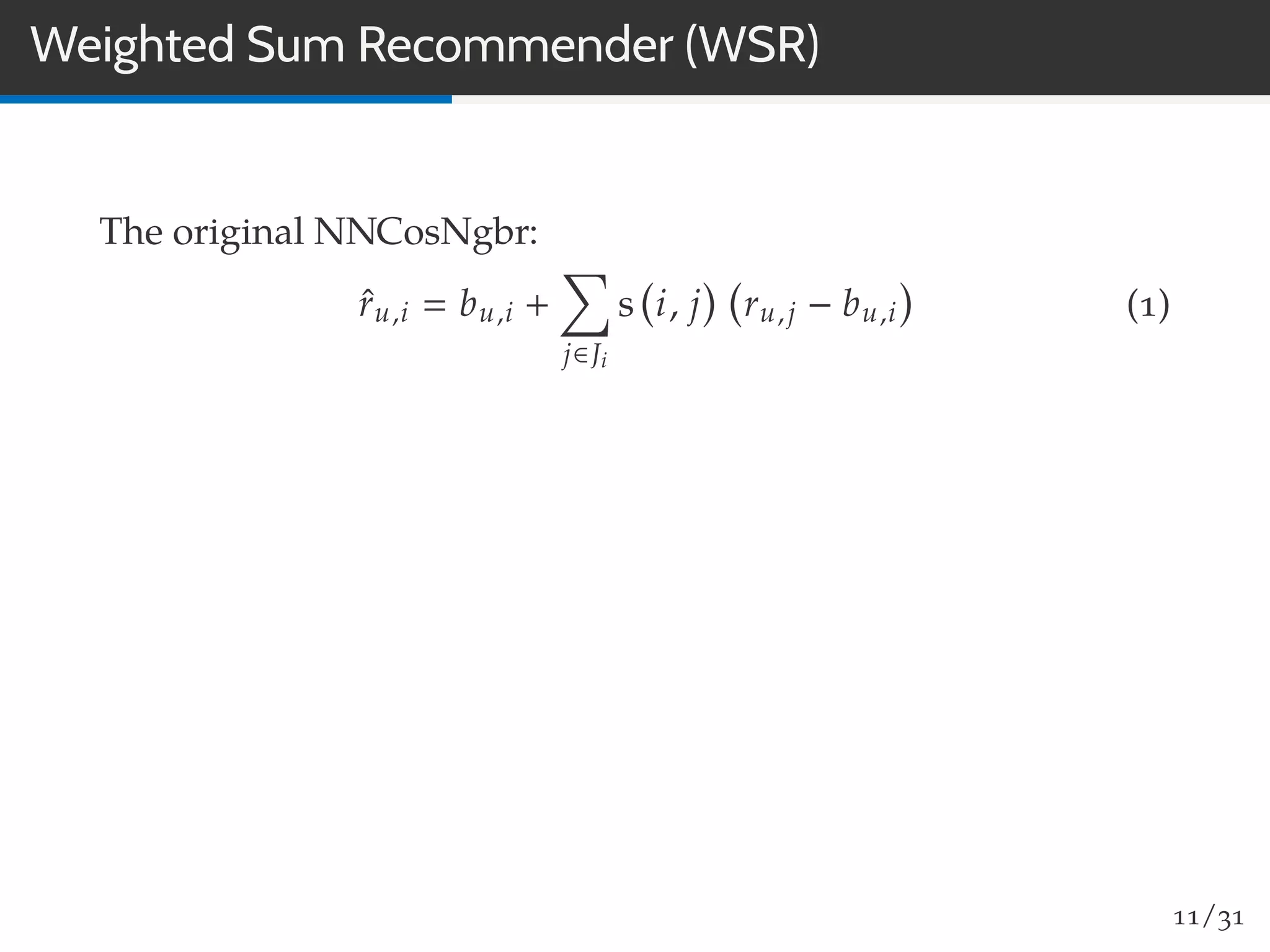

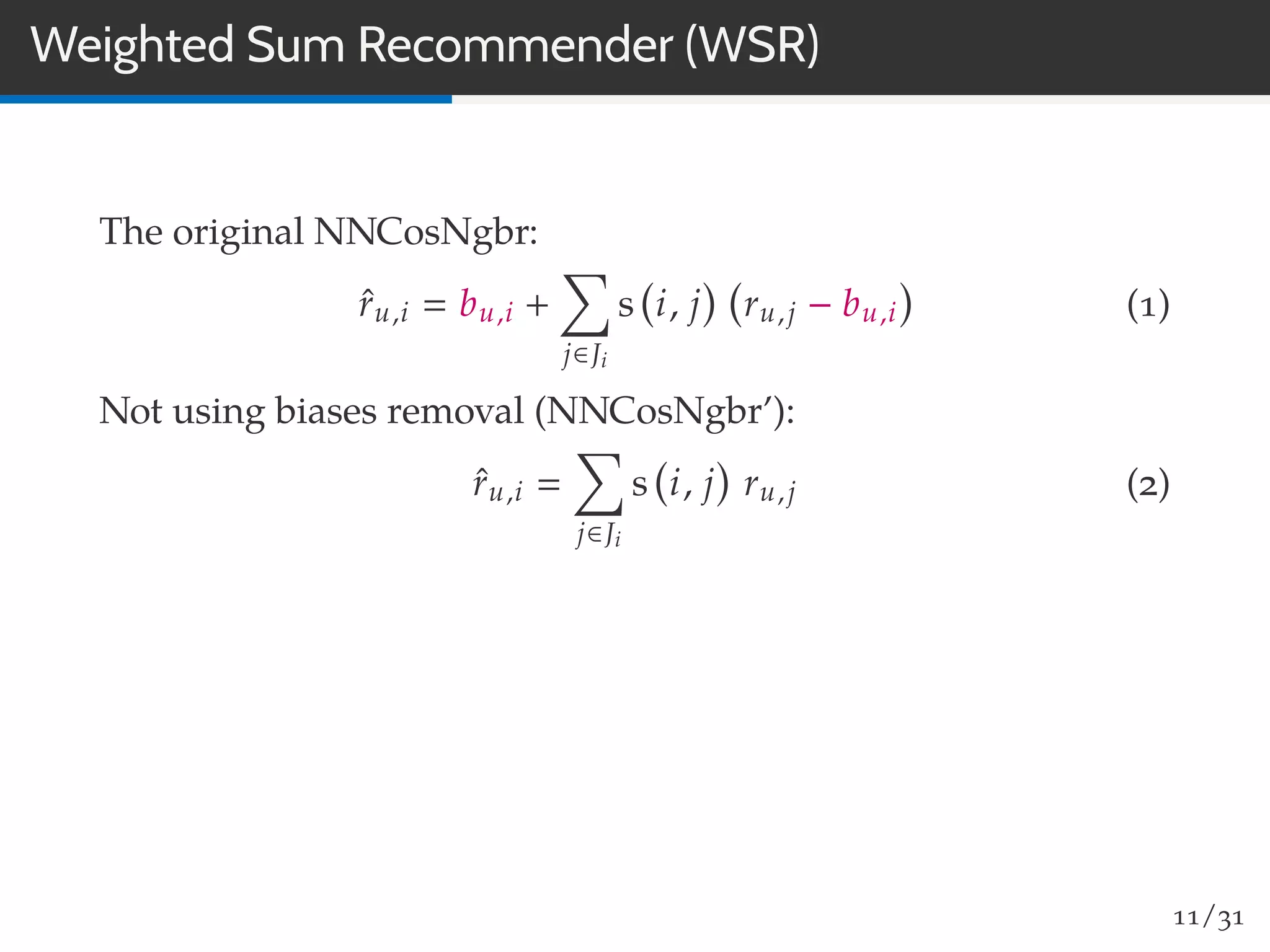

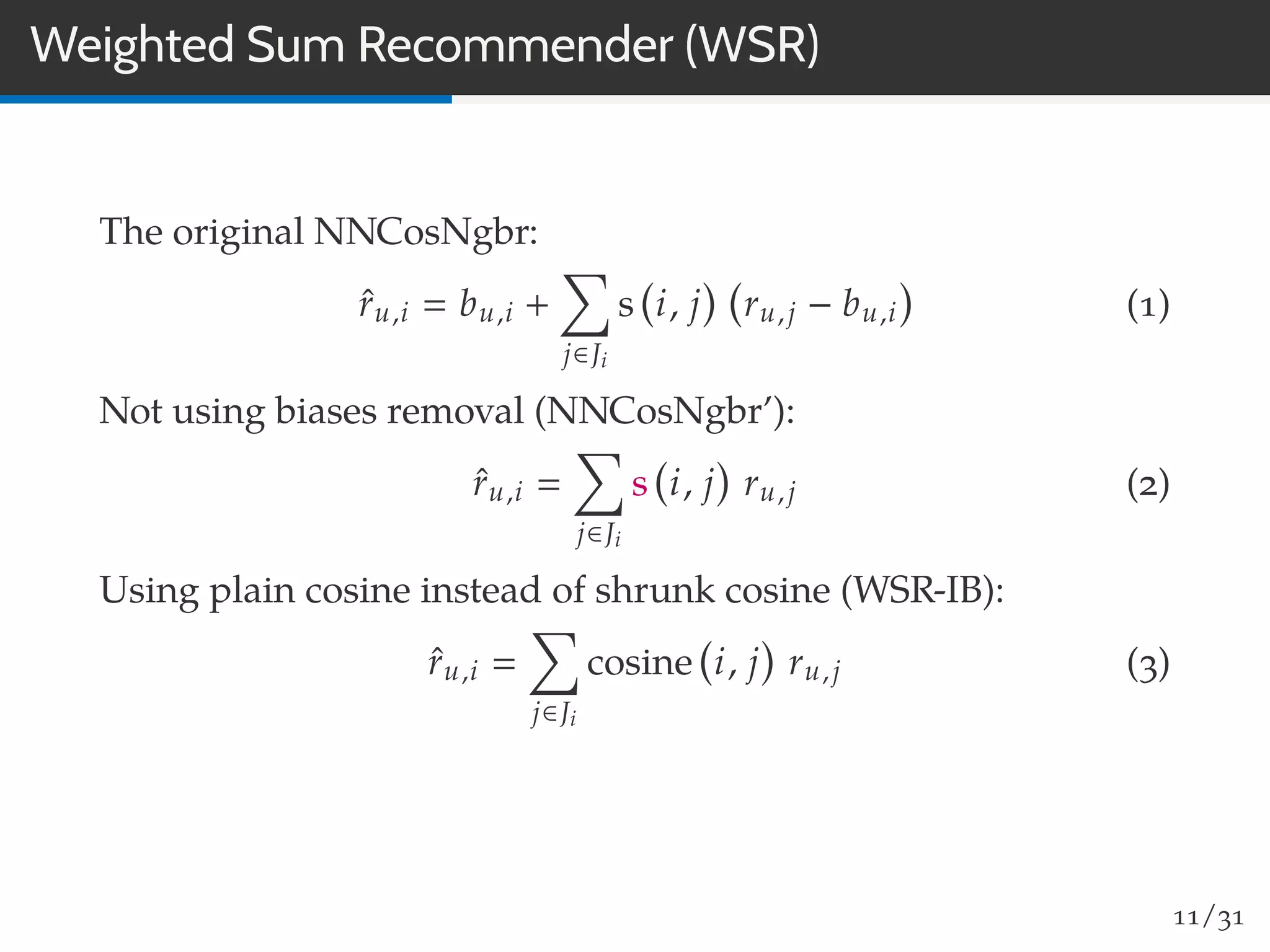

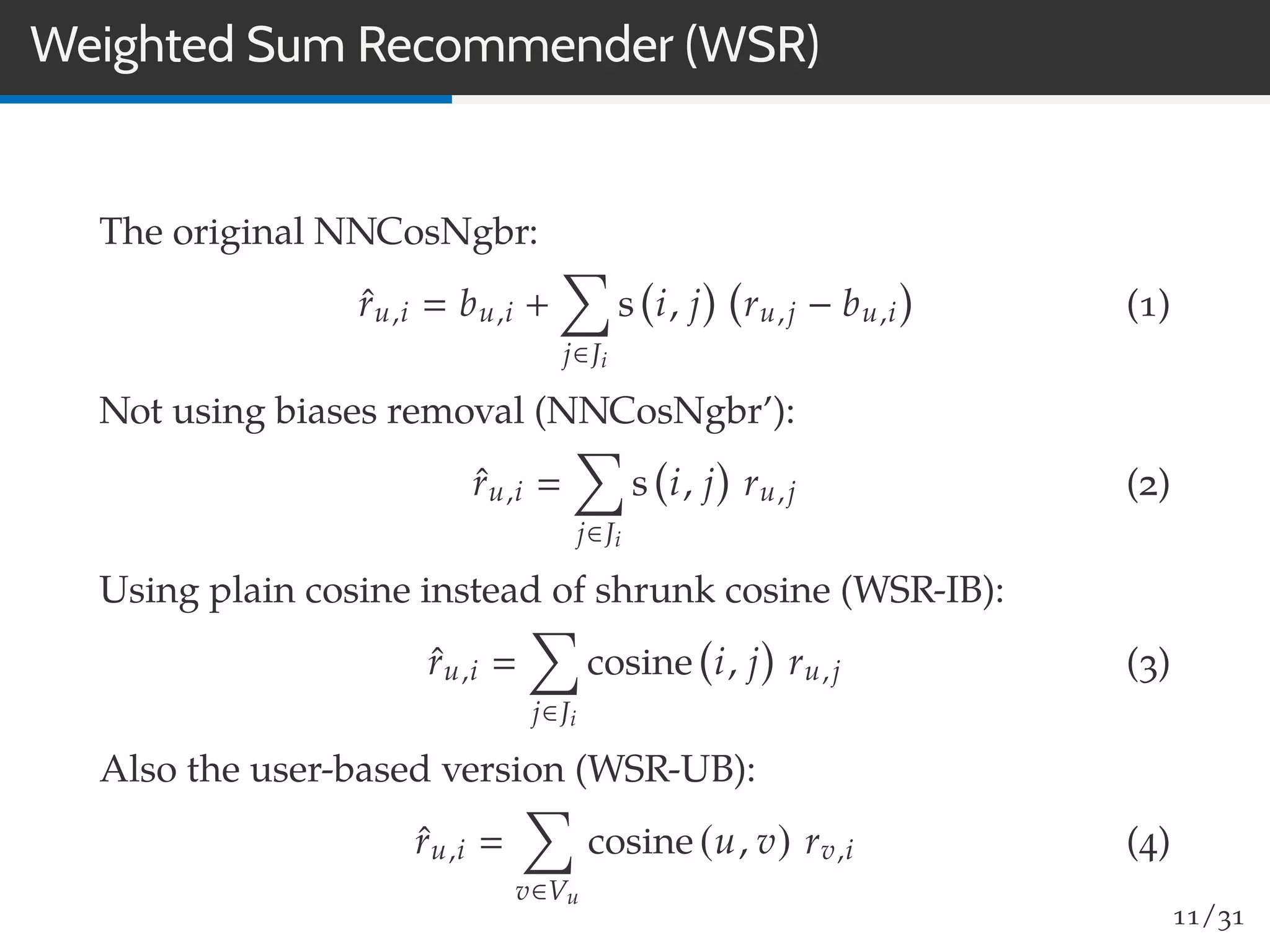

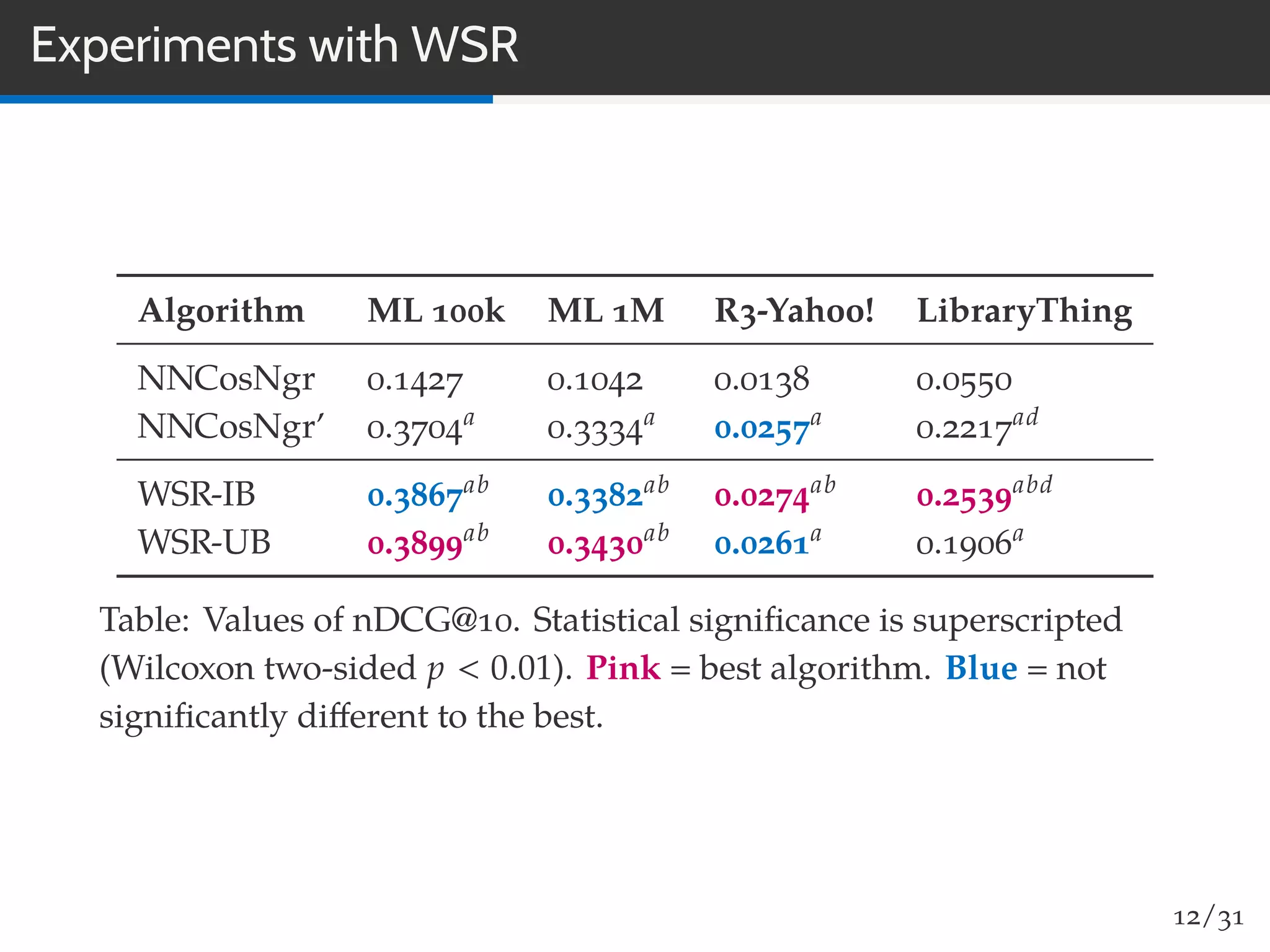

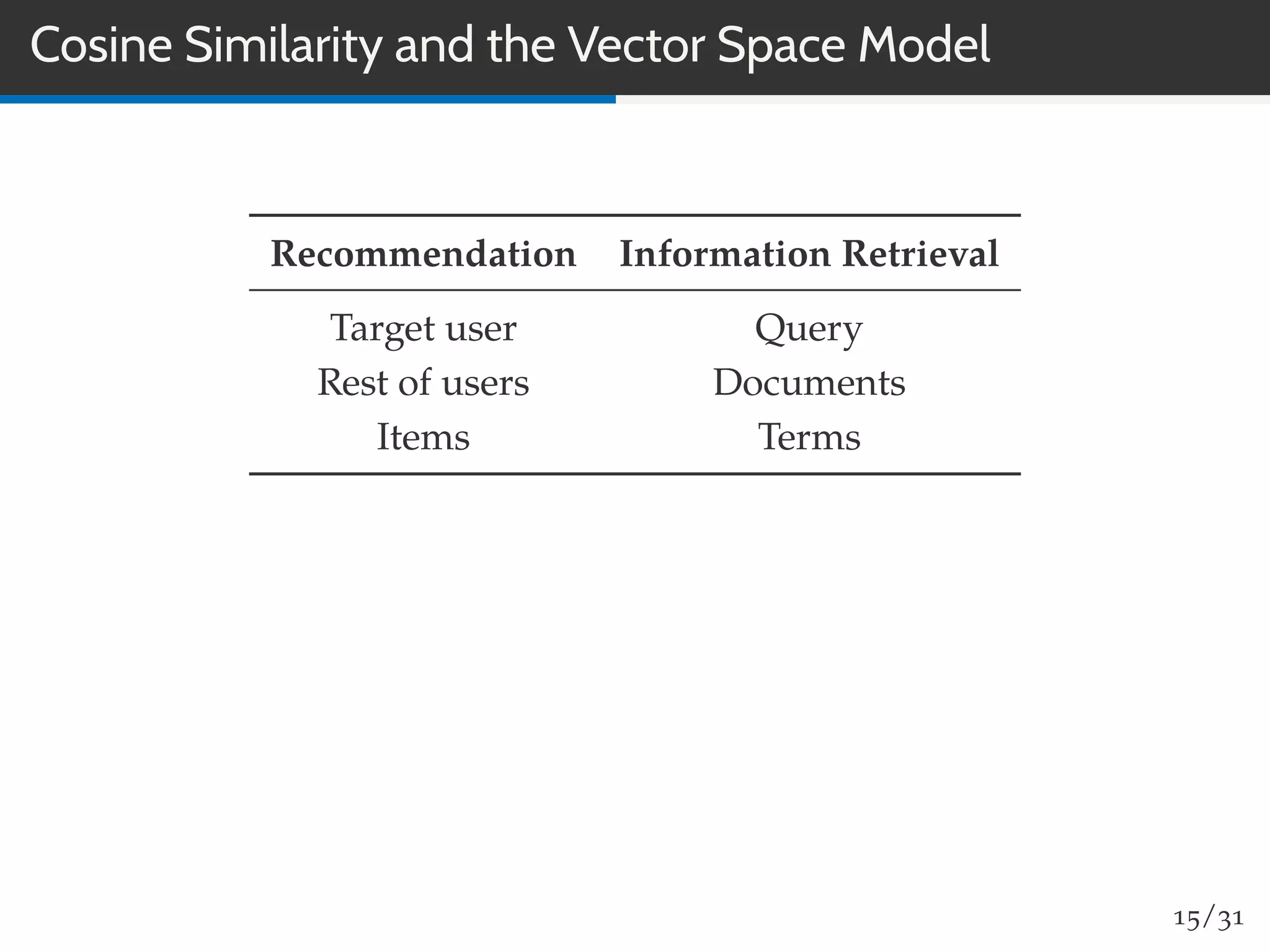

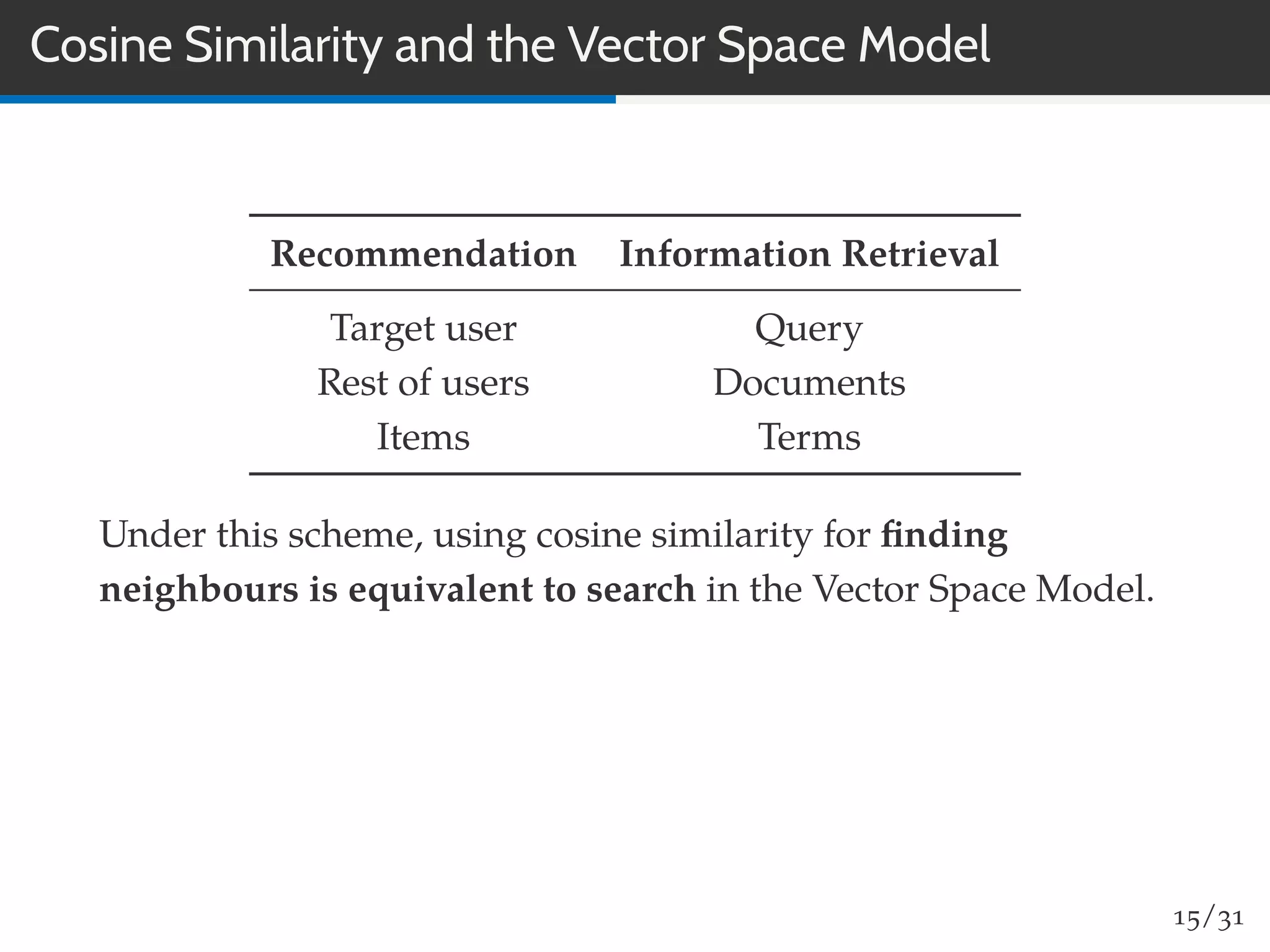

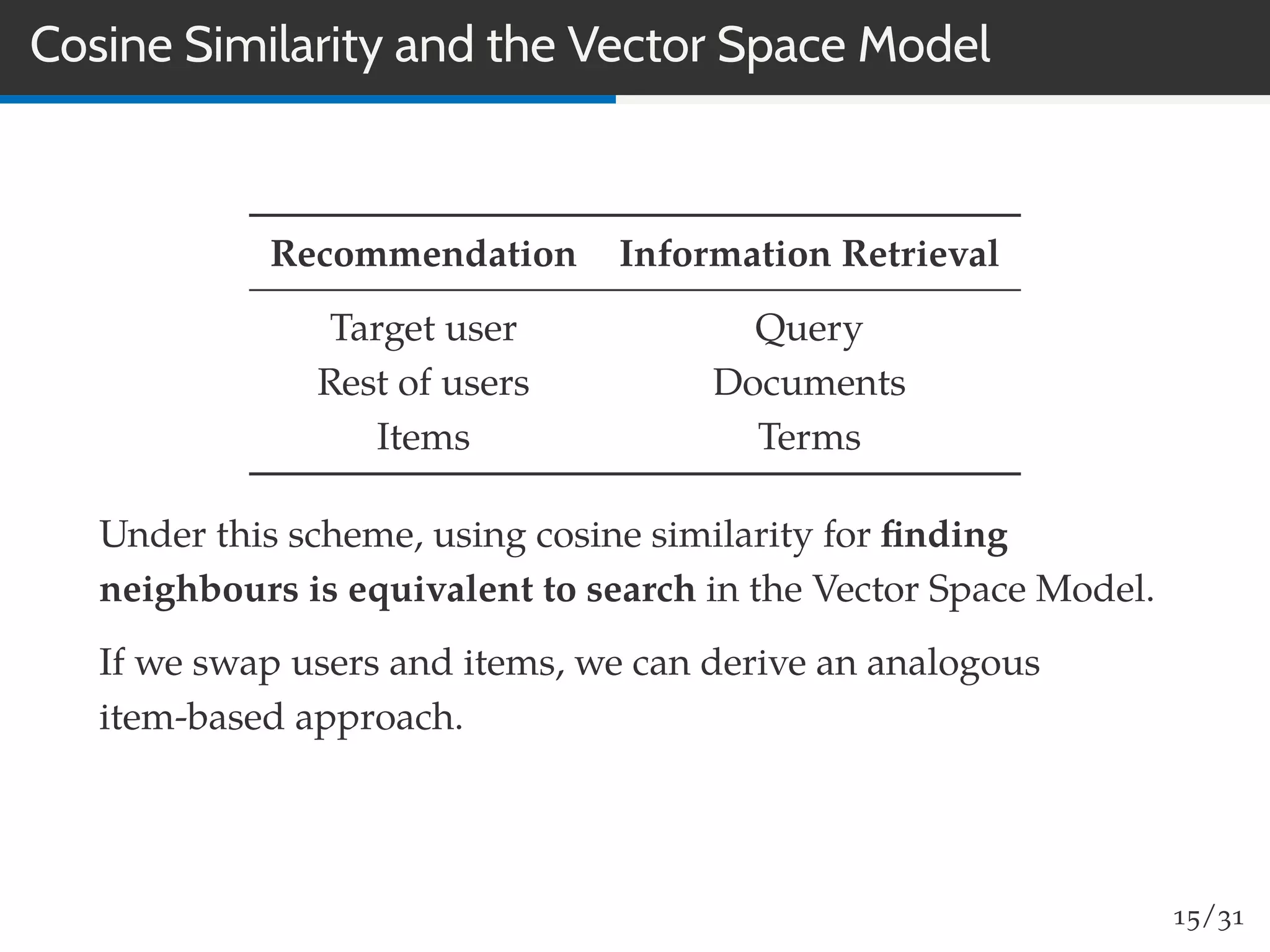

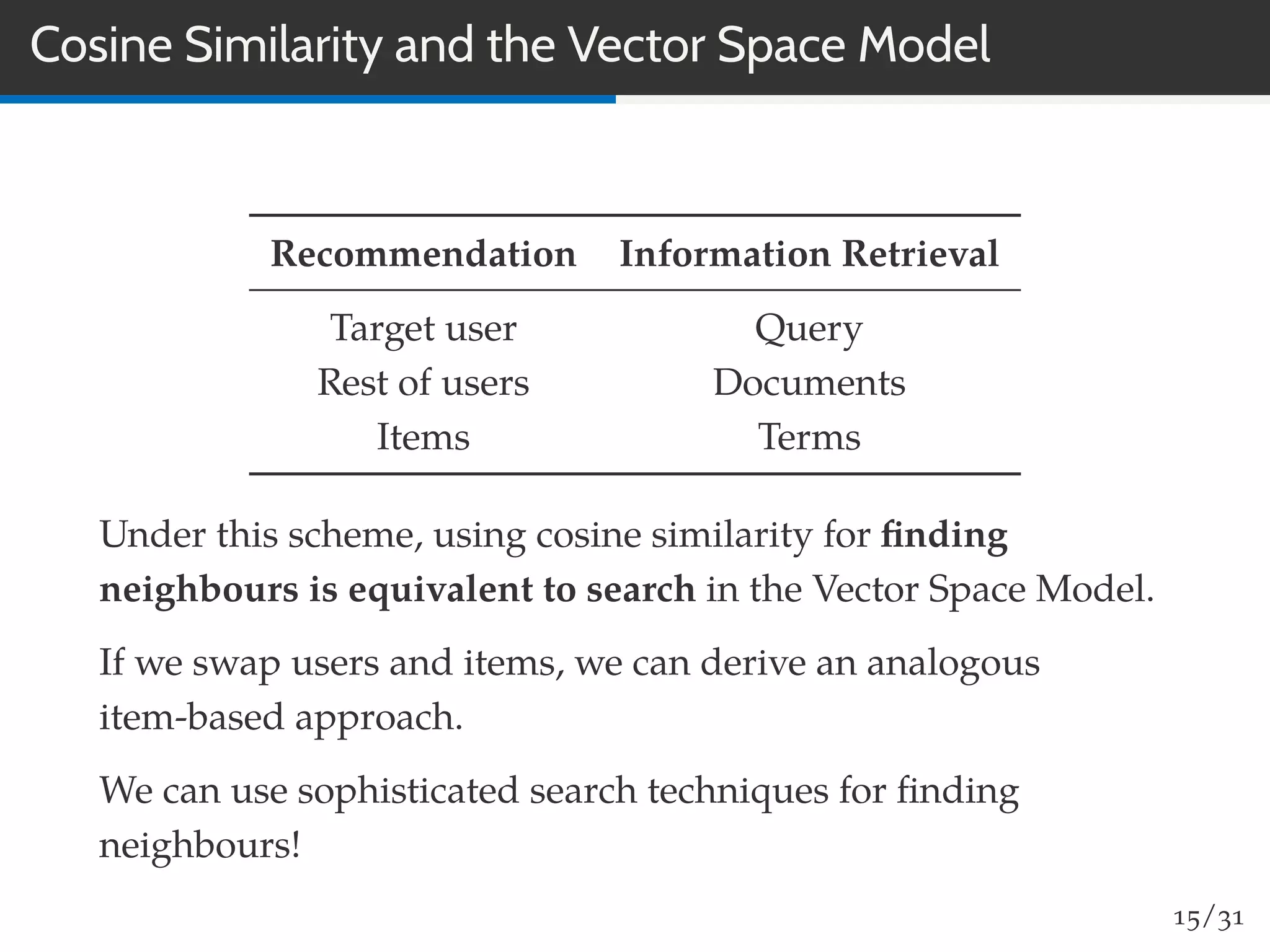

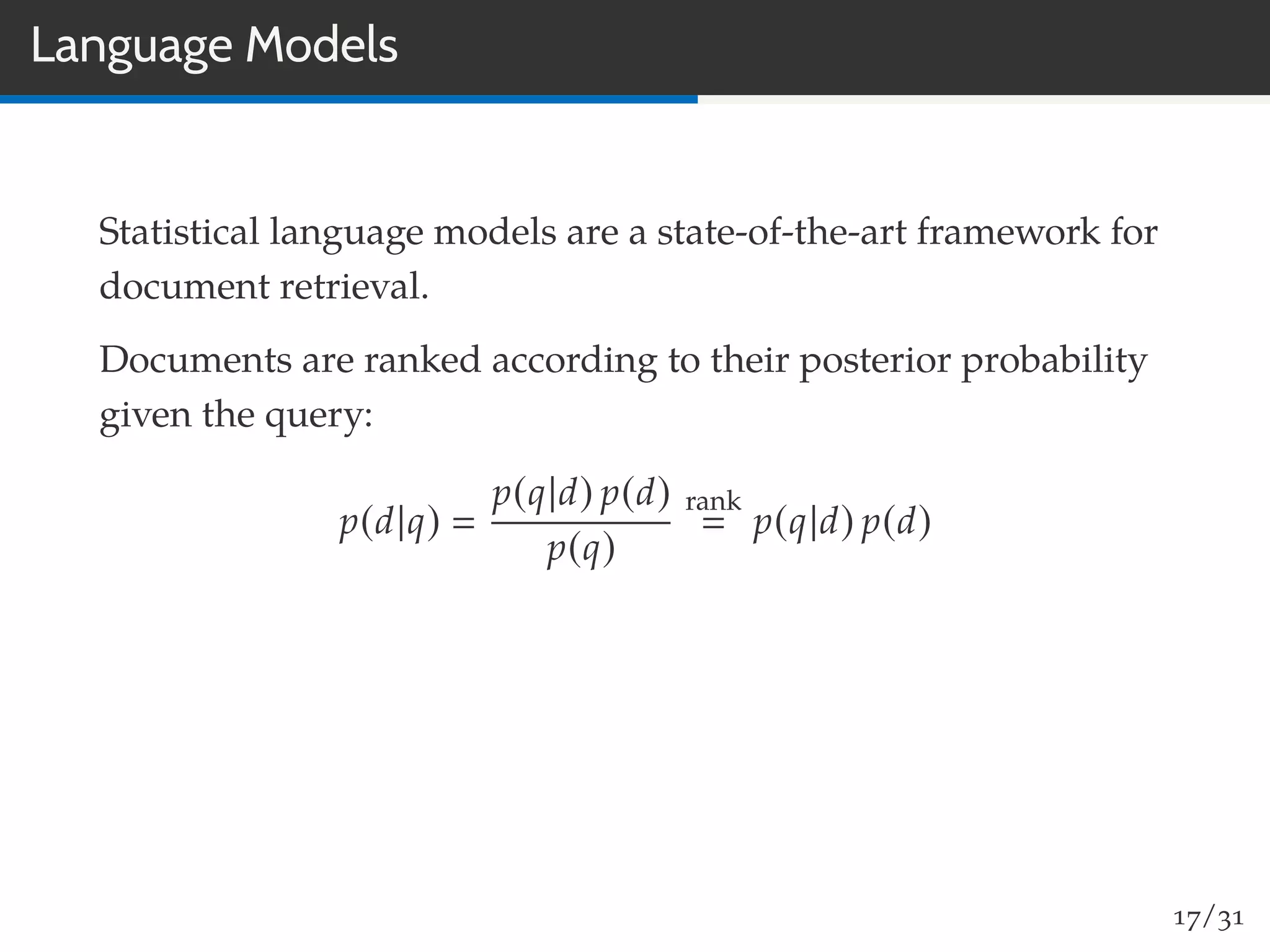

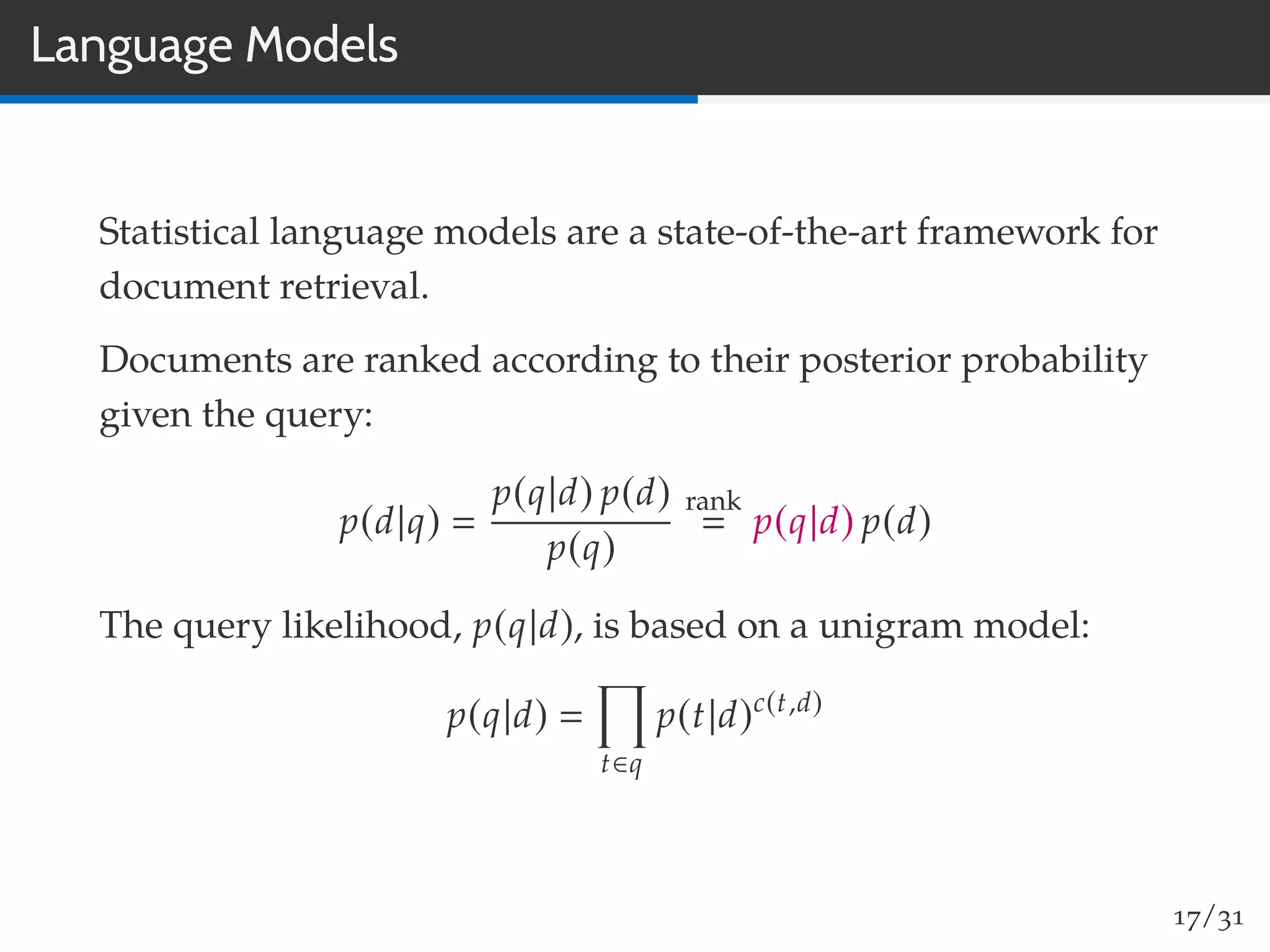

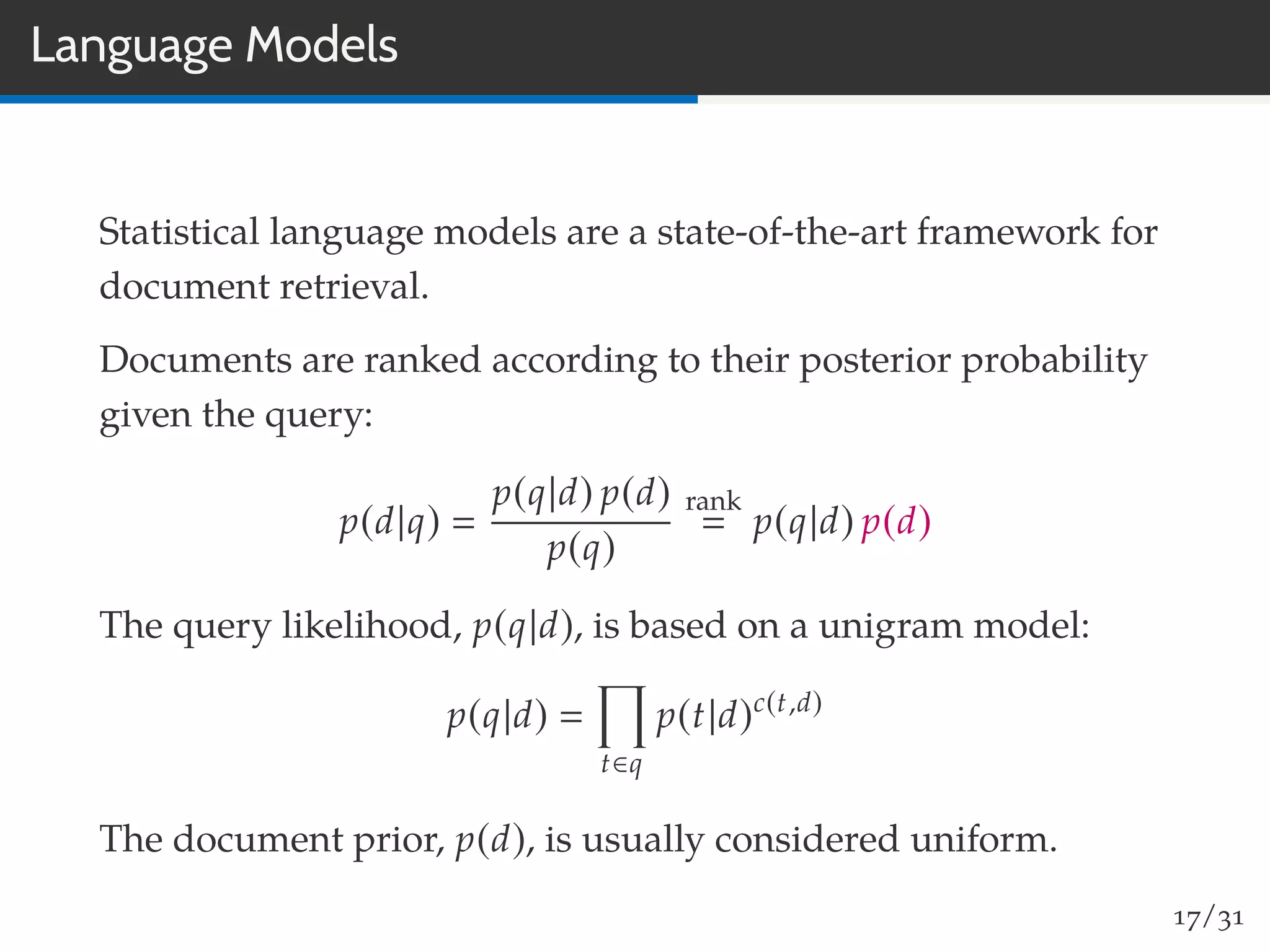

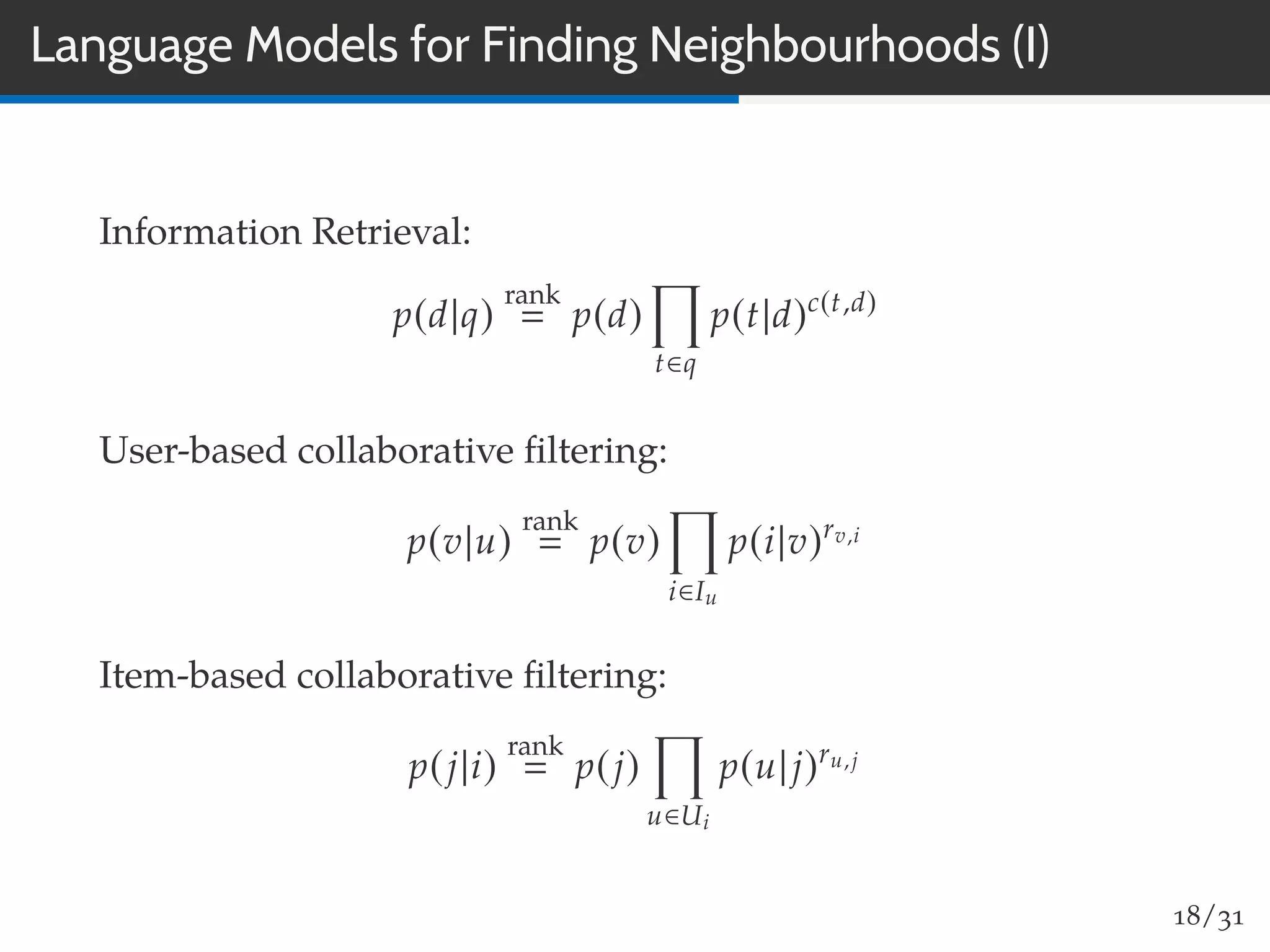

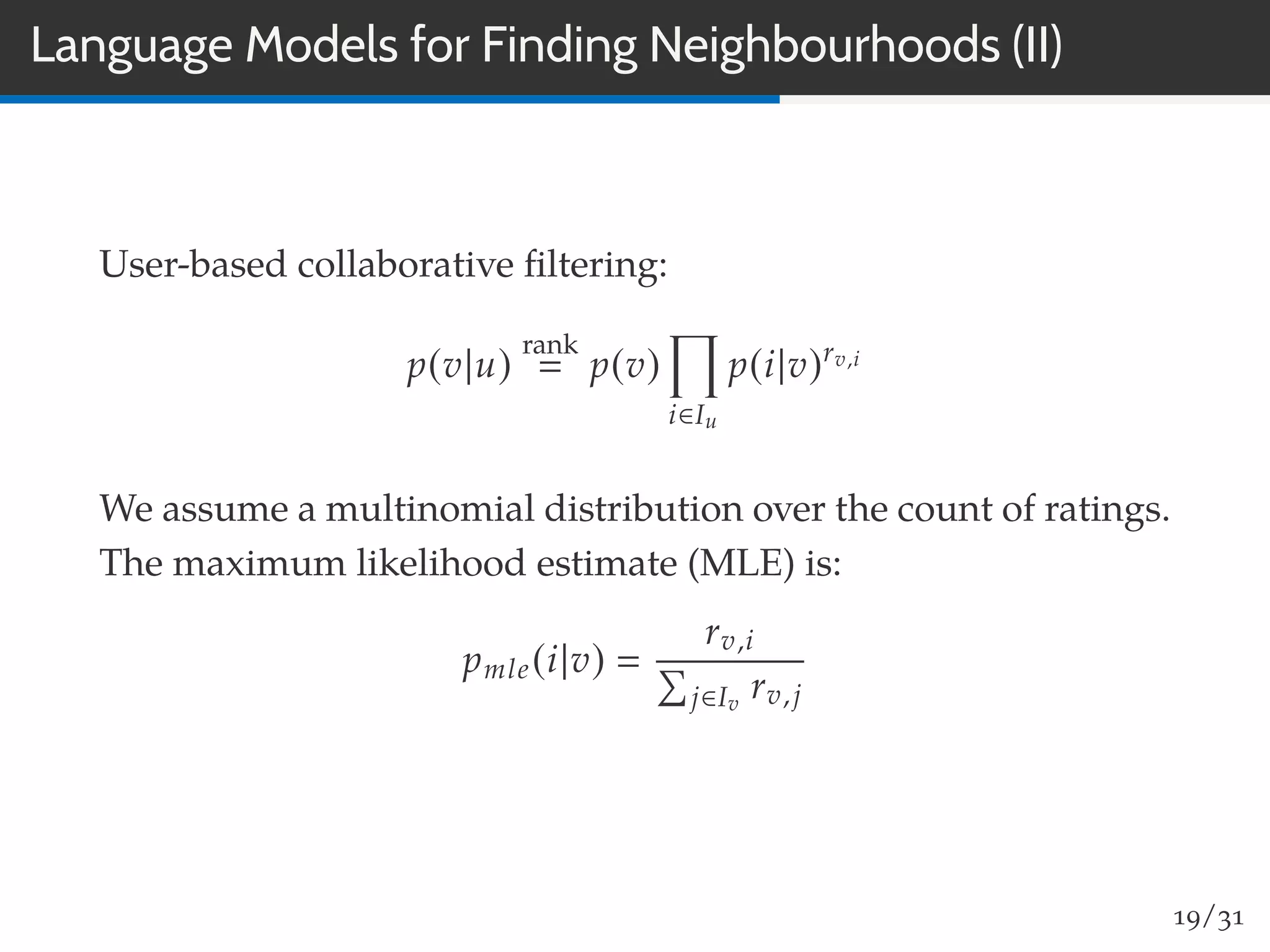

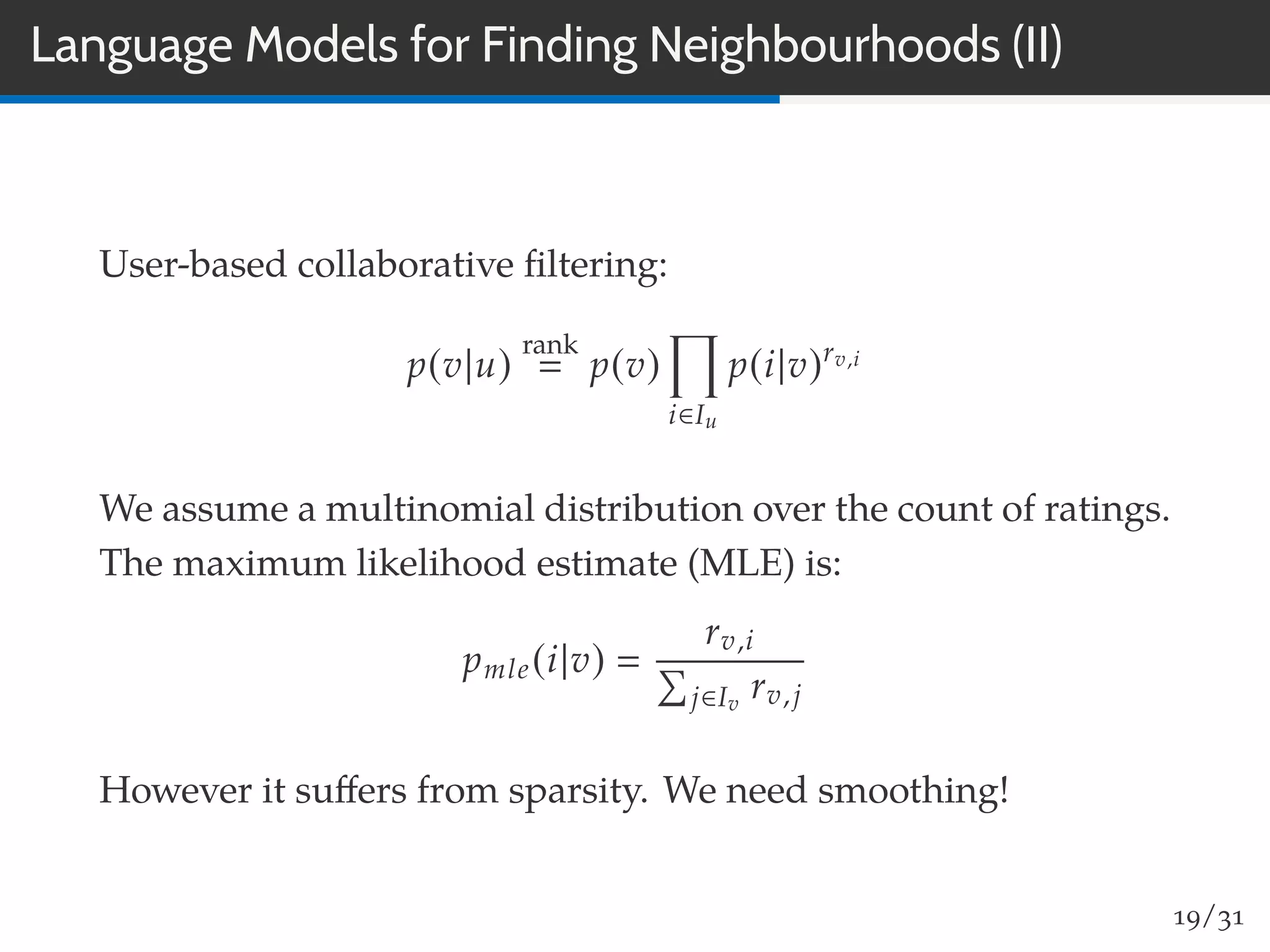

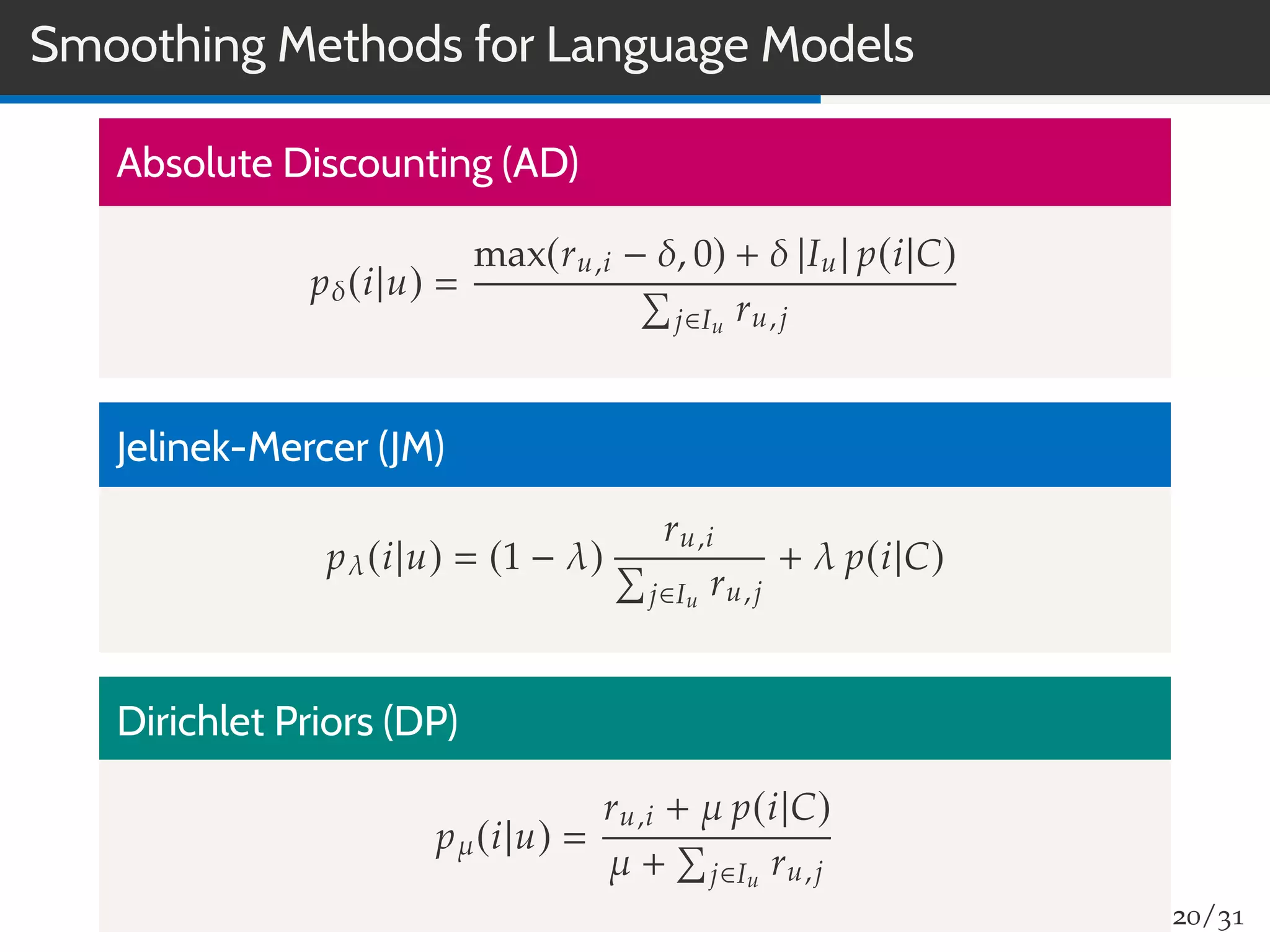

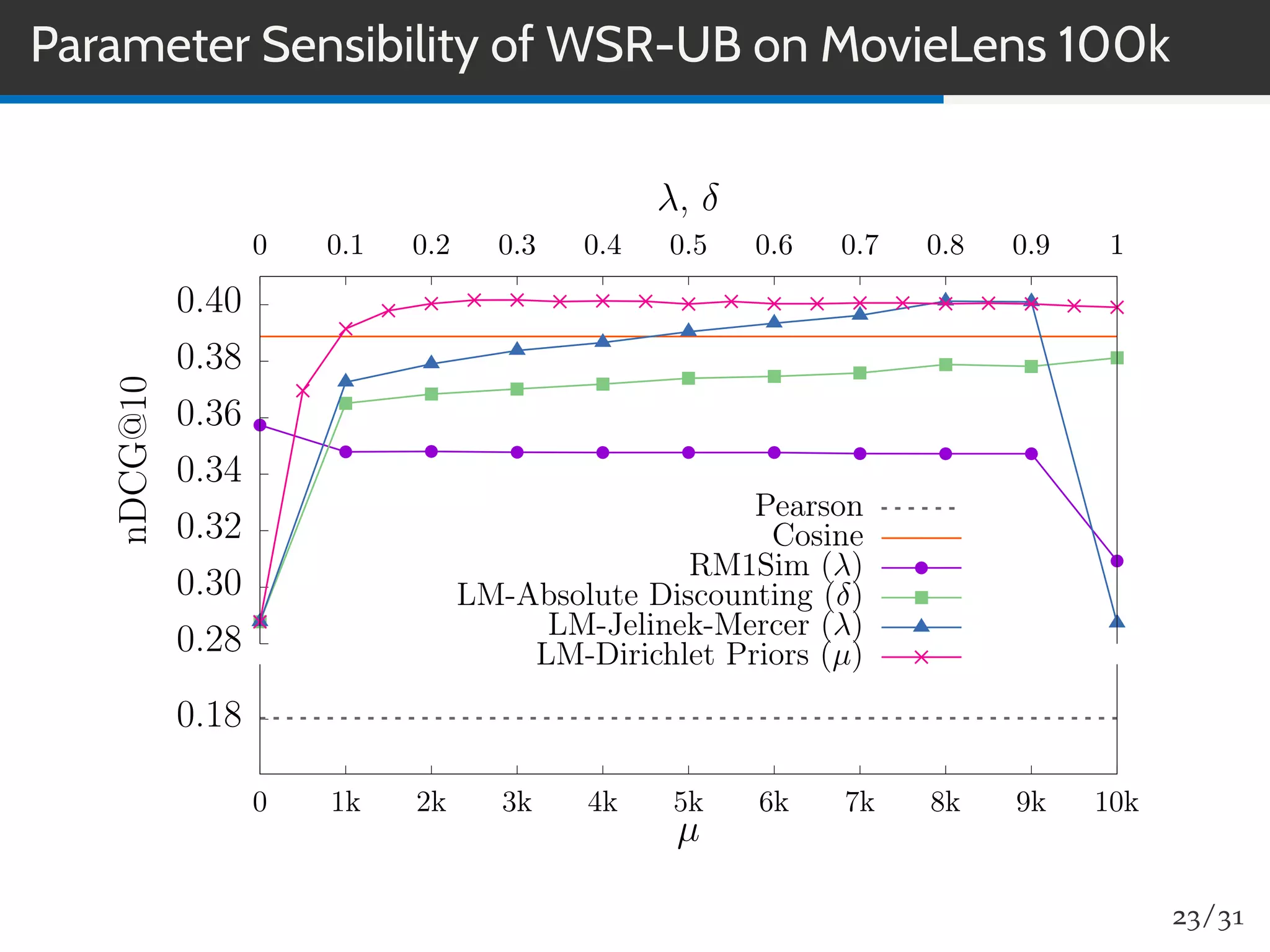

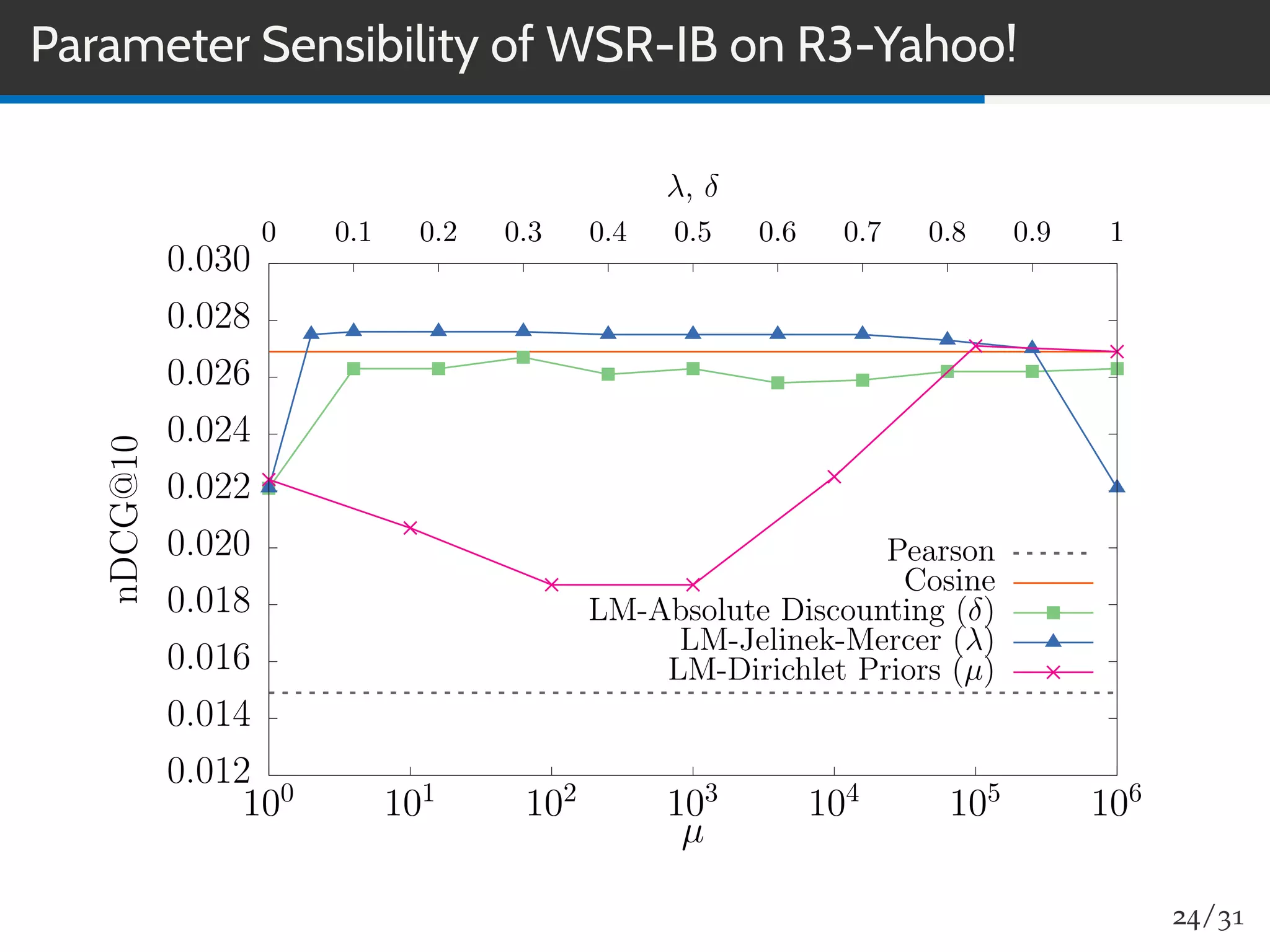

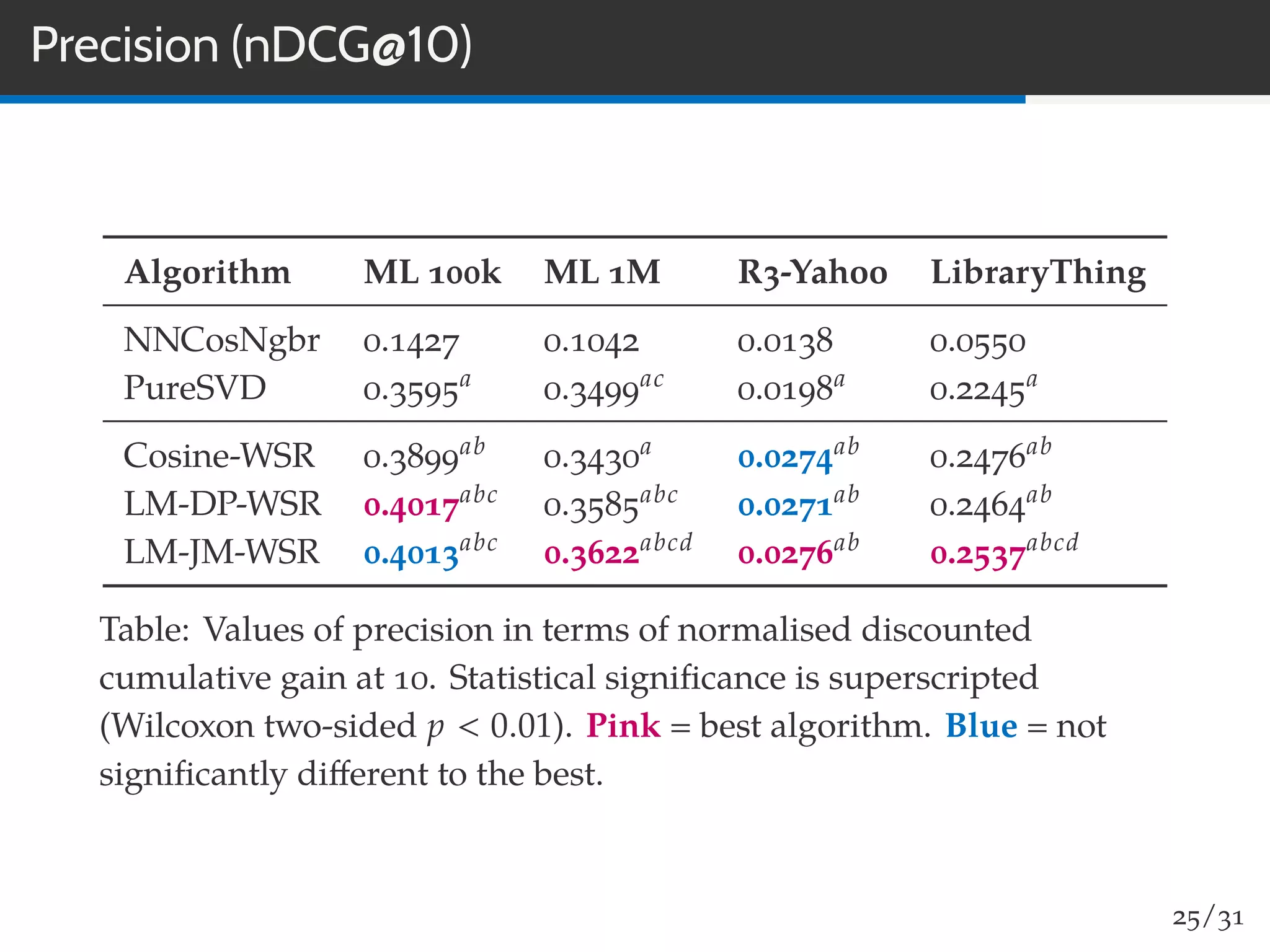

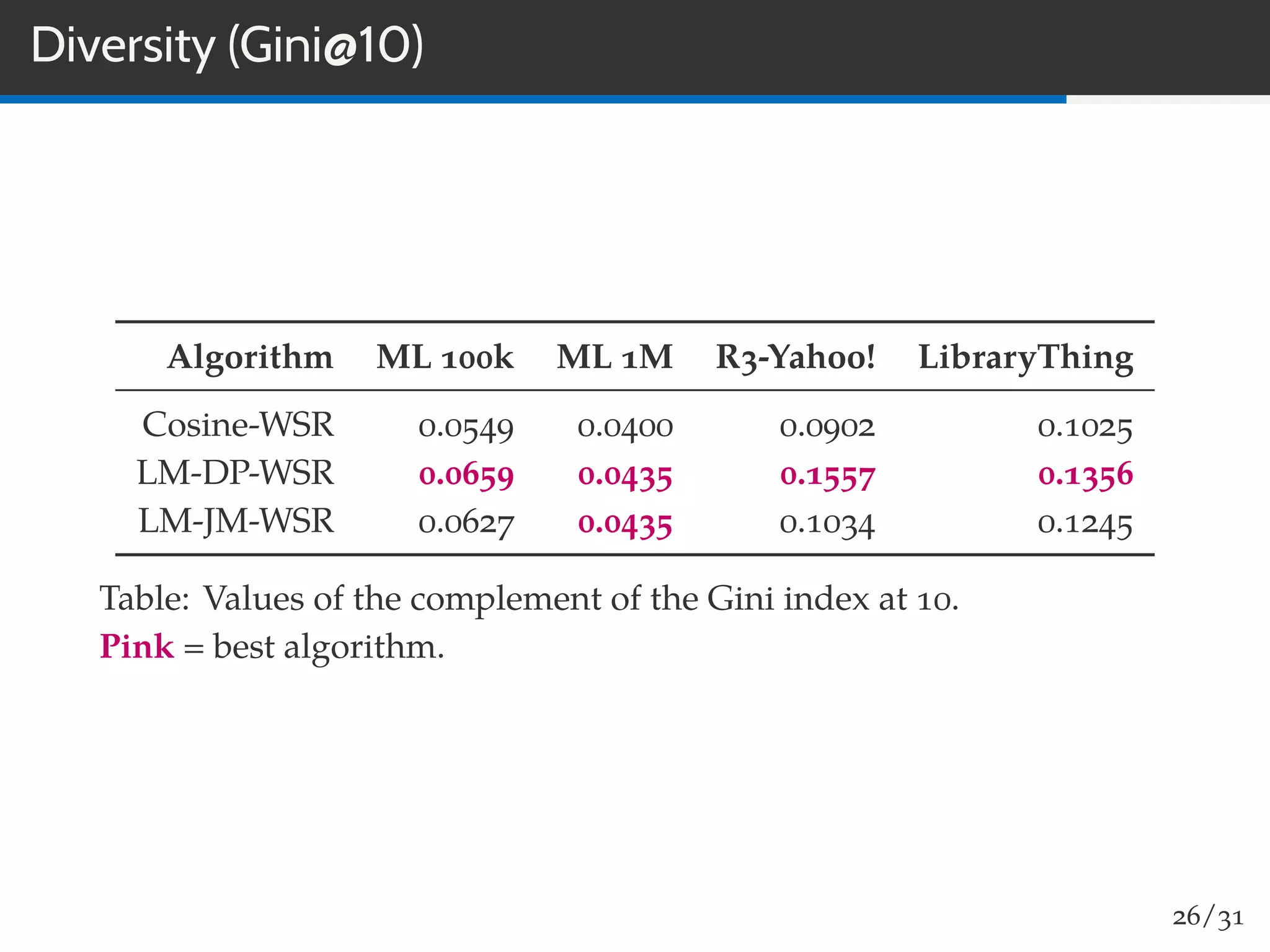

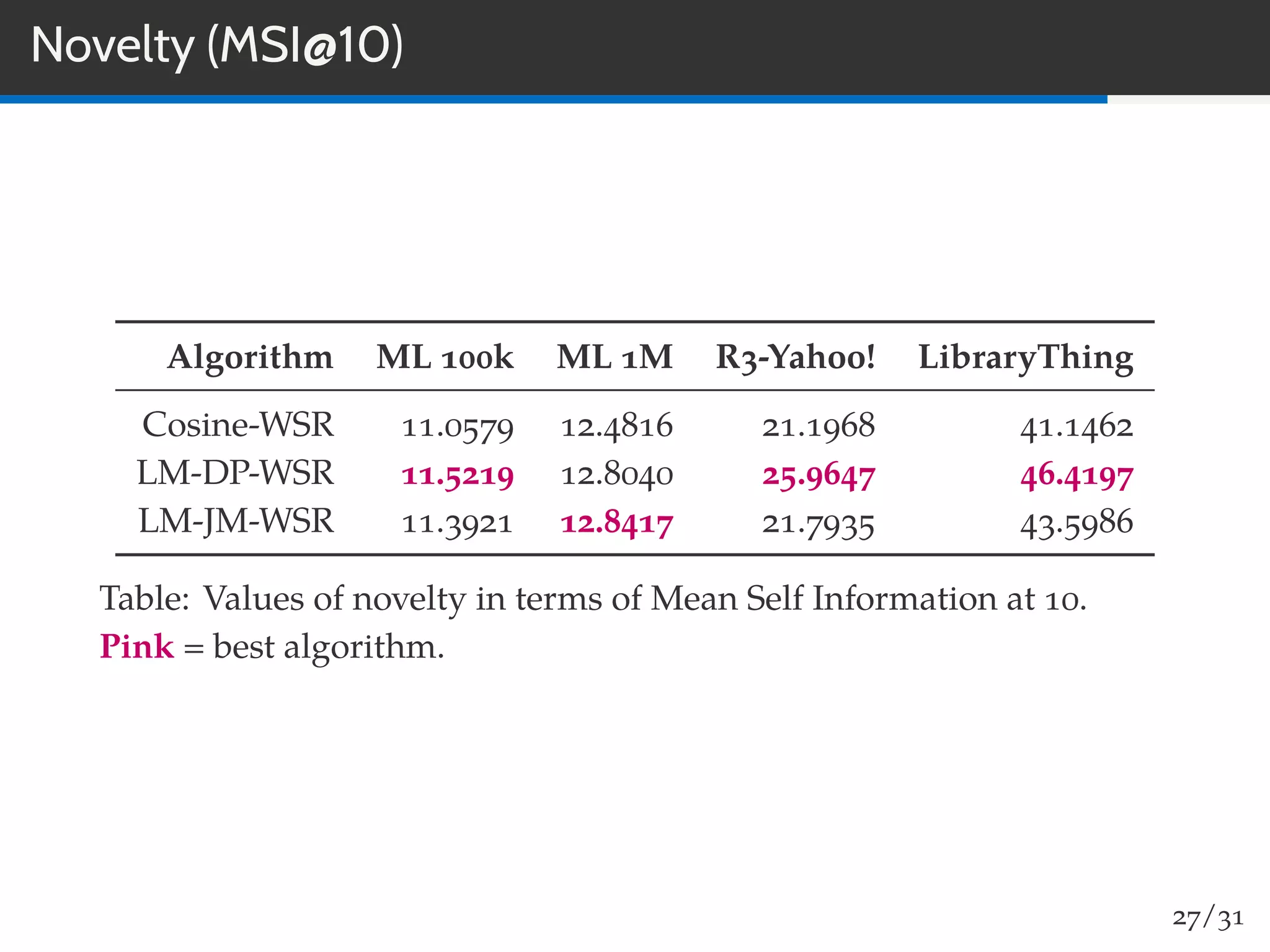

The document presents a novel approach to collaborative filtering that uses language models to improve recommendation systems. It emphasizes the role of pairwise similarities, particularly cosine similarity, in improving performance, and reports experiments comparing the method against traditional algorithms. The results indicate higher recommendation accuracy as well as improved novelty and diversity. The document closes with potential directions for future research and applications.
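To make the role of pairwise cosine similarity concrete, here is a minimal sketch of classic item-based collaborative filtering, the kind of traditional baseline the summary refers to. It is not the language-model method described in the document. It assumes explicit numeric ratings stored as sparse dictionaries. The function names (`cosine`, `item_vectors`, `recommend`) and the toy data are hypothetical and chosen for illustration only.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical data layout (assumption): user -> {item: rating}.
Ratings = Dict[str, Dict[str, float]]


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    # Only keys present in both vectors contribute to the dot product.
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def item_vectors(ratings: Ratings) -> Dict[str, Dict[str, float]]:
    """Transpose user->item ratings into item->user rating vectors."""
    items: Dict[str, Dict[str, float]] = {}
    for user, row in ratings.items():
        for item, r in row.items():
            items.setdefault(item, {})[user] = r
    return items


def recommend(ratings: Ratings, user: str, k: int = 2) -> List[Tuple[str, float]]:
    """Score each unseen item by the similarity-weighted average of the
    user's own ratings, using item-item cosine similarity as the weight."""
    items = item_vectors(ratings)
    seen = ratings[user]
    scores: Dict[str, float] = {}
    for cand, cand_vec in items.items():
        if cand in seen:
            continue  # only recommend items the user has not rated
        num = 0.0
        den = 0.0
        for item, r in seen.items():
            s = cosine(cand_vec, items[item])
            num += s * r
            den += abs(s)
        if den > 0:
            scores[cand] = num / den
    # Highest predicted rating first, truncated to the top k.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


if __name__ == "__main__":
    # Hypothetical toy ratings, not taken from the document's experiments.
    toy: Ratings = {
        "alice": {"a": 5, "b": 3},
        "bob": {"a": 4, "b": 2, "c": 4},
        "carol": {"b": 5, "c": 1, "d": 4},
    }
    print(recommend(toy, "alice"))
```

Cosine similarity is used here because it compares the direction of two rating vectors rather than their magnitude, so heavily and lightly rated items can still be judged similar. Whatever pairwise similarity the document's method computes would slot into the `cosine` call in `recommend`.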