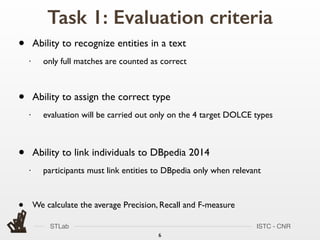

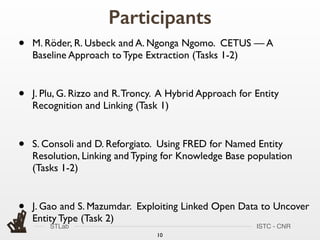

This document gives an overview of the Open Knowledge Extraction (OKE) Challenge, which aims to establish a reference framework for knowledge extraction that populates the Semantic Web with machine-understandable data. It describes the two main tasks and their evaluation criteria: (1) entity recognition, linking, and typing for knowledge base population, and (2) class induction for knowledge base enrichment. It also discusses the participants' approaches and the evaluation methodology, including the use of the GERBIL benchmarking framework.