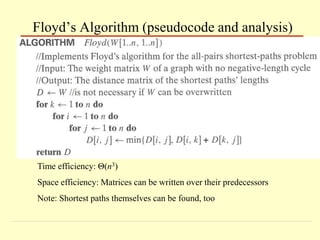

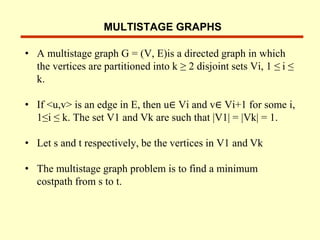

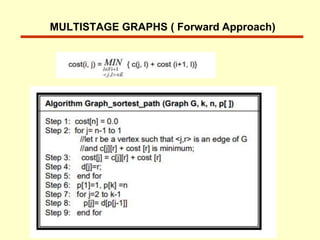

The document describes several algorithms that use dynamic programming techniques. It discusses the coin changing problem, computing binomial coefficients, Floyd's algorithm for finding all-pairs shortest paths, optimal binary search trees, the knapsack problem, and multistage graphs. For each problem, it provides the key recurrence relation used to build the dynamic programming solution in a bottom-up manner, often using a table to store intermediate results. It also analyzes the time and space complexity of the different approaches.

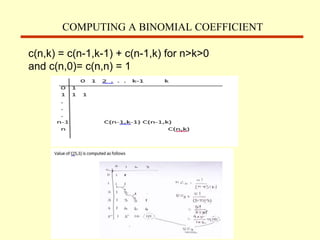

![Knapsack Problem by DP](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-13-320.jpg)

Given $n$ items of integer weights $w_1, w_2, \dots, w_n$ and values $v_1, v_2, \dots, v_n$, and a knapsack of integer capacity $W$, find the most valuable subset of the items that fits into the knapsack.

Consider the instance defined by the first $i$ items and capacity $j$ ($j \le W$). Let $V[i,j]$ be the optimal value of such an instance. Then

$$
V[i,j] =
\begin{cases}
\max\{V[i-1,j],\ v_i + V[i-1,\,j-w_i]\} & \text{if } j - w_i \ge 0 \\
V[i-1,j] & \text{if } j - w_i < 0
\end{cases}
$$

Initial conditions: $V[0,j] = 0$ and $V[i,0] = 0$.
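The knapsack recurrence can be sketched in Python as a bottom-up table fill. This is a minimal illustration, not the deck's own code: the function name `knapsack`, the traceback step, and the sample weights and values are assumptions made for this example, and weights are assumed to be positive integers.

```python
def knapsack(weights, values, capacity):
    """Bottom-up 0/1 knapsack; returns (best value, 1-based chosen items)."""
    n = len(weights)
    # V[i][j]: optimal value using the first i items with capacity j.
    # Row 0 and column 0 stay 0, matching the initial conditions.
    V = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        w, v = weights[i - 1], values[i - 1]
        for j in range(1, capacity + 1):
            V[i][j] = V[i - 1][j]              # item i left out
            if j - w >= 0:                     # item i fits
                V[i][j] = max(V[i][j], v + V[i - 1][j - w])

    # Walk the table backwards to recover one optimal subset.
    chosen, j = [], capacity
    for i in range(n, 0, -1):
        if V[i][j] != V[i - 1][j]:             # value changed, so item i was taken
            chosen.append(i)
            j -= weights[i - 1]
    chosen.reverse()
    return V[n][capacity], chosen


# Hypothetical instance (not taken from the slides).
print(knapsack([2, 1, 3, 2], [12, 10, 20, 15], 5))  # (37, [1, 2, 4])
```

The table has $(n+1)(W+1)$ entries and each is filled in constant time, so both time and space are proportional to $nW$.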

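![DP for Optimal BST Problem (cont.)](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-17-320.jpg)

After simplifications, we obtain the recurrence for $C[i,j]$:

$$
C[i,j] = \min_{i \le k \le j} \{C[i,k-1] + C[k+1,j]\} + \sum_{s=i}^{j} p_s \quad \text{for } 1 \le i \le j \le n
$$

$$
C[i,i] = p_i \quad \text{for } 1 \le i \le n
$$

Figure: the table of $C[i,j]$ values, with rows $i = 1, \dots, n+1$ and columns $j = 0, \dots, n$. Zeros lie on the diagonal $C[i,i-1]$, the probabilities $p_1, p_2, \dots, p_n$ lie on the diagonal $C[i,i]$, and the goal entry is $C[1,n]$ in the upper right corner.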

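![Optimal BST example: main table and root table](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-18-320.jpg)

The left table is filled using the recurrence

$$
C[i,j] = \min_{i \le k \le j} \{C[i,k-1] + C[k+1,j]\} + \sum_{s=i}^{j} p_s, \qquad C[i,i] = p_i
$$

The right table saves the tree roots, which are the $k$'s that give the minima.

Example:

| key | A | B | C | D |
|---|---|---|---|---|
| probability | 0.1 | 0.2 | 0.4 | 0.3 |

Main table $C[i,j]$ (rows $i$, columns $j$):

| i \ j | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 1 | 0 | 0.1 | 0.4 | 1.1 | 1.7 |
| 2 |  | 0 | 0.2 | 0.8 | 1.4 |
| 3 |  |  | 0 | 0.4 | 1.0 |
| 4 |  |  |  | 0 | 0.3 |
| 5 |  |  |  |  | 0 |

Root table $R[i,j]$ (rows $i$, columns $j$):

| i \ j | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 1 |  | 1 | 2 | 3 | 3 |
| 2 |  |  | 2 | 3 | 3 |
| 3 |  |  |  | 3 | 3 |
| 4 |  |  |  |  | 4 |
| 5 |  |  |  |  |  |

Resulting tree: C is the root, B is its left child (with A as the left child of B), and D is its right child.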
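The table fill for this example can be sketched in Python as follows. It is a minimal illustration under a few assumptions: the function name `optimal_bst` is hypothetical, the tables use the same 1-based indices as the slides (row 0 is unused), and ties between candidate roots are broken toward the smaller $k$.

```python
def optimal_bst(p):
    """Fill the cost table C and root table R for key probabilities p."""
    n = len(p)
    # Rows 1..n+1 and columns 0..n; entries C[i][i-1] stay 0.
    C = [[0.0] * (n + 1) for _ in range(n + 2)]
    R = [[0] * (n + 1) for _ in range(n + 2)]
    for i in range(1, n + 1):
        C[i][i] = p[i - 1]
        R[i][i] = i
    for d in range(1, n):                  # d = j - i, one diagonal at a time
        for i in range(1, n - d + 1):
            j = i + d
            best, best_k = float("inf"), i
            for k in range(i, j + 1):      # try every key as the root
                cost = C[i][k - 1] + C[k + 1][j]
                if cost < best:
                    best, best_k = cost, k
            C[i][j] = best + sum(p[i - 1:j])   # add p_i + ... + p_j
            R[i][j] = best_k
    return C, R


C, R = optimal_bst([0.1, 0.2, 0.4, 0.3])
print(round(C[1][4], 2), R[1][4])  # 1.7 3
```

The goal entry `C[1][4]` is the average number of comparisons in a successful search, and `R[1][4] = 3` says that the third key, C, is the root of the optimal tree.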

![Analysis DP for Optimal BST Problem](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-20-320.jpg)

Time efficiency: $\Theta(n^3)$, but it can be reduced to $\Theta(n^2)$ by taking advantage of the monotonicity of entries in the root table, i.e., $R[i,j]$ is always in the range between $R[i,j-1]$ and $R[i+1,j]$.

Space efficiency: $\Theta(n^2)$.

The method can be expanded to include unsuccessful searches.
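One way the monotonicity property might be used is to restrict the candidate roots $k$ to the range from $R[i,j-1]$ to $R[i+1,j]$. The sketch below assumes that approach; the function name `optimal_bst_restricted` and the prefix-sum helper are hypothetical, and the code relies on ties being broken toward the smaller $k$ and on rounding errors not disturbing the comparisons.

```python
def optimal_bst_restricted(p):
    """Optimal BST tables, scanning only roots in R[i][j-1]..R[i+1][j]."""
    n = len(p)
    prefix = [0.0]                        # prefix[t] = p_1 + ... + p_t
    for x in p:
        prefix.append(prefix[-1] + x)
    C = [[0.0] * (n + 1) for _ in range(n + 2)]
    R = [[0] * (n + 1) for _ in range(n + 2)]
    for i in range(1, n + 1):
        C[i][i] = p[i - 1]
        R[i][i] = i
    for d in range(1, n):
        for i in range(1, n - d + 1):
            j = i + d
            best, best_k = float("inf"), R[i][j - 1]
            # Both bounds lie on the previous diagonal, so they are known.
            for k in range(R[i][j - 1], R[i + 1][j] + 1):
                cost = C[i][k - 1] + C[k + 1][j]
                if cost < best:
                    best, best_k = cost, k
            C[i][j] = best + prefix[j] - prefix[i - 1]
            R[i][j] = best_k
    return C, R


C, R = optimal_bst_restricted([0.1, 0.2, 0.4, 0.3])
print(round(C[1][4], 2), R[1][4])  # 1.7 3
```

Along one diagonal the scanned ranges overlap only at their endpoints, so the total work per diagonal is linear in $n$, which is where the $\Theta(n^2)$ bound comes from.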

![Floyd’s Algorithm (matrix generation)](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-23-320.jpg)

On the $k$-th iteration, the algorithm determines the shortest paths between every pair of vertices $i$, $j$ that use only vertices among $1, \dots, k$ as intermediate vertices:

$$
D^{(k)}[i,j] = \min\{D^{(k-1)}[i,j],\ D^{(k-1)}[i,k] + D^{(k-1)}[k,j]\}
$$
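The matrix update can be sketched in Python as three nested loops. This is an illustrative sketch: the function name `floyd` and the sample weight matrix are assumptions, vertices are numbered from 0, `math.inf` marks a missing edge, and the graph is assumed to have no negative-length cycles.

```python
import math


def floyd(weights):
    """All-pairs shortest path lengths from a weight matrix."""
    n = len(weights)
    D = [row[:] for row in weights]       # D starts as the weight matrix
    for k in range(n):                    # allow vertex k as an intermediate
        for i in range(n):
            for j in range(n):
                if D[i][k] + D[k][j] < D[i][j]:
                    D[i][j] = D[i][k] + D[k][j]
    return D


INF = math.inf
# Hypothetical 4-vertex digraph (not taken from the slides).
W = [
    [0,   INF, 3,   INF],
    [2,   0,   INF, INF],
    [INF, 7,   0,   1],
    [6,   INF, INF, 0],
]
for row in floyd(W):
    print(row)
# [0, 10, 3, 4]
# [2, 0, 5, 6]
# [7, 7, 0, 1]
# [6, 16, 9, 0]
```

A single matrix is updated in place here instead of keeping every $D^{(k)}$. That is safe because row $k$ and column $k$ do not change during iteration $k$, so the sketch uses $\Theta(n^2)$ space and $\Theta(n^3)$ time.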

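![TRAVELLING SALESMAN PROBLEM](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-34-320.jpg)

Distance matrix $d[i,j]$ (row $i$, column $j$):

|  | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 | 10 | 15 | 20 |
| 2 | 5 | 0 | 9 | 10 |
| 3 | 6 | 13 | 0 | 12 |
| 4 | 8 | 8 | 9 | 0 |

$$
\text{Cost}(i, S) = \min_{j \in S} \{\text{Cost}(j, S - \{j\}) + d[i,j]\}
$$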

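![Cost computations for subsets of size 0, 1, and 2](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-35-320.jpg)

$|S| = 0$ (that is, $S = \emptyset$):

$$
\begin{aligned}
\text{Cost}(2, \emptyset, 1) &= d[2,1] = 5 \\
\text{Cost}(3, \emptyset, 1) &= d[3,1] = 6 \\
\text{Cost}(4, \emptyset, 1) &= d[4,1] = 8
\end{aligned}
$$

$|S| = 1$:

$$
\begin{aligned}
\text{Cost}(2, \{3\}, 1) &= d[2,3] + \text{Cost}(3, \emptyset, 1) = 9 + 6 = 15 \\
\text{Cost}(2, \{4\}, 1) &= d[2,4] + \text{Cost}(4, \emptyset, 1) = 10 + 8 = 18 \\
\text{Cost}(3, \{2\}, 1) &= d[3,2] + \text{Cost}(2, \emptyset, 1) = 13 + 5 = 18 \\
\text{Cost}(3, \{4\}, 1) &= d[3,4] + \text{Cost}(4, \emptyset, 1) = 12 + 8 = 20 \\
\text{Cost}(4, \{3\}, 1) &= d[4,3] + \text{Cost}(3, \emptyset, 1) = 9 + 6 = 15 \\
\text{Cost}(4, \{2\}, 1) &= d[4,2] + \text{Cost}(2, \emptyset, 1) = 8 + 5 = 13
\end{aligned}
$$

$|S| = 2$:

$$
\begin{aligned}
\text{Cost}(2, \{3,4\}, 1) &= \min\{d[2,3] + \text{Cost}(3, \{4\}, 1),\ d[2,4] + \text{Cost}(4, \{3\}, 1)\} \\
&= \min\{9 + 20,\ 10 + 15\} = \min\{29, 25\} = 25
\end{aligned}
$$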

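![Cost computations for subsets of size 2 and 3](https://image.slidesharecdn.com/dynamicprogramming-230623052101-bee8fa17/85/Dynamic-Programming-pptx-36-320.jpg)

Continuing with $|S| = 2$:

$$
\begin{aligned}
\text{Cost}(3, \{2,4\}, 1) &= \min\{d[3,2] + \text{Cost}(2, \{4\}, 1),\ d[3,4] + \text{Cost}(4, \{2\}, 1)\} \\
&= \min\{13 + 18,\ 12 + 13\} = \min\{31, 25\} = 25 \\
\text{Cost}(4, \{2,3\}, 1) &= \min\{d[4,2] + \text{Cost}(2, \{3\}, 1),\ d[4,3] + \text{Cost}(3, \{2\}, 1)\} \\
&= \min\{8 + 15,\ 9 + 18\} = \min\{23, 27\} = 23
\end{aligned}
$$

$|S| = 3$:

$$
\begin{aligned}
\text{Cost}(1, \{2,3,4\}, 1) &= \min\{d[1,2] + \text{Cost}(2, \{3,4\}, 1),\ d[1,3] + \text{Cost}(3, \{2,4\}, 1),\ d[1,4] + \text{Cost}(4, \{2,3\}, 1)\} \\
&= \min\{10 + 25,\ 15 + 25,\ 20 + 23\} = \min\{35, 40, 43\} = 35
\end{aligned}
$$

The shortest tour therefore has length 35. Following the minimizing choices back gives the tour 1 → 2 → 4 → 3 → 1.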
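The hand computation above can be checked with a short Python sketch of the same recurrence, filling in $\text{Cost}(i, S)$ for subsets of increasing size. The function name `tsp_cost` and the dictionary keyed by `(vertex, frozenset)` are assumptions made for this illustration. Vertices are numbered from 0, so vertex 0 plays the role of city 1, and at least two cities are assumed.

```python
from itertools import combinations


def tsp_cost(d):
    """Length of the shortest tour that starts and ends at vertex 0."""
    n = len(d)
    others = range(1, n)
    # cost[(i, S)]: shortest path from i through every vertex of S to vertex 0.
    cost = {(i, frozenset()): d[i][0] for i in others}
    for size in range(1, n - 1):
        for subset in combinations(others, size):
            S = frozenset(subset)
            for i in others:
                if i not in S:
                    cost[(i, S)] = min(d[i][j] + cost[(j, S - {j})] for j in S)
    full = frozenset(others)
    # Close the tour: leave vertex 0, visit everything else, return to 0.
    return min(d[0][j] + cost[(j, full - {j})] for j in full)


d = [
    [0, 10, 15, 20],
    [5, 0, 9, 10],
    [6, 13, 0, 12],
    [8, 8, 9, 0],
]
print(tsp_cost(d))  # 35
```

The table holds one entry per pair of a vertex and a subset, so the approach needs on the order of $n \cdot 2^n$ entries and about $n^2 \cdot 2^n$ time. That is far better than trying all $(n-1)!$ tours but still exponential.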