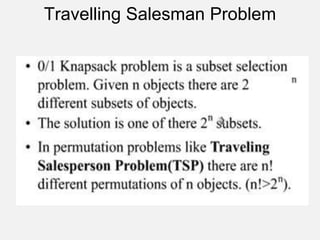

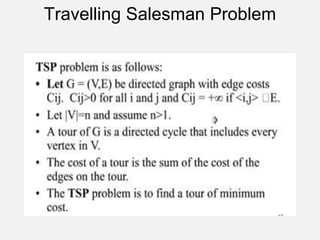

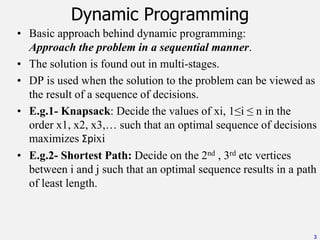

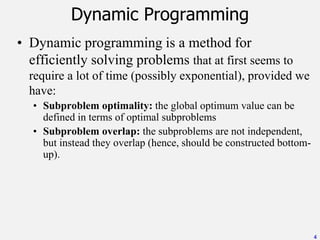

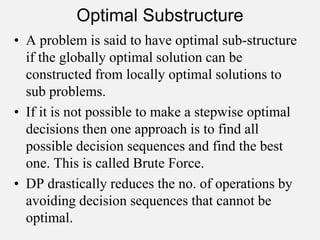

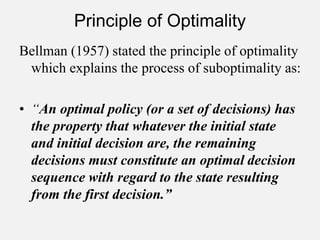

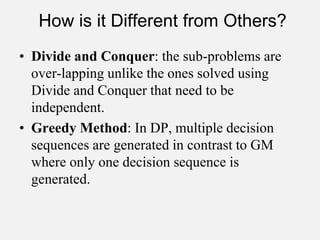

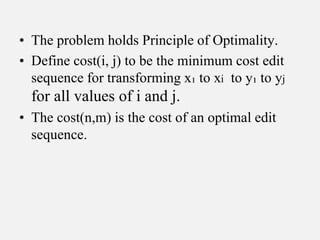

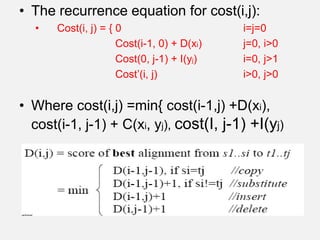

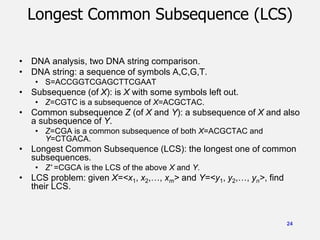

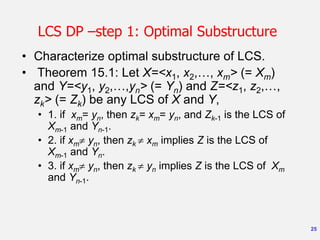

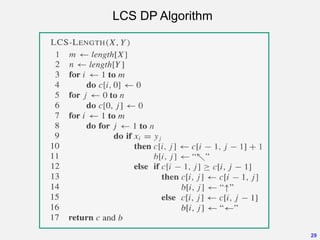

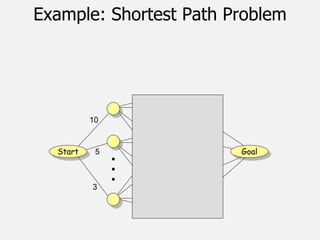

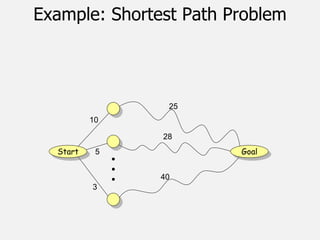

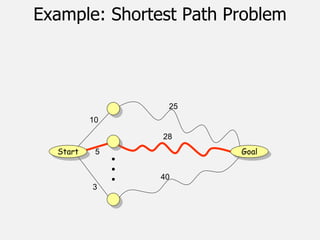

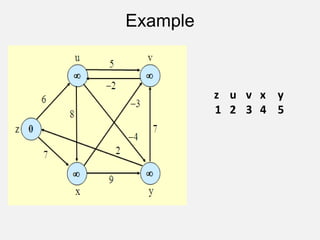

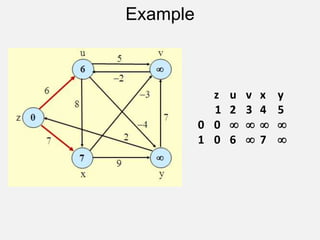

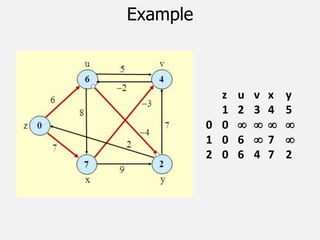

The document discusses several algorithms that use dynamic programming. It begins by defining dynamic programming as an approach that breaks a problem into subproblems and builds an optimal solution from optimal solutions to those subproblems, with knapsack and shortest-path problems as examples. It characterizes problems suited to dynamic programming as having optimal substructure and overlapping subproblems. It then covers specific algorithms: matrix chain multiplication, string editing, longest common subsequence, and shortest paths (Bellman-Ford and Floyd-Warshall), with explanations, recurrence relations, pseudocode and examples for each.

![12

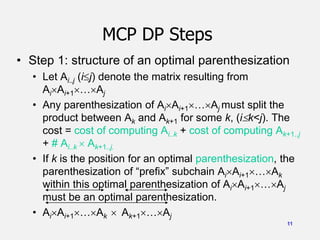

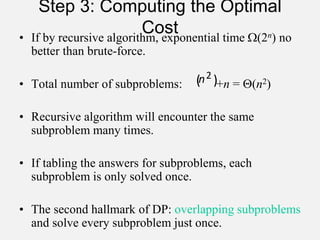

MCP DP Steps

• Step 2: a recursive relation

• Let m[i,j] be the minimum number of

multiplications for AiAi+1…Aj

• m[1,n] will be the answer

If the final multiplication for Aij is Aij= AikAk+1,j

then

• m[i,j] = 0 if i = j

min {m[i,k] + m[k+1,j] +pi-1pkpj } if i<j

ik<j](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-12-320.jpg)
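
The recurrence for m[i,j] can be transcribed almost literally as a memoized recursion. The following is a minimal sketch, not the slides' own code: the function name `min_multiplications` is hypothetical, and it assumes the dimensions arrive as a Python list `p = [p0, p1, ..., pn]`, so that matrix Ai has shape p[i-1] x p[i].

```python
from functools import lru_cache


def min_multiplications(p):
    """Return m[1, n] for the dimension list p = [p0, p1, ..., pn]."""
    n = len(p) - 1  # number of matrices in the chain

    @lru_cache(maxsize=None)  # cache each m(i, j) so it is computed once
    def m(i, j):
        if i == j:
            return 0  # a single matrix needs no multiplication
        # Try every split point k with i <= k < j, as in the recurrence.
        return min(
            m(i, k) + m(k + 1, j) + p[i - 1] * p[k] * p[j]
            for k in range(i, j)
        )

    return m(1, n)


print(min_multiplications([10, 30, 5, 60]))  # 4500
```

For the three matrices of sizes 10x30, 30x5 and 5x60, splitting after the second matrix costs 1500 + 3000 = 4500 scalar multiplications, while splitting after the first costs 27000.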

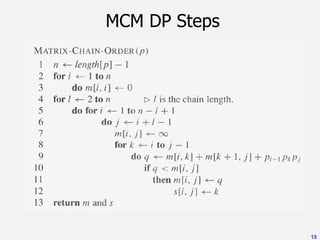

![Step 3: Algorithm

• Array $m[1..n, 1..n]$, where $m[i,j]$ records the optimal cost for $A_i A_{i+1} \cdots A_j$.

• Array $s[1..n, 1..n]$, where $s[i,j]$ records the index $k$ that achieved the optimal cost when computing $m[i,j]$.

• Suppose the input to the algorithm is $p = \langle p_0, p_1, \ldots, p_n \rangle$.](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-14-320.jpg)
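
One way to fill the two tables is bottom-up, by increasing chain length. The sketch below assumes the input `p` is a Python list of n+1 dimensions; the name `matrix_chain_order` and the choice to leave row and column 0 unused (so indices match the 1-based notation above) are illustrative choices, not taken from the slides.

```python
def matrix_chain_order(p):
    """Fill m (optimal costs) and s (optimal split points) bottom-up."""
    n = len(p) - 1
    # Row and column 0 are unused so that m[i][j] matches m[i,j] in the text.
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):          # length of the subchain
        for i in range(1, n - length + 2):  # start of the subchain
            j = i + length - 1              # end of the subchain
            m[i][j] = float("inf")
            for k in range(i, j):           # candidate split point
                cost = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if cost < m[i][j]:
                    m[i][j] = cost
                    s[i][j] = k             # remember the best split
    return m, s


m, s = matrix_chain_order([30, 35, 15, 5, 10, 20, 25])
print(m[1][6], s[1][6])  # 15125 3
```

The three nested loops give O(n^3) time, and the two tables take O(n^2) space.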

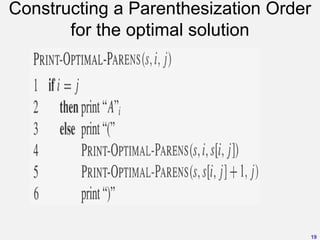

![MCM DP Steps

• Step 4: constructing a parenthesization order for the optimal solution.

• Once $s[1..n, 1..n]$ has been computed, $s[i,j]$ is the split position for $A_i A_{i+1} \cdots A_j$, i.e., the chain splits into $A_i \cdots A_{s[i,j]}$ and $A_{s[i,j]+1} \cdots A_j$. The parenthesization order can therefore be obtained from $s[1..n, 1..n]$ recursively, beginning from $s[1,n]$.](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-18-320.jpg)
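
The recursive reconstruction can be sketched as follows. To keep the example self-contained, the split table is written out by hand as a dictionary keyed by (i, j); these are the split points for three matrices of sizes 10x30, 30x5 and 5x60. The function name `parenthesize` and the dictionary representation are assumptions made for illustration.

```python
def parenthesize(s, i, j):
    """Return the optimal parenthesization of Ai..Aj as a string."""
    if i == j:
        return f"A{i}"
    k = s[(i, j)]  # split position recorded for the chain Ai..Aj
    return f"({parenthesize(s, i, k)}{parenthesize(s, k + 1, j)})"


# Hand-written split table for dimensions 10x30, 30x5, 5x60.
s = {(1, 2): 1, (2, 3): 2, (1, 3): 2}
print(parenthesize(s, 1, 3))  # ((A1A2)A3)
```

Only the entries of s that lie on the optimal split tree are read, so the reconstruction takes O(n) time.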

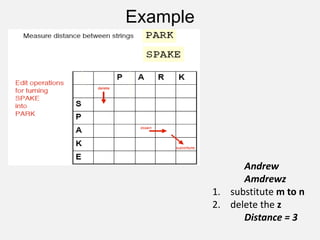

![String Editing

• Two strings are given: $X = x_1, x_2, x_3, \ldots, x_n$ and $Y = y_1, y_2, \ldots, y_m$.

– $x_i$, $1 \le i \le n$, and $y_j$, $1 \le j \le m$, are members of an alphabet, a finite set of symbols.

• Transform $X$ into $Y$ using a sequence of edit operations on $X$.

• Set of edit operations available: Insert [$I(x_i)$], Delete [$D(x_i)$] and Change [$C(x_i, y_j)$].

• A cost is associated with each operation.

• The total cost of a sequence of operations is the sum of the costs of the edit operations in the sequence.

• Problem: Identify a minimum-cost edit sequence that transforms $X$ into $Y$.](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-20-320.jpg)
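
A common way to solve this problem is a table in which entry (i, j) holds the minimum cost of transforming the first i symbols of X into the first j symbols of Y. The sketch below is one possible formulation, not necessarily the one used in the slides. It assumes each operation type has a single fixed cost (the default values 1, 1 and 2 are illustrative) and that changing a symbol into itself is free; the function name `edit_cost` is hypothetical.

```python
def edit_cost(x, y, insert_cost=1, delete_cost=1, change_cost=2):
    """Minimum total cost of edit operations that transform x into y."""
    n, m = len(x), len(y)
    # cost[i][j]: cheapest way to turn x[:i] into y[:j].
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = cost[i - 1][0] + delete_cost   # delete all of x[:i]
    for j in range(1, m + 1):
        cost[0][j] = cost[0][j - 1] + insert_cost   # insert all of y[:j]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Assumption: matching symbols need no change operation.
            change = 0 if x[i - 1] == y[j - 1] else change_cost
            cost[i][j] = min(
                cost[i - 1][j] + delete_cost,       # delete x_i
                cost[i][j - 1] + insert_cost,       # insert y_j
                cost[i - 1][j - 1] + change,        # change x_i into y_j
            )
    return cost[n][m]


print(edit_cost("aabab", "babb"))  # 3
```

With these costs, "aabab" becomes "babb" by deleting the first two symbols and inserting one "b", for a total cost of 3. The table has (n+1)(m+1) entries, each filled in constant time.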

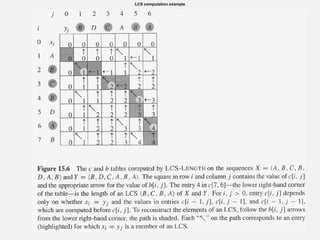

![LCS DP, Step 2: Recursive Solution

• What the theorem says:

– If $x_m = y_n$, find an LCS of $X_{m-1}$ and $Y_{n-1}$, then append $x_m$.

– If $x_m \ne y_n$, find an LCS of $X_{m-1}$ and $Y_n$ and an LCS of $X_m$ and $Y_{n-1}$, and take whichever is longer.

• Overlapping subproblems:

– Both the LCS of $X_{m-1}$ and $Y_n$ and the LCS of $X_m$ and $Y_{n-1}$ need to solve the LCS of $X_{m-1}$ and $Y_{n-1}$.

• $c[i,j]$ is the length of an LCS of $X_i$ and $Y_j$.](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-26-320.jpg)
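
The two cases of the theorem translate directly into a recursive definition of c[i,j]. In the sketch below, memoization ensures that each shared subproblem is solved only once. It assumes the sequences are Python strings and that c[i,j] = 0 whenever i = 0 or j = 0 (an empty prefix has an empty LCS); the name `lcs_length` is illustrative.

```python
from functools import lru_cache


def lcs_length(x, y):
    """Length of a longest common subsequence of x and y."""

    @lru_cache(maxsize=None)  # each (i, j) pair is evaluated once
    def c(i, j):
        if i == 0 or j == 0:
            return 0                       # empty prefix: empty LCS
        if x[i - 1] == y[j - 1]:
            return c(i - 1, j - 1) + 1     # last symbols match
        return max(c(i - 1, j), c(i, j - 1))  # drop one last symbol

    return c(len(x), len(y))


print(lcs_length("ABCBDAB", "BDCABA"))  # 4
```

Without the cache, the recursion would recompute the same (i, j) pairs exponentially often; with it, at most (m+1)(n+1) distinct calls are evaluated.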

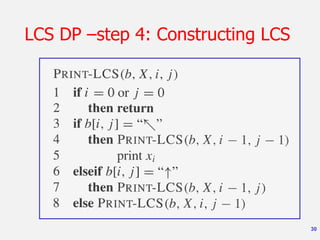

![LCS DP, Step 3: Computing the Length of LCS

• $c[0..m, 0..n]$, where $c[i,j]$ is defined as above.

• $c[m,n]$ is the answer (length of LCS).

• $b[1..m, 1..n]$, where $b[i,j]$ points to the table entry corresponding to the optimal subproblem solution chosen when computing $c[i,j]$.

• Trace back from $b[m,n]$ to recover the LCS.](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-27-320.jpg)
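
A bottom-up version with both tables might look like the sketch below. The string labels "diag", "up" and "left" stand in for the arrows usually drawn in the b table, and ties between the "up" and "left" subproblems are broken in favor of "up"; both are assumptions made for illustration, as is the function name `lcs`.

```python
def lcs(x, y):
    """Return (length, one LCS) of strings x and y using tables c and b."""
    m, n = len(x), len(y)
    c = [[0] * (n + 1) for _ in range(m + 1)]     # c[i][j]: LCS length
    b = [[None] * (n + 1) for _ in range(m + 1)]  # b[i][j]: chosen subproblem
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if x[i - 1] == y[j - 1]:
                c[i][j] = c[i - 1][j - 1] + 1
                b[i][j] = "diag"
            elif c[i - 1][j] >= c[i][j - 1]:
                c[i][j] = c[i - 1][j]
                b[i][j] = "up"
            else:
                c[i][j] = c[i][j - 1]
                b[i][j] = "left"
    # Walk backward from b[m][n], collecting symbols on diagonal moves.
    symbols = []
    i, j = m, n
    while i > 0 and j > 0:
        if b[i][j] == "diag":
            symbols.append(x[i - 1])
            i, j = i - 1, j - 1
        elif b[i][j] == "up":
            i -= 1
        else:
            j -= 1
    return c[m][n], "".join(reversed(symbols))


length, subsequence = lcs("ABCBDAB", "BDCABA")
print(length, subsequence)
```

Filling the tables takes O(mn) time, and the backward walk takes O(m + n) steps. The reported length for this input is 4; which of the several length-4 common subsequences is returned depends on the tie-breaking rule.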

![Dijkstra’s Shortest Path Algorithm

DIJKSTRA (G, w, s)
{
    INITIALIZE-SINGLE-SOURCE (G, s)
    S ← { }    // S will ultimately contain the vertices whose final shortest-path weights from s are known
    Q ← V[G]   // Initialize priority queue Q with all vertices
    while priority queue Q is not empty do
        u ← EXTRACT-MIN(Q)    // Pull out new vertex
        S ← S ∪ {u}
        // Perform relaxation for each vertex v adjacent to u
        for each vertex v in Adj[u] do
            RELAX (u, v, w)
}](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-37-320.jpg)
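
The pseudocode can be turned into runnable Python with the standard-library `heapq` module as the priority queue. This is a sketch under several assumptions: the graph is a dictionary mapping every vertex to a list of (neighbor, weight) pairs, all weights are non-negative, and instead of loading all vertices into Q up front it pushes a vertex whenever its distance improves and skips stale queue entries. That variation replaces the decrease-key step that `heapq` does not provide.

```python
import heapq


def dijkstra(adj, source):
    """Shortest-path weights from source; adj maps u -> [(v, weight), ...]."""
    dist = {v: float("inf") for v in adj}  # INITIALIZE-SINGLE-SOURCE
    dist[source] = 0
    done = set()                           # the set S of finished vertices
    queue = [(0, source)]                  # entries are (distance, vertex)
    while queue:
        d, u = heapq.heappop(queue)        # vertex with the smallest distance
        if u in done:
            continue                       # stale entry, already finalized
        done.add(u)
        for v, w in adj[u]:                # relax every edge (u, v)
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(queue, (dist[v], v))
    return dist


graph = {
    "s": [("t", 10), ("y", 5)],
    "t": [("x", 1), ("y", 2)],
    "y": [("t", 3), ("x", 9), ("z", 2)],
    "x": [("z", 4)],
    "z": [("s", 7), ("x", 6)],
}
print(dijkstra(graph, "s"))
```

For this graph the shortest-path weights from s are 0 for s, 8 for t, 5 for y, 9 for x and 7 for z.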

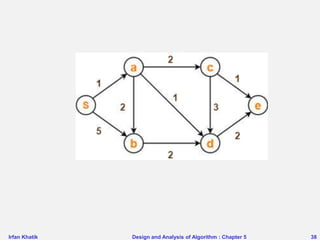

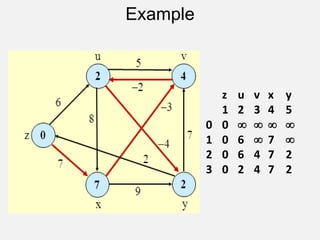

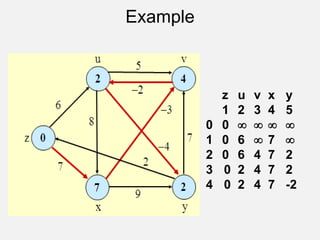

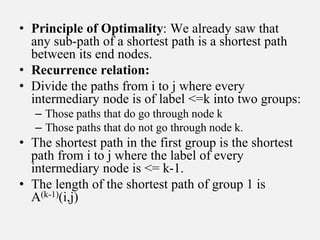

![The All-Pairs Shortest Path Problem: Floyd-Warshall Algorithm

• Input: A weighted graph, represented by its weight matrix $W$.

• Problem: Find the distance between every pair of nodes.

• Dynamic programming design:

– Notation: $A^{(k)}(i,j)$ = length of the shortest path from node $i$ to node $j$ where the label of every intermediary node is $\le k$.

• $A^{(0)}(i,j) = W[i,j]$.](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-46-320.jpg)
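
As a small illustration of the input, the weight matrix can be built from an edge list. The sketch below makes assumptions the slides do not state: nodes are labeled 0 to n-1 rather than 1 to n, a missing edge is represented by infinity, and the distance from a node to itself is 0. The helper name `weight_matrix` is hypothetical.

```python
INF = float("inf")


def weight_matrix(n, edges):
    """Build W for n nodes from directed edges given as (u, v, weight)."""
    # Assumed conventions: 0 on the diagonal, infinity where no edge exists.
    w = [[0 if i == j else INF for j in range(n)] for i in range(n)]
    for u, v, weight in edges:
        w[u][v] = weight
    return w


W = weight_matrix(3, [(0, 1, 4), (0, 2, 11), (1, 0, 6), (1, 2, 2), (2, 0, 3)])
print(W)  # [[0, 4, 11], [6, 0, 2], [3, inf, 0]]
```

This matrix plays the role of the base case, in which no intermediate nodes are allowed on a path.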

![• The shortest path overall is the shorter of the shortest paths of the two groups (paths that avoid node $k$ and paths that pass through node $k$), so we get

$$A^{(k)}(i,j) = \min\left(A^{(k-1)}(i,j),\; A^{(k-1)}(i,k) + A^{(k-1)}(k,j)\right)$$

• The algorithm follows:

Algorithm AllPaths(cost, A, n)
{
    for i = 1 to n do
        for j = 1 to n do
            A(0)(i,j) := cost[i,j];
    for k = 1 to n do
        for i = 1 to n do
            for j = 1 to n do
                A(k)(i,j) := min(A(k-1)(i,j), A(k-1)(i,k) + A(k-1)(k,j));
}](https://image.slidesharecdn.com/aacch3advancestrategiesdynamicprogramming-221129141504-758f43ce/85/AAC-ch-3-Advance-strategies-Dynamic-Programming-pptx-49-320.jpg)
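
A runnable counterpart of AllPaths is sketched below. It departs from the pseudocode in two labeled ways: nodes are indexed from 0, and a single matrix is updated in place instead of keeping a separate matrix for every k. The in-place update is a standard space saving that is valid when the graph has no negative cycles, because row k and column k do not change during iteration k. The function name `all_paths` and the use of infinity for missing edges are assumptions.

```python
INF = float("inf")


def all_paths(cost):
    """All-pairs shortest path lengths from a square cost matrix."""
    n = len(cost)
    a = [row[:] for row in cost]  # copy so the input matrix is untouched
    for k in range(n):            # allow node k as an intermediate node
        for i in range(n):
            for j in range(n):
                through_k = a[i][k] + a[k][j]
                if through_k < a[i][j]:
                    a[i][j] = through_k
    return a


cost = [
    [0, 4, 11],
    [6, 0, 2],
    [3, INF, 0],
]
print(all_paths(cost))  # [[0, 4, 6], [5, 0, 2], [3, 7, 0]]
```

The three nested loops give O(n^3) time, and updating in place keeps the space at O(n^2).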