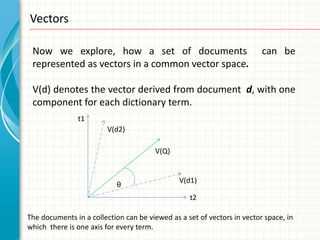

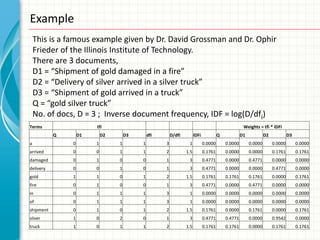

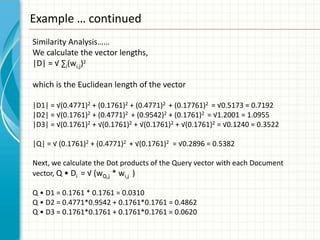

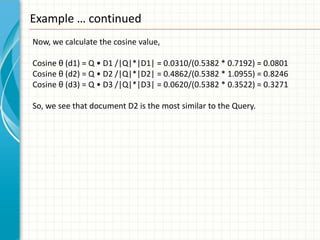

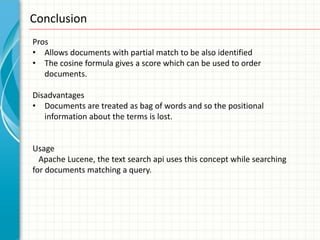

The vector space model represents documents and queries as vectors in a common vector space. Each dimension corresponds to a unique term, and the value in that dimension is a weight, such as tf-idf, that reflects how important the term is to the document or query. The similarity between a document and a query is the cosine of the angle between their vectors; with non-negative weights it ranges from 0 to 1, and a value closer to 1 indicates greater similarity. A worked example calculates tf-idf weights for the terms in the documents and a query, derives the document and query vectors, and determines that the second document is most similar to the query, with a cosine similarity of 0.8246.
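The procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the original example: the toy corpus and query are hypothetical, and the weighting (raw term frequency multiplied by `log10(N / df)`) is one common tf-idf variant chosen here because the source does not specify which one it uses. The scores it produces will therefore not match the 0.8246 figure.

```python
import math
from collections import Counter


def tf_idf_vectors(docs, query):
    """Build tf-idf vectors for the documents and the query.

    Assumption: weight = raw term count * log10(N / df). Other tf-idf
    variants (log-scaled tf, smoothed idf) would give different numbers.
    """
    tokenized = [doc.lower().split() for doc in docs]
    # One dimension per unique term in the collection.
    vocab = sorted({term for doc in tokenized for term in doc})
    n_docs = len(docs)
    # Document frequency: number of documents containing the term.
    df = {t: sum(1 for doc in tokenized if t in doc) for t in vocab}
    idf = {t: math.log10(n_docs / df[t]) for t in vocab}

    def weigh(tokens):
        counts = Counter(tokens)
        # Terms outside the vocabulary are ignored.
        return [counts[t] * idf[t] for t in vocab]

    return [weigh(doc) for doc in tokenized], weigh(query.lower().split())


def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # No shared weighted terms to compare.
    return dot / (norm_u * norm_v)


# Hypothetical toy data, not taken from the original example.
docs = [
    "information retrieval ranks documents",
    "vector space retrieval uses cosine similarity",
    "cooking recipes for pasta",
]
query = "cosine similarity retrieval"

doc_vectors, query_vector = tf_idf_vectors(docs, query)
scores = [cosine(vec, query_vector) for vec in doc_vectors]
best = max(range(len(docs)), key=lambda i: scores[i])

for i, score in enumerate(scores):
    print(f"doc {i}: {score:.4f}")
print("most similar document index:", best)
```

In this toy collection the second document (index 1) ranks highest because it shares the most highly weighted terms with the query, while the third document shares none and scores 0.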