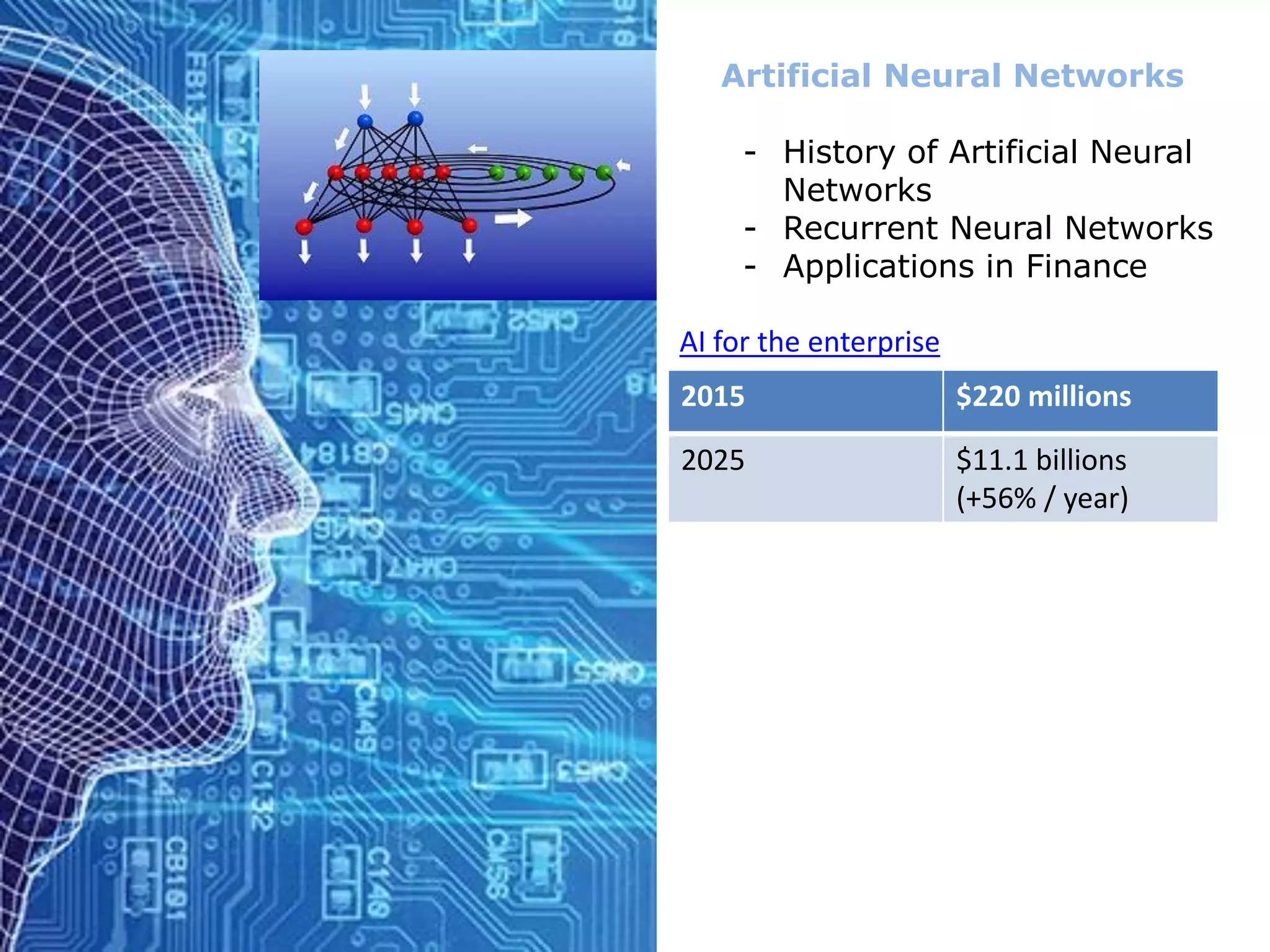

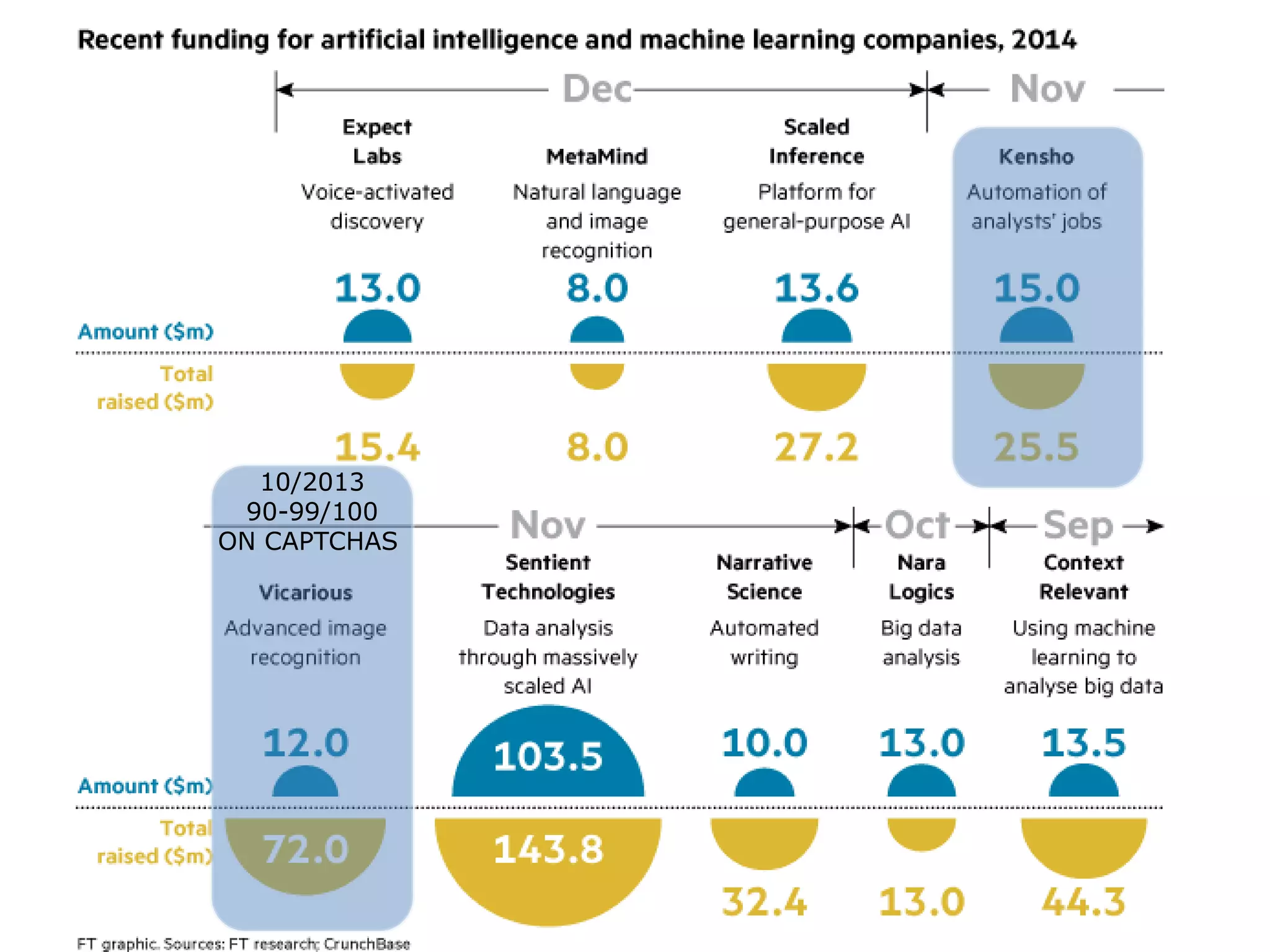

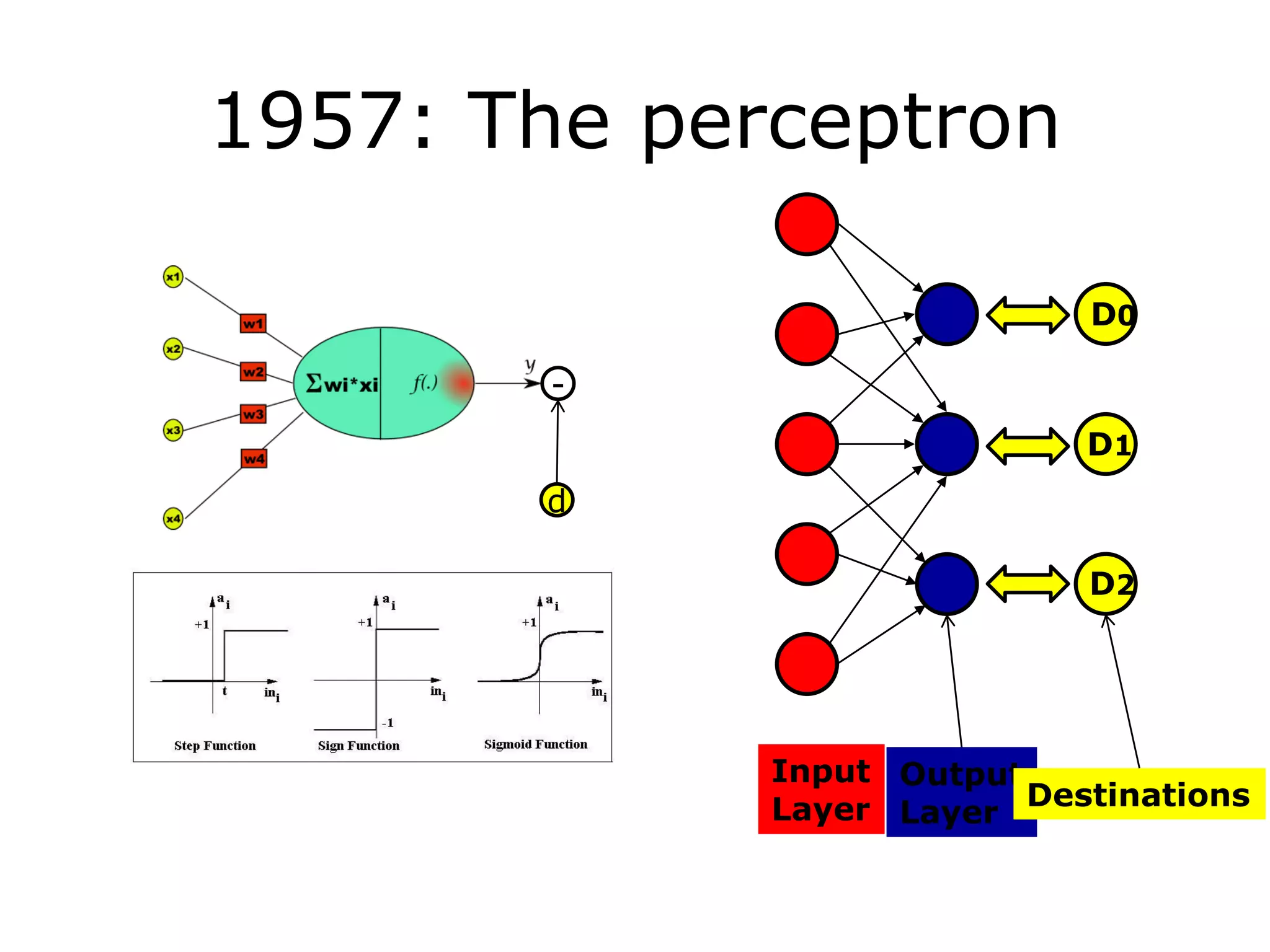

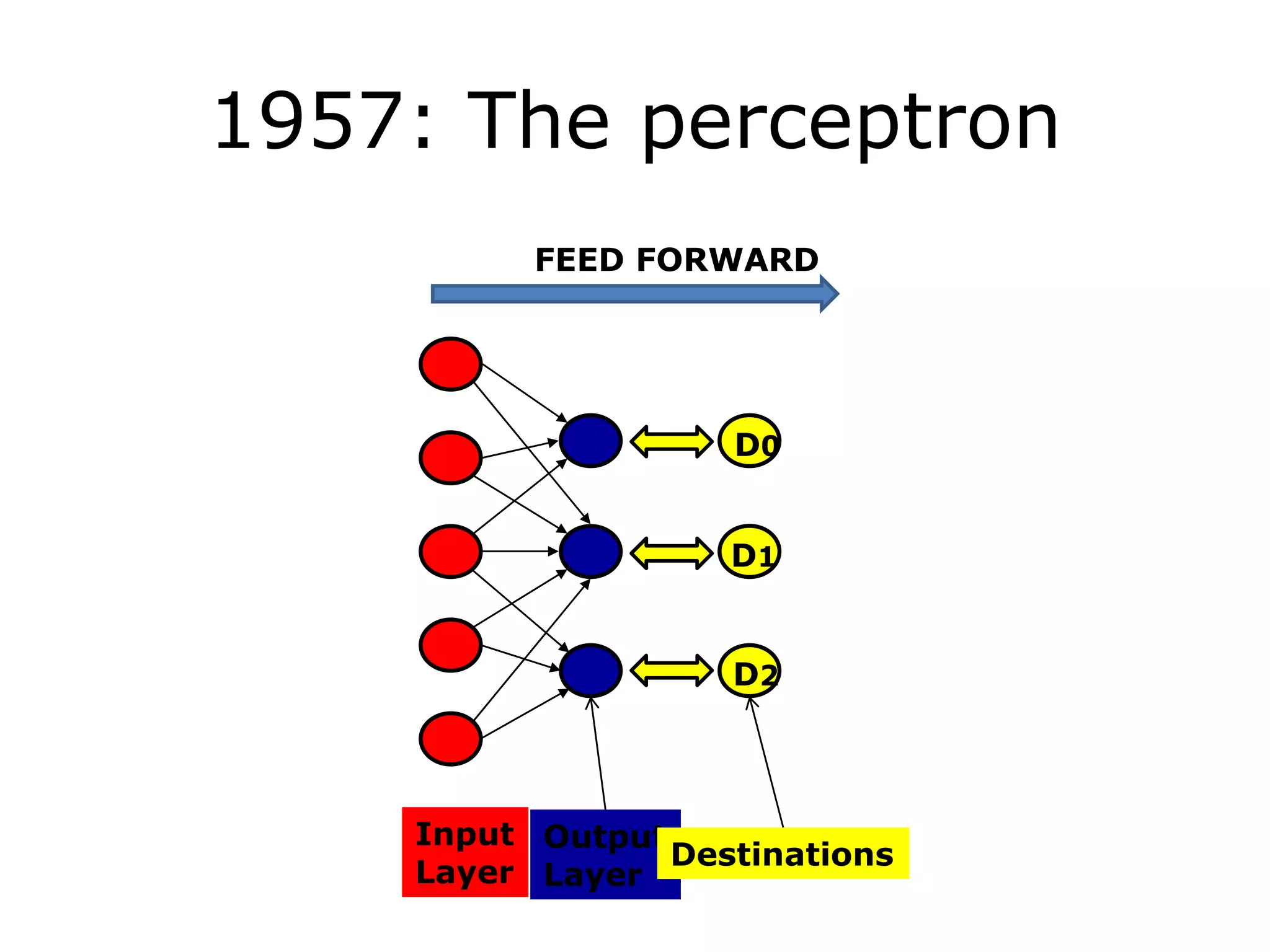

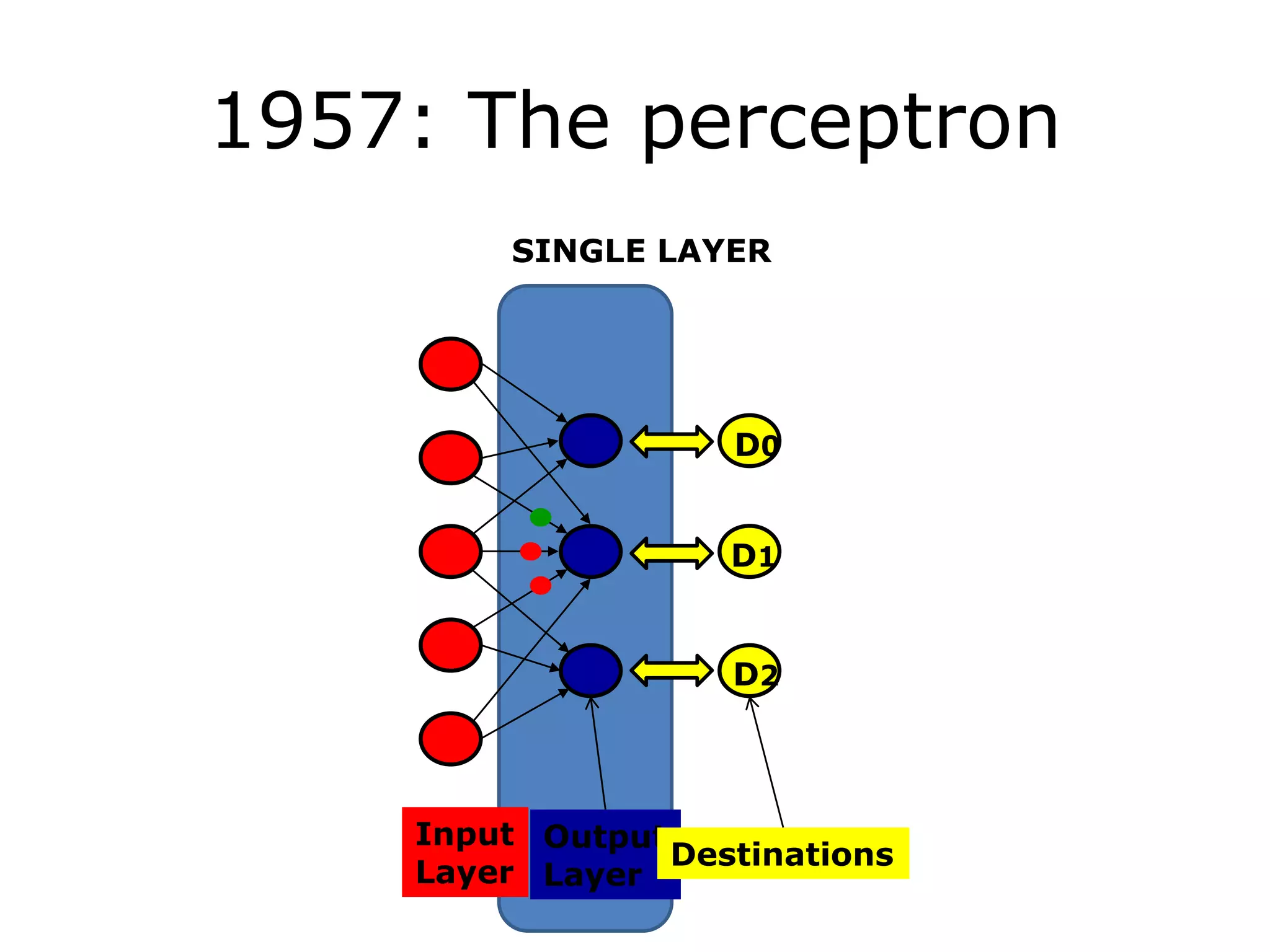

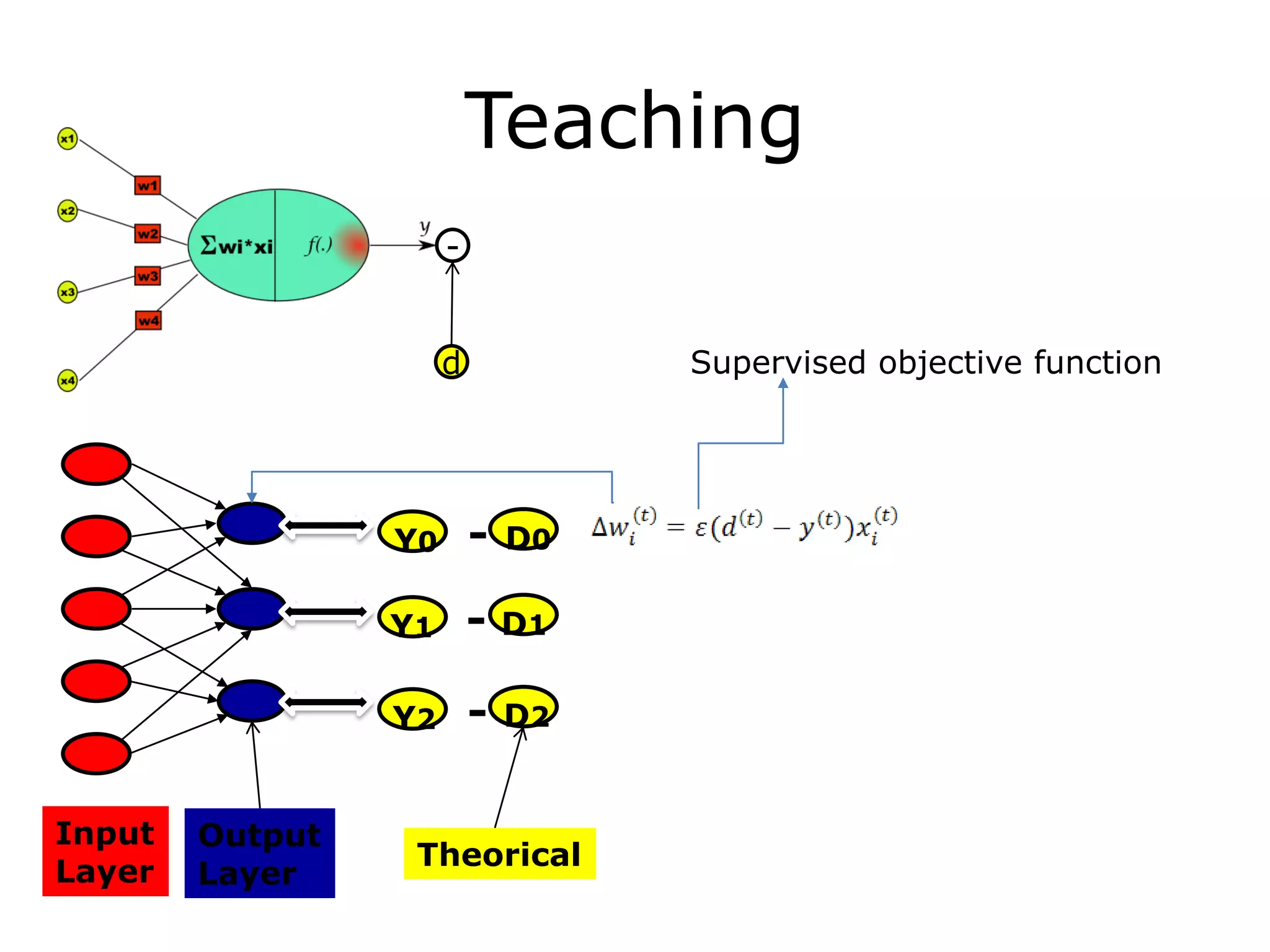

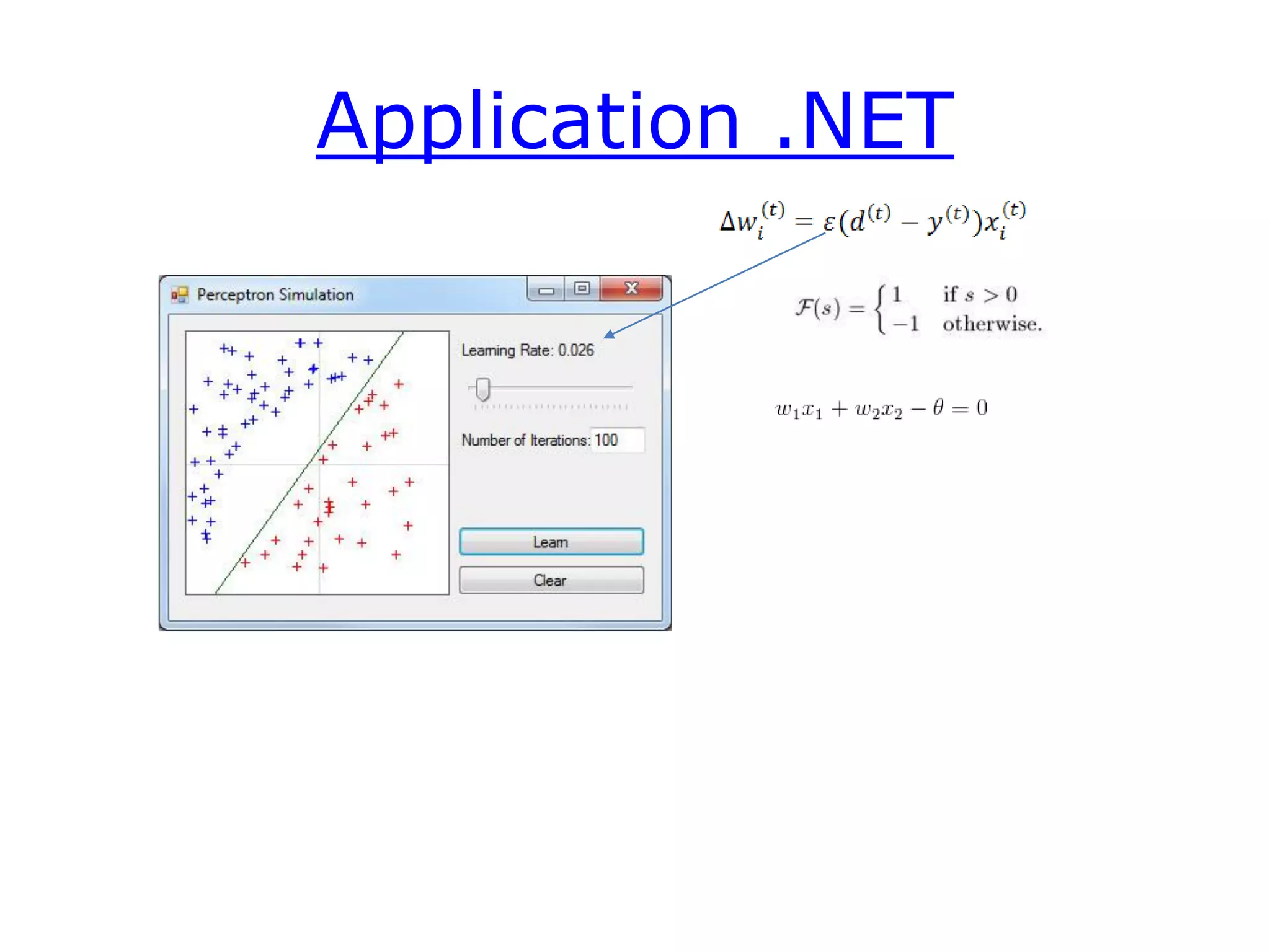

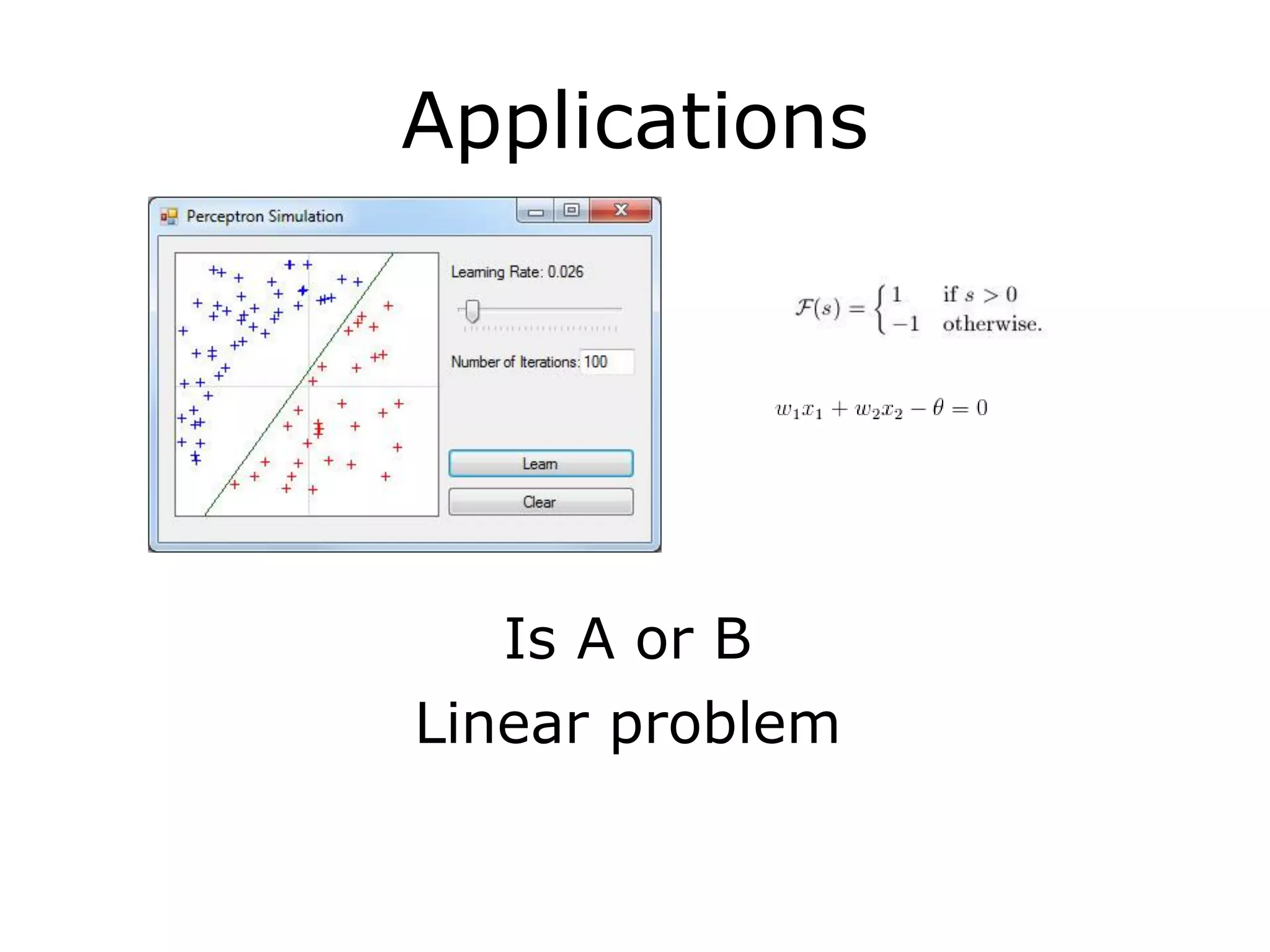

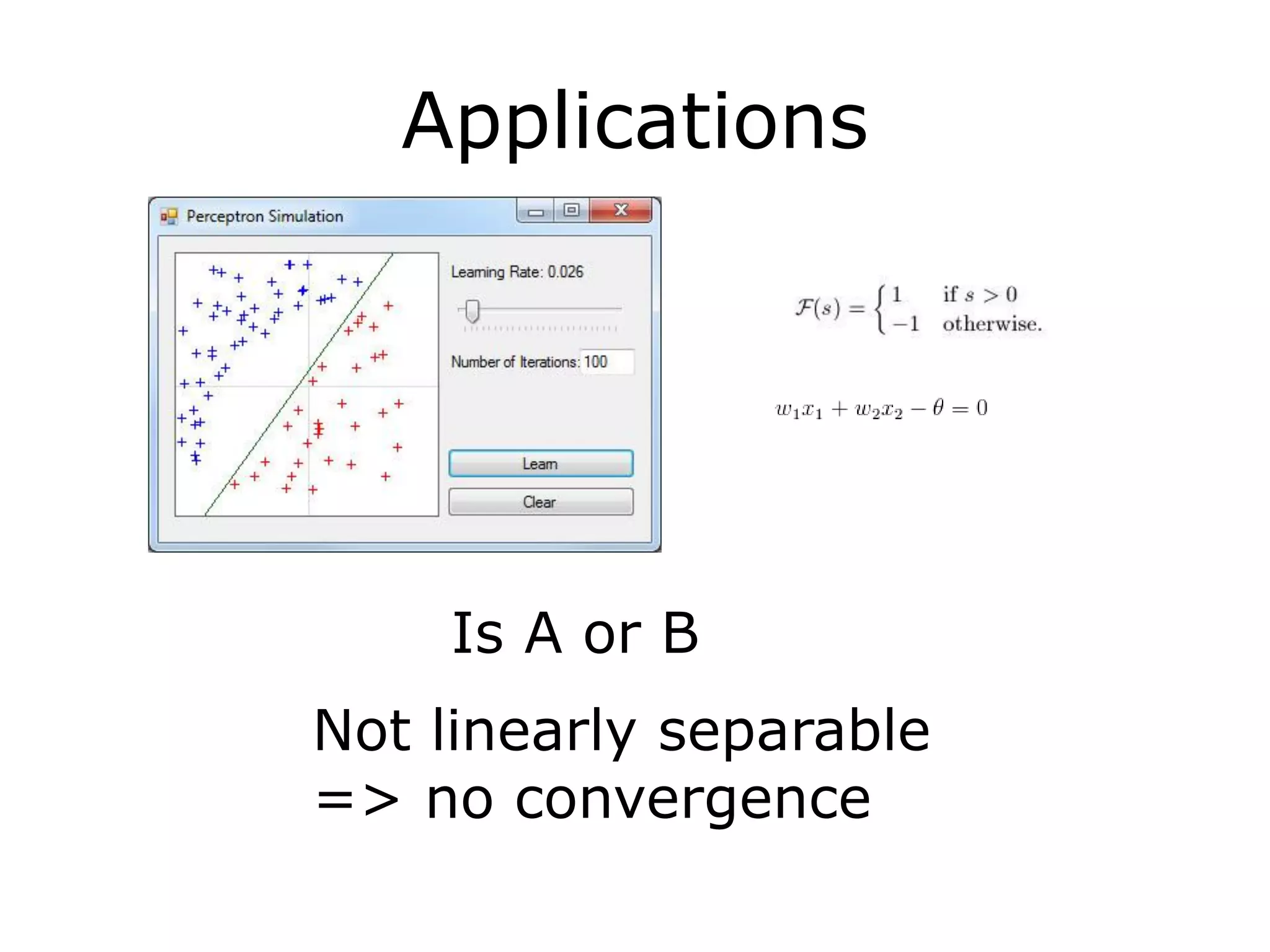

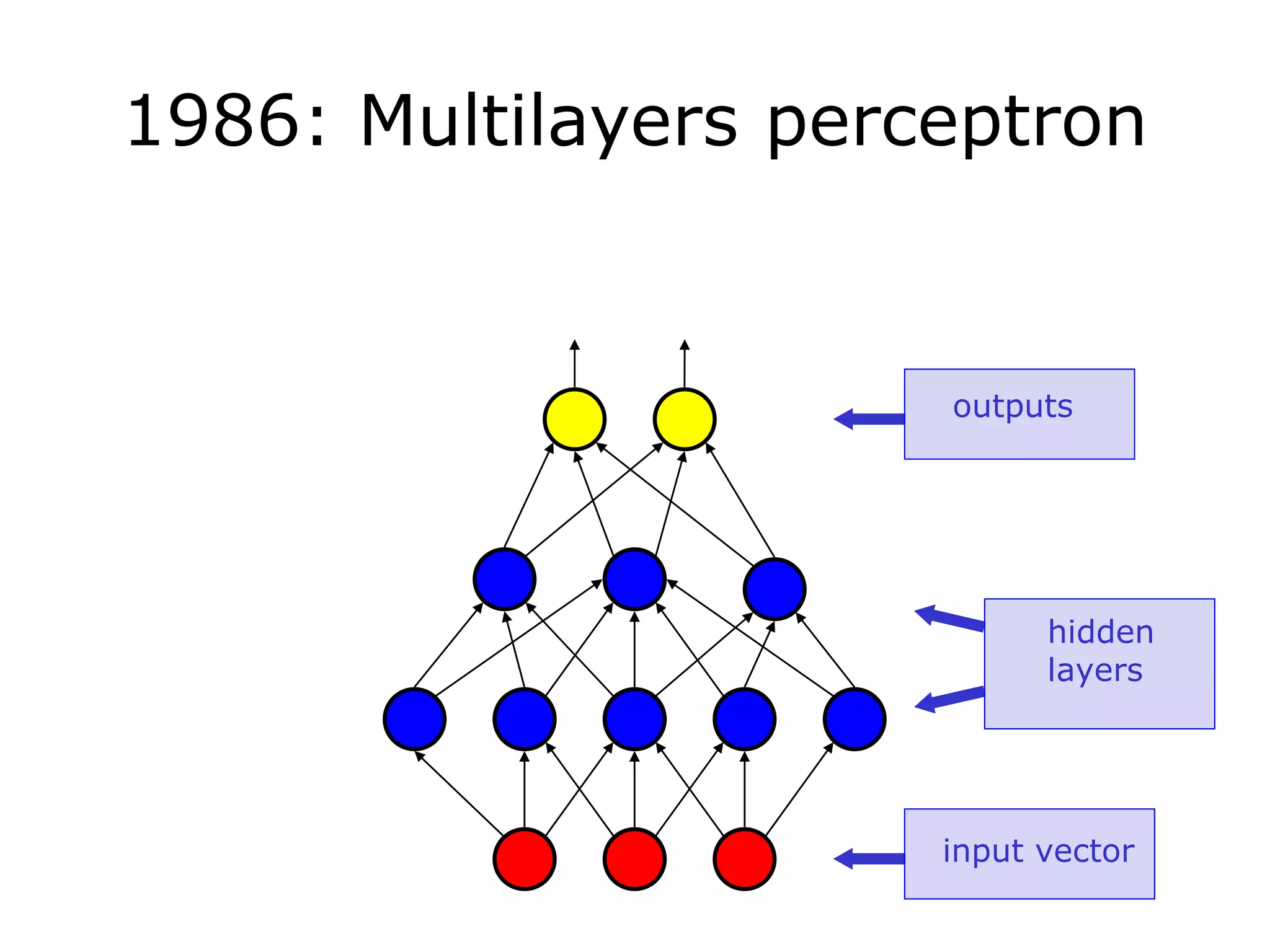

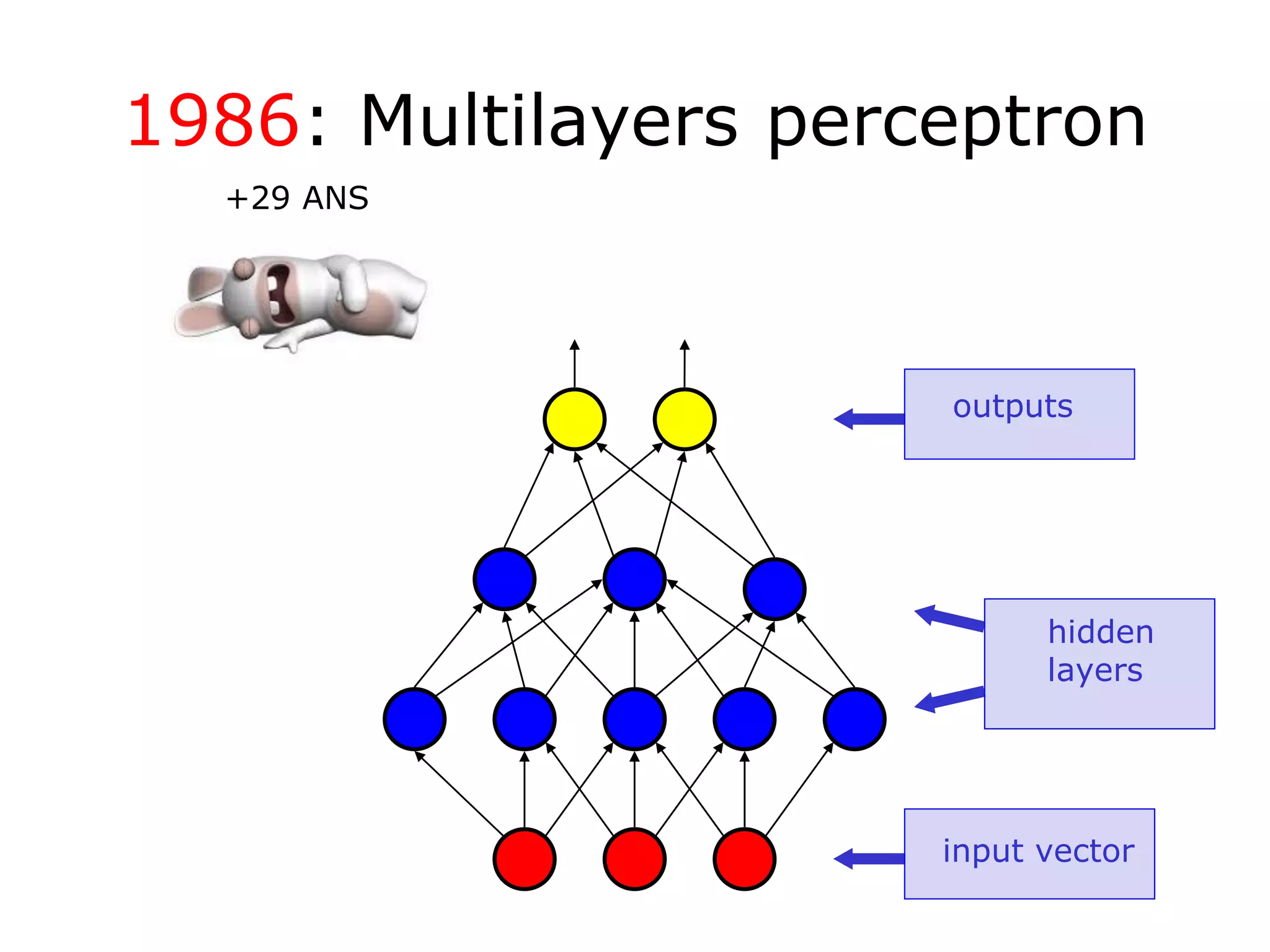

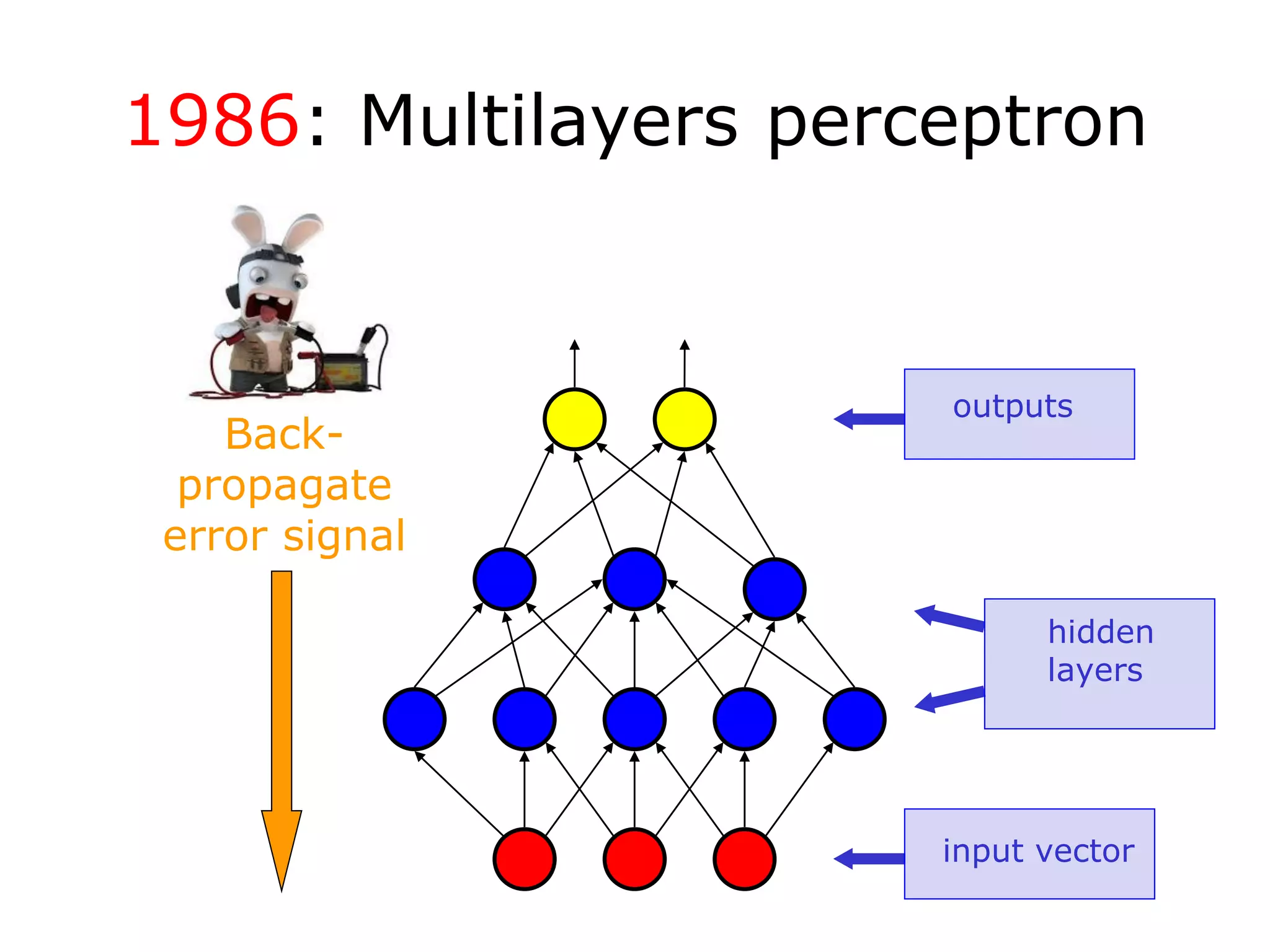

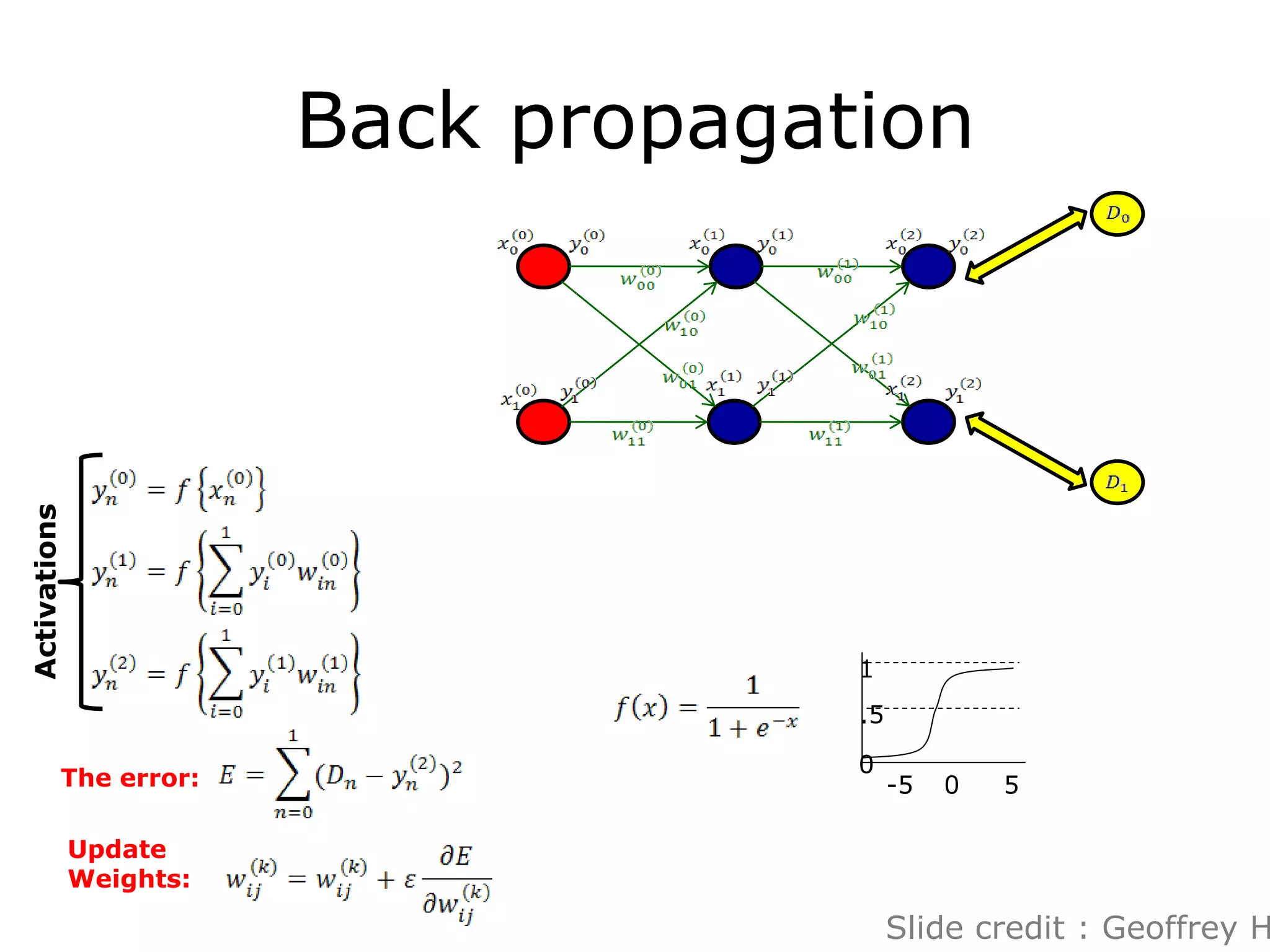

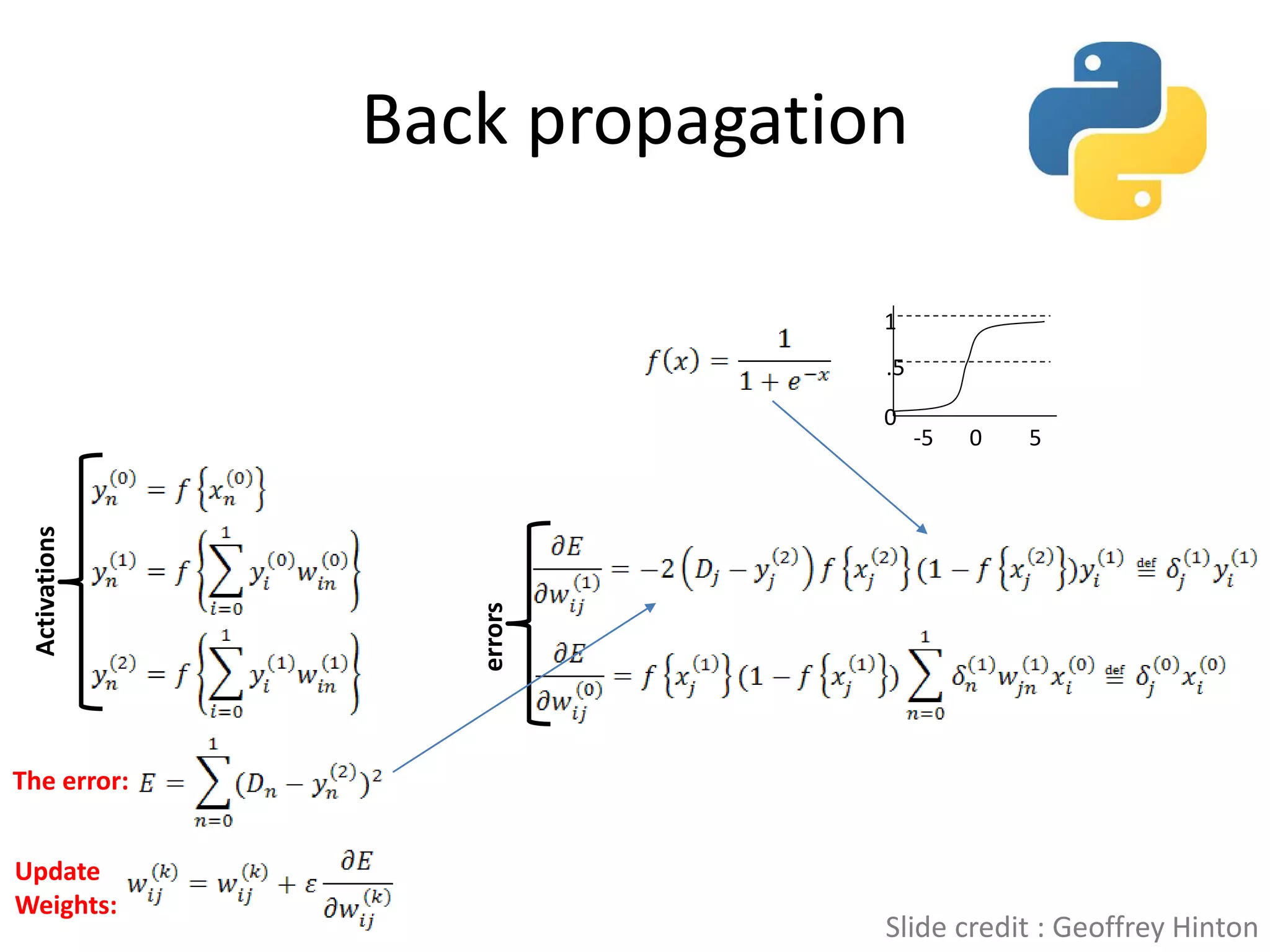

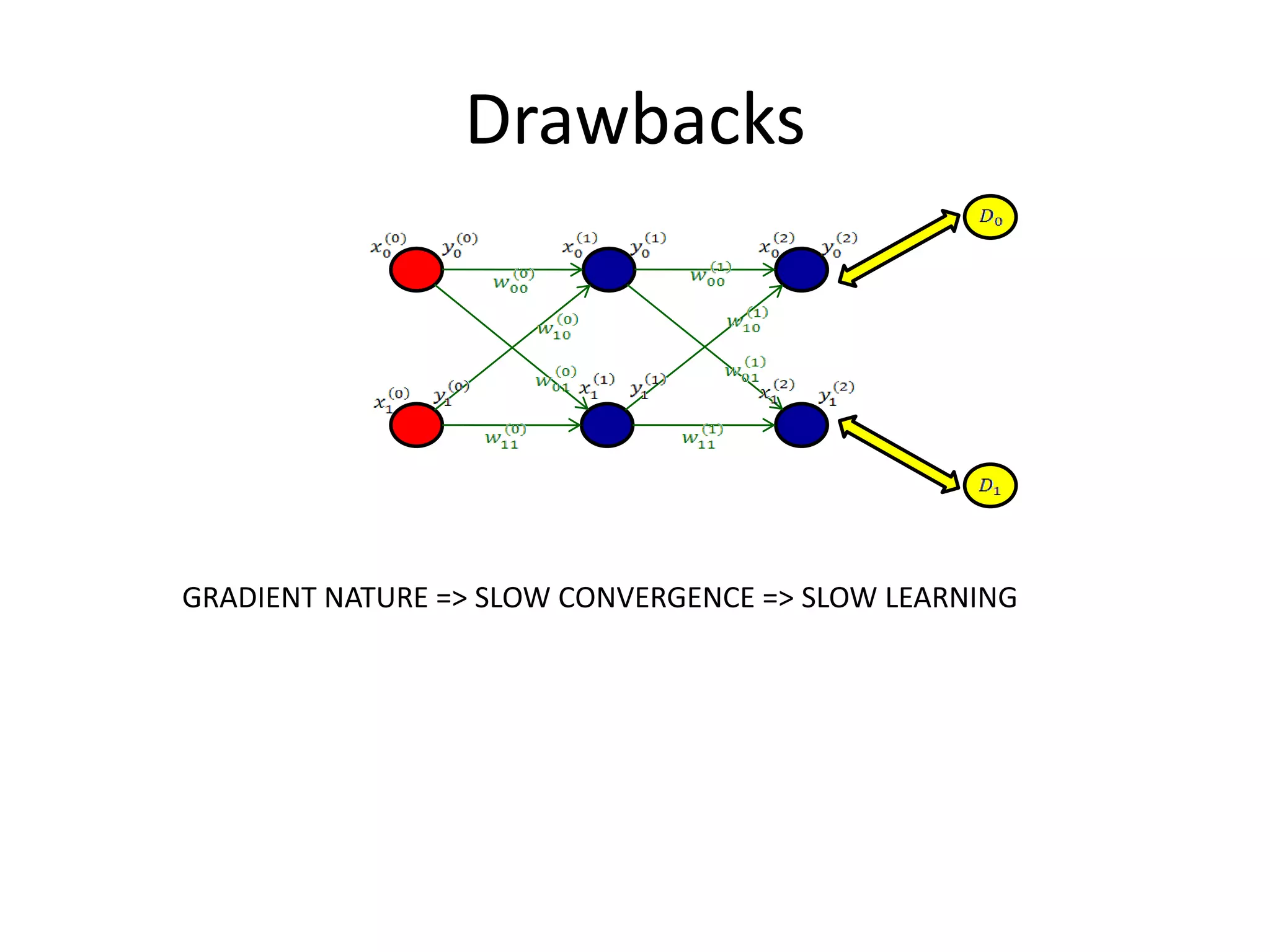

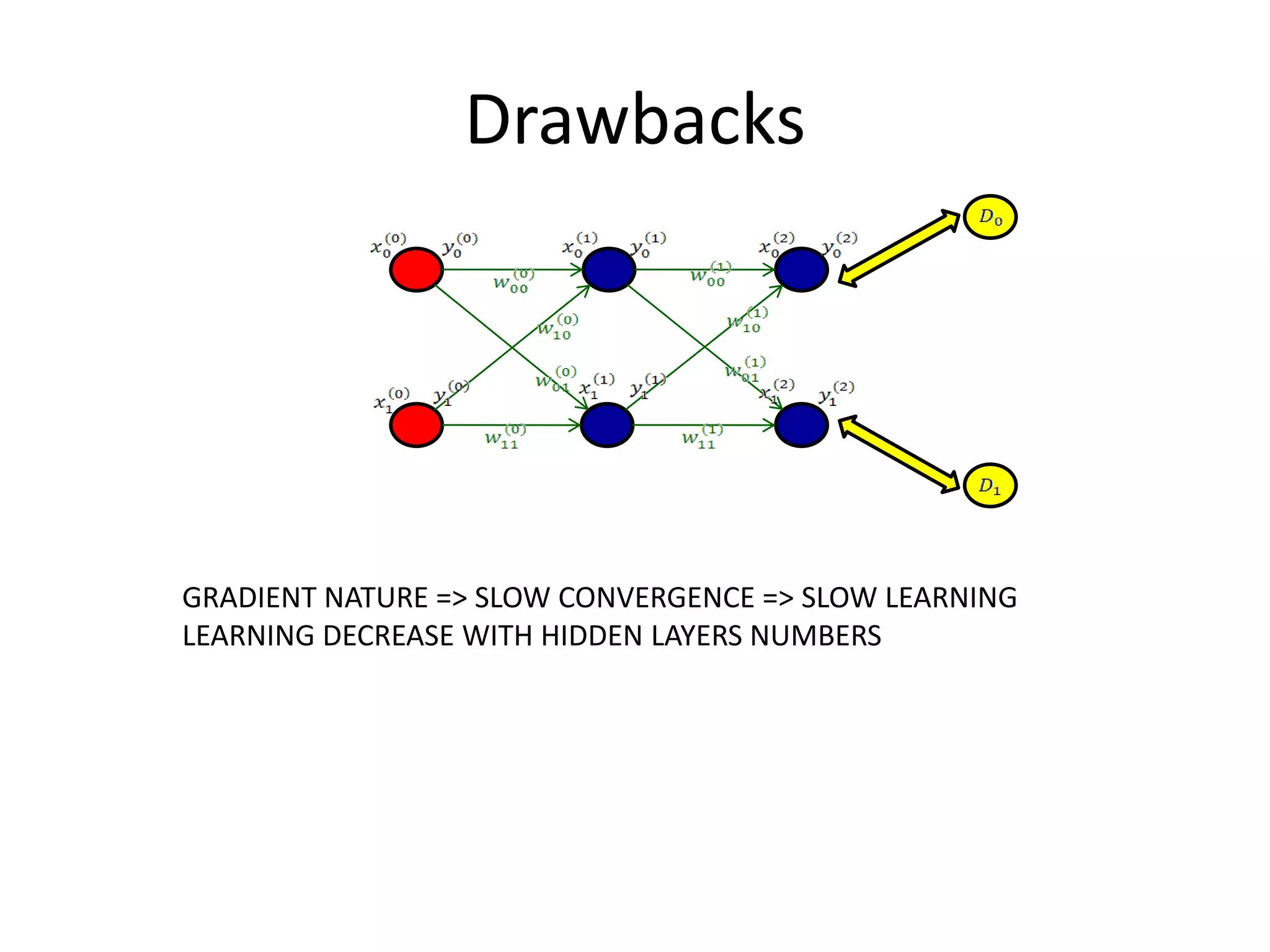

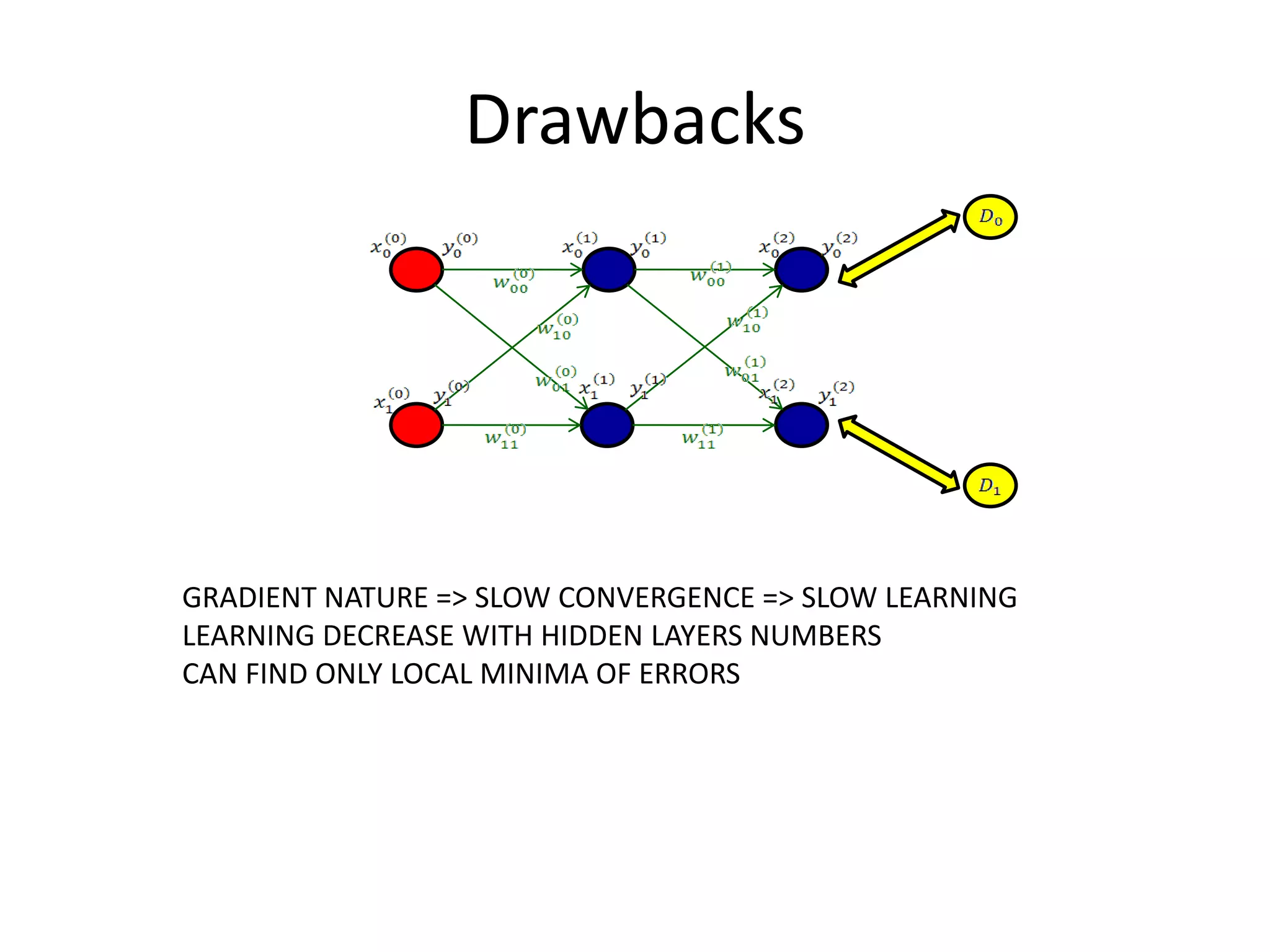

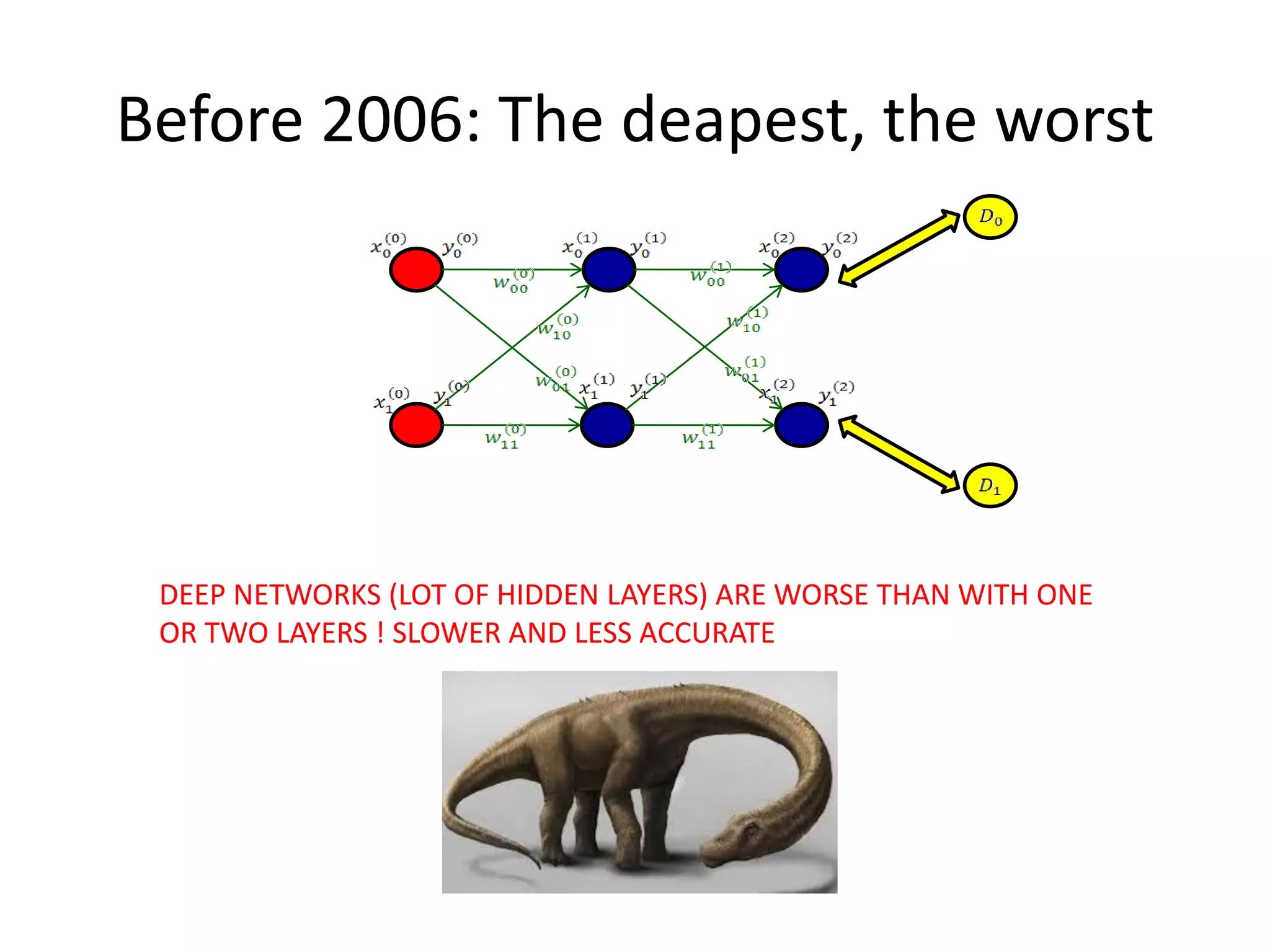

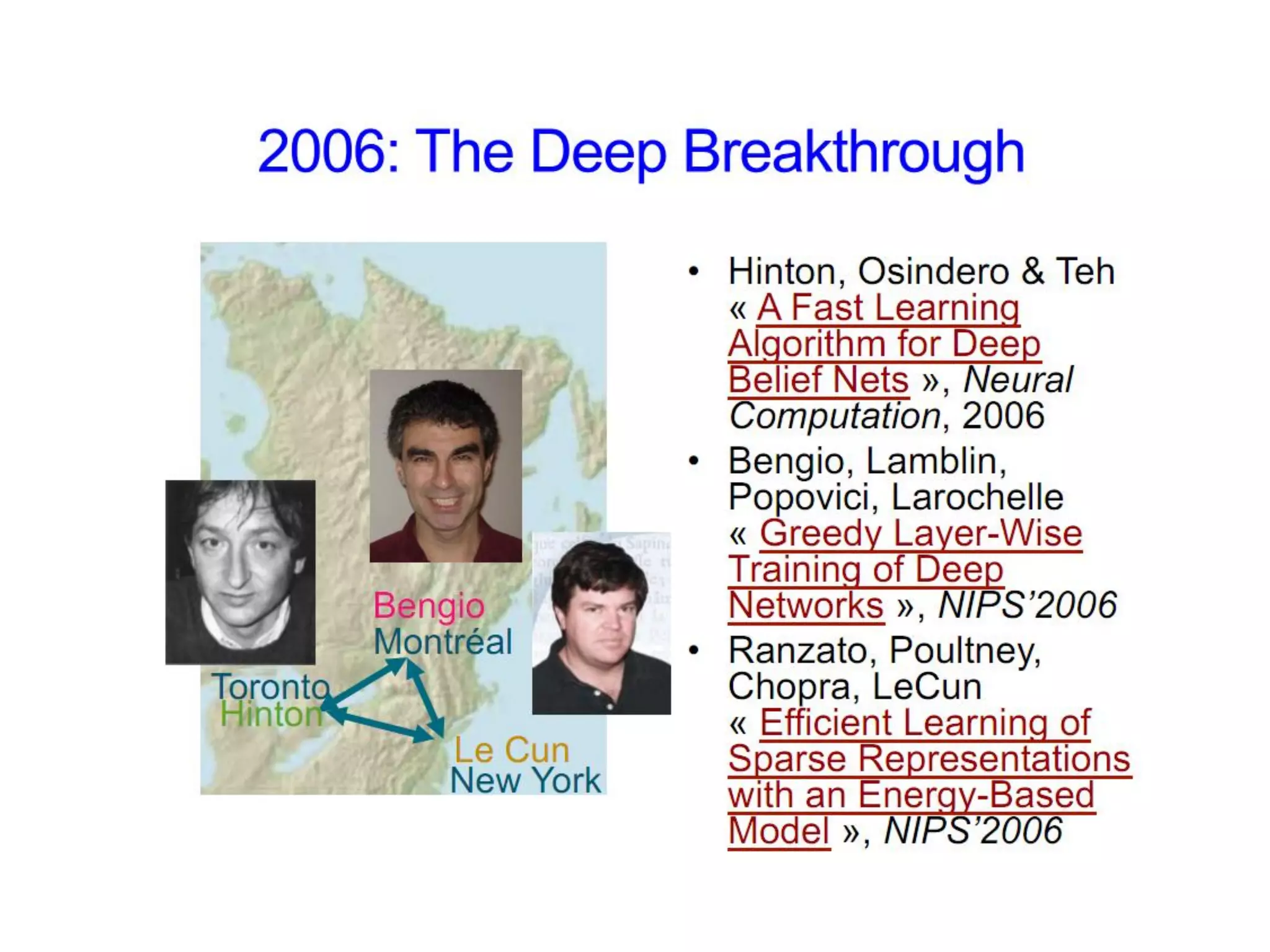

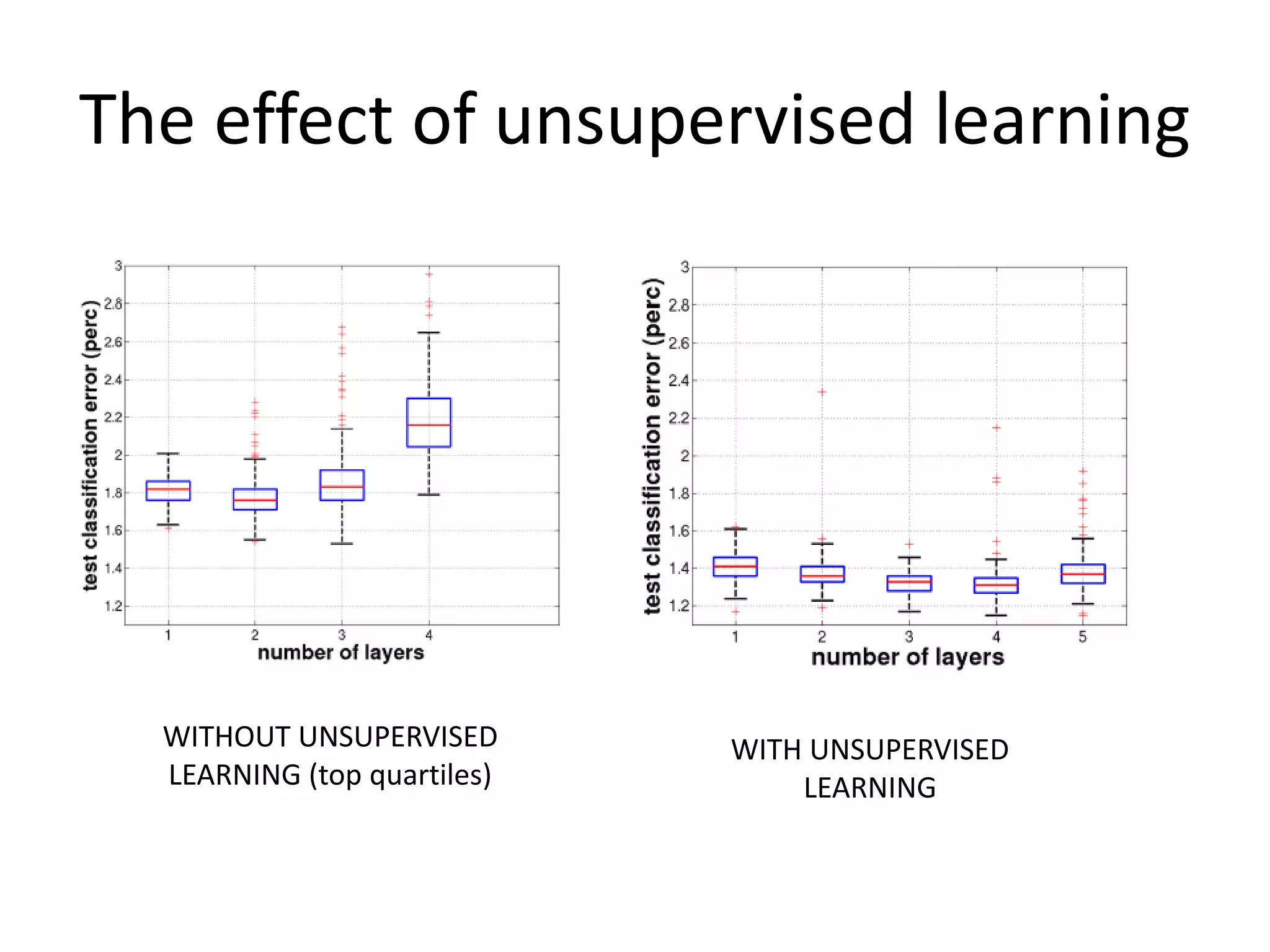

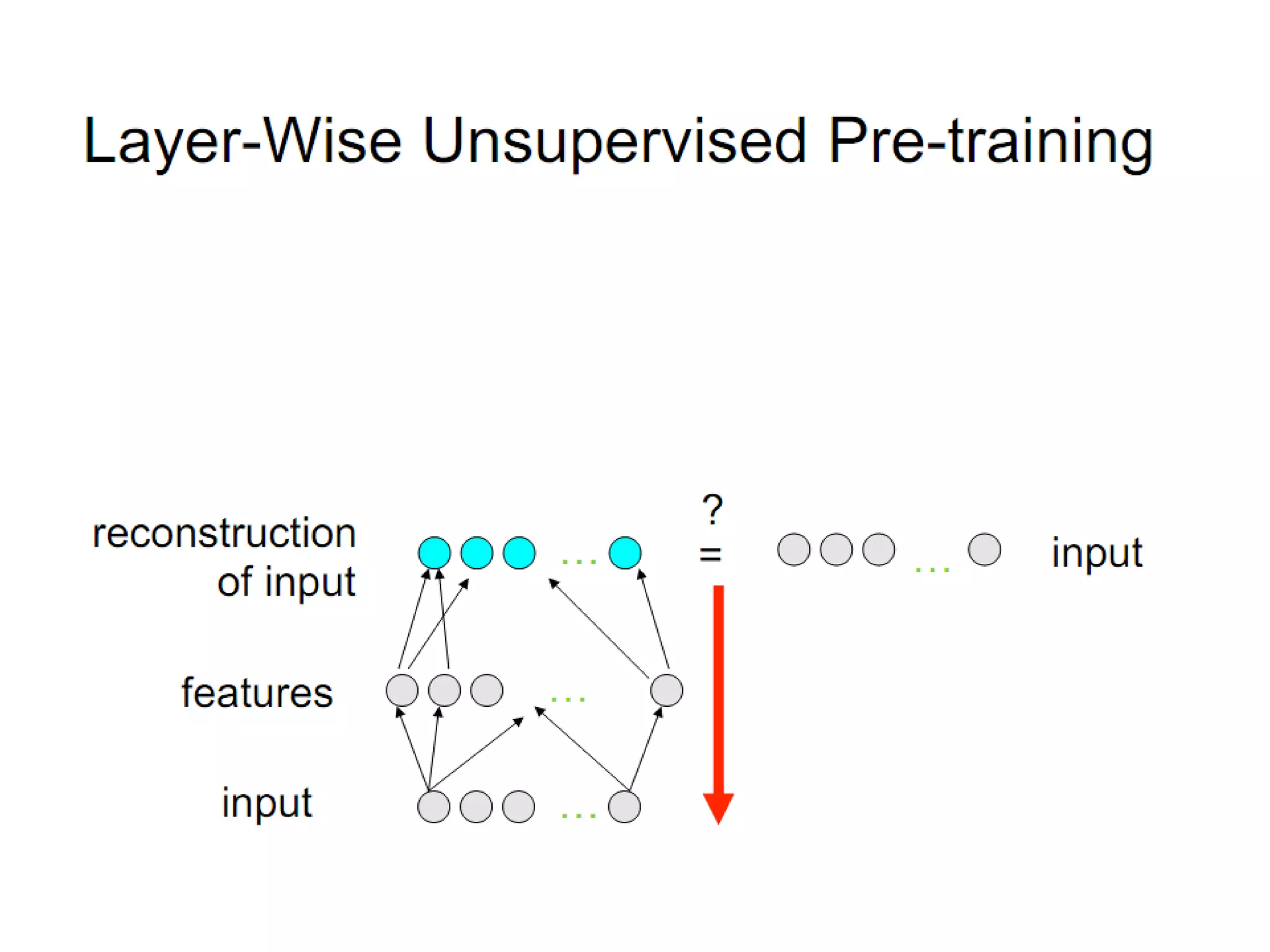

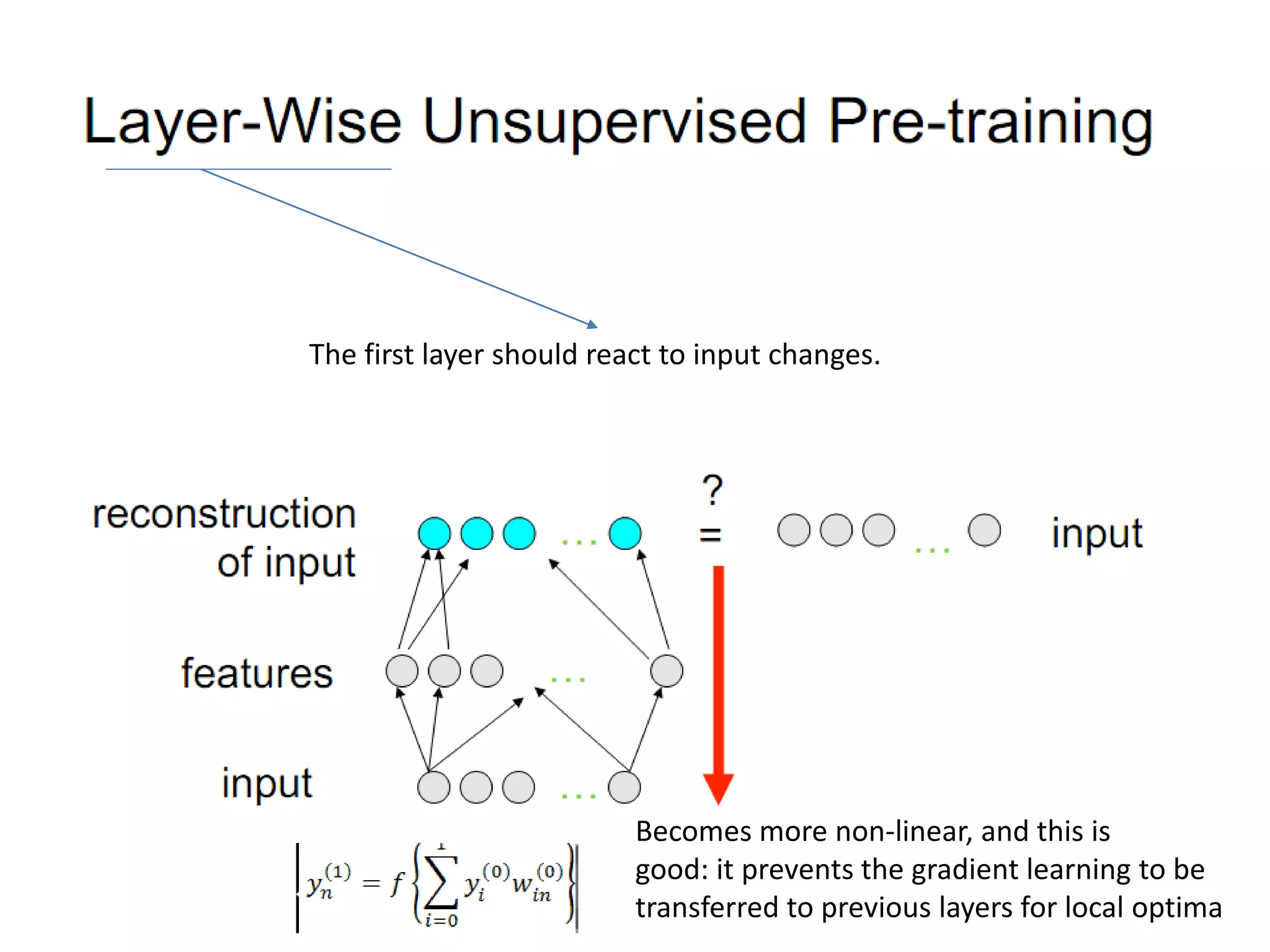

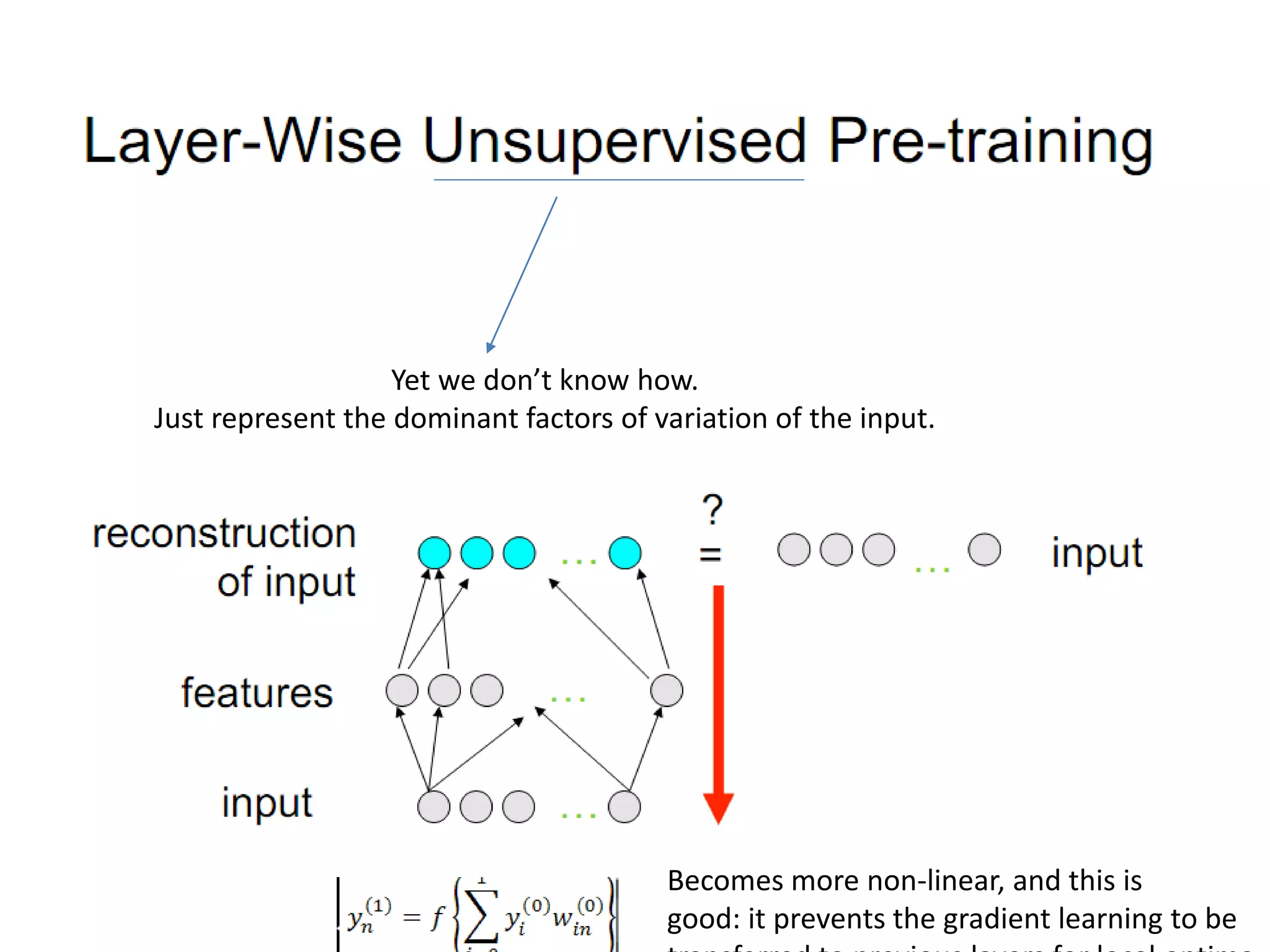

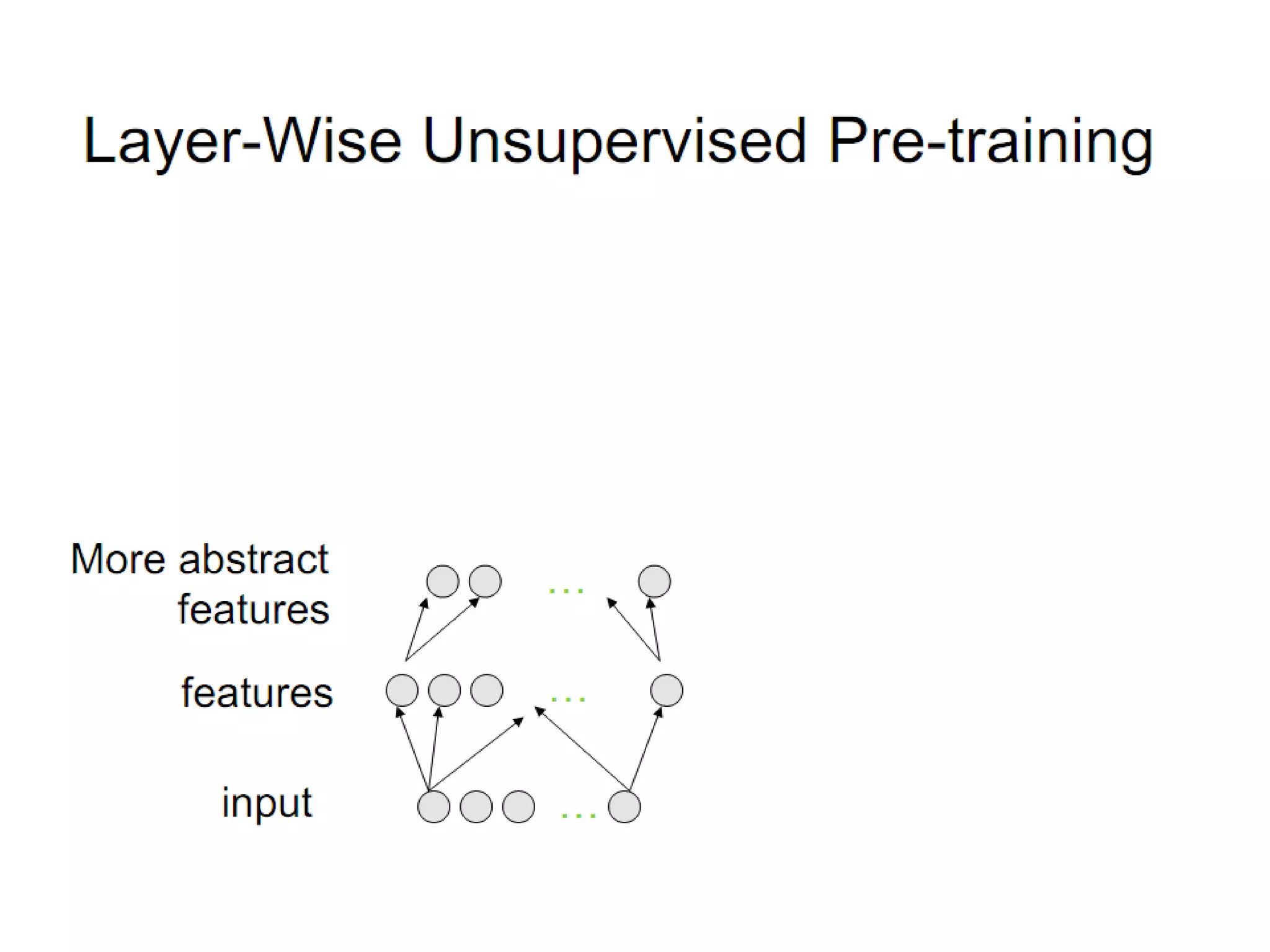

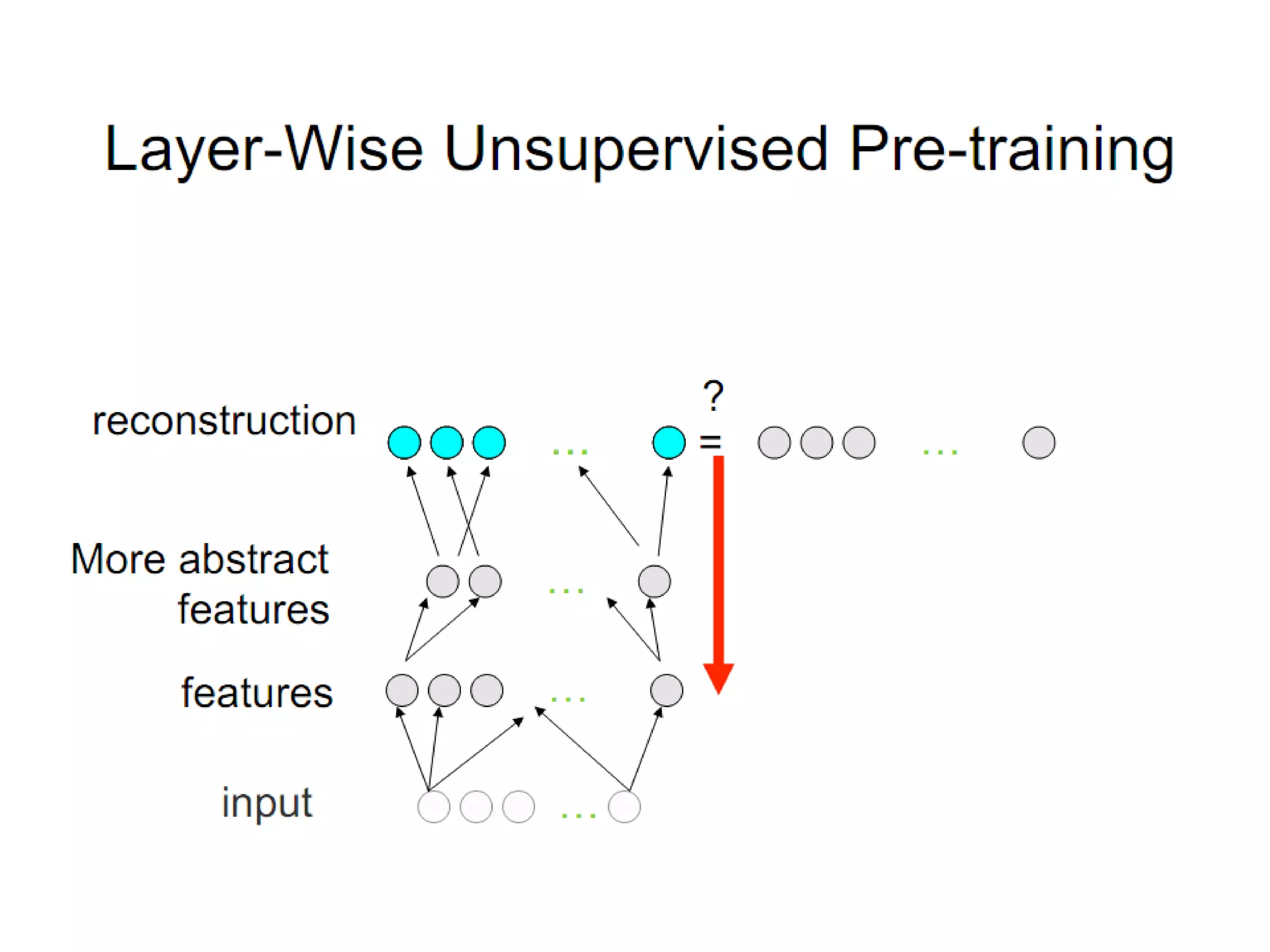

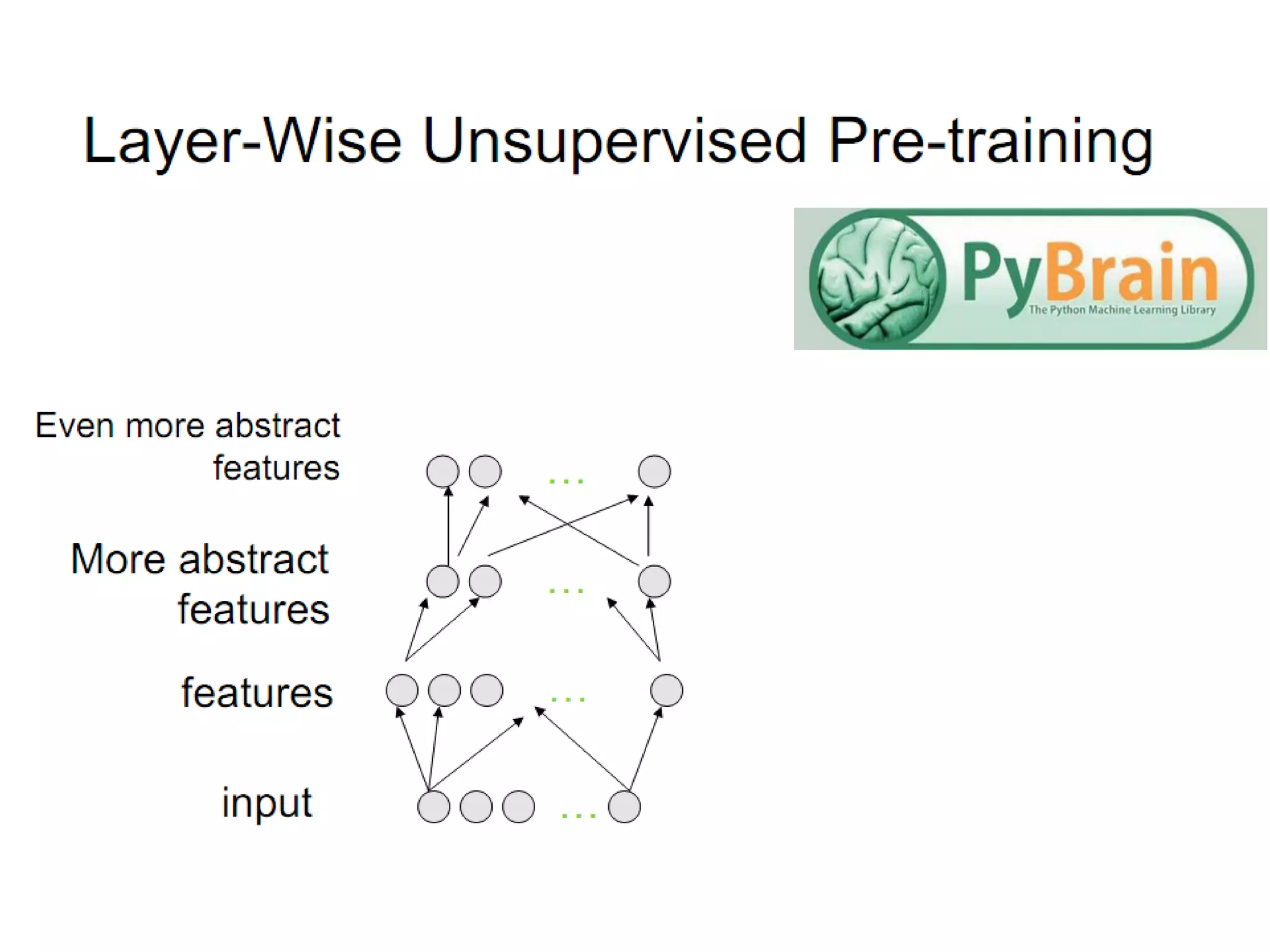

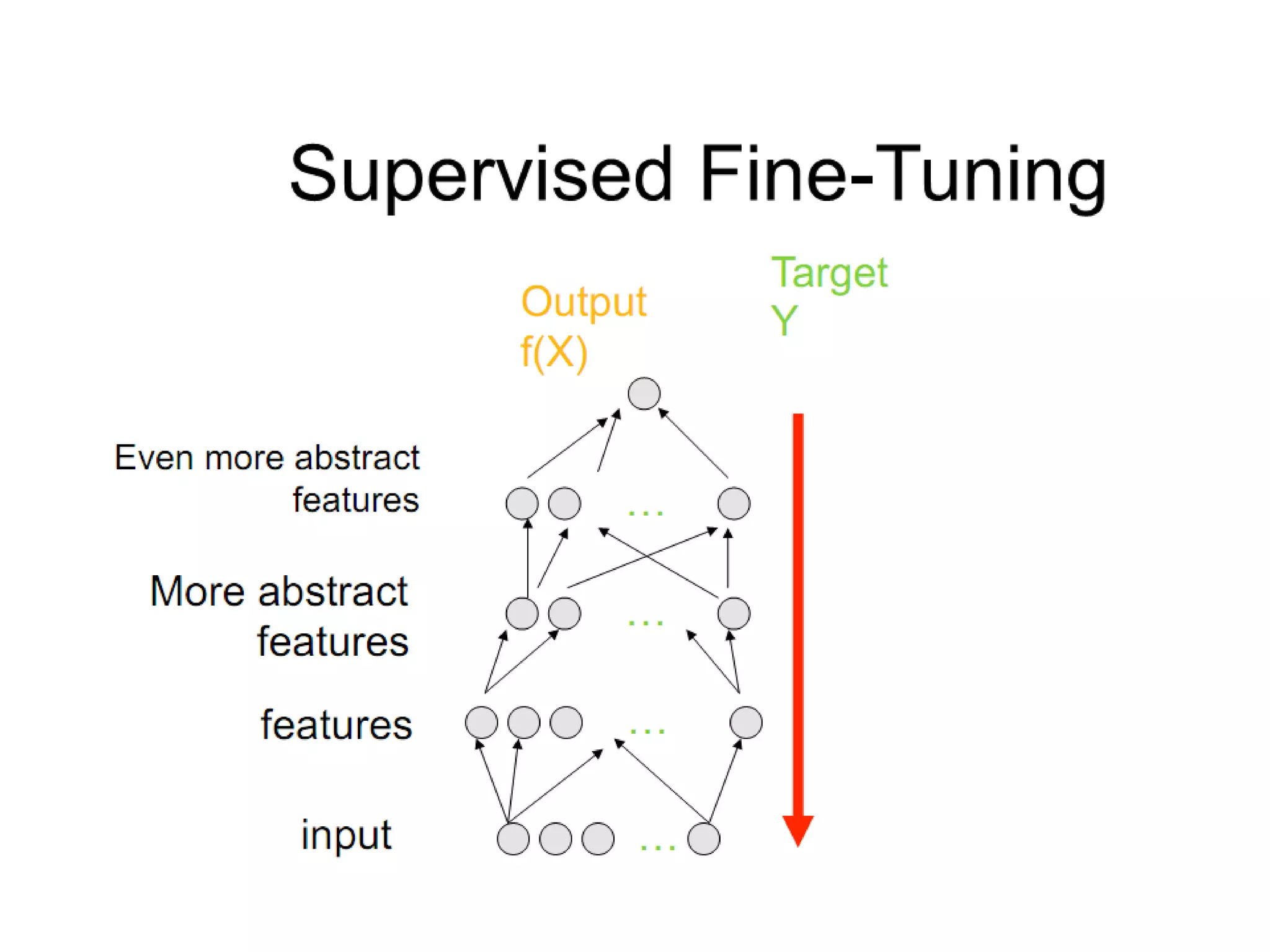

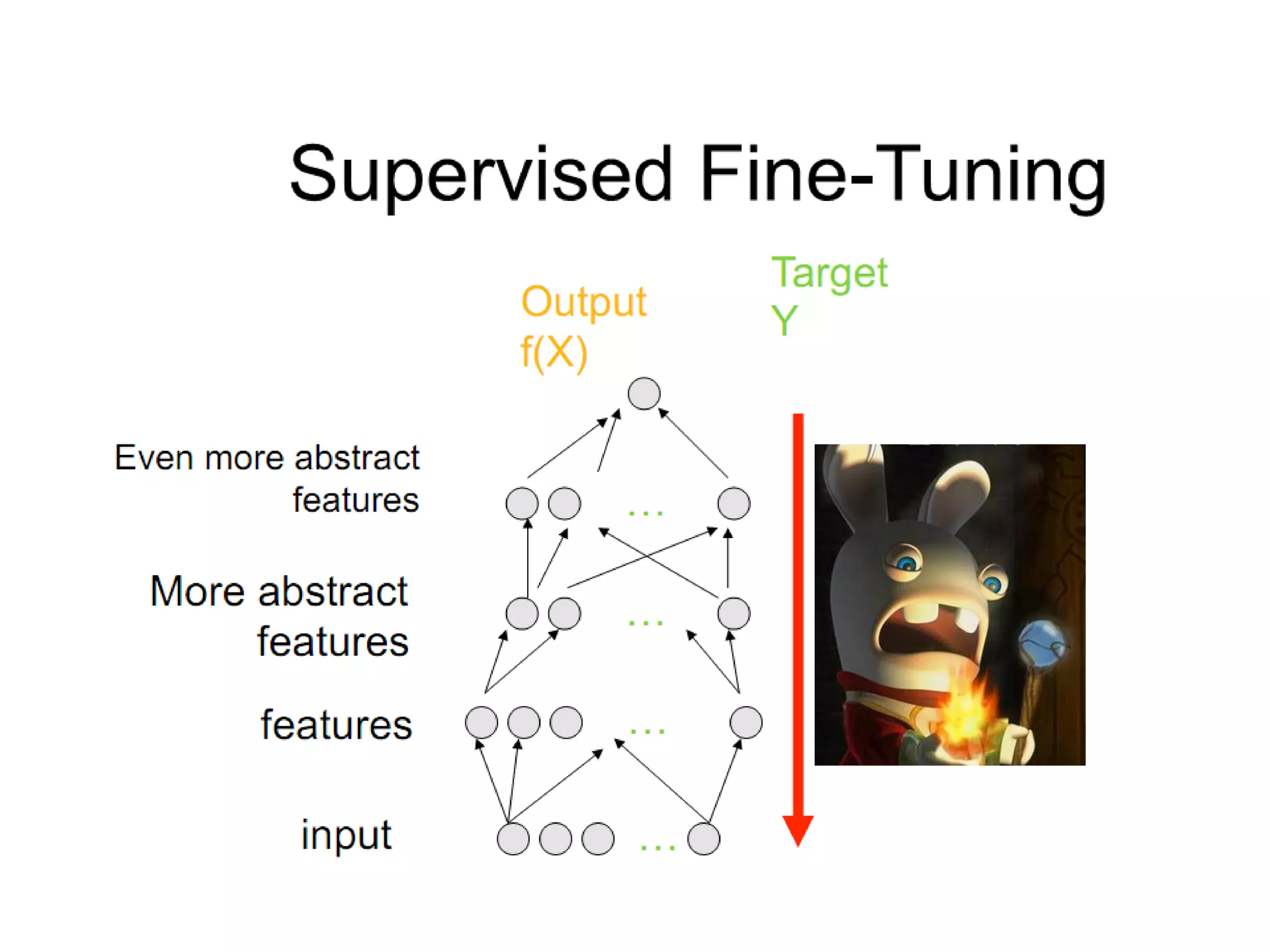

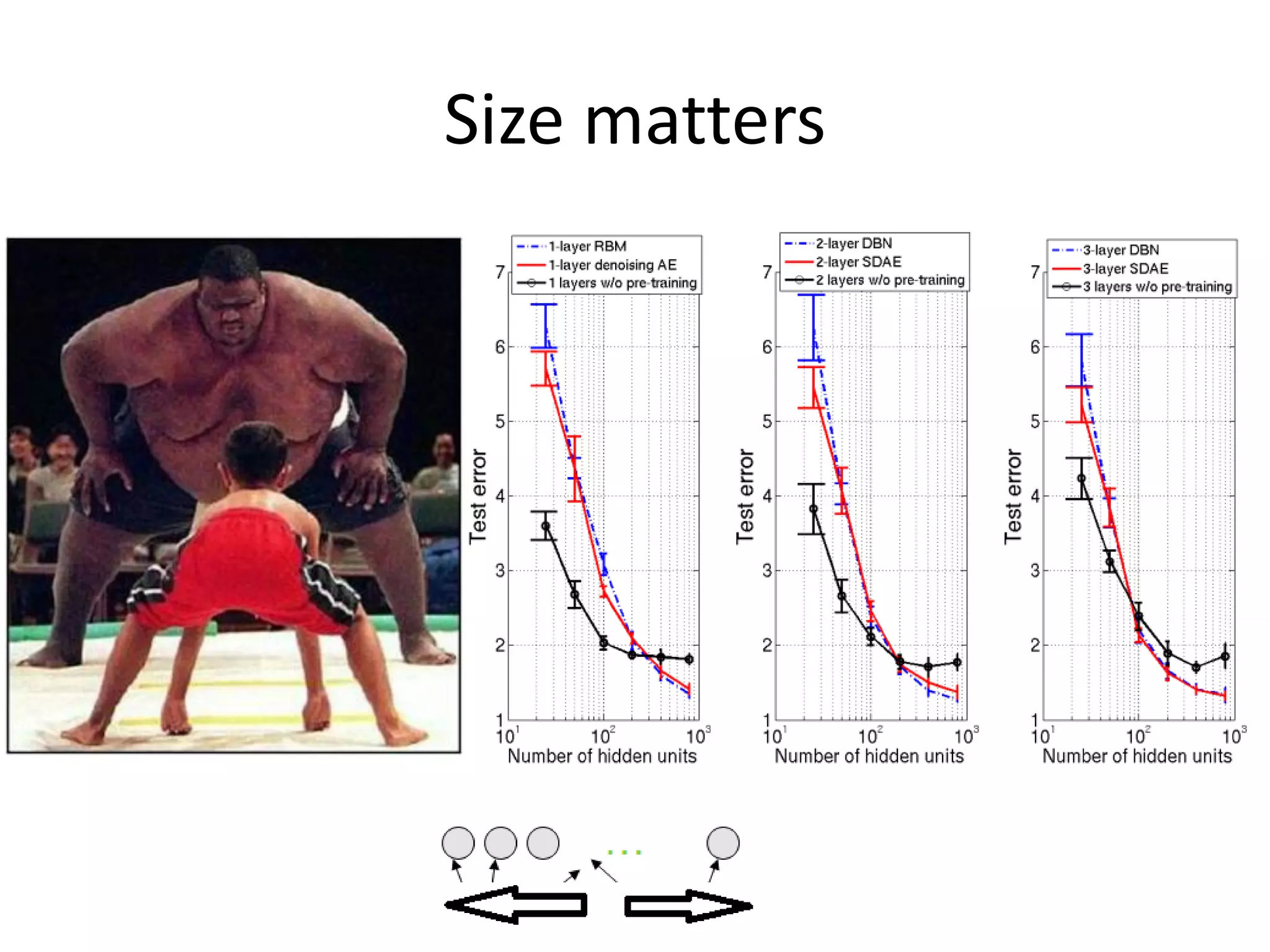

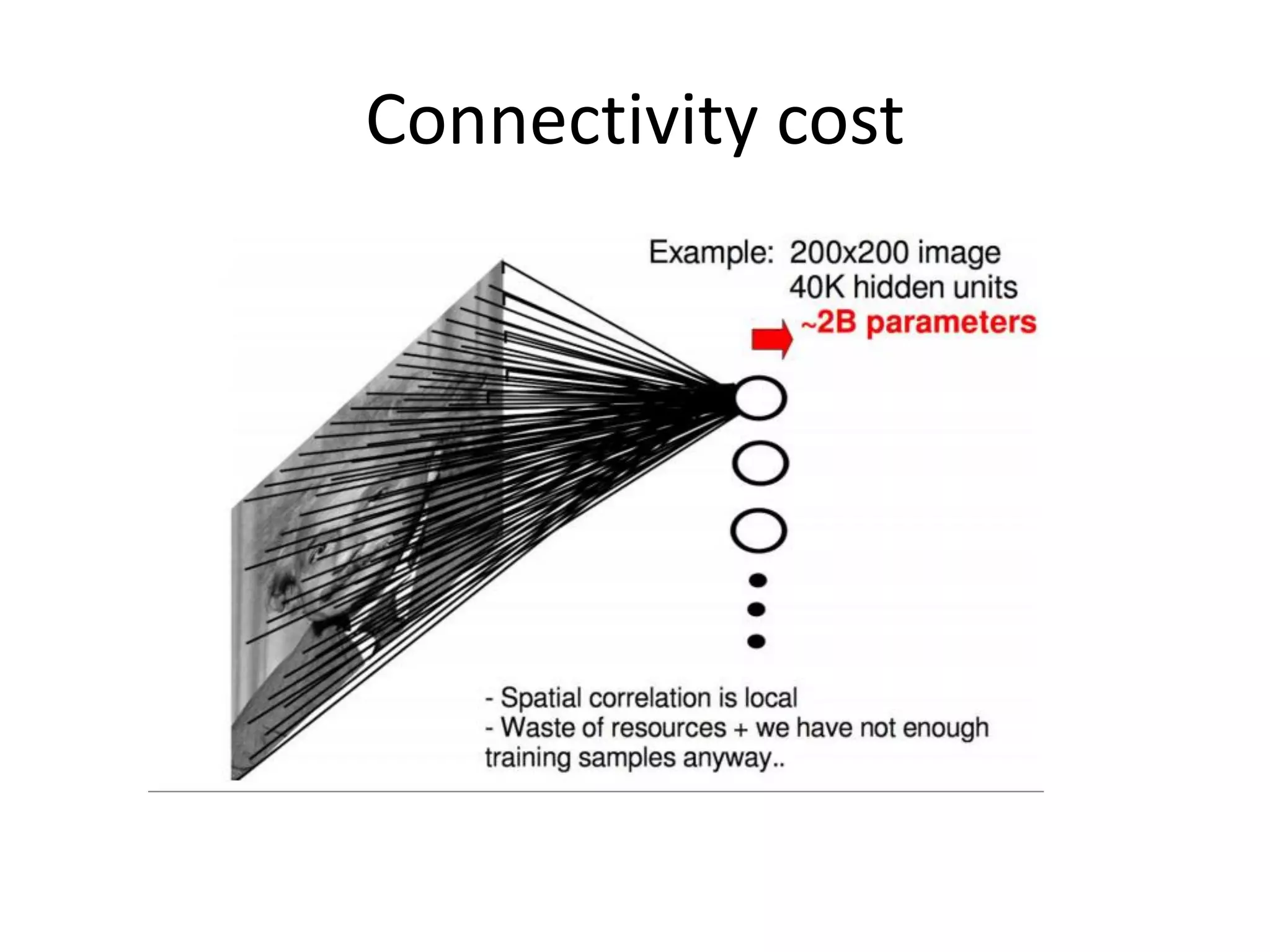

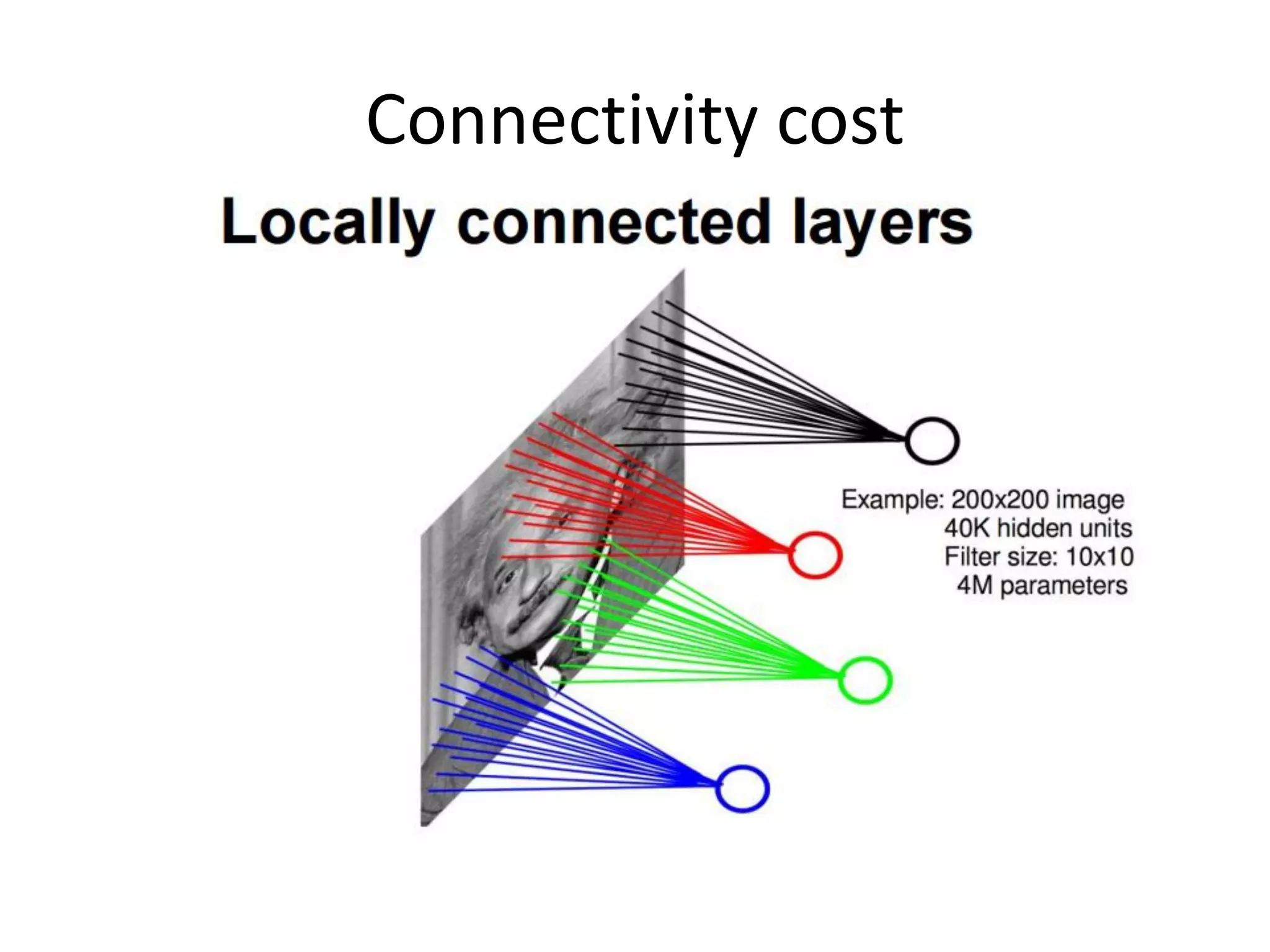

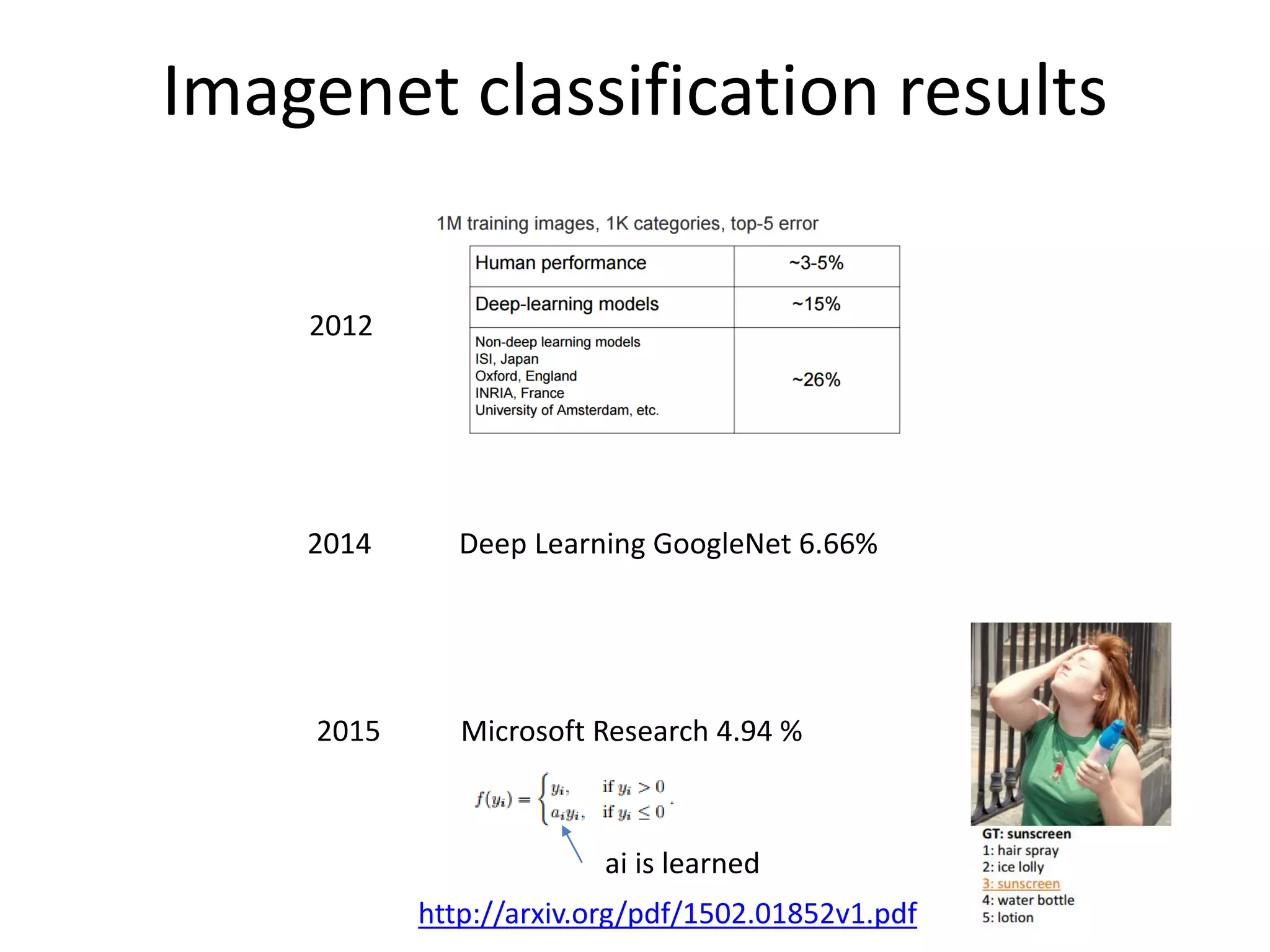

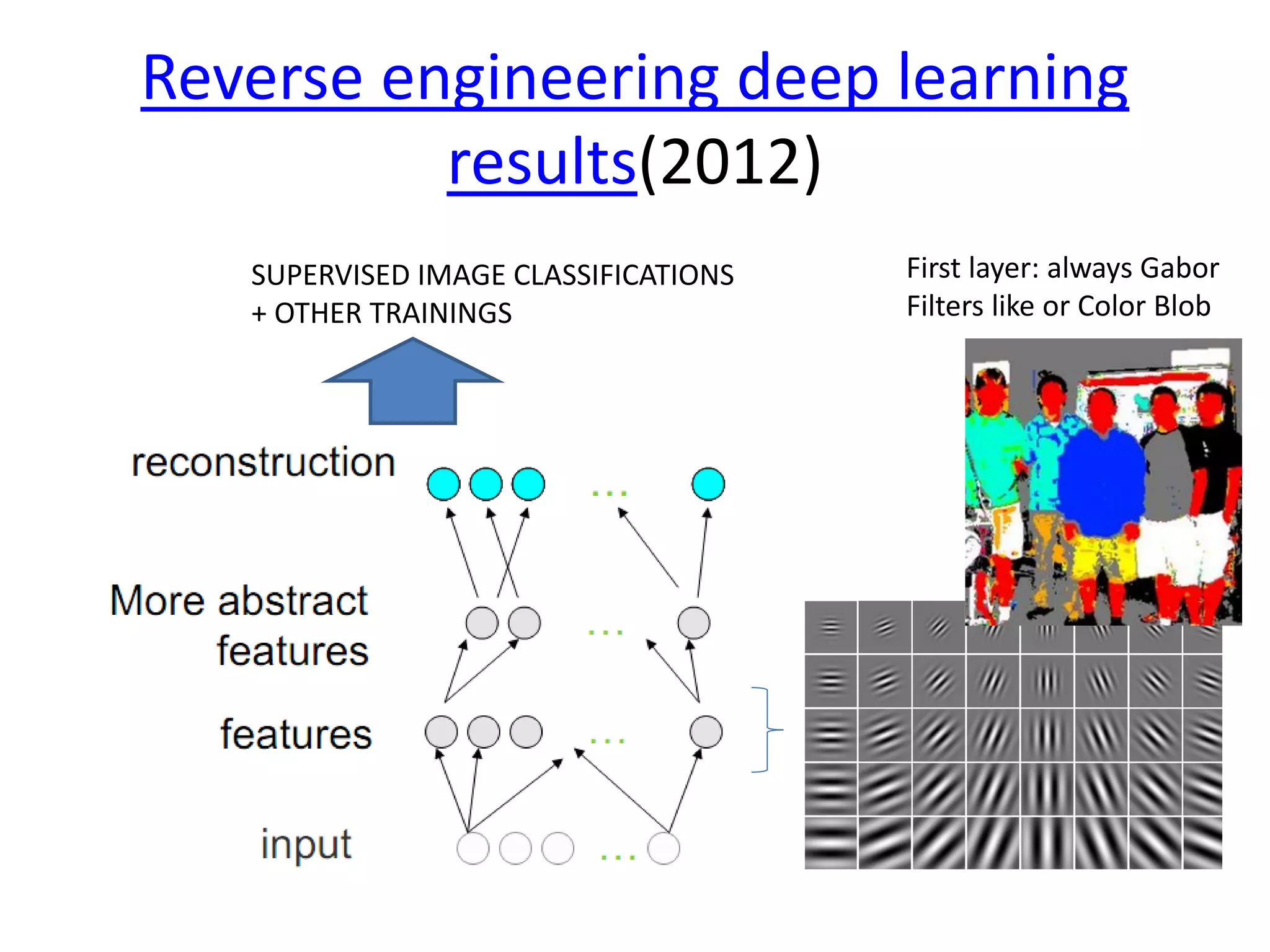

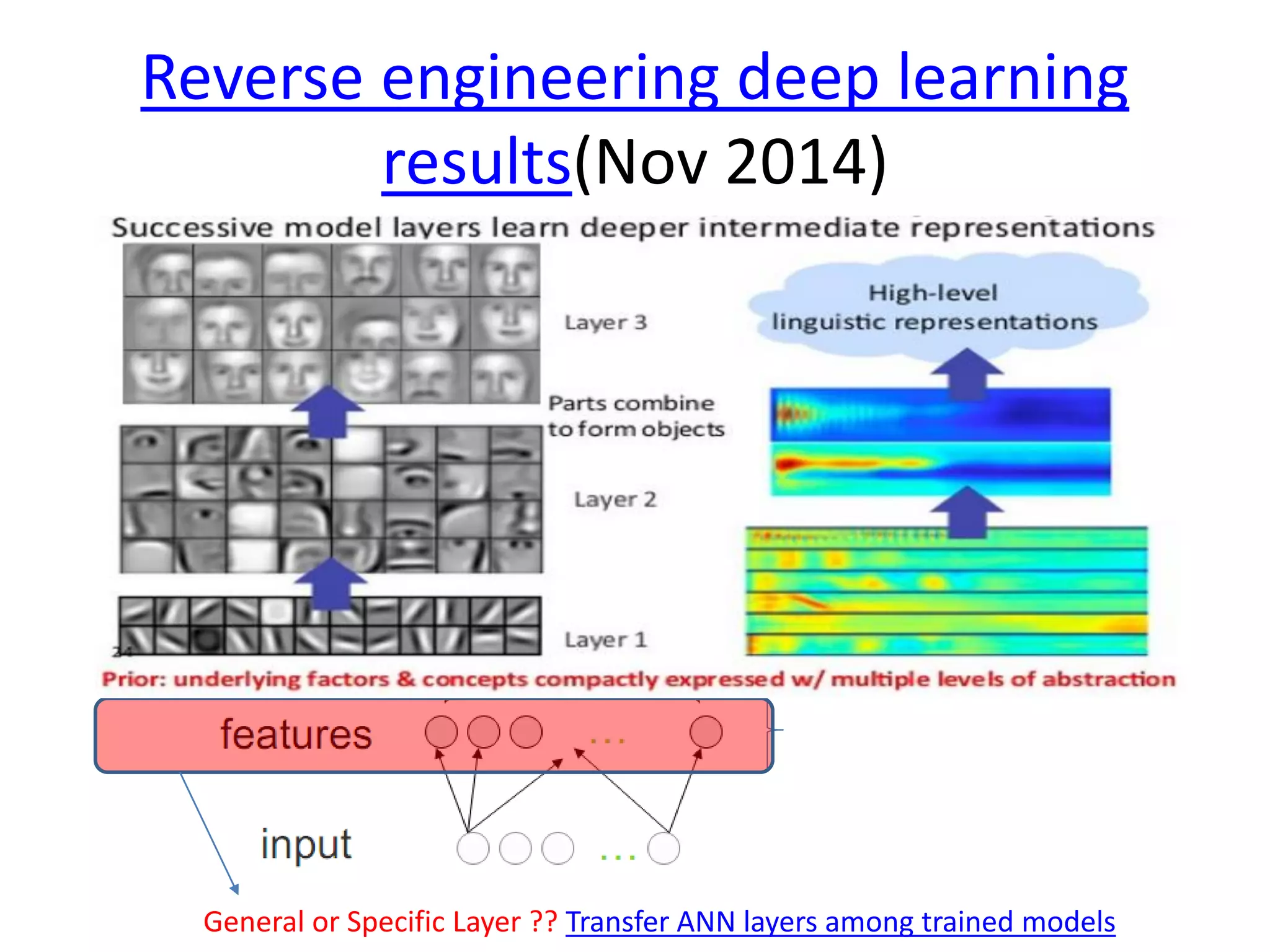

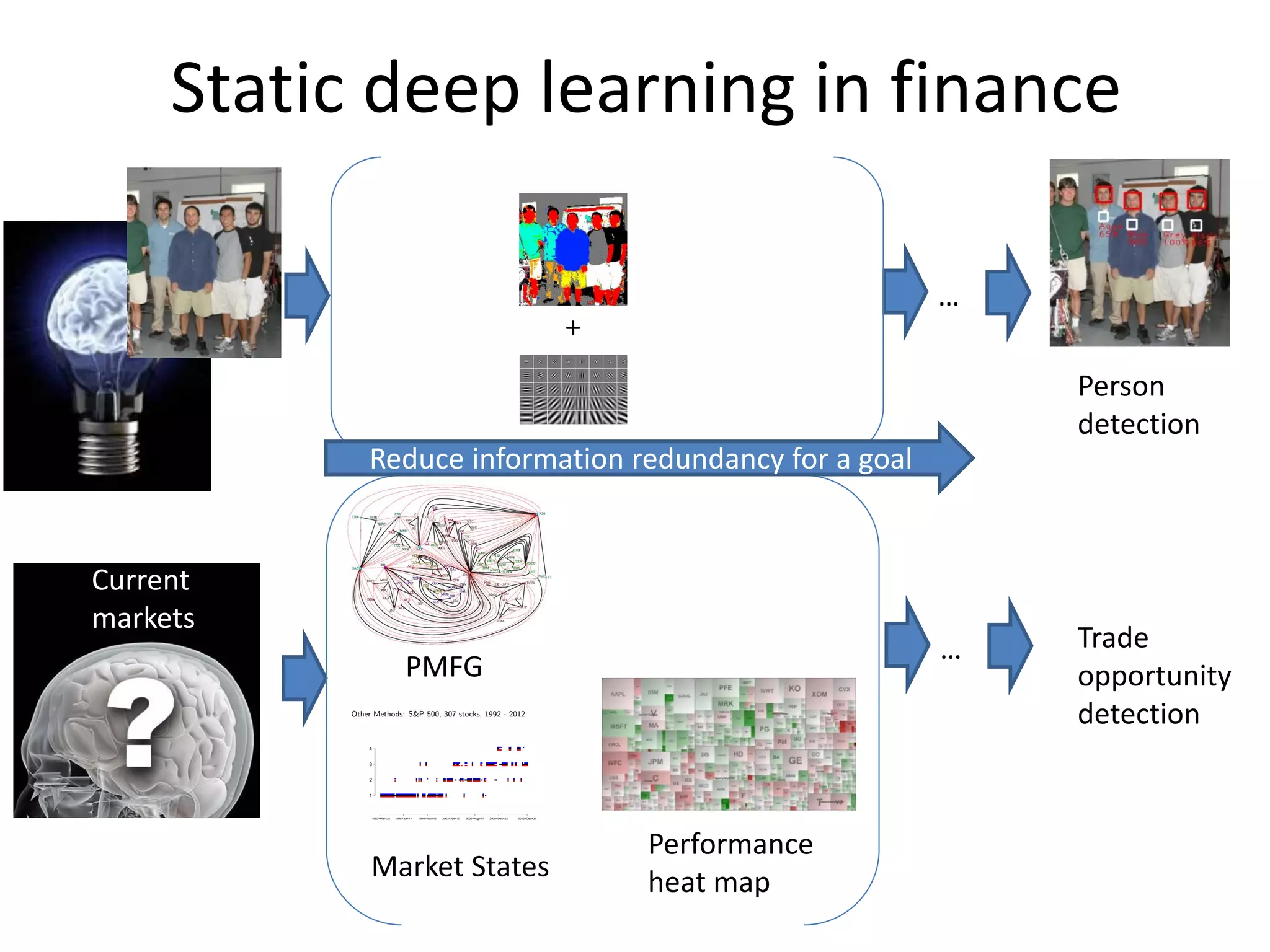

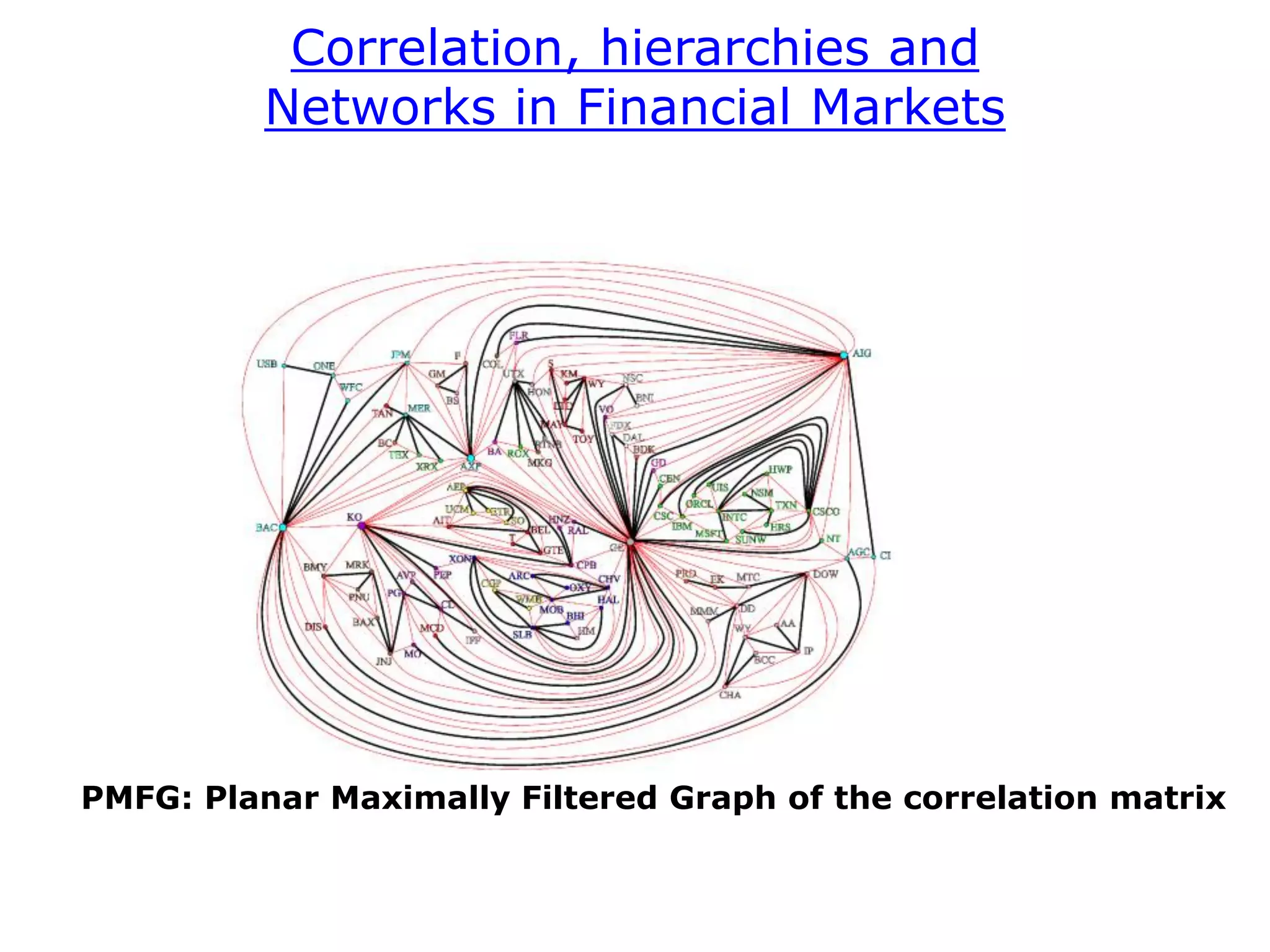

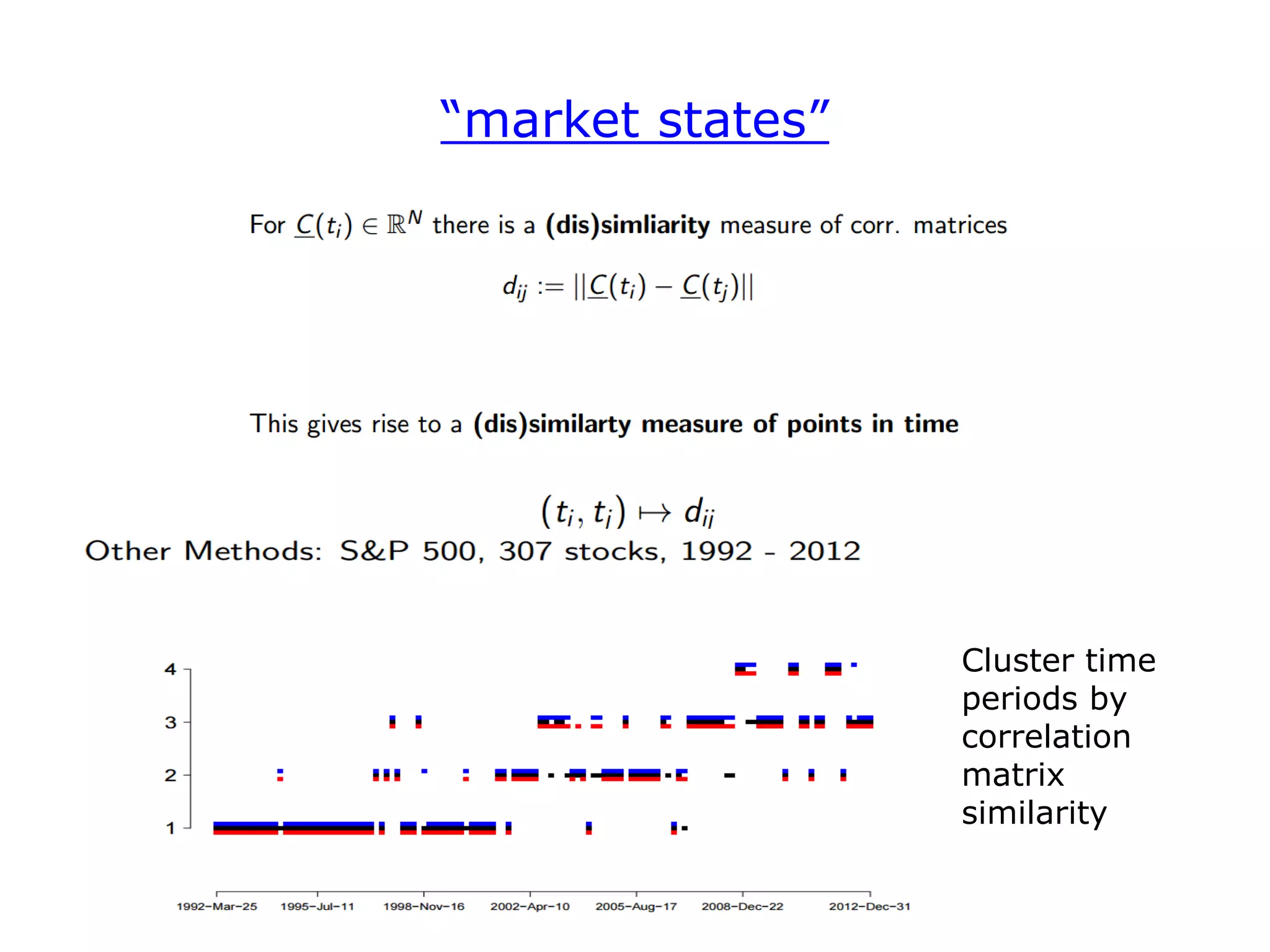

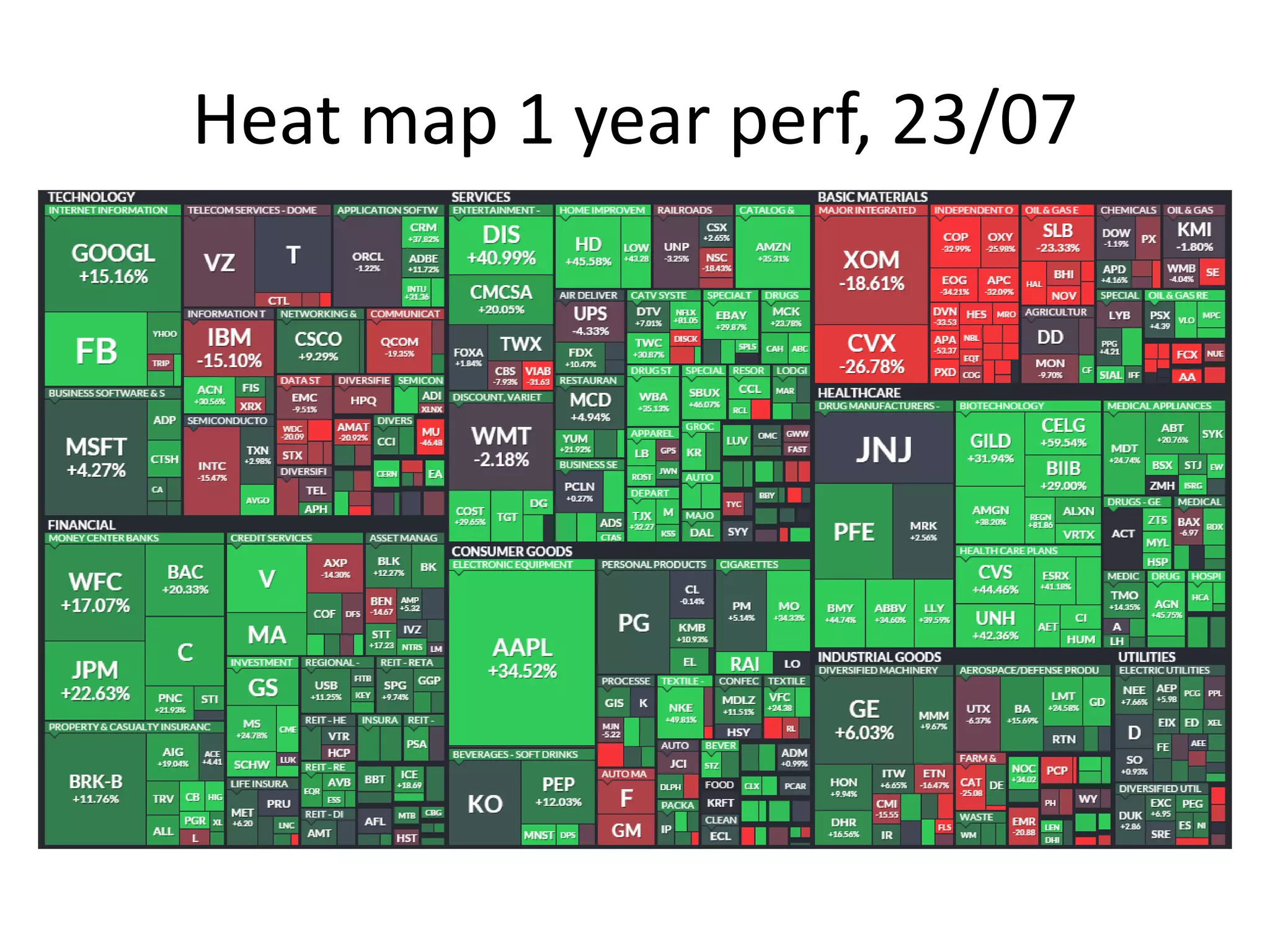

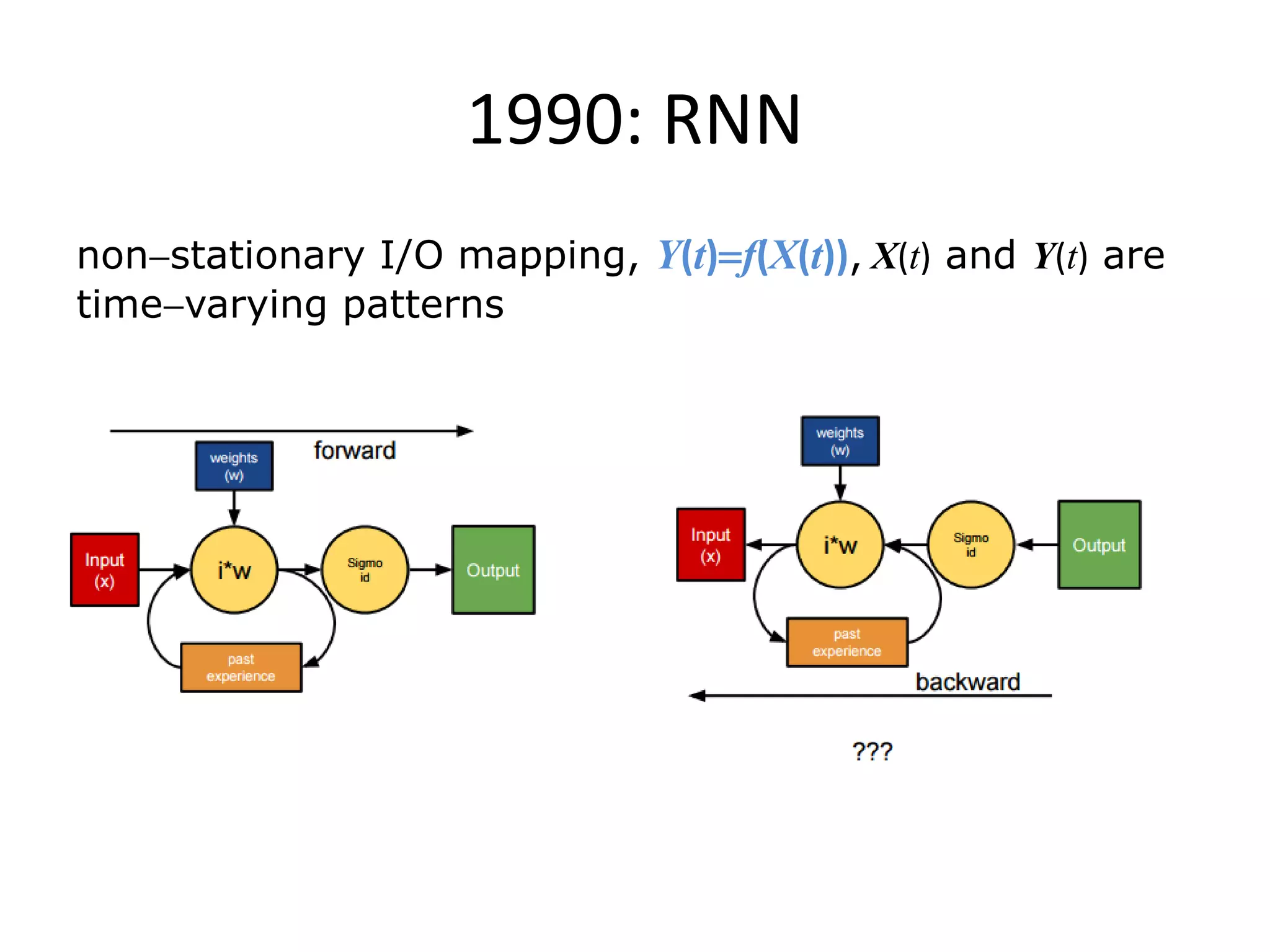

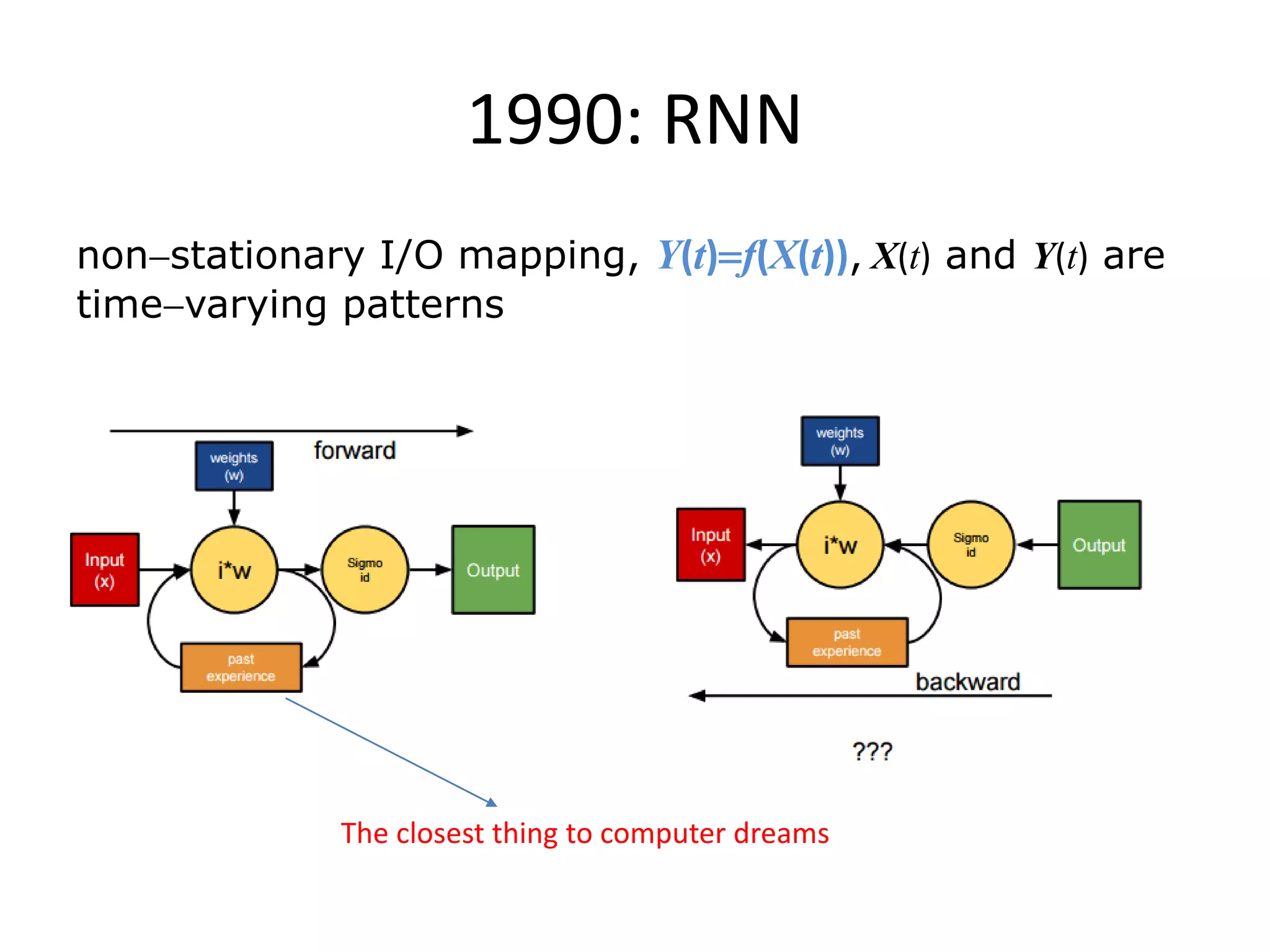

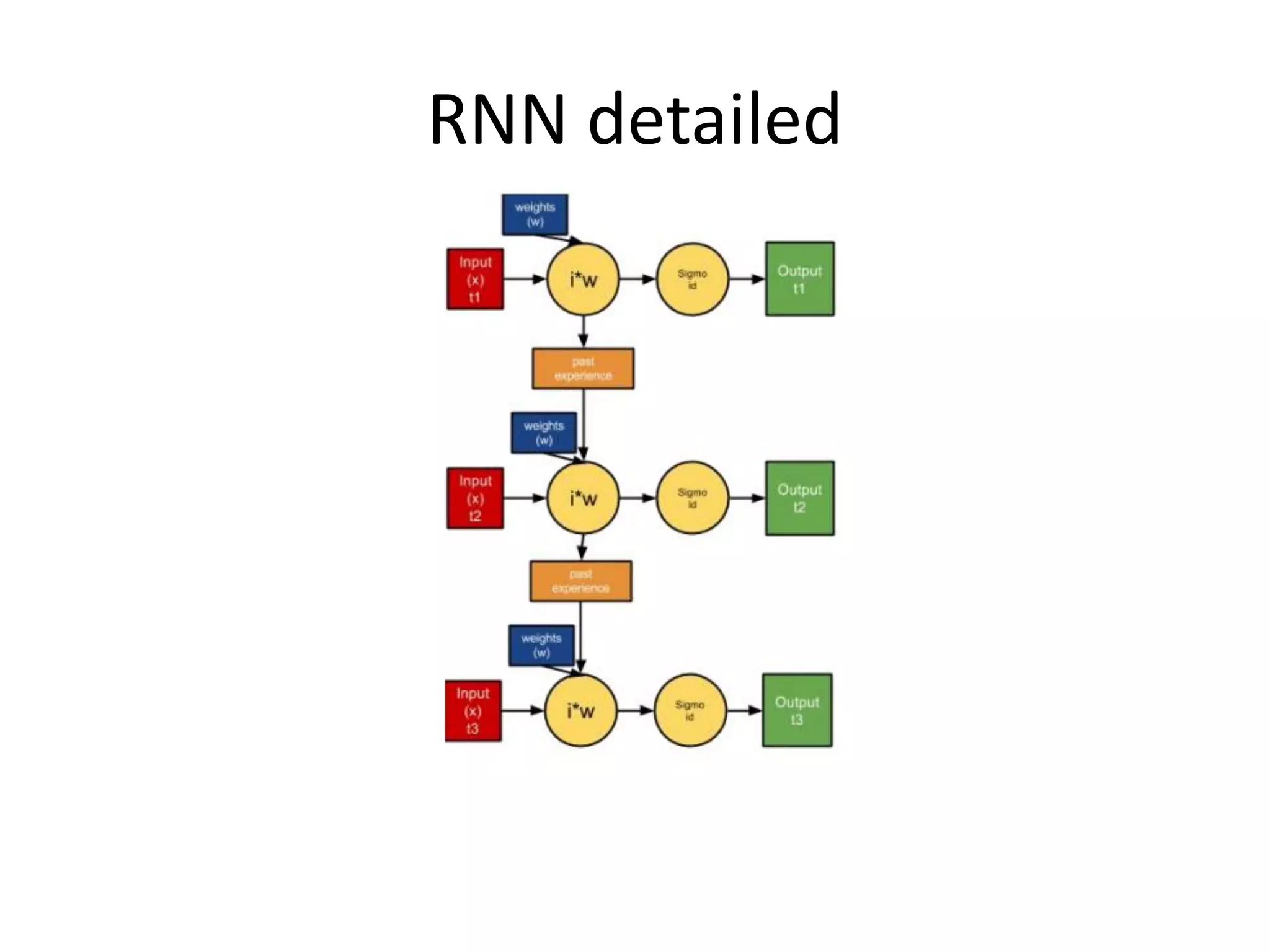

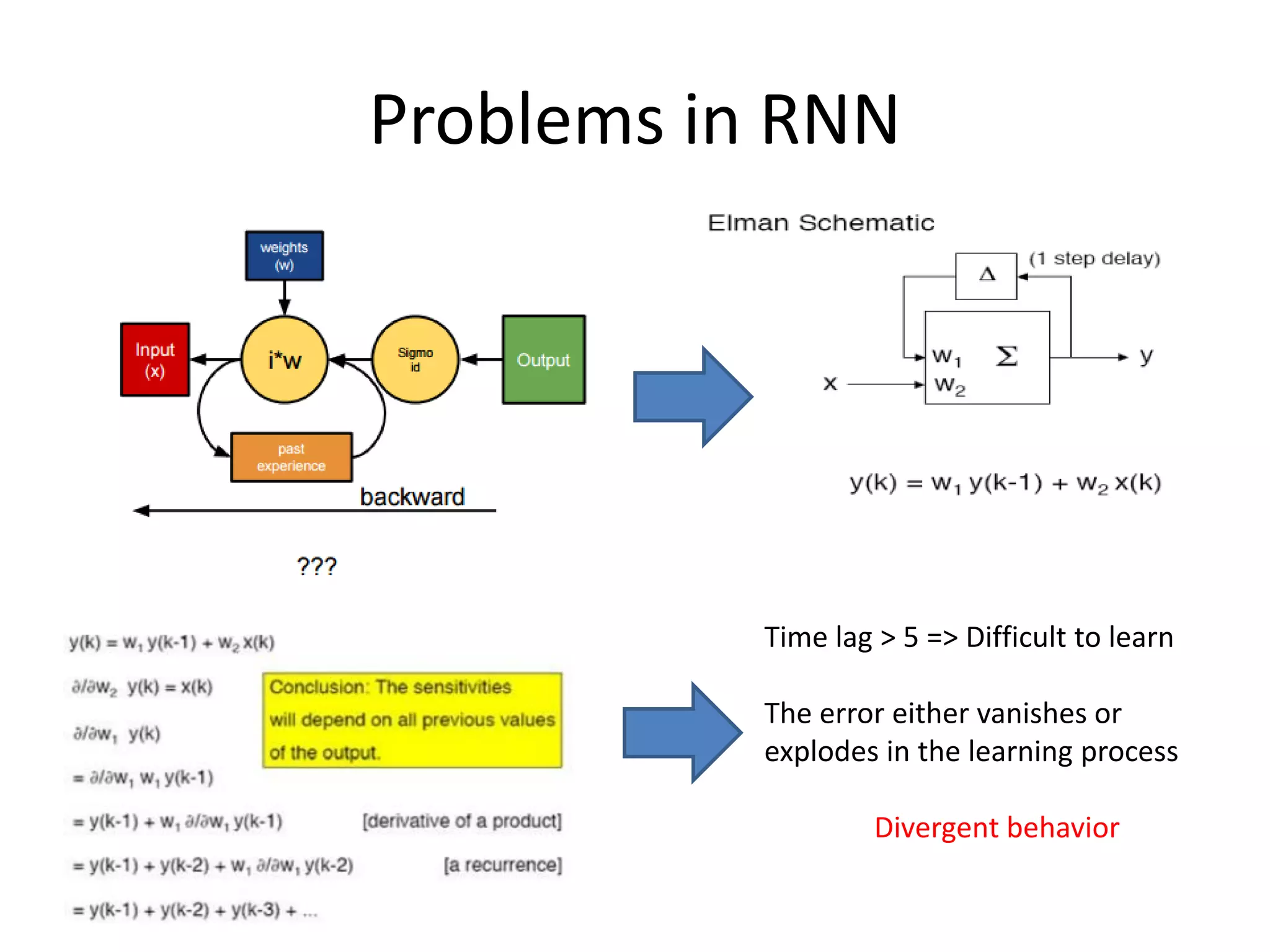

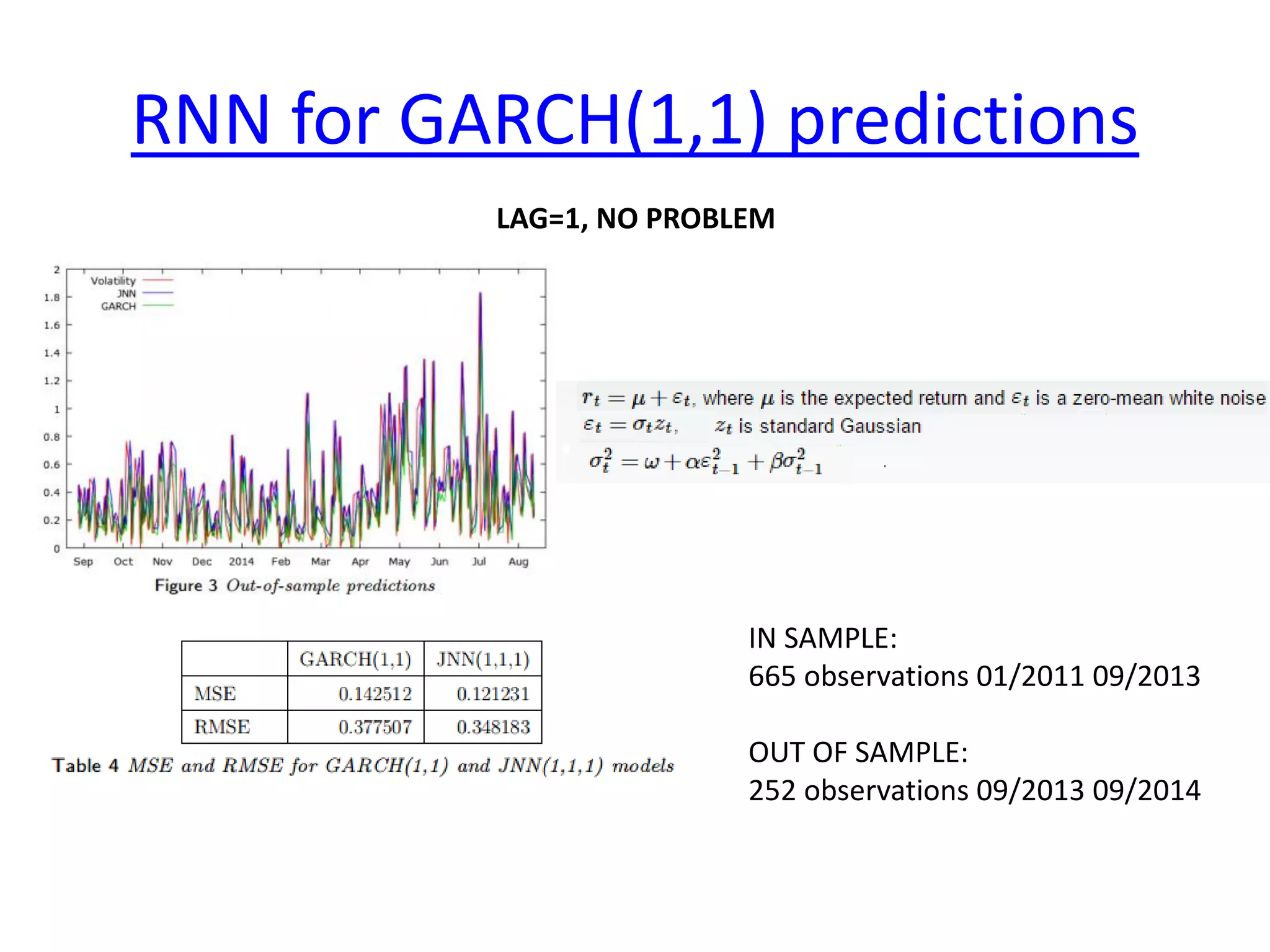

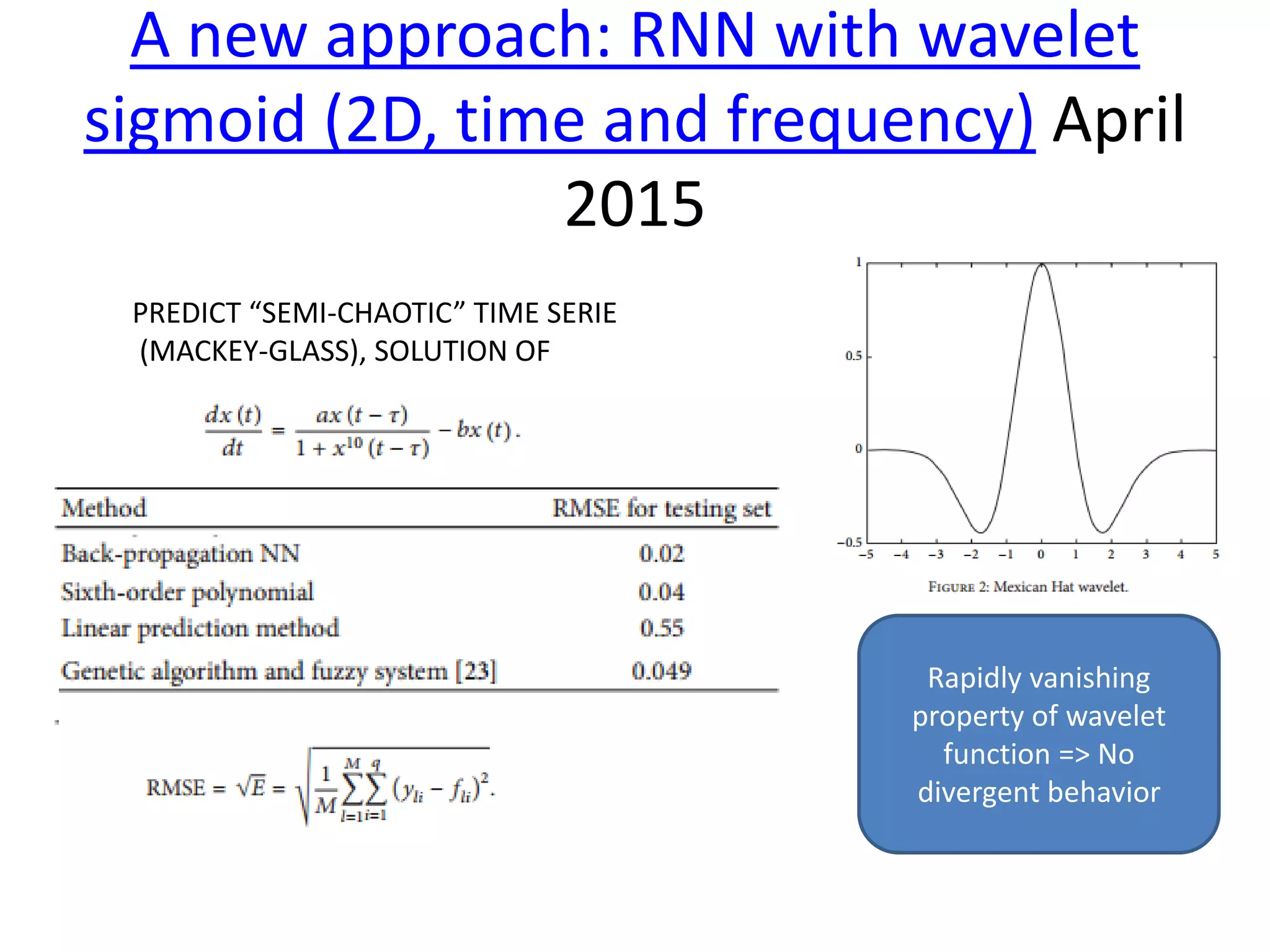

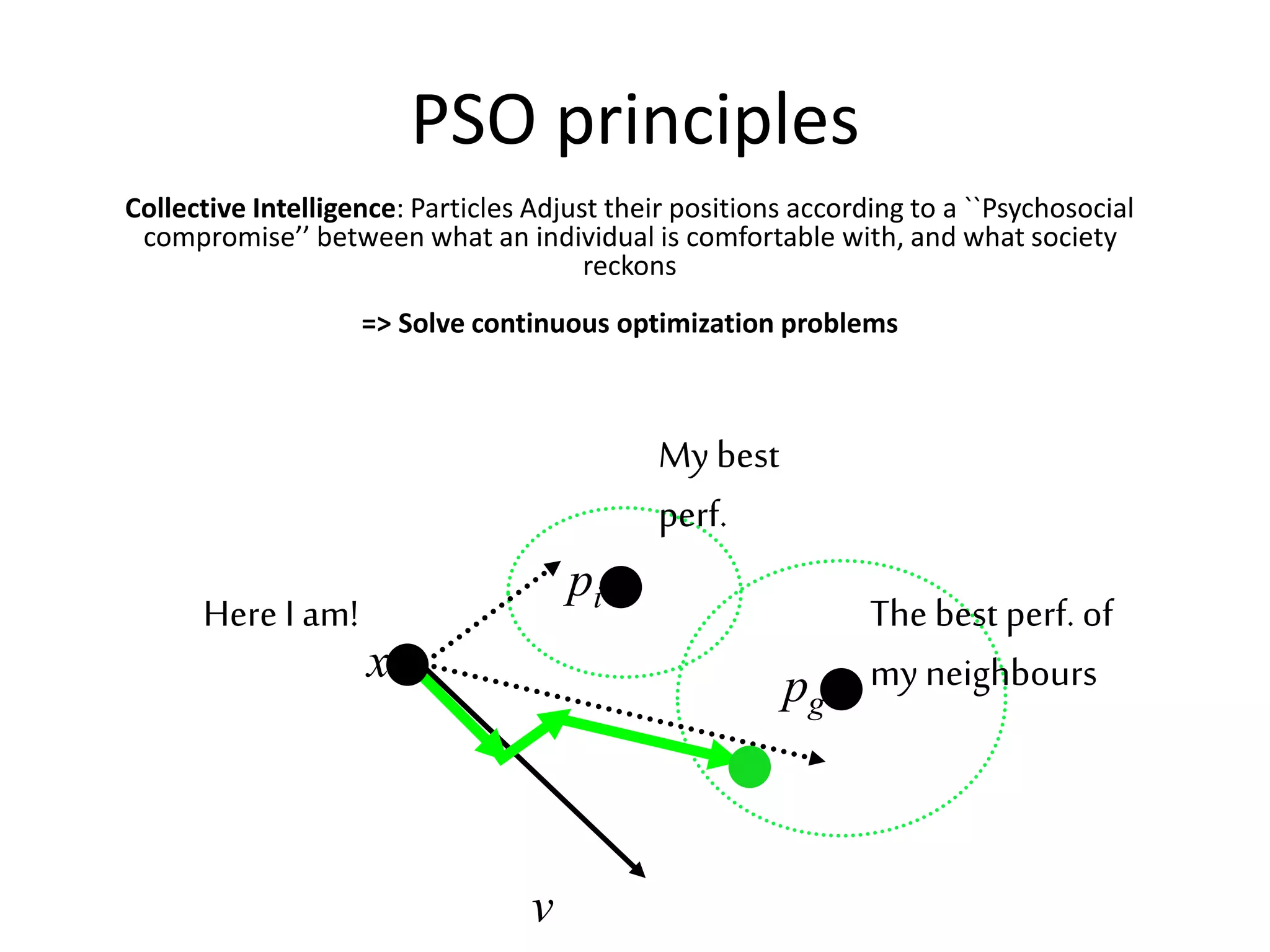

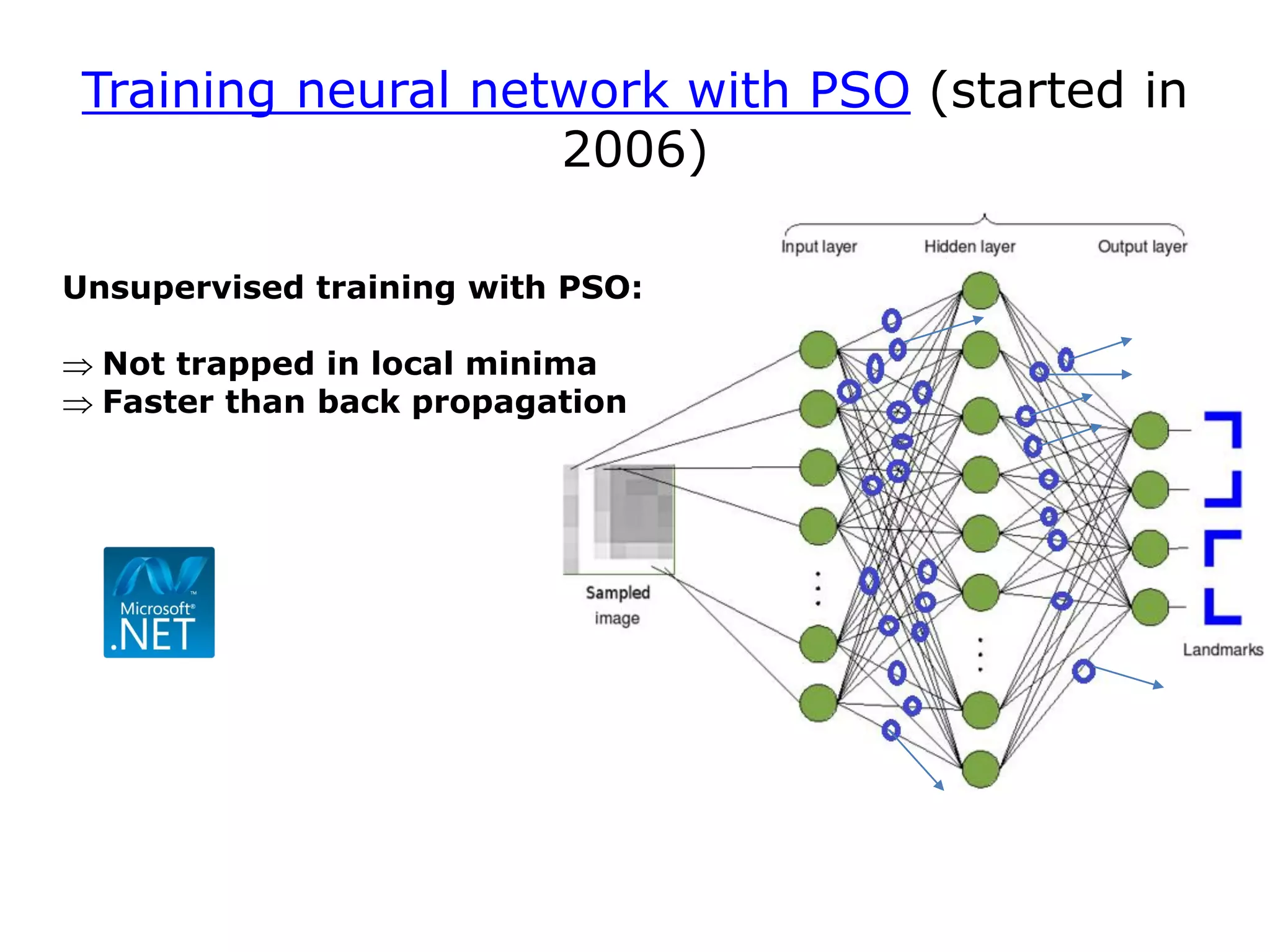

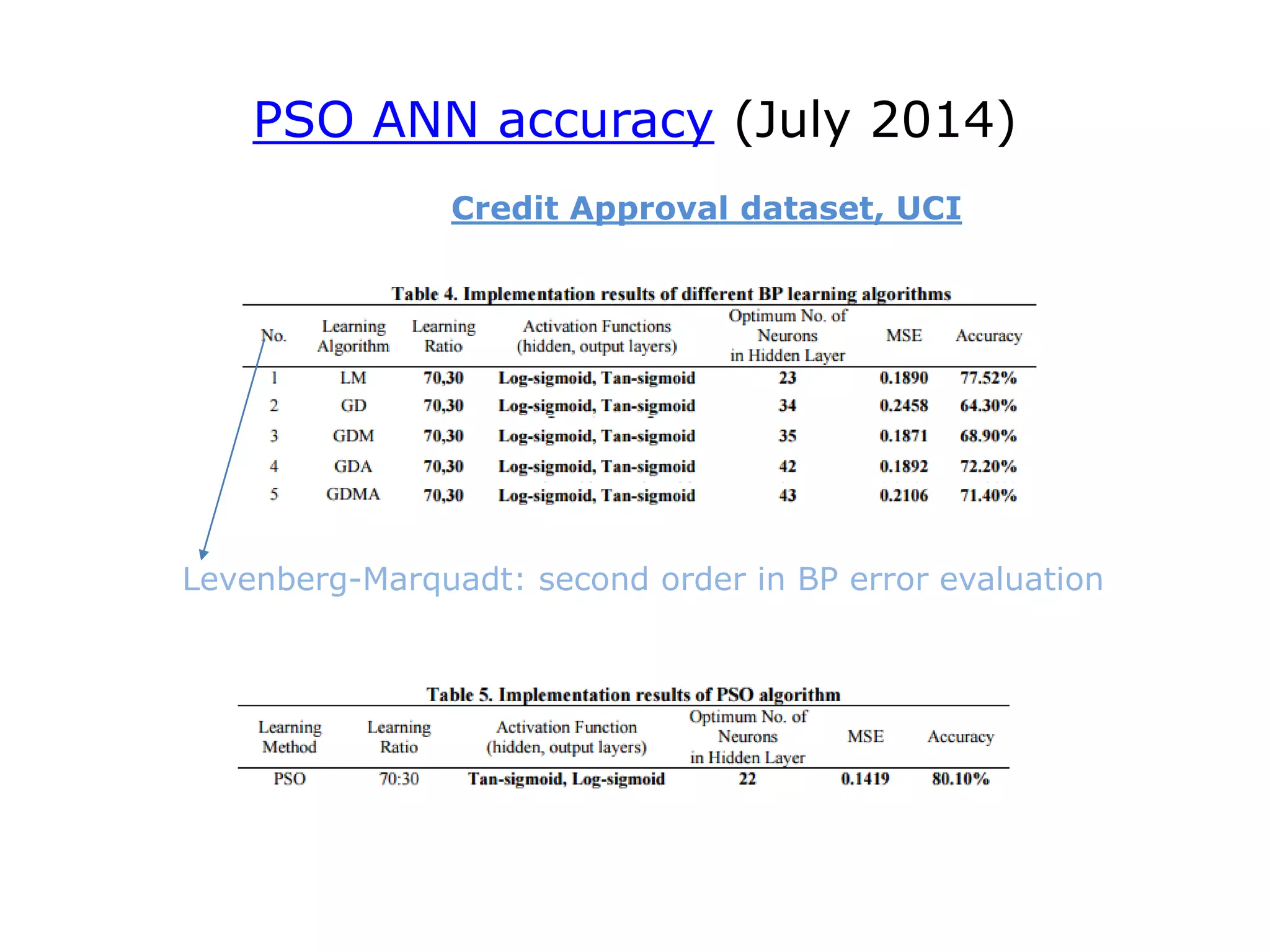

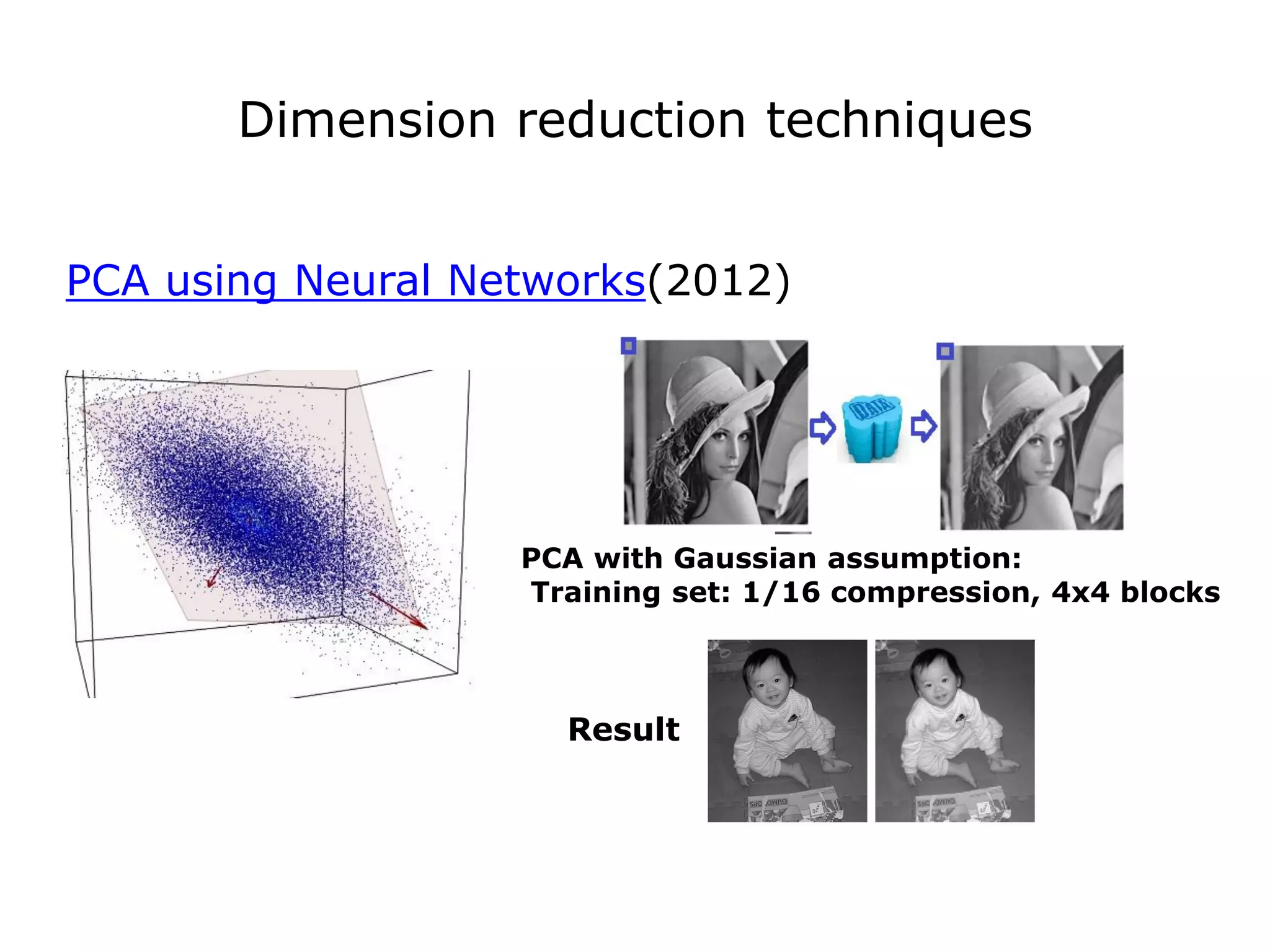

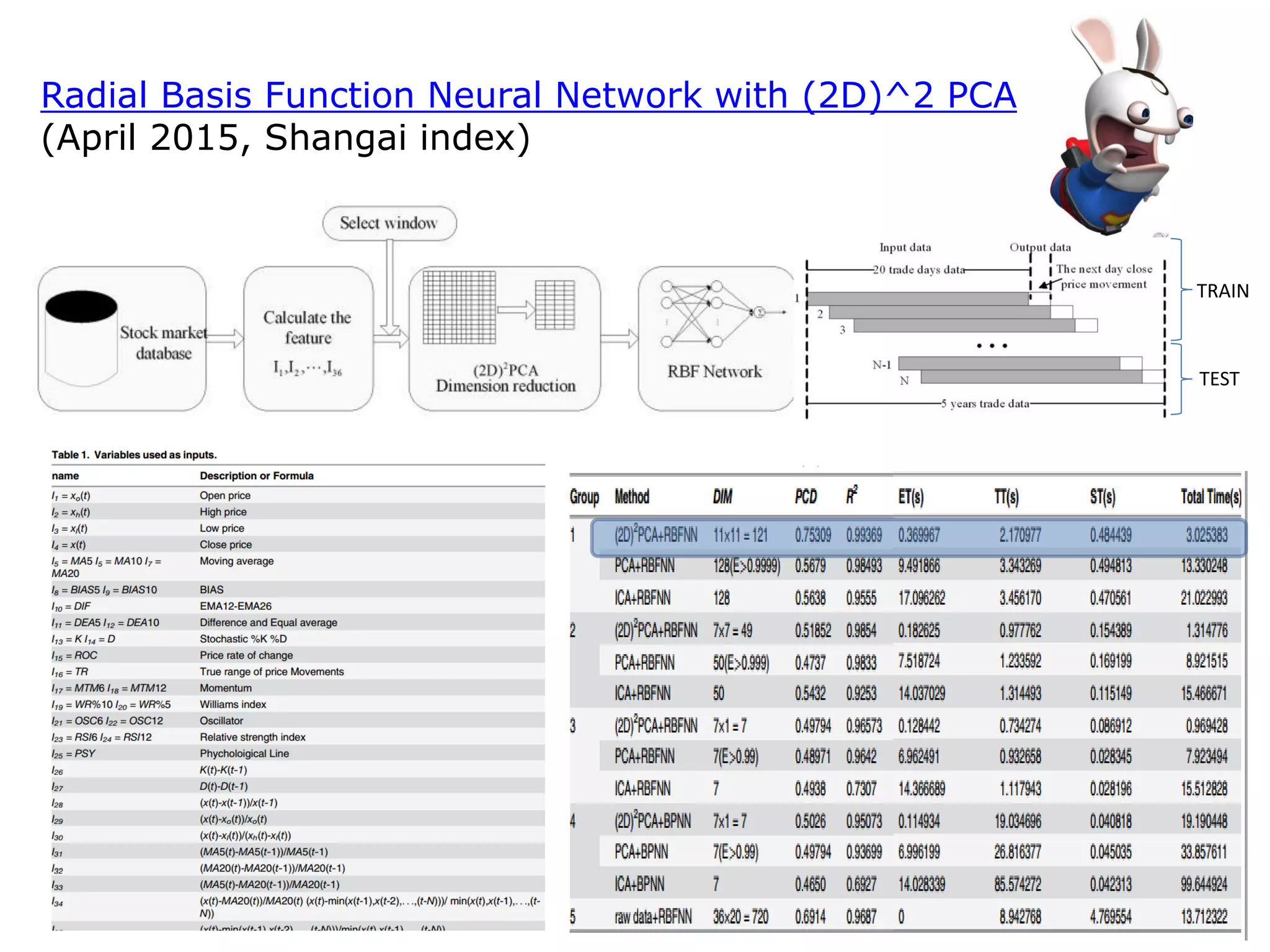

The document discusses the application of deep learning in finance, noting the growth and potential of deep learning across sectors such as medical imaging, robotics, and the military. It reviews key advancements in artificial neural networks, including historical developments and major breakthroughs, alongside their applications in trading systems and data analysis. It also covers challenges in deep learning, such as slow convergence and the difficulty of training deep networks, and presents alternative approaches such as particle swarm optimization for improving neural network training.
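
As a rough illustration of how particle swarm optimization can stand in for gradient-based training, the sketch below fits a tiny one-hidden-layer network by treating each particle as a flat vector of weights and scoring it by mean squared error. Everything here is an assumption made for illustration and is not taken from the slides: the function names (`pso_train`, `forward`, `mse`), the network shape, and the inertia and acceleration coefficients.

```python
import numpy as np

def unpack(w, n_in, n_hidden):
    """Split a flat parameter vector into the pieces of a one-hidden-layer net."""
    i = 0
    W1 = w[i:i + n_in * n_hidden].reshape(n_in, n_hidden)
    i += n_in * n_hidden
    b1 = w[i:i + n_hidden]
    i += n_hidden
    W2 = w[i:i + n_hidden]
    i += n_hidden
    b2 = w[i]
    return W1, b1, W2, b2

def forward(w, X, n_hidden):
    """tanh hidden layer followed by a single sigmoid output unit."""
    W1, b1, W2, b2 = unpack(w, X.shape[1], n_hidden)
    h = np.tanh(X @ W1 + b1)
    z = np.clip(h @ W2 + b2, -50.0, 50.0)  # clip to avoid overflow in exp
    return 1.0 / (1.0 + np.exp(-z))

def mse(w, X, y, n_hidden):
    return float(np.mean((forward(w, X, n_hidden) - y) ** 2))

def pso_train(X, y, n_hidden=3, n_particles=30, n_iters=200,
              inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO over the network weights (hypothetical settings).

    Returns the best weight vector found and the best loss after each step.
    """
    rng = np.random.default_rng(seed)
    dim = X.shape[1] * n_hidden + n_hidden + n_hidden + 1
    pos = rng.uniform(-1.0, 1.0, (n_particles, dim))
    vel = np.zeros((n_particles, dim))

    # Each particle remembers its own best position; the swarm shares one best.
    pbest = pos.copy()
    pbest_loss = np.array([mse(p, X, y, n_hidden) for p in pos])
    g = int(np.argmin(pbest_loss))
    gbest, gbest_loss = pbest[g].copy(), pbest_loss[g]
    history = [gbest_loss]

    for _ in range(n_iters):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Velocity blends momentum, pull toward personal best, pull toward swarm best.
        vel = inertia * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = pos + vel

        loss = np.array([mse(p, X, y, n_hidden) for p in pos])
        improved = loss < pbest_loss
        pbest[improved] = pos[improved]
        pbest_loss[improved] = loss[improved]

        g = int(np.argmin(pbest_loss))
        if pbest_loss[g] < gbest_loss:
            gbest, gbest_loss = pbest[g].copy(), pbest_loss[g]
        history.append(gbest_loss)

    return gbest, history

if __name__ == "__main__":
    # Toy XOR data, used only to exercise the sketch.
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([0, 1, 1, 0], dtype=float)
    weights, history = pso_train(X, y)
    print("initial best loss:", history[0])
    print("final best loss:  ", history[-1])
```

Because the swarm only ever replaces its shared best position with a strictly better one, the recorded best loss never increases from one iteration to the next. No gradients are computed, which is why this kind of search is sometimes considered when backpropagation converges slowly, though it scales poorly as the number of weights grows.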