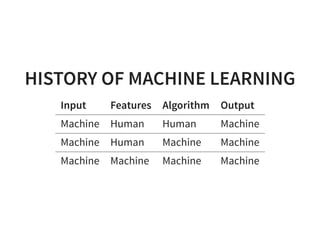

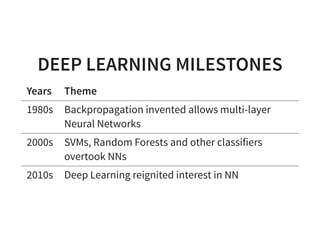

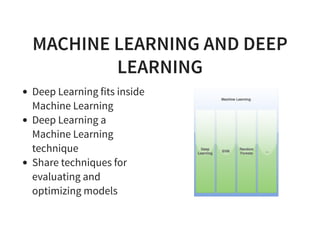

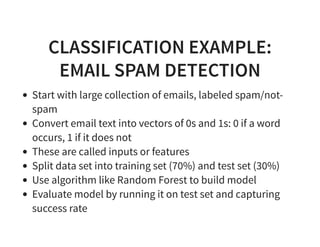

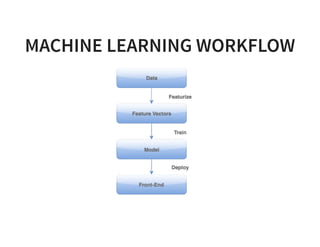

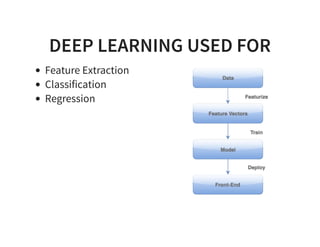

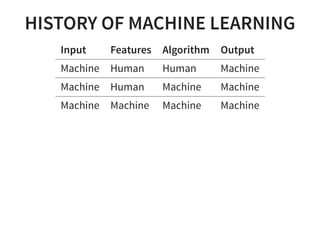

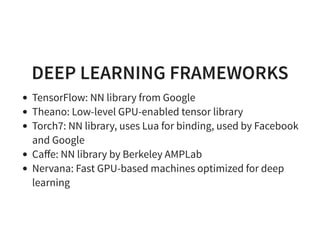

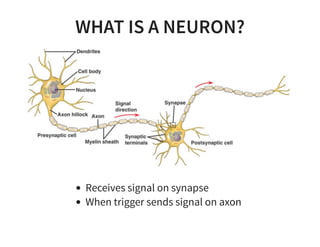

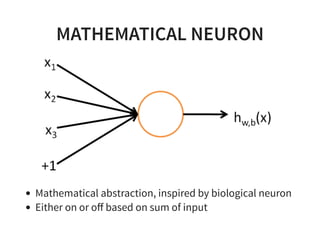

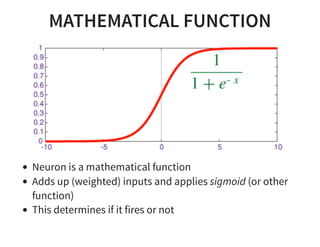

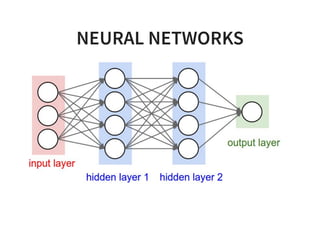

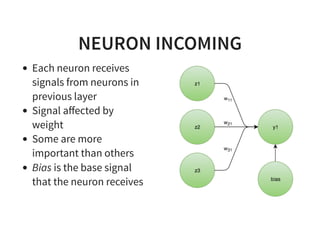

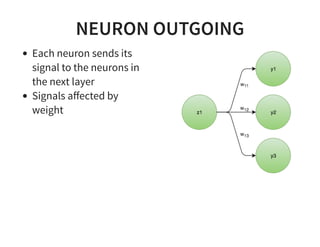

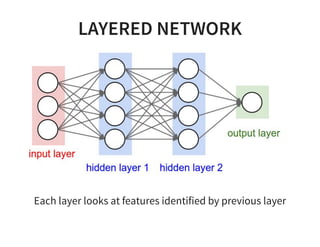

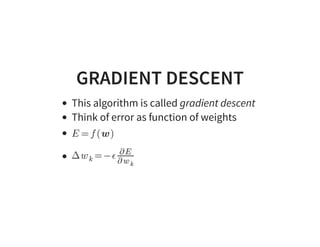

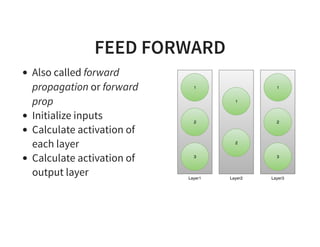

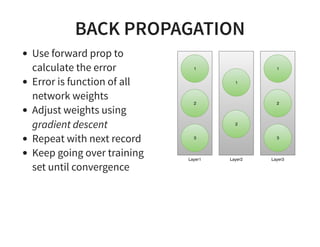

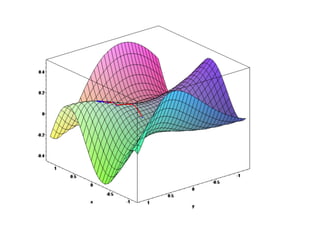

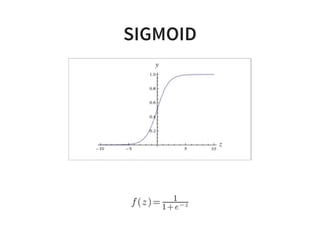

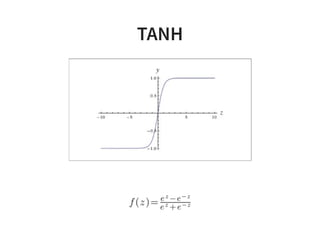

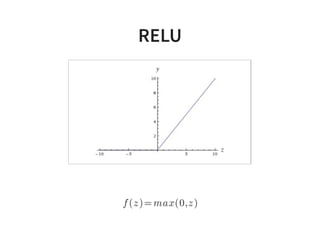

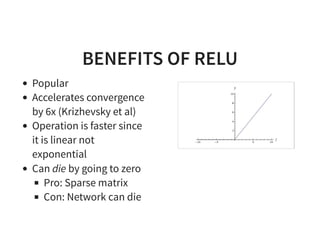

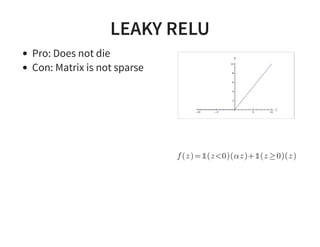

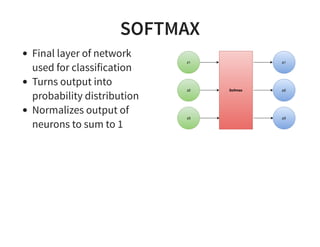

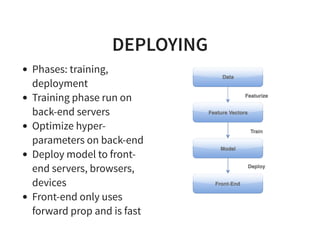

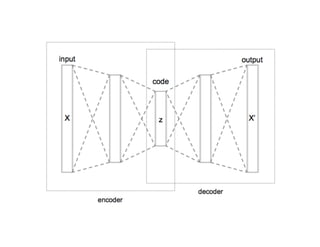

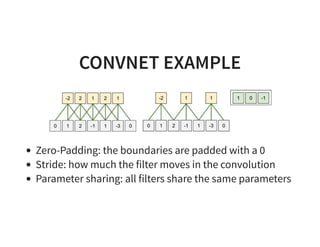

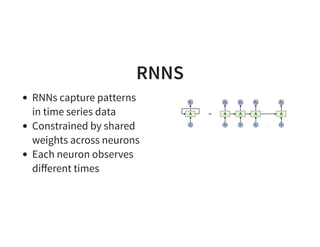

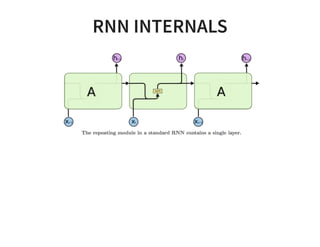

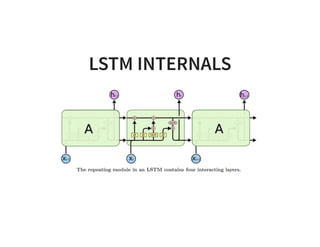

The document gives an overview of neural networks and deep learning and explains their importance in machine learning. It covers applications such as image recognition and tasks such as classification, regression, and clustering. It also traces the evolution of deep learning, discusses techniques for evaluating models, and surveys the frameworks and algorithms used in the field. Finally, it introduces more advanced topics: convolutional and recurrent neural networks, hyperparameter tuning, and the process of training deep learning models.