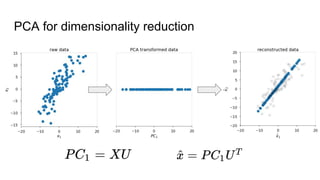

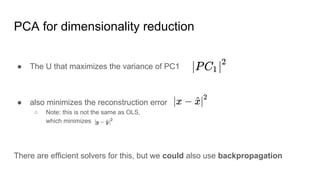

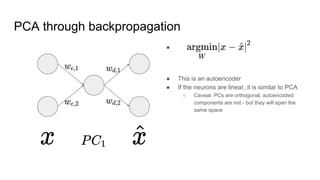

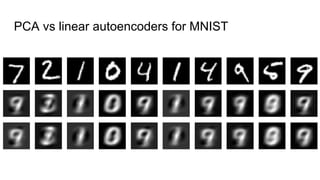

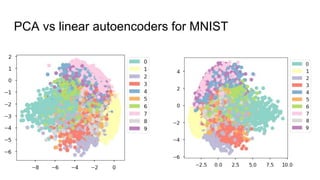

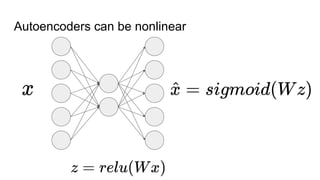

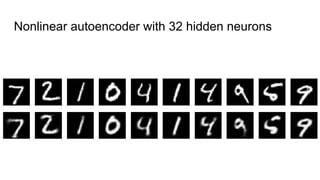

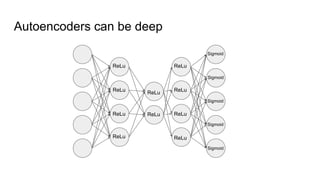

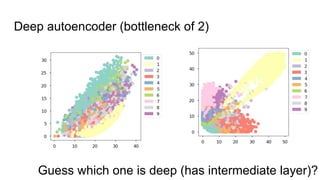

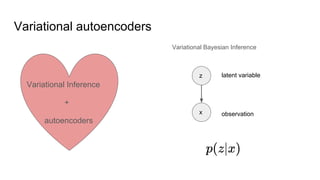

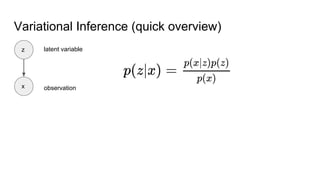

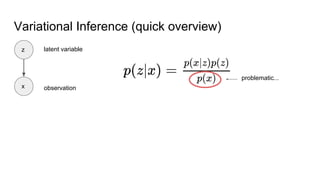

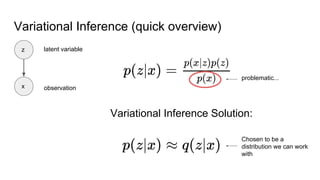

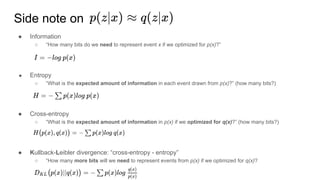

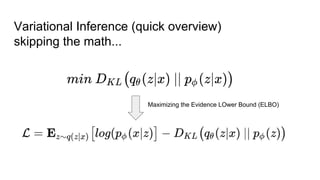

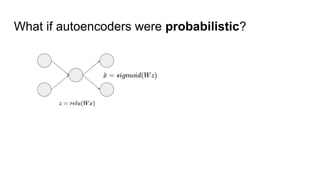

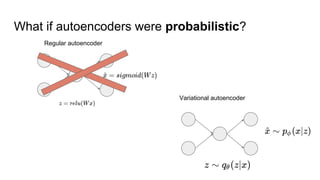

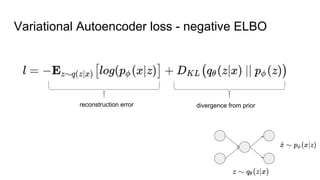

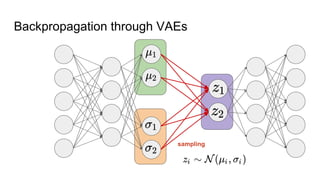

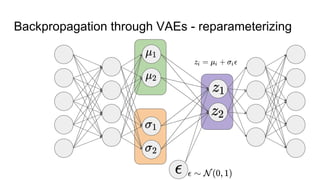

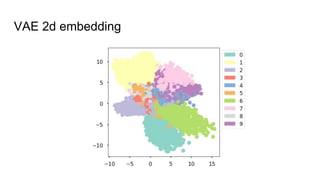

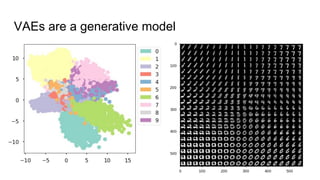

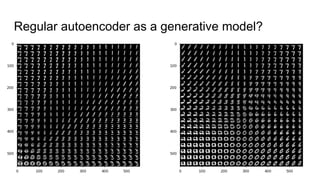

This document gives an overview of autoencoders and variational autoencoders (VAEs). It first shows how principal component analysis (PCA) relates to linear autoencoders: a linear autoencoder trained by backpropagation on squared reconstruction error recovers the same subspace as PCA. It then covers deep and nonlinear autoencoders. Finally, it introduces VAEs, which combine variational inference with autoencoders to learn a probabilistic latent space, and explains how they are trained by backpropagating through the reparameterization trick to maximize the evidence lower bound (ELBO).
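To make the training objective concrete, here is a minimal NumPy sketch of the two ingredients mentioned above: the reparameterization step and a single-sample ELBO estimate. It is an illustration under stated assumptions, not the slides' own code. It assumes a diagonal-Gaussian encoder, a standard normal prior, and a Gaussian decoder with fixed variance. The function names (`reparameterize`, `kl_to_standard_normal`, `elbo_estimate`) and the `noise_var` parameter are hypothetical.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Draw z ~ N(mu, diag(exp(log_var))) as a deterministic function of noise.

    Writing z = mu + sigma * eps, with eps ~ N(0, I), keeps the sample
    differentiable with respect to mu and log_var.
    """
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ), per example."""
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)


def elbo_estimate(x, x_recon, mu, log_var, noise_var=1.0):
    """Single-sample ELBO estimate per example.

    Assumes a Gaussian decoder p(x | z) = N(x_recon, noise_var * I), so the
    reconstruction term is a scaled squared error plus a constant.
    """
    d = x.shape[-1]
    sq_err = np.sum((x - x_recon) ** 2, axis=-1)
    log_lik = -0.5 * (sq_err / noise_var + d * np.log(2.0 * np.pi * noise_var))
    return log_lik - kl_to_standard_normal(mu, log_var)
```

In a real VAE, `mu` and `log_var` would be encoder outputs, `x_recon` would be the decoder applied to `reparameterize(mu, log_var, rng)`, and an automatic-differentiation framework would maximize the mean of `elbo_estimate` over a batch. NumPy is used here only to show the arithmetic.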