This document provides an overview of artificial intelligence and machine learning techniques, including:

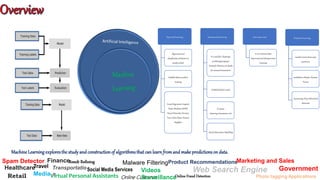

1. It defines artificial intelligence and lists some common applications such as gaming, natural language processing, and robotics.

2. It describes the main types of machine learning, including supervised learning, unsupervised learning, and reinforcement learning, and their applications in areas such as healthcare, finance, and retail.

3. It explains deep learning concepts such as neural networks, activation functions, loss functions, and architectures like convolutional neural networks and recurrent neural networks.
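Item 3 covers neural networks, activation functions, and loss functions. To show how these pieces connect, here is a minimal sketch of a forward pass through a tiny fully connected network. It assumes a 2-input network with one hidden layer of two sigmoid units and a single sigmoid output. It uses mean squared error as the loss, which is one common choice among many. The weights and data are hypothetical values picked for illustration, not taken from the source.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid activation: squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, bias):
    """One fully connected neuron: weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def forward(x, hidden_layer, output_neuron):
    """Forward pass: one hidden layer of sigmoid units, then one sigmoid output unit."""
    hidden = [sigmoid(dense(x, w, b)) for w, b in hidden_layer]
    w_out, b_out = output_neuron
    return sigmoid(dense(hidden, w_out, b_out))


def mse_loss(predictions, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical, hand-picked weights for a 2-input, 2-hidden-unit network.
hidden_layer = [([0.5, -0.4], 0.1), ([0.3, 0.8], -0.2)]
output_neuron = ([1.2, -0.7], 0.05)

# Hypothetical toy dataset: two input vectors and their target labels.
inputs = [[0.0, 1.0], [1.0, 0.0]]
targets = [1.0, 0.0]

predictions = [forward(x, hidden_layer, output_neuron) for x in inputs]
loss = mse_loss(predictions, targets)
print(predictions, loss)
```

Training would then adjust the weights to reduce `loss`, typically with gradient descent and backpropagation. That step is omitted here to keep the sketch short.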

Activation functions

Sigmoid

![Sigmoid function equation](https://cdn-images-1.medium.com/max/800/1*QHPXkxGmIyxn7mH4BtRJXQ.png)

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

• Takes any real number as input and squashes it into the range (0, 1).

Problems:

• Suffers from the vanishing gradient problem: the gradient of the network's output with respect to the parameters in the early layers becomes very small.

• Has a slow convergence rate: because of the vanishing gradient problem, training with the sigmoid activation function converges very slowly.

• Is not zero-centered: the sigmoid's output range is (0, 1), so its outputs are always positive.

Hyperbolic tangent function (TanH)

![Tanh activation function equation](https://cdn-images-1.medium.com/max/800/1*HJhu8BO7KxkjqRRMSaz0Gw.png)

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

• Squashes its input into the range (-1, 1).

• Its output is zero-centered.

• Like the sigmoid, TanH also suffers from the vanishing gradient problem.

![Activation functions: Sigmoid](https://image.slidesharecdn.com/introductiontodeeplearning-190516020329/85/Deep-Learning-13-320.jpg)
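This sketch illustrates the sigmoid and TanH properties listed above. It compares their output ranges and their derivatives. The sigmoid derivative peaks at 0.25 and the TanH derivative peaks at 1.0. It also multiplies the derivatives along a chain of layers to show why early-layer gradients vanish.

Two simplifying assumptions apply here. Every weight in the chain is taken to be 1, so only the activation derivatives are multiplied. The pre-activation value of 0.5 per layer is also hypothetical. A real network's gradients depend on its actual weights.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: output lies strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)); maximum 0.25 at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    # d/dx tanh(x) = 1 - tanh(x)^2; maximum 1.0 at x = 0
    t = math.tanh(x)
    return 1.0 - t * t


def chained_grad(grad_fn, pre_activations):
    """Product of per-layer activation derivatives, as backpropagation would
    multiply them, assuming (hypothetically) that every weight equals 1."""
    product = 1.0
    for z in pre_activations:
        product *= grad_fn(z)
    return product


# Hypothetical pre-activation value at each of 10 stacked layers.
layers = [0.5] * 10
sig_chain = chained_grad(sigmoid_grad, layers)
tanh_chain = chained_grad(tanh_grad, layers)
print("sigmoid chain gradient:", sig_chain)
print("tanh chain gradient:   ", tanh_chain)

# Output ranges: sigmoid outputs are all positive, tanh outputs are symmetric around 0.
samples = [-3.0, -1.0, 0.0, 1.0, 3.0]
sig_out = [sigmoid(x) for x in samples]
tanh_out = [math.tanh(x) for x in samples]
print("sigmoid outputs:", [round(v, 3) for v in sig_out])
print("tanh outputs:   ", [round(v, 3) for v in tanh_out])
```

In this setup the sigmoid product comes out orders of magnitude smaller than the TanH product. Both still shrink below 1 as layers are added, which matches the note that TanH also suffers from vanishing gradients.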