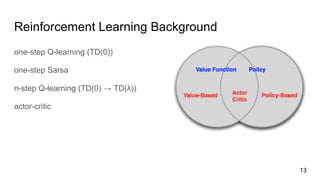

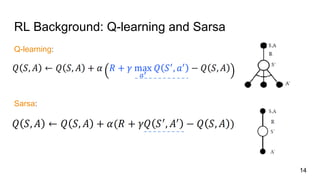

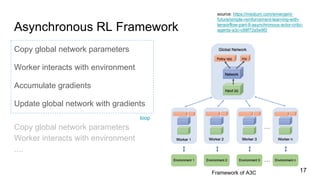

The document discusses asynchronous methods for deep reinforcement learning. It highlights the limitations of experience replay and presents a framework in which multiple agents run in parallel, each on its own instance of the environment. This parallelism stabilizes training, lowers computational cost, and yields significant performance gains over traditional methods across a variety of tasks. The experimental results show that training remains efficient and effective with modest resources on a single machine.
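To make the core idea concrete, here is a minimal toy sketch. It is not the paper's A3C and involves no neural network. Several actor-learner threads each interact with a hypothetical two-armed bandit and write one-step value updates straight into shared parameters without a lock (Hogwild!-style), so no experience replay buffer is needed. `ARM_MEANS`, `pull`, `actor_learner`, `train`, the learning rate and ε are all illustrative assumptions. Python threads are serialized by the GIL, so this demonstrates the asynchronous update pattern rather than a real parallel speedup.

```python
import random
import threading

# Hypothetical toy environment: a two-armed bandit with fixed mean rewards.
ARM_MEANS = [0.2, 0.8]


def pull(arm, rng):
    """Sample a noisy reward for the chosen arm."""
    return ARM_MEANS[arm] + rng.gauss(0.0, 0.1)


class SharedParams:
    """Action-value estimates shared by every actor-learner thread."""

    def __init__(self, n_actions):
        self.q = [0.0] * n_actions


def actor_learner(shared, seed, steps, lr=0.05, epsilon=0.1):
    # Each worker has its own RNG, so workers explore differently and
    # the stream of updates hitting the shared parameters is decorrelated.
    rng = random.Random(seed)
    for _ in range(steps):
        q = list(shared.q)  # local snapshot of the shared parameters
        if rng.random() < epsilon:
            a = rng.randrange(len(q))  # explore
        else:
            a = max(range(len(q)), key=q.__getitem__)  # act greedily
        reward = pull(a, rng)
        # Lock-free update applied directly to the shared parameters;
        # an occasional lost write from a concurrent thread is tolerated.
        shared.q[a] += lr * (reward - shared.q[a])


def train(n_workers=4, steps=2000):
    shared = SharedParams(len(ARM_MEANS))
    workers = [
        threading.Thread(target=actor_learner, args=(shared, seed, steps))
        for seed in range(n_workers)
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return shared.q


if __name__ == "__main__":
    print(train())
```

After `train()` returns, `q[1]` should sit near 0.8 and `q[0]` near 0.2. The design choice mirrors the trade-off of lock-free asynchronous training: workers never wait on each other, at the cost of occasionally overwriting a concurrent update.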

Asynchronous Methods for Deep Reinforcement Learning

Volodymyr Mnih, Adrià Puigdomènech Badia, Mehdi Mirza, Alex Graves, Tim Harley, Timothy P., David Silver, Koray Kavukcuoglu

Google DeepMind

Montreal Institute for Learning Algorithms (MILA), University of Montreal

Journal reference: ICML 2016

Cite as: arXiv:1602.01783 [cs.LG] (or arXiv:1602.01783v2 [cs.LG] for this version)

資應所 (Institute of Information Systems and Applications), 105065702, 李思叡

![Slide 1: Asynchronous Methods for Deep Reinforcement Learning](https://image.slidesharecdn.com/ppdlpresentationforisa-190317085322/85/Asynchronous-Methods-for-Deep-Reinforcement-Learning-1-320.jpg)