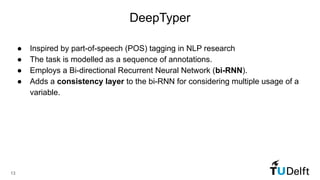

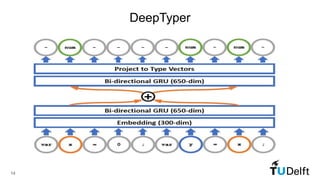

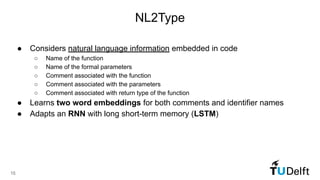

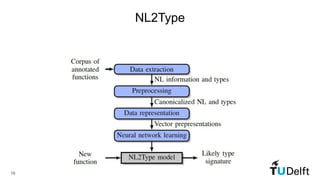

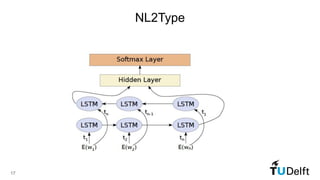

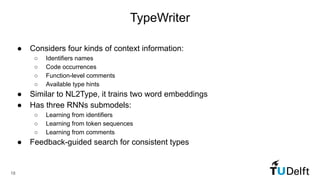

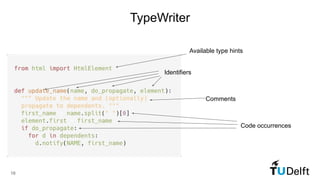

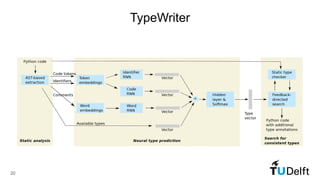

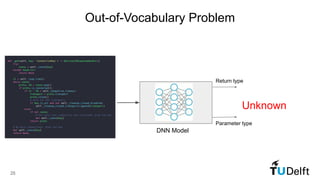

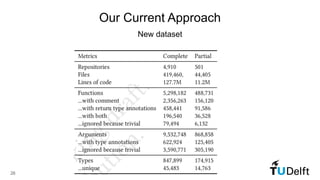

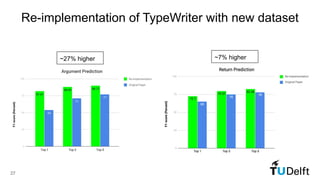

This presentation surveys existing deep learning approaches to type inference for dynamic programming languages and describes the presenter's current approach. Existing systems such as DeepTyper, NL2Type, TypeWriter, and LAMBDANET use neural networks together with contextual information from the code to infer types. The presenter is reimplementing TypeWriter with a new dataset and an improved type extractor. The approach aims to handle types outside the closed vocabulary and to refine the search model through further experiments.

![Slide 28: Our Current Approach. Improved available type extractor. Python dataset; visible type hints extractor; available types (AbstractResolver, ClientConnectionError, ClientHttpProxyError, …); type mask vector (1, 1, 0, 0). Lightweight static analysis with importlab and LibCST.](https://image.slidesharecdn.com/deeplearningtypeinferencefordynamicprogramminglanguages-public-200422121707/85/Deep-learning-Type-Inference-for-Dynamic-Programming-Languages-28-320.jpg)
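
The slide above pairs a list of visible types with a 0/1 type mask vector. As a rough illustration of that idea only, the sketch below collects the names a module can see (imported names and locally defined classes) and marks which entries of a fixed type vocabulary are among them. It is not the presenter's extractor: it swaps in Python's standard `ast` module for importlab and LibCST, and the names `visible_types`, `type_mask`, `SOURCE`, and `VOCABULARY` (including the `Timeout` entry) are hypothetical, chosen for this example.

```python
import ast
from typing import List, Set


def visible_types(source: str) -> Set[str]:
    """Collect names that annotations in this module could refer to.

    Assumption: "visible" means imported names plus classes defined in the
    module. Built-ins and star imports are ignored in this sketch.
    """
    names: Set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                if alias.name == "*":
                    continue  # cannot be resolved without analysing the target module
                # `import a.b` binds `a`; `import a.b as c` binds `c`
                names.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ClassDef):
            names.add(node.name)
    return names


def type_mask(vocabulary: List[str], visible: Set[str]) -> List[int]:
    """Return 1 for each vocabulary type that is visible, else 0."""
    return [1 if type_name in visible else 0 for type_name in vocabulary]


# The source is only parsed, never executed, so aiohttp need not be installed.
SOURCE = """
from aiohttp.abc import AbstractResolver
from aiohttp import ClientConnectionError

class Session:
    pass
"""

# Hypothetical closed vocabulary of candidate types.
VOCABULARY = [
    "AbstractResolver",
    "ClientConnectionError",
    "ClientHttpProxyError",
    "Timeout",
]

mask = type_mask(VOCABULARY, visible_types(SOURCE))
print(mask)  # [1, 1, 0, 0]
```

A mask like this could be used to restrict a model's predictions to types that are actually in scope for the file. How the presenter's model consumes the mask is not stated on the slide.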