The document describes three LSTM models for generating coherent multi-sentence text, two of which are hierarchical:

1) Standard LSTM: encodes and decodes the document as a single flat sequence of words.

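2) Hierarchical LSTM: encodes the words of each sentence into a sentence vector, then encodes the sequence of sentence vectors into a document vector. Decoding mirrors this two-level hierarchy.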

3) Hierarchical LSTM with attention: uses the same hierarchical encoder, but the decoder attends over the encoded sentence vectors while generating the output.
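The sketch below contrasts the encoder side of the three models. It is a minimal, untrained illustration in pure Python, not the authors' implementation. The following are assumptions made for illustration: `LSTMCell` is a textbook single-layer LSTM with random weights, `embed` is a hypothetical fixed random vector per word, and `attend` uses dot-product scoring with the document vector standing in for a decoder state. The original scoring function may differ, and the decoders are omitted.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class LSTMCell:
    """Textbook LSTM cell with random, untrained weights (illustration only)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        n = input_size + hidden_size
        # One weight matrix per gate (input i, forget f, output o, candidate g),
        # each mapping the concatenation [x; h] to hidden_size units.
        self.W = {gate: [[rng.uniform(-0.1, 0.1) for _ in range(n)]
                         for _ in range(hidden_size)] for gate in "ifog"}
        self.b = {gate: [0.0] * hidden_size for gate in "ifog"}

    def step(self, x, h, c):
        z = list(x) + list(h)  # concatenate input and previous hidden state

        def affine(gate):
            return [sum(w * v for w, v in zip(row, z)) + b
                    for row, b in zip(self.W[gate], self.b[gate])]

        i = [sigmoid(a) for a in affine("i")]
        f = [sigmoid(a) for a in affine("f")]
        o = [sigmoid(a) for a in affine("o")]
        g = [math.tanh(a) for a in affine("g")]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def encode(self, inputs):
        """Run over a sequence of vectors and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in inputs:
            h, c = self.step(x, h, c)
        return h

EMB, HID = 8, 16
_vectors = {}

def embed(word):
    """Hypothetical embedding: a fixed random vector seeded by the word itself."""
    if word not in _vectors:
        rng = random.Random(word)
        _vectors[word] = [rng.uniform(-1.0, 1.0) for _ in range(EMB)]
    return _vectors[word]

def standard_encode(doc, word_lstm):
    """Model 1: flatten all sentences into one word sequence."""
    return word_lstm.encode([embed(w) for sent in doc for w in sent])

def hierarchical_encode(doc, word_lstm, sent_lstm):
    """Models 2 and 3: words -> one vector per sentence -> document vector."""
    sent_vecs = [word_lstm.encode([embed(w) for w in sent]) for sent in doc]
    return sent_lstm.encode(sent_vecs), sent_vecs

def attend(query, sent_vecs):
    """Model 3, one step (assumed dot-product scoring): softmax weights
    over sentence vectors and their weighted sum as a context vector."""
    scores = [sum(q * s for q, s in zip(query, v)) for v in sent_vecs]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    weights = [e / sum(exps) for e in exps]
    context = [sum(wt * v[k] for wt, v in zip(weights, sent_vecs))
               for k in range(len(query))]
    return context, weights

doc = [["the", "room", "was", "clean"], ["the", "staff", "were", "friendly"]]
word_lstm = LSTMCell(EMB, HID, seed=1)
sent_lstm = LSTMCell(HID, HID, seed=2)
flat_vec = standard_encode(doc, word_lstm)
doc_vec, sent_vecs = hierarchical_encode(doc, word_lstm, sent_lstm)
# Query is the document vector, used here only as a stand-in decoder state.
context, weights = attend(doc_vec, sent_vecs)
```

The structural difference is the point. The standard model collapses the whole document into one long recurrence, while the hierarchical models keep one vector per sentence. Model 3 can then weight those vectors differently at each decoding step.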

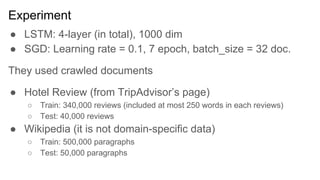

The models were evaluated on hotel reviews and Wikipedia text using ROUGE, BLEU, and a coherence metric called L-value. Both hierarchical LSTMs outperformed the standard LSTM, and hotel reviews proved easier to generate than Wikipedia text.
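As a rough illustration of what the n-gram metrics measure, here is a minimal sketch of ROUGE-N recall and of BLEU's clipped (modified) n-gram precision for a single n. These are simplified textbook forms, not the exact evaluation scripts or settings behind the reported results. Full BLEU also combines several n via a geometric mean and applies a brevity penalty, both omitted here. The example sentences are made up.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N recall: overlapping n-grams / total n-grams in the reference."""
    ref, cand = ngram_counts(reference, n), ngram_counts(candidate, n)
    overlap = sum(min(count, cand[g]) for g, count in ref.items())
    total = sum(ref.values())
    return overlap / total if total else 0.0

def clipped_precision(candidate, reference, n=1):
    """BLEU's modified n-gram precision for one n (no brevity penalty,
    no geometric mean over several n)."""
    ref, cand = ngram_counts(reference, n), ngram_counts(candidate, n)
    overlap = sum(min(count, ref[g]) for g, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0

reference = "the room was clean and quiet".split()
candidate = "the room was quiet".split()
print(rouge_n_recall(candidate, reference, 1))     # 4 of 6 reference unigrams
print(rouge_n_recall(candidate, reference, 2))     # 2 of 5 reference bigrams: 0.4
print(clipped_precision(candidate, reference, 2))  # 2 of 3 candidate bigrams
```

ROUGE is recall-oriented and rewards covering the reference, while BLEU's precision rewards not generating unsupported n-grams. Neither directly checks sentence order, which is why a separate coherence measure is useful.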