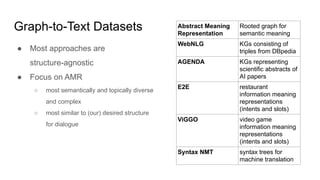

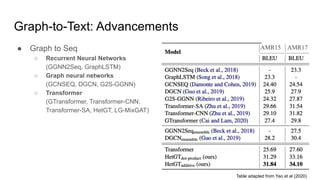

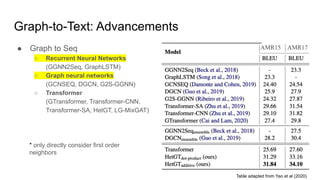

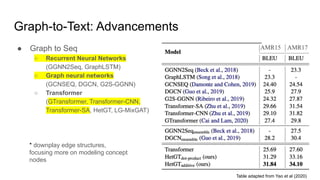

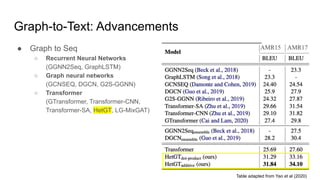

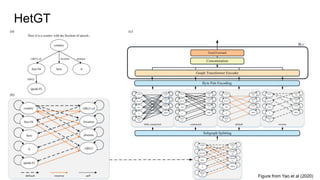

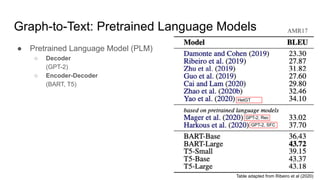

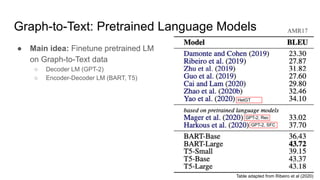

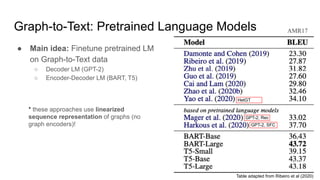

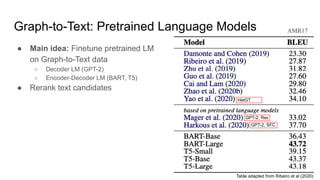

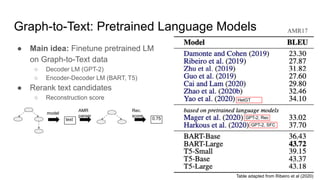

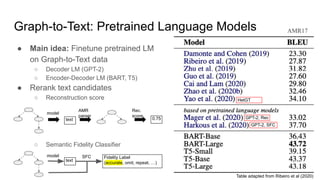

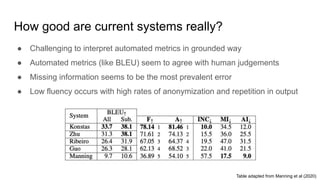

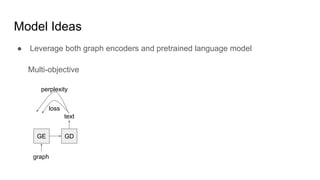

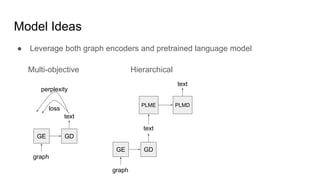

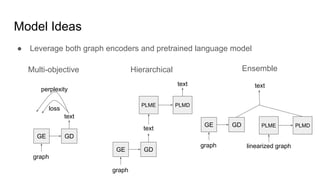

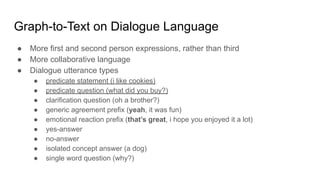

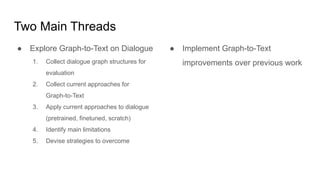

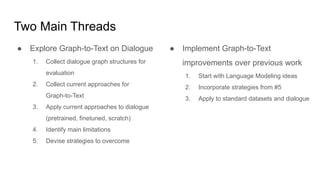

The document discusses graph-to-text generation and its applications to dialogue systems. It surveys current approaches to graph-to-text generation, including rule-based, statistical, sequence-to-sequence, and graph-to-sequence models. Recent advances build on pretrained language models and graph neural networks. Current systems show promise, but they still produce omissions, repetitions, and unnatural language. The document proposes two threads of future work: applying graph-to-text generation to dialogue data, and implementing model improvements.