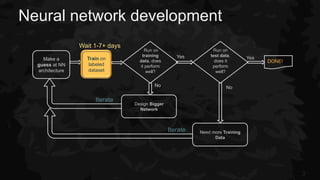

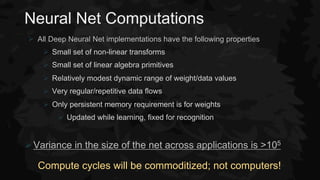

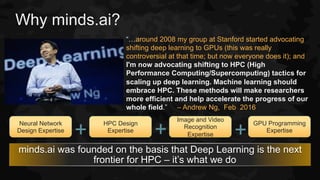

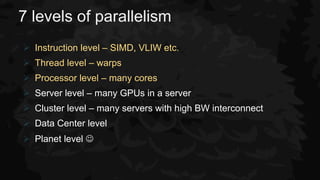

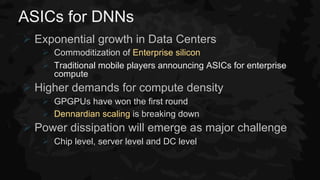

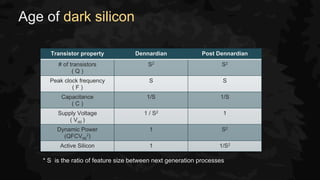

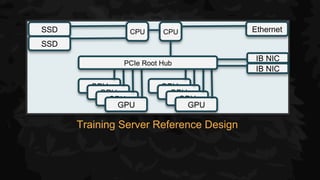

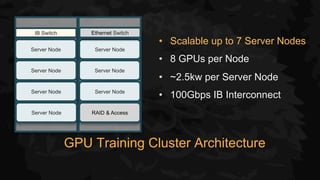

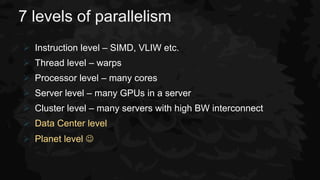

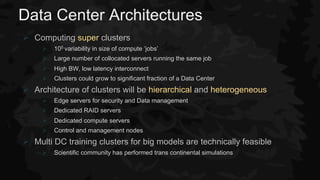

The document discusses how deep learning's demand for computing power is driving the convergence of high-performance computing (HPC) and hyperscale data centers. It notes that deep neural networks perform orders of magnitude more computations per byte of data than traditional algorithms. This computational demand, combined with the growth of data centers and of data volumes, means deep learning workloads will need purpose-built hardware at every level, from silicon design to data center architecture. To run large neural networks on big data efficiently, heterogeneous computing resources optimized for deep learning will be needed both within individual servers and across servers and data centers.
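The "computations per byte" comparison can be made concrete with a back-of-the-envelope arithmetic-intensity estimate (FLOPs divided by bytes moved). The sketch below is illustrative only and is not taken from the deck. It uses a simplified model that assumes each operand is read or written from memory exactly once, 4-byte (fp32) elements, and no caching effects. It compares a dense matrix multiply, the core operation of a fully connected neural network layer, with a simple reduction that stands in for a "traditional" streaming workload. The function names and the chosen sizes are hypothetical.

```python
# Simplified arithmetic-intensity model (assumption: every operand crosses
# memory exactly once, fp32 elements, no cache reuse modelled).

def matmul_intensity(m: int, n: int, k: int, bytes_per_elem: int = 4) -> float:
    """FLOPs per byte for C = A @ B, with A of shape (m, k) and B of shape (k, n)."""
    flops = 2 * m * n * k  # one multiply and one add per inner-product term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)  # read A and B, write C
    return flops / bytes_moved


def vector_sum_intensity(n: int, bytes_per_elem: int = 4) -> float:
    """FLOPs per byte for summing n elements that are each read once."""
    flops = n - 1  # n - 1 additions
    bytes_moved = bytes_per_elem * n
    return flops / bytes_moved


if __name__ == "__main__":
    dense = matmul_intensity(1024, 1024, 1024)  # square fp32 layer-sized multiply
    scan = vector_sum_intensity(1_000_000)      # streaming reduction
    print(f"matmul: {dense:.1f} FLOP/byte")
    print(f"sum:    {scan:.3f} FLOP/byte")
    print(f"ratio:  {dense / scan:.0f}x")
```

Under these assumptions, a square n-by-n multiply reaches about n/6 FLOPs per byte (roughly 171 for n = 1024), while the reduction stays just under 0.25. The gap grows with matrix size, which is one way to see why such workloads favor compute-dense, specialized hardware.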