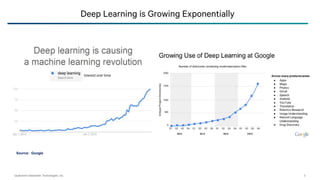

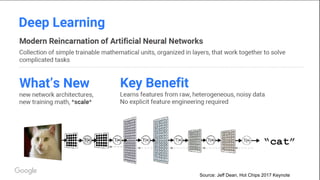

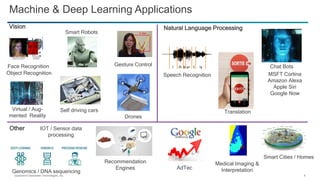

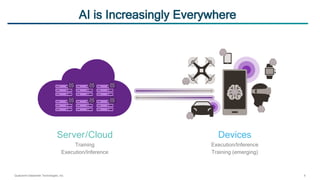

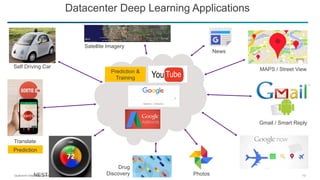

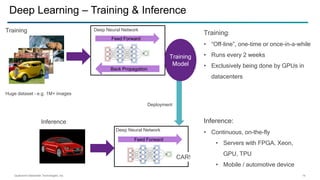

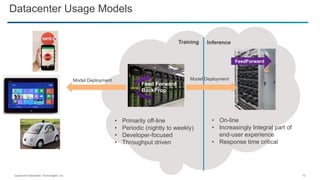

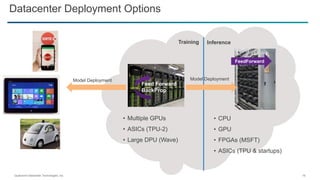

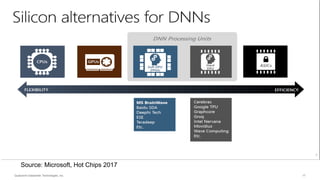

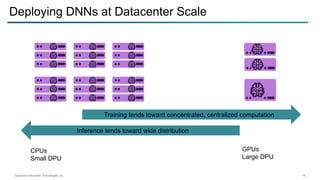

Before 2012, machine learning pipelines were largely hand-coded, but the emergence of deep neural networks (DNNs) enabled a breakthrough in performance. DNNs have since become simpler to develop and deploy, driving changes across many fields. Deep learning is growing rapidly and powers applications in areas such as computer vision, natural language processing, and robotics. Training typically occurs in datacenters using GPUs, while inference can run on devices, in datacenters, or at the edge on a range of hardware, including CPUs, GPUs, FPGAs, and ASICs. Continued innovation in algorithms, hardware, and frameworks is expected to drive further improvements in deep learning.