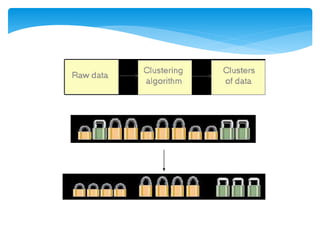

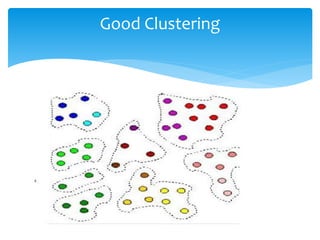

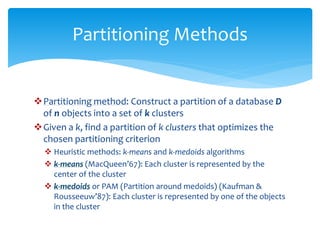

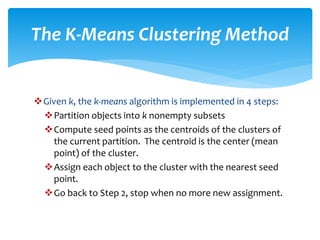

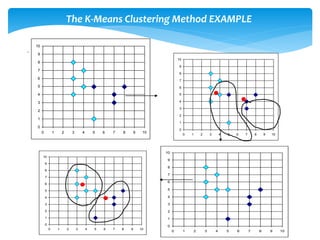

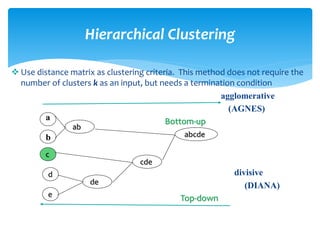

Data clustering is the process of organizing similar objects into groups (clusters). It is used in fields such as data mining, pattern recognition, and medical diagnostics. Clustering methods fall into several families, including partitioning, hierarchical, density-based, and model-based approaches, and each family has its own algorithms. For example, k-means is a partitioning method and agglomerative clustering is a hierarchical one. The quality of a clustering depends on the similarity measure used and on the method's ability to uncover hidden patterns. This makes efficient, effective algorithms essential for handling large and complex datasets.
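To make the partitioning idea and the role of the similarity measure concrete, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. It assumes Euclidean distance as the dissimilarity measure. For reproducibility it also assumes a simple initialization that takes the first `k` points as starting centroids; practical implementations usually seed randomly or with k-means++. The function names `euclidean` and `kmeans` are illustrative only, not from any particular library.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance, used here as the (dis)similarity measure."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(points: List[Point], k: int, max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's k-means: alternate an assignment step and a centroid-update step.

    Assumption (for a deterministic sketch): the first k points are the
    initial centroids. Real implementations typically use random or
    k-means++ seeding instead.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    centroids: List[Point] = [tuple(p) for p in points[:k]]
    labels = [-1] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: euclidean(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments no longer change, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, labels


if __name__ == "__main__":
    data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
    centers, assignment = kmeans(data, k=2)
    print(assignment)  # [0, 0, 0, 1, 1, 1]
    print(centers)
```

On this toy data the two well-separated groups end up in separate clusters after a few iterations. Swapping `euclidean` for another distance function changes which points count as "similar", which is why the choice of similarity measure directly affects clustering quality.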