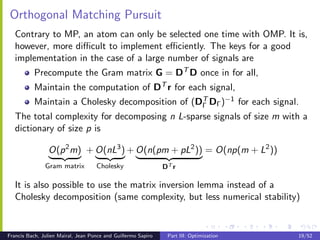

This document covers optimization methods for sparse coding and dictionary learning. It presents greedy pursuit methods, such as matching pursuit and orthogonal matching pursuit, which iteratively select atoms to approximate the signal, and homotopy methods, such as LARS, which follow the piecewise-linear regularization path of the Lasso. It derives optimality conditions for the Lasso from directional derivatives and describes how coordinate descent can optimize separable nonsmooth objectives such as the Lasso. Code examples use the SPAMS toolbox.

![The Sparse Decomposition Problem

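$$\min_{\alpha \in \mathbb{R}^p} \underbrace{\frac{1}{2}\|x - D\alpha\|_2^2}_{\text{data fitting term}} + \underbrace{\lambda \psi(\alpha)}_{\text{sparsity-inducing regularization}}$$

ψ induces sparsity in α. It can be

the ℓ0 “pseudo-norm”: $\|\alpha\|_0 \triangleq \#\{i \text{ s.t. } \alpha[i] \neq 0\}$ (NP-hard)

the ℓ1 norm: $\|\alpha\|_1 \triangleq \sum_{i=1}^{p} |\alpha[i]|$ (convex)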

...

This is a selection problem.

Francis Bach, Julien Mairal, Jean Ponce and Guillermo Sapiro Part III: Optimization 2/52](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-2-320.jpg)
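To make the two penalties concrete, here is a minimal Python sketch that evaluates the objective of the sparse decomposition problem on a toy example. The function name `sparse_objective`, the representation of D as a list of atoms (columns), and the numeric values are illustrative assumptions, not part of the tutorial or of SPAMS.

```python
def sparse_objective(x, D, alpha, lam, penalty):
    """Evaluate 0.5 * ||x - D alpha||_2^2 + lam * psi(alpha).

    D is a list of atoms (columns), each a list with the same length as x.
    penalty selects psi: "l0" (number of nonzeros) or "l1" (sum of |alpha[i]|).
    """
    # Residual x - D alpha, computed coordinate by coordinate.
    residual = [xk - sum(a * d[k] for a, d in zip(alpha, D))
                for k, xk in enumerate(x)]
    fit = 0.5 * sum(r * r for r in residual)
    if penalty == "l0":
        psi = sum(1 for a in alpha if a != 0)
    elif penalty == "l1":
        psi = sum(abs(a) for a in alpha)
    else:
        raise ValueError("penalty must be 'l0' or 'l1'")
    return fit + lam * psi


# Toy data (assumed for illustration): three unit-norm atoms in R^2.
x = [1.0, 2.0]
D = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
alpha = [0.0, 0.5, 1.0]
print(sparse_objective(x, D, alpha, 0.1, "l0"))
print(sparse_objective(x, D, alpha, 0.1, "l1"))
```

Both calls share the same data fitting term; only the way the two nonzero coefficients are charged differs.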

![Finding your way in the sparse coding literature. . .

. . . is not easy. The literature is vast, redundant, sometimes confusing, and many papers claim victory. . .

The main classes of methods are

greedy procedures [Mallat and Zhang, 1993], [Weisberg, 1980]

homotopy [Osborne et al., 2000], [Efron et al., 2004], [Markowitz, 1956]

soft-thresholding based methods [Fu, 1998], [Daubechies et al., 2004], [Friedman et al., 2007], [Nesterov, 2007], [Beck and Teboulle, 2009], . . .

reweighted-ℓ2 methods [Daubechies et al., 2009], . . .

active-set methods [Roth and Fischer, 2008].

...

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-3-320.jpg)

![Matching Pursuit

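$$\min_{\alpha \in \mathbb{R}^p} \|\underbrace{x - D\alpha}_{r}\|_2^2 \quad \text{s.t.} \quad \|\alpha\|_0 \leq L$$

1: $\alpha \leftarrow 0$

2: $r \leftarrow x$ (residual).

3: while $\|\alpha\|_0 < L$ do

4: Select the atom with maximum correlation with the residual: $\hat{\imath} \leftarrow \arg\max_{i=1,\ldots,p} |d_i^T r|$

5: Update the coefficients and the residual: $\alpha[\hat{\imath}] \leftarrow \alpha[\hat{\imath}] + d_{\hat{\imath}}^T r$, then $r \leftarrow r - (d_{\hat{\imath}}^T r)\, d_{\hat{\imath}}$

6: end while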

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-14-320.jpg)
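The matching pursuit loop can be sketched in a few lines of plain Python. This is a minimal illustration, assuming unit-norm atoms stored as a list of columns. The `max_iter` guard and the early exit on zero correlation are additions of this sketch (the loop as stated can revisit an already selected atom without increasing the number of nonzeros), and the names are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def matching_pursuit(x, D, L, max_iter=100):
    """Greedy approximation of x with at most L atoms of D (unit-norm columns)."""
    alpha = [0.0] * len(D)
    r = list(x)  # residual, initialised to the signal
    for _ in range(max_iter):
        if sum(1 for a in alpha if a != 0.0) >= L:
            break
        # Atom with maximum absolute correlation with the residual.
        corr = [dot(d, r) for d in D]
        i_hat = max(range(len(D)), key=lambda i: abs(corr[i]))
        if corr[i_hat] == 0.0:
            break  # residual is orthogonal to every atom
        # Update the coefficient, then remove the atom's contribution from r.
        alpha[i_hat] += corr[i_hat]
        r = [rk - corr[i_hat] * dk for rk, dk in zip(r, D[i_hat])]
    return alpha, r


# Toy example (assumed): the canonical basis of R^3 as dictionary.
D = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
alpha, r = matching_pursuit([3.0, -1.0, 0.5], D, L=2)
print(alpha, r)
```

With an orthonormal dictionary, the two largest correlations are picked once each and the remaining component of the signal stays in the residual.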

![Example with the software SPAMS

Software available at http://www.di.ens.fr/willow/SPAMS/

>> I=double(imread('data/lena.eps'))/255;

>> %extract all patches of I

>> X=im2col(I,[8 8],'sliding');

>> %load a dictionary of size 64 x 256

>> D=load('dict.mat');

>>

>> %set the sparsity parameter L to 10

>> param.L=10;

>> alpha=mexOMP(X,D,param);

On an 8-core 2.83 GHz machine: 230000 signals processed per second!

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-20-320.jpg)
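For readers without SPAMS at hand, the idea behind orthogonal matching pursuit can be sketched in plain Python. This is not the SPAMS implementation or its API: after each atom selection, all active coefficients are re-fitted by least squares, here through the normal equations and a small Gaussian elimination rather than the Cholesky updates the tutorial mentions. All names are hypothetical, and the atoms are assumed unit-norm and linearly independent on the active set.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(A, b):
    """Solve A z = b for a small dense system (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for c in reversed(range(n)):
        z[c] = (M[c][n] - sum(M[c][k] * z[k] for k in range(c + 1, n))) / M[c][c]
    return z


def omp(x, D, L):
    """Select up to L atoms greedily, re-fitting the active coefficients each time."""
    active, coef = [], []
    r = list(x)
    while len(active) < min(L, len(D)):
        corr = [abs(dot(d, r)) for d in D]
        i_hat = max((i for i in range(len(D)) if i not in active),
                    key=lambda i: corr[i])
        if corr[i_hat] < 1e-12:
            break  # nothing left to explain
        active.append(i_hat)
        # Least-squares fit on the active atoms: (D_A^T D_A) z = D_A^T x.
        G = [[dot(D[i], D[j]) for j in active] for i in active]
        coef = solve(G, [dot(D[i], x) for i in active])
        r = [xk - sum(c * D[i][k] for c, i in zip(coef, active))
             for k, xk in enumerate(x)]
    alpha = [0.0] * len(D)
    for c, i in zip(coef, active):
        alpha[i] = c
    return alpha, r


D = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # toy unit-norm atoms (assumed)
alpha, r = omp([3.0, 1.0], D, L=2)
print(alpha, r)
```

Because of the re-fit, the residual stays orthogonal to every selected atom, so an atom is never selected twice.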

![Optimality conditions of the Lasso

Nonsmooth optimization

Directional derivatives and subgradients are useful tools for studying ℓ1-decomposition problems:

$$\min_{\alpha \in \mathbb{R}^p} \frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_1$$

In this tutorial, we use directional derivatives to derive simple optimality conditions of the Lasso.

For more information on convex analysis and nonsmooth optimization, see the following books: [Boyd and Vandenberghe, 2004], [Nocedal and Wright, 2006], [Borwein and Lewis, 2006], [Bonnans et al., 2006], [Bertsekas, 1999].

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-21-320.jpg)

![Optimality conditions of the Lasso

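$$\min_{\alpha \in \mathbb{R}^p} \frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_1$$

α⋆ is optimal iff for all u in $\mathbb{R}^p$, $\nabla f(\alpha^\star, u) \geq 0$, that is,

$$-u^T D^T (x - D\alpha^\star) + \lambda \sum_{i,\ \alpha^\star[i] \neq 0} \operatorname{sign}(\alpha^\star[i])\, u[i] + \lambda \sum_{i,\ \alpha^\star[i] = 0} |u[i]| \geq 0,$$

which is equivalent to the following conditions:

$$\forall i = 1, \ldots, p, \quad \begin{cases} |d_i^T (x - D\alpha^\star)| \leq \lambda & \text{if } \alpha^\star[i] = 0 \\ d_i^T (x - D\alpha^\star) = \lambda \operatorname{sign}(\alpha^\star[i]) & \text{if } \alpha^\star[i] \neq 0 \end{cases}$$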

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-23-320.jpg)
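These conditions give a cheap numerical test for a candidate Lasso solution. The sketch below, with hypothetical names and a toy orthonormal dictionary chosen for illustration, returns the largest violation of the two conditions; a value of zero (up to rounding) means the candidate is optimal.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def lasso_optimality_violation(x, D, alpha, lam):
    """Largest violation of the Lasso optimality conditions at alpha.

    D is a list of atoms (columns). For alpha[i] == 0 the condition is
    |d_i^T r| <= lam; otherwise it is d_i^T r == lam * sign(alpha[i]),
    where r = x - D alpha.
    """
    r = [xk - sum(a * d[k] for a, d in zip(alpha, D)) for k, xk in enumerate(x)]
    worst = 0.0
    for a, d in zip(alpha, D):
        c = dot(d, r)
        if a == 0.0:
            violation = max(0.0, abs(c) - lam)
        else:
            violation = abs(c - lam * (1.0 if a > 0 else -1.0))
        worst = max(worst, violation)
    return worst


D = [[1.0, 0.0], [0.0, 1.0]]  # toy orthonormal dictionary (assumed)
x = [3.0, 0.5]
print(lasso_optimality_violation(x, D, [2.0, 0.0], lam=1.0))  # soft-thresholded x
print(lasso_optimality_violation(x, D, [3.0, 0.5], lam=1.0))  # least-squares fit
```

The first candidate satisfies both conditions, while the unregularized least-squares fit leaves a zero residual and therefore cannot match the required correlation of magnitude λ on its nonzero coefficients.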

![Homotopy

A homotopy method provides a set of solutions indexed by a parameter, for instance the regularization path (λ, α⋆(λ)).

It can be useful when the path has some “nice” properties (piecewise linear, piecewise quadratic).

LARS [Efron et al., 2004] starts from a trivial solution and follows the regularization path of the Lasso, which is piecewise linear.

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-24-320.jpg)

![Homotopy, LARS

[Osborne et al., 2000], [Efron et al., 2004]

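$$\forall i = 1, \ldots, p, \quad \begin{cases} |d_i^T (x - D\alpha^\star)| \leq \lambda & \text{if } \alpha^\star[i] = 0 \\ d_i^T (x - D\alpha^\star) = \lambda \operatorname{sign}(\alpha^\star[i]) & \text{if } \alpha^\star[i] \neq 0 \end{cases} \qquad (1)$$

The regularization path is piecewise linear. With Γ denoting the set of indices of the nonzero coefficients of α⋆:

$$D_\Gamma^T (x - D_\Gamma \alpha_\Gamma^\star) = \lambda \operatorname{sign}(\alpha_\Gamma^\star)$$

$$\alpha_\Gamma^\star(\lambda) = (D_\Gamma^T D_\Gamma)^{-1} (D_\Gamma^T x - \lambda \operatorname{sign}(\alpha_\Gamma^\star)) = A + \lambda B$$

A simple interpretation of LARS

Start from the trivial solution ($\lambda = \|D^T x\|_\infty$, $\alpha^\star(\lambda) = 0$).

Maintain the computations of $|d_i^T (x - D\alpha^\star(\lambda))|$ for all i.

Maintain the computation of the current direction B.

Follow the path by reducing λ until the next kink.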

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-25-320.jpg)
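The piecewise-linear structure is easiest to see in a special case. The sketch below is not LARS itself: it assumes an orthonormal dictionary, so that $D_\Gamma^T D_\Gamma = I$ and the path reduces to $\alpha^\star[i](\lambda) = \operatorname{sign}(d_i^T x)(|d_i^T x| - \lambda)_+$. It starts at $\lambda = \|D^T x\|_\infty$ and lists the kinks where an atom enters the active set. The function name and data are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def lasso_path_orthonormal(x, D):
    """Kinks (lam, alpha) of the Lasso path, assuming orthonormal atoms in D."""
    c = [dot(d, x) for d in D]  # correlations d_i^T x
    # An atom enters the active set when lam drops below |d_i^T x|.
    kinks = sorted({abs(ci) for ci in c}, reverse=True)
    if kinks[-1] != 0.0:
        kinks.append(0.0)  # end of the path: the least-squares solution
    path = []
    for lam in kinks:
        alpha = [(1.0 if ci > 0 else -1.0) * max(abs(ci) - lam, 0.0) for ci in c]
        path.append((lam, alpha))
    return path


D = [[1.0, 0.0], [0.0, 1.0]]  # toy orthonormal dictionary (assumed)
for lam, alpha in lasso_path_orthonormal([3.0, -1.0], D):
    print(lam, alpha)
```

Between two consecutive kinks the active set and the signs are fixed, so each coefficient moves linearly in λ, which is the $A + \lambda B$ form above. For a general dictionary, following the path also requires solving the linear system on the active set at each kink.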

![Example with the software SPAMS

http://www.di.ens.fr/willow/SPAMS/

>> I=double(imread('data/lena.eps'))/255;

>> %extract all patches of I

>> X=normalize(im2col(I,[8 8],'sliding'));

>> %load a dictionary of size 64 x 256

>> D=load('dict.mat');

>>

>> %set the sparsity parameter lambda to 0.15

>> param.lambda=0.15;

>> alpha=mexLasso(X,D,param);

On an 8-core 2.83 GHz machine: 77000 signals processed per second!

Note that it can also solve the constrained version of the problem. The complexity is more or less the same as OMP, and it uses the same tricks (Cholesky decomposition).

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-26-320.jpg)

![Coordinate Descent

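Coordinate descent + nonsmooth objective: WARNING: not convergent in general.

Here, the problem is equivalent to a convex smooth optimization problem with separable constraints:

$$\min_{\alpha_+, \alpha_-} \frac{1}{2}\|x - D_+\alpha_+ + D_-\alpha_-\|_2^2 + \lambda \alpha_+^T \mathbf{1} + \lambda \alpha_-^T \mathbf{1} \quad \text{s.t.} \quad \alpha_-, \alpha_+ \geq 0.$$

For this specific problem, coordinate descent is convergent.

Supposing $\|d_i\|_2 = 1$, updating the coordinate i:

$$\alpha[i] \leftarrow \arg\min_{\beta} \frac{1}{2}\Big\|\underbrace{x - \sum_{j \neq i} \alpha[j] d_j}_{r} - \beta d_i\Big\|_2^2 + \lambda|\beta|$$

$$\alpha[i] \leftarrow \operatorname{sign}(d_i^T r)(|d_i^T r| - \lambda)_+$$

⇒ soft-thresholding!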

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-27-320.jpg)
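The coordinate update translates directly into code. The following is a minimal sketch of cyclic coordinate descent for the Lasso, assuming unit-norm atoms stored as a list of columns and a fixed number of sweeps instead of a tolerance-based stopping rule. The names are hypothetical and this is not the `mexCD` implementation.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def soft_threshold(z, lam):
    """sign(z) * max(|z| - lam, 0)."""
    return (1.0 if z > 0 else -1.0) * max(abs(z) - lam, 0.0)


def lasso_cd(x, D, lam, n_sweeps=100):
    """Cyclic coordinate descent for 0.5*||x - D alpha||^2 + lam*||alpha||_1."""
    alpha = [0.0] * len(D)
    r = list(x)  # running residual x - D alpha
    for _ in range(n_sweeps):
        for i, d in enumerate(D):
            # Put atom i back so that r = x - sum_{j != i} alpha[j] d_j.
            r = [rk + alpha[i] * dk for rk, dk in zip(r, d)]
            # Closed-form minimiser over coordinate i (requires ||d_i||_2 = 1).
            alpha[i] = soft_threshold(dot(d, r), lam)
            r = [rk - alpha[i] * dk for rk, dk in zip(r, d)]
    return alpha


D = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # toy unit-norm atoms (assumed)
print(lasso_cd([3.0, 1.0], D, lam=0.5))
```

Keeping the residual up to date makes each coordinate update cost one inner product and two vector updates, independent of the number of atoms.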

![Example with the software SPAMS

http://www.di.ens.fr/willow/SPAMS/

>> I=double(imread('data/lena.eps'))/255;

>> %extract all patches of I

>> X=normalize(im2col(I,[8 8],'sliding'));

>> %load a dictionary of size 64 x 256

>> D=load('dict.mat');

>>

>> %set the sparsity parameter lambda to 0.15

>> param.lambda=0.15;

>> param.tol=1e-2;

>> param.itermax=200;

>> alpha=mexCD(X,D,param);

On an 8-core 2.83 GHz machine: 93000 signals processed per second!

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-28-320.jpg)

![first-order/proximal methods

They require efficiently solving the proximal operator

$$\min_{\alpha \in \mathbb{R}^p} \frac{1}{2}\|u - \alpha\|_2^2 + \lambda \psi(\alpha)$$

For the ℓ1-norm, this amounts to a soft-thresholding:

$$\alpha^\star[i] = \operatorname{sign}(u[i])(|u[i]| - \lambda)_+.$$

There exist accelerated versions based on Nesterov's optimal first-order method (gradient method with “extrapolation”) [Beck and Teboulle, 2009, Nesterov, 2007, 1983], suited for large-scale experiments.

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-30-320.jpg)
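As an illustration of how the proximal operator is used, here is a minimal sketch of the basic (non-accelerated) proximal gradient iteration for the Lasso, often called ISTA. Each step takes a gradient step on the data fitting term and then applies soft-thresholding. The step size uses the squared Frobenius norm of D as a simple upper bound on the Lipschitz constant, which is a choice made for this sketch. All names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def prox_l1(u, lam):
    """Proximal operator of lam * ||.||_1: coordinate-wise soft-thresholding."""
    return [(1.0 if ui > 0 else -1.0) * max(abs(ui) - lam, 0.0) for ui in u]


def ista(x, D, lam, n_iter=1000):
    """Proximal gradient for 0.5*||x - D alpha||^2 + lam*||alpha||_1."""
    # ||D||_F^2 upper-bounds the largest eigenvalue of D^T D (Lipschitz constant).
    lip = sum(dk * dk for d in D for dk in d)
    alpha = [0.0] * len(D)
    for _ in range(n_iter):
        r = [xk - sum(a * d[k] for a, d in zip(alpha, D)) for k, xk in enumerate(x)]
        # Gradient step on the smooth term; the gradient is -D^T r.
        u = [a + dot(d, r) / lip for a, d in zip(alpha, D)]
        alpha = prox_l1(u, lam / lip)
    return alpha


print(prox_l1([3.0, -0.2, -1.5], 1.0))
D = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # toy unit-norm atoms (assumed)
print(ista([3.0, 1.0], D, lam=0.5))
```

The accelerated variants cited above keep the same proximal step and add an extrapolation between successive iterates.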

![Optimization for Grouped Sparsity

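The proximal operator:

$$\min_{\alpha \in \mathbb{R}^p} \frac{1}{2}\|u - \alpha\|_2^2 + \lambda \sum_{g \in G} \|\alpha_g\|_q$$

For q = 2,

$$\alpha_g^\star = \frac{u_g}{\|u_g\|_2}(\|u_g\|_2 - \lambda)_+, \quad \forall g \in G$$

For q = ∞,

$$\alpha_g^\star = u_g - \Pi_{\|\cdot\|_1 \leq \lambda}[u_g], \quad \forall g \in G$$

These formulas generalize soft-thresholding to groups of variables. They are used in block-coordinate descent and proximal algorithms.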

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-32-320.jpg)
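The q = 2 case can be sketched as follows: each group is shrunk toward zero as a block, and set exactly to zero when its norm is below λ. The function name and the representation of groups as lists of indices are assumptions of this sketch, which also assumes non-overlapping groups.

```python
import math


def prox_group_l2(u, groups, lam):
    """Group soft-thresholding: prox of lam * sum_g ||alpha_g||_2.

    groups is a list of index lists forming a partition of range(len(u)).
    """
    alpha = [0.0] * len(u)
    for g in groups:
        norm = math.sqrt(sum(u[i] ** 2 for i in g))
        # Scale the whole group by (||u_g|| - lam)_+ / ||u_g||.
        scale = max(norm - lam, 0.0) / norm if norm > 0.0 else 0.0
        for i in g:
            alpha[i] = scale * u[i]
    return alpha


u = [3.0, 4.0, 0.3, 0.4]
print(prox_group_l2(u, [[0, 1], [2, 3]], lam=1.0))
```

In this toy example the first group has norm 5 and is scaled by 4/5, while the second group has norm 0.5 and is zeroed entirely. With groups of size one, the formula reduces to ordinary soft-thresholding.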

![Reweighted ℓ2

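Let us start from something simple:

$$a^2 - 2ab + b^2 \geq 0.$$

Then, for b > 0,

$$a \leq \frac{1}{2}\left(\frac{a^2}{b} + b\right) \quad \text{with equality iff } a = b,$$

and

$$\|\alpha\|_1 = \min_{\eta_j \geq 0} \frac{1}{2} \sum_{j=1}^{p} \left(\frac{\alpha[j]^2}{\eta_j} + \eta_j\right).$$

The formulation becomes

$$\min_{\alpha,\ \eta_j \geq \varepsilon} \frac{1}{2}\|x - D\alpha\|_2^2 + \frac{\lambda}{2} \sum_{j=1}^{p} \left(\frac{\alpha[j]^2}{\eta_j} + \eta_j\right).$$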

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-33-320.jpg)
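The variational form of the ℓ1 norm is easy to check numerically. The short sketch below, with hypothetical names and toy values, evaluates the quadratic bound for two choices of η: the minimizer η_j = |α[j]|, where the bound equals the ℓ1 norm, and an arbitrary η, where it is larger.

```python
def eta_bound(alpha, eta):
    """Quadratic upper bound 0.5 * sum_j (alpha[j]^2 / eta_j + eta_j) on ||alpha||_1."""
    return 0.5 * sum(a * a / e + e for a, e in zip(alpha, eta))


alpha = [3.0, -1.0, 0.5]
l1_norm = sum(abs(a) for a in alpha)

tight = eta_bound(alpha, [abs(a) for a in alpha])  # eta_j = |alpha[j]|
loose = eta_bound(alpha, [1.0, 1.0, 1.0])          # any other positive eta
print(l1_norm, tight, loose)
```

For fixed η the penalty is a weighted squared ℓ2 norm of α, which is what makes the reformulated problem quadratic in α. The constraint η_j ≥ ε in the formulation above keeps the division well defined when a coefficient reaches zero.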

![Optimization for Dictionary Learning

[Mairal, Bach, Ponce, and Sapiro, 2009a]

Classical formulation of dictionary learning

$$\min_{D \in C} f_n(D) = \min_{D \in C} \frac{1}{n} \sum_{i=1}^{n} l(x_i, D),$$

where

$$l(x, D) \triangleq \min_{\alpha \in \mathbb{R}^p} \frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_1.$$

Which formulation are we interested in?

$$\min_{D \in C} f(D) = E_x[l(x, D)] \approx \lim_{n \to +\infty} \frac{1}{n} \sum_{i=1}^{n} l(x_i, D)$$

[Bottou and Bousquet, 2008]: Online learning can handle potentially infinite or dynamic datasets and can be dramatically faster than batch algorithms.

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-36-320.jpg)
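To make the empirical cost $f_n(D)$ concrete, the sketch below evaluates it for a fixed dictionary by solving one Lasso problem per signal. The inner solver is a plain coordinate descent with a fixed number of sweeps, assuming unit-norm atoms. It only evaluates the cost and does not update D, so it is not the online algorithm of the cited paper. All names and values are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def lasso_value(x, D, lam, n_sweeps=200):
    """l(x, D): optimal value of 0.5*||x - D alpha||^2 + lam*||alpha||_1."""
    alpha = [0.0] * len(D)
    r = list(x)  # residual x - D alpha
    for _ in range(n_sweeps):
        for i, d in enumerate(D):
            # d_i^T (r + alpha[i] d_i), using ||d_i||_2 = 1.
            c = dot(d, r) + alpha[i]
            new = (1.0 if c > 0 else -1.0) * max(abs(c) - lam, 0.0)
            r = [rk - (new - alpha[i]) * dk for rk, dk in zip(r, d)]
            alpha[i] = new
    return 0.5 * dot(r, r) + lam * sum(abs(a) for a in alpha)


def empirical_cost(X, D, lam):
    """f_n(D): average of l(x_i, D) over the signals in X."""
    return sum(lasso_value(x, D, lam) for x in X) / len(X)


D = [[1.0, 0.0], [0.0, 1.0]]   # toy orthonormal dictionary (assumed)
X = [[3.0, 0.5], [0.0, 0.0]]   # two toy signals
print(empirical_cost(X, D, lam=1.0))
```

A batch method needs this full average, or its gradient, at every dictionary update, whereas an online method processes one signal (or a small batch) at a time.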

![Extension to NMF and sparse PCA

[Mairal, Bach, Ponce, and Sapiro, 2009b]

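NMF extension

$$\min_{\alpha \in \mathbb{R}^{p \times n},\ D \in C} \sum_{i=1}^{n} \frac{1}{2}\|x_i - D\alpha_i\|_2^2 \quad \text{s.t.} \quad \alpha_i \geq 0,\ D \geq 0.$$

SPCA extension

$$\min_{\alpha \in \mathbb{R}^{p \times n},\ D \in C'} \sum_{i=1}^{n} \frac{1}{2}\|x_i - D\alpha_i\|_2^2 + \lambda\|\alpha_i\|_1$$

$$C' \triangleq \{D \in \mathbb{R}^{m \times p} \text{ s.t. } \forall j,\ \|d_j\|_2^2 + \gamma\|d_j\|_1 \leq 1\}.$$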

](https://image.slidesharecdn.com/tutocvprpart3-110515231759-phpapp02/85/CVPR2010-Sparse-Coding-and-Dictionary-Learning-for-Image-Analysis-Part-3-Optimization-Techniques-for-Sparse-Coding-45-320.jpg)