The document describes the various phases of a compiler:

1. Lexical analysis breaks the source code into tokens.

2. Syntax analysis generates a parse tree from the tokens.

3. Semantic analysis checks for semantic correctness using the parse tree and symbol table.

4. Intermediate code generation produces machine-independent code.

5. Code optimization improves the intermediate code.

6. Code generation translates the optimized code into target machine code.

![8. When does a Dangling reference occur?

1. A dangling reference occurs when there is a reference to storage that has been

deallocated.

2. It is a logical error to use dangling references, Since the value of deallocated storage

is undefined according to the semantics of most languages.

9. What are the properties of optimizing Compiler?

THE PRINCIPAL SOURCES OF OPTIMIZATION

Semantics Preserving Transformations (Functions ) - Safeguards original program

meaning

Global Common Subexpressions

Copy Propagation

Dead-Code Elimination

Code Motion / Movement

Induction Variables and Reduction in Strength

OPTIMIZATION OF BASIC BLOCKS

The DAG Representation of Basic Blocks

Finding Local Common Subexpressions

Dead Code Elimination

The Use of Algebraic Identities

Representation of Array References

Pointer Assignments and Procedure Calls

Reassembling Basic Blocks from DAG's

PEEPHOLE OPTIMIZATION

Eliminating Redundant Loads and Stores

Eliminating Unreachable Code

Flow-of-Control Optimizations

Algebraic Simplification and Reduction in Strength

Use of Machine Idioms

LOOP OPTINIZATION

Code Motion while(i<max-1) {sum=sum+a[i]}

=> n= max-1; while(i<n) {sum=sum+a[i]}

Induction Variables and Strength Reduction: only 1 Induction Variable in loop, either

i++ or j=j+2, * by +

Loop invariant method

Loop unrolling

Loop fusion

10. Write the three address code sequence for the assignment statement

d:=(a-b)+(a-c)+(a-c).

Three address code sequence

t1=a-b

t2=a-c

t3=t1+t2

t4=t3+t2

d=t4](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-5-320.jpg)

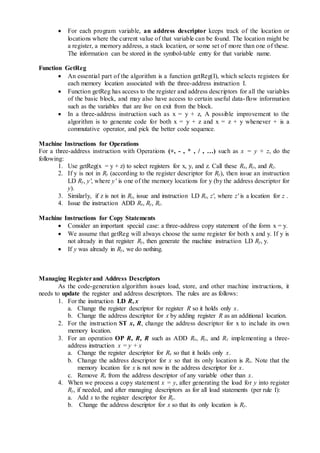

![Induction rules: Suppose r and s are regular expressions denoting languages L(r) and L(s),

respectively.

1. (r) | (s) is a regular expression denoting the language L(r) U L(s).

2. (r) (s) is a regular expression denoting the language L(r) L(s) .

3. (r) * is a regular expression denoting (L (r)) * .

4. (r) is a regular expression denoting L(r). i.e., Additional pairs of parentheses

around expressions.

Example: Let Σ = {a, b}.

Regular

expression

Language Meaning

a|b {a, b} Single ‘a’ or ‘b’

(a|b) (a|b) {aa, ab, ba, bb} All strings of length two over the alphabet Σ

a* { ε, a, aa, aaa, …} Consisting of all strings of zero or more a's

(a|b)* {ε, a, b, aa, ab, ba, bb,

aaa, …}

set of all strings consisting of zero or more

instances of a or b

a|a*b {a, b, ab, aab, aaab, …} String a and all strings consisting of zero or

more a's and ending in b

A language that can be defined by a regular expression is called a regular set. If two

regular expressions r and s denote the same regular set, we say they are equivalent and write r =

s. For instance, (a|b) = (b|a), (a|b)*= (a*b*)*, (b|a)*= (a|b)*, (a|b) (b|a) =aa|ab|ba|bb.

Extensions of Regular Expressions

Few notational extensions that were first incorporated into Unix utilities such as Lex that

are particularly useful in the specification lexical analyzers.

1. One or more instances: The unary, postfix operator + represents the positive closure

of a regular expression and its language. If r is a regular expression, then (r)+ denotes

the language (L(r))+. The two useful algebraic laws, r* = r+|ε and r+ = rr* = r*r.

2. Zero or one instance: The unary postfix operator ? means "zero or one occurrence."

That is, r? is equivalent to r|ε , L(r?) = L(r) U {ε}.

3. Character classes: A regular expression a1|a2|…|an, where the ai's are each symbols

of the alphabet, can be replaced by the shorthand [a1, a2, …an]. Thus, [abc] is

shorthand for a|b|c, and [a-z] is shorthand for a|b|…|z.

Example: Regular definition for C identifier

Letter_ [A-Z a-z_]

digit [0-9]

id letter_ ( letter_ | digit )*

Example: Regular definition unsigned integer

digit [0-9]

digits digit+

number digits ( . digits)? ( E [+ -]? digits )?

Note: The operators *, +, and ? has the same precedence and associativity.

Or](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-14-320.jpg)

![12 (b) (i) Write notes on Regular expression to NFA. Construct Regular expression to

NFA for the sentence (a|b)*a. (10)

Consider the regular expression r = (a|b)*a

Augmented regular expression r = (a|b)*◦a◦#

1 2 3 4

Syntax tree for (a|b)*a#

firstpos and lastpos for nodes in the syntax tree for (a|b)*a#

NODE n Followpos(n)

1 {1, 2, 3}

2 {1, 2, 3}

3 {4}

4 { }

The value of firstpos for the root of the tree is {1,2,3}, so this set is the start state of D.

all this set of states A. We must compute Dtran[A, a] and Dtran[A, b]. Among the positions of

A, 1 and 3 correspond to a, while 2 corresponds to b. Thus, Dtran[A, a] = followpos(1) U

followpos(3) = {1, 2,3,4}, and Dtran[A, b] = followpos(2) = {1,2,3}.

123 1234

b

a

b a

{2} b {2}

{1, 2} | {1,2}

{1} a {1}

{1,2,3}◦ {3}

{1, 2} * {1, 2} {3} a {3}

{4} # {4}

{1,2,3}◦ {4}

b

2

|

* a

3

a

1

◦ #

4

◦](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-15-320.jpg)

![(ii) Construct DFA to recognize the language (a/b)*ab (6)

Consider the regular expression r = (a|b)*ab

Augmented regular expression r = (a|b)*◦a◦b◦#

1 2 3 4 5

Syntax tree for (a|b)*a#

firstpos and lastpos for nodes in the syntax tree for (a|b)*ab#

NODE n Followpos(n)

1 {1, 2, 3}

2 {1, 2, 3}

3 {4}

4 {5 }

5 { }

The value of firstpos for the root of the tree is {1,2,3}, so this set is the start state of D.

all this set of states A. We must compute Dtran[A, a] and Dtran[A, b]. Among the positions of

A, 1 and 3 correspond to a, while 2 corresponds to b. Thus, Dtran[A, a] = followpos(1) U

followpos(3) = {1, 2,3,4}, and Dtran[A, b] = followpos(2) = {1,2,3}.

123

b

a

b a

12351234

b

a

{2} b {2}

{1, 2} | {1,2}

{1} a {1}

{1,2,3}◦ {3}

{1, 2} * {1, 2} {3} a {3}

{4} b {4}

{1,2,3}◦ {4} {5} # {5}

{1,2,3}◦ {5}

b

2

|

* a

3

a

1

◦ b

4

◦ #

5

◦](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-16-320.jpg)

![contains the string to be parsed, followed by the endmarker $. We reuse the symbol $ to mark

the bottom of the stack, which initially contains the start symbol of the grammar on top of $.

The parser is controlled by a program that considers X, the symbol on top of the stack,

and a, the current input symbol. If X is a nonterminal, the parser chooses an X-production by

consulting entry M[X, a] of the parsing table M. Otherwise, it checks for a match between the

terminal X and current input symbol a.

Figure: Model of a table-driven predictive parser

Algorithm 3.3 : Table-driven predictive parsing.

INPUT: A string w and a parsing table M for grammar G.

OUTPUT: If w is in L(G), a leftmost derivation of w; otherwise, an error indication.

METHOD: Initially, the parser is in a configuration with w$ in the input buffer and the start

symbol S of G on top of the stack, above $. The following procedure uses the predictive parsing

table M to produce a predictive parse for the input.

set ip to point to the first symbol of w;

set X to the top stack symbol;

while ( X ≠ $ ) { /* stack is not empty */

if ( X is a ) pop the stack and advance ip;

else if ( X is a terminal ) error();

else if ( M[X, a] is an error entry ) error();

else if ( M[X,a] = X Y1Y2…Yk) {

output the production X Y1Y2…Yk;

pop the stack;

push YkYk-1…Y1 onto the stack, with Yl on top;

}

set X to the top stack symbol;

}

(ii) Explain Context free grammar with examples. (10)

The Formal Definition of a Context-Free Grammar

A context-free grammar G is defined by the 4-tuple: G= (V, T, P S) where

1. V is a finite set of non-terminals (variable).

2. T is a finite set of terminals.

3. P is a finite set of production rules of the form Aα. Where A is nonterminal

and α is string of terminals and/or nonterminals. P is a relation

from V to (V∪T)*.

4. S is the start symbol (variable S∈V).

Example : The following grammar defines simple arithmetic expressions. In this grammar, the

terminal symbols are id + - * / ( ). The nonterminal symbols are expression, term and factor, and

expression is the start symbol.

Input

Stack

Predictive

Parsing

Program

Parsing

Table M

OutputX

Y

Z

$

a + b $](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-18-320.jpg)

![and F. For each E, T, and F-production. the semantic rule computes the value of attribute val for

the nonterminal on the left side from the values of val for the nonterminals on the right side.

Production Semantic rule

L → En Print(E.val)

E → E1 + T E.val := E1.val + T.val

E → T E.val := T.val

T → T1 * F T.val := T1.val * F.val

T → F T.val := F.val

F → (E) F.val := E.val

F → digit F.val := digit.lexval

Example : An annotated parse tree for the input string 3 * 5 + 4 n,

Figure: Annotated parse tree for 3 * 5 + 4 n

(ii) Discuss specification of a simple type checker. (8)

Specification of a simple type checker for a simple language in which the type of each

identifier must be declared before the identifier is used. The type checker is a translation scheme

that synthesizes the type of each expression from the types of its subexpressions. The type

checker can handles arrays, pointers, statements, and functions.

Specification of a simple type checker includes the following:

A Simple Language

Type Checking of Expressions

Type Checking of Statements

Type Checking of Functions

A Simple Language

The following grammar generates programs, represented by the nonterrninal P, consisting of a

sequence of declarations D followed by a single expression E.

P D ; E

D D ; D | id : T

T char | integer | array[num] of T | ↑ T

A translation scheme for above rules:](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-22-320.jpg)

![P D ; E

D D ; D

D id : T {addtype(id.entry, T.type}

T char { T.type := char}

T integer { T.type := integer}

T ↑ T1 { T.type := pointer(T1.type)}

T array[num] of T1 { T.type := array(1..num.val, T1.type)}

Type Checking of Expressions

The synthesized attribute type for E gives the type expression assigned by the type

system to the expression generated by E. The following semantic rules say that constants

represented by the tokens literal and num have type char and integer, respectively:

Rule Semantic Rule

E literal { E.type := char }

E num { E.type := integer}

A function lookup(e) is used to fetch the type saved in the symbol-table entry pointed to

by e. When an identifier appears in an expression, its declared type is fetched and assigned to the

attribute type;

E id { E.type := lookup(id.entry}

The expression formed by applying the mod operator to two subexpressions of type

integer has type integer; otherwise, its type is type_error. The rule is

E E1 mod E2 { E.type := if E1.type = integer and E2.type = integer

then integer

else typr_error}

In an array reference E1 [E2], the index expression E2 must have type integer, in which

case the result is the dement type t obtained from the type array(s. t ) of E1; we make no use of

the index set s of the array.

E E1 [ E2 ] { E.type := if E2.type = integer and E1.type = array(s,t)

then t

else typr_error}

Within expressions, the postfix operator ↑ yields the object pointed to by its operand. The

type of E ↑ is the type ↑ of the object pointed to by the pointer E:

E E1 ↑ { E.type := if E1.type = ponter(t) then t

else typr_error}

Type Checking of Statements

Since language constructs like statements typically do not have values, the special basic

type void can be assigned to them. If an error is detected within a statement, then the type

type_error assigned.

The assignment statement, conditional statement, and while statements are considered for

the type Checking. The Sequences of statements are separated by semicolons.

S id := E {S.type := if id.type = E,type then void

else type_error}

E if E then S1 { S.type := if E.type = Boolean then S1.type

else type_error}

E while E do S1 { S.type := if E.type = Boolean then S1.type

else type_error}

E S1 ; S2 { S.type := if S1.type = void and S2.type = void then void

else type_error}](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-23-320.jpg)

![ As a program is compiled, each of these high level data structure accesses expands into a

number of low-level pointer arithmetic operations, such as the computation of the

location of the (i, j)th element of a matrix A.

Accesses to the same data structure often share many common low-level operations.

Programmers are not aware of these low-level operations and cannot eliminate the

redundancies themselves.

2 A Running Example: Quicksort

Consider a fragment of a sorting program called quicksort to illustrate several important

code improving transformations. The C program for quicksort is given below

void quicksort(int m, int n)

/* recursively sorts a[m] through a[n] */

{

int i, j;

int v, x;

if (n <= m) return;

/* fragment begins here */

i=m-1; j=n; v=a[n];

while(1) {

do i=i+1; while (a[i] < v);

do j = j-1; while (a[j] > v);

i f (i >= j) break;

x=a[i]; a[i]=a[j]; a[j]=x; /* swap a[i], a[j] */

}

x=a[i]; a[i]=a[n]; a[n]=x; /* swap a[i], a[n] */

/* fragment ends here */

quicksort (m, j); quicksort (i+1, n) ;

}

Figure 5.1: C code for quicksort

Intermediate code for the marked fragment of the program in Figure 5.1 is shown in

Figure 5.2. In this example we assume that integers occupy four bytes. The assignment x = a[i]

is translated into the two three address statements t6=4*i and x=a[t6] as shown in steps (14) and

(15) of Figure. 5.2. Similarly, a[j] = x becomes t10=4*j and a[t10]=x in steps (20) and (21).

Figure 5.2: Three-address code for fragment in Figure.5.1](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-27-320.jpg)

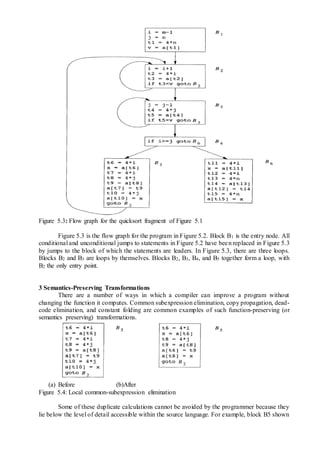

![in Figure 5.4(a) recalculates 4 * i and 4 *j, although none of these calculations were requested

explicitly by the programmer.

4 Global Common Subexpressions

An occurrence of an expression E is called a common subexpression if E was previously

computed and the values of the variables in E have not changed since the previous computation.

We avoid re-computing E if we can use its previously computed value; that is, the variable x to

which the previous computation of E was assigned has not changed in the interim.

The assignments to t7 and t10 in Figure 5.4(a) compute the common subexpressions 4 *

i and 4 * j, respectively. These steps have been eliminated in Figure 5.4(b), which uses t6

instead of t7 and t8 instead of t10.

Figure 9.5 shows the result of eliminating both global and local common subexpressions

from blocks B5 and B6 in the flow graph of Figure 5.3. We first discuss the transformation of B5

and then mention some subtleties involving arrays.

After local common subexpressions are eliminated, B5 still evaluates 4*i and 4 * j, as

shown in Figure 5.4(b). Both are common subexpressions; in particular, the three statements

t8=4*j

t9=a[t8]

a[t8]=x

in B5 can be replaced by

t9=a[t4]

a[t4]=x

using t4 computed in block B3. In Figure 5.5, observe that as control passes from the evaluation

of 4 * j in B3 to B3, there is no change to j and no change to t4, so t4 can be used if 4 * j is

needed.

Another common subexpression comes to light in B5 after t4 replaces t8. The new

expression a[t4] corresponds to the value of a[j] at the source level. Not only does j retain its

value as control leaves B3 and then enters B5, but a[j], a value computed into a temporary t5,

does too, because there are no assignments to elements of the array a in the interim. The

statements

t9=a[t4]

a[t6]=t9

in B5 therefore can be replaced by

a[t6]=t5

Analogously, the value assigned to x in block B5 of Figure 5.4(b) is seen to be the same

as the value assigned to t3 in block B2. Block B5 in Figure 5.5 is the result of eliminating

common subexpressions corresponding to the values of the source level expressions a[i] and a[j]

from B5 in Figure 5.4(b). A similar series of transformations has been done to B6 in Figure 5.5.

The expression a[tl] in blocks B1 and B6 of Figure 5.5 is not considered a common

subexpression, although tl can be used in both places. After control leaves B1 and before it

reaches B6, it can go through B5, where there are assignments to a. Hence, a[tl] may not have the

same value on reaching B6 as it did on leaving B1, and it is not safe to treat a[tl] as a common

subexpression.](https://image.slidesharecdn.com/cs6660compilerdesignmayjune2016-161123062143/85/Cs6660-compiler-design-may-june-2016-Answer-Key-29-320.jpg)