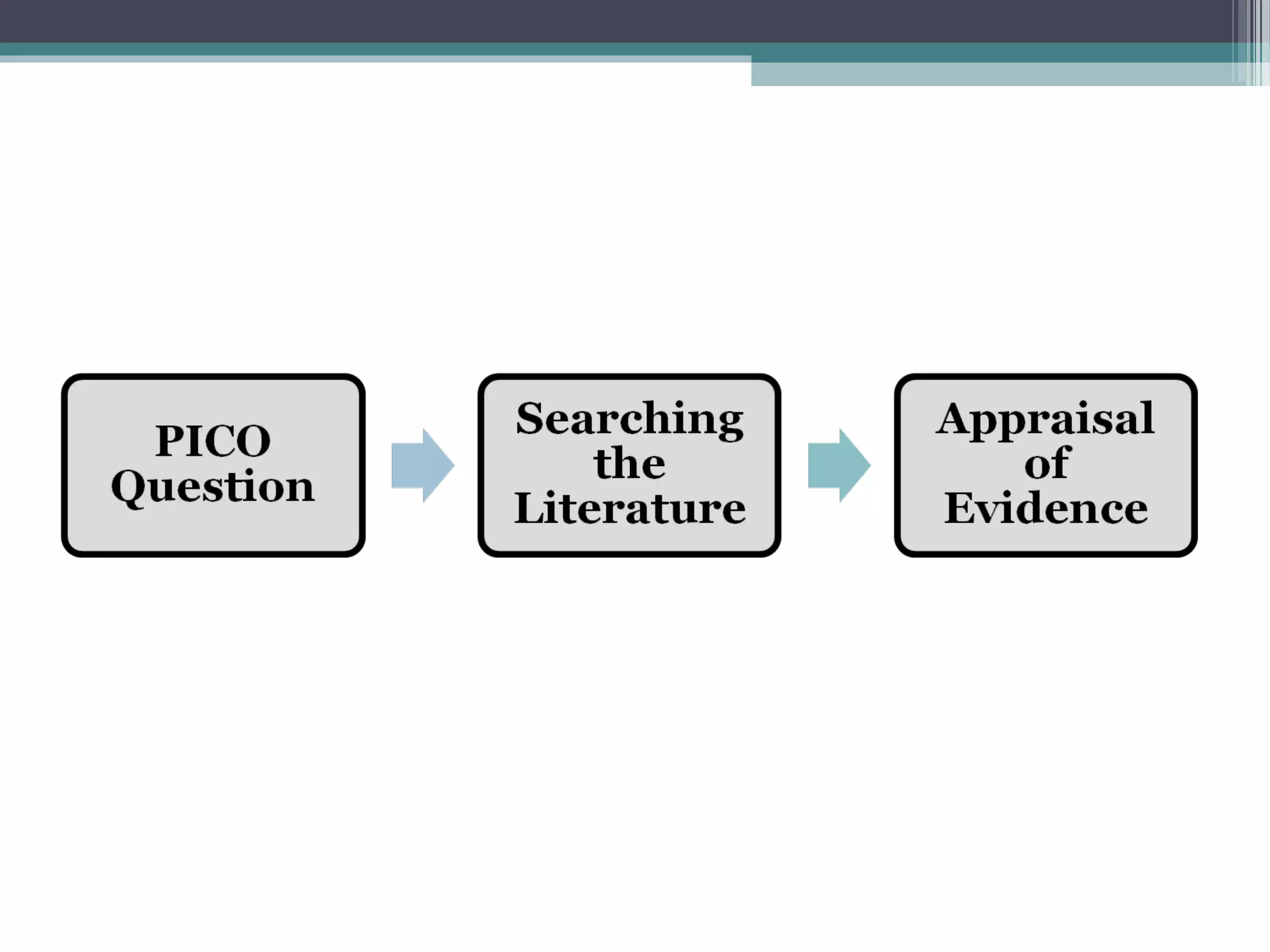

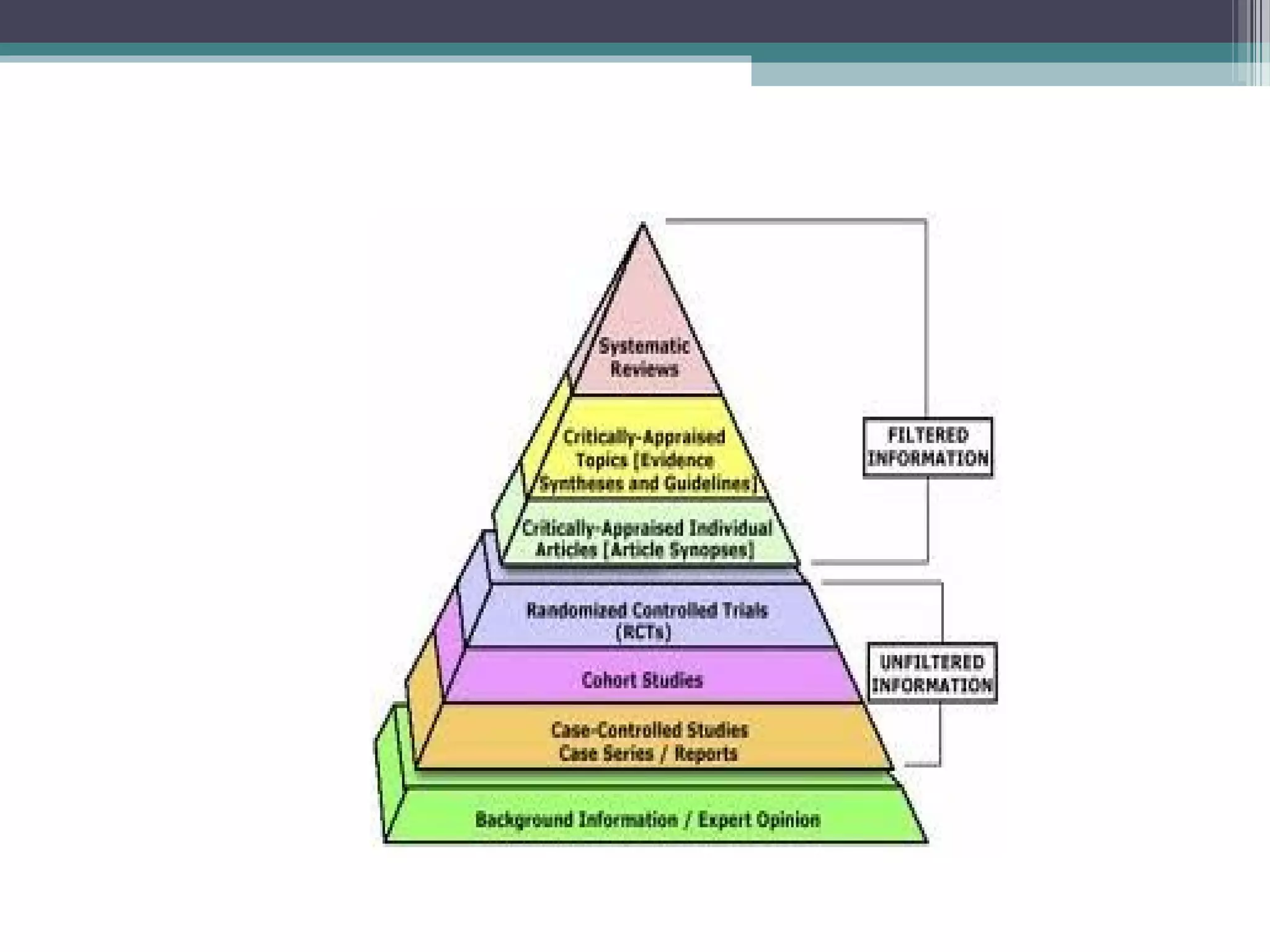

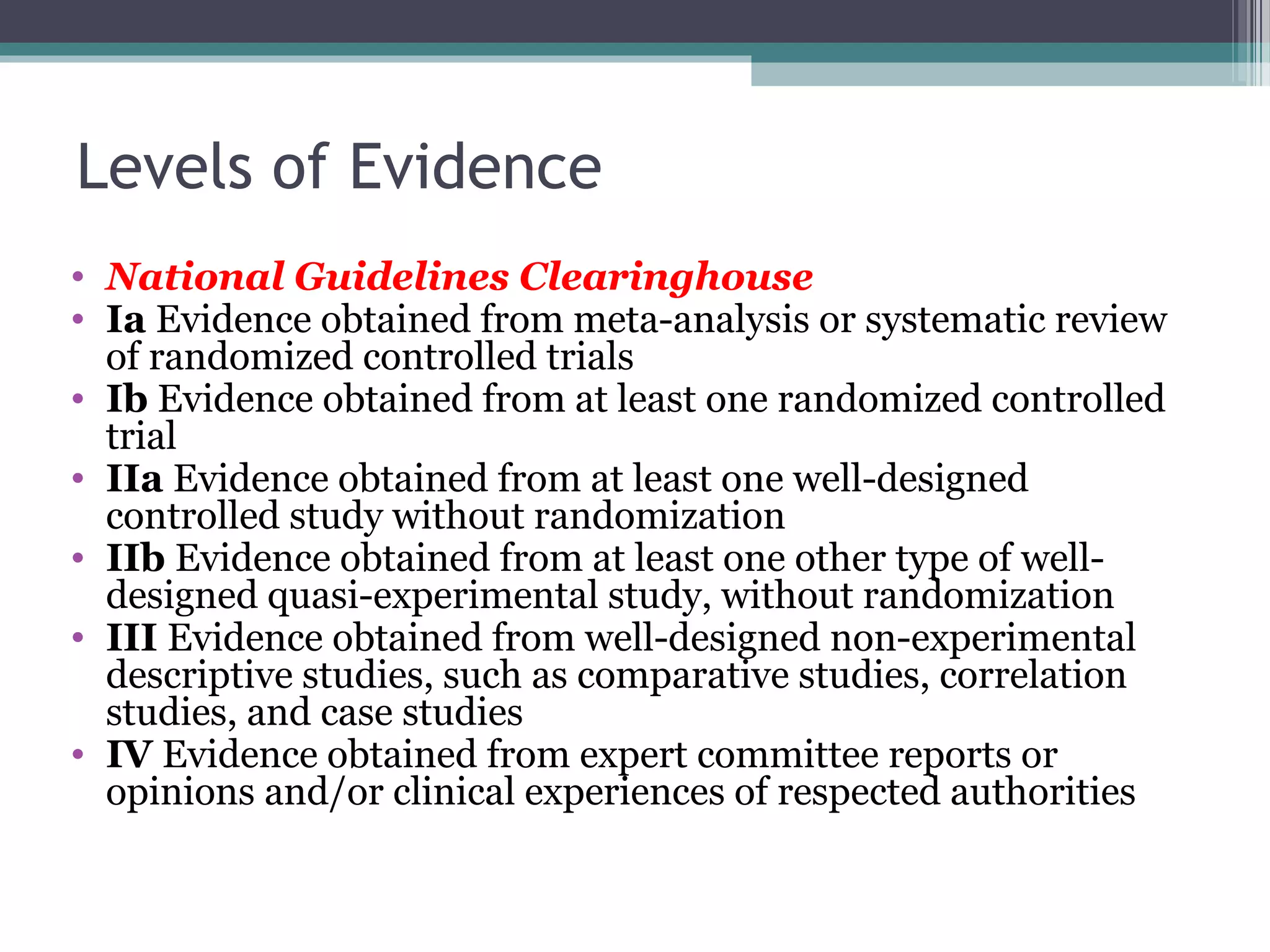

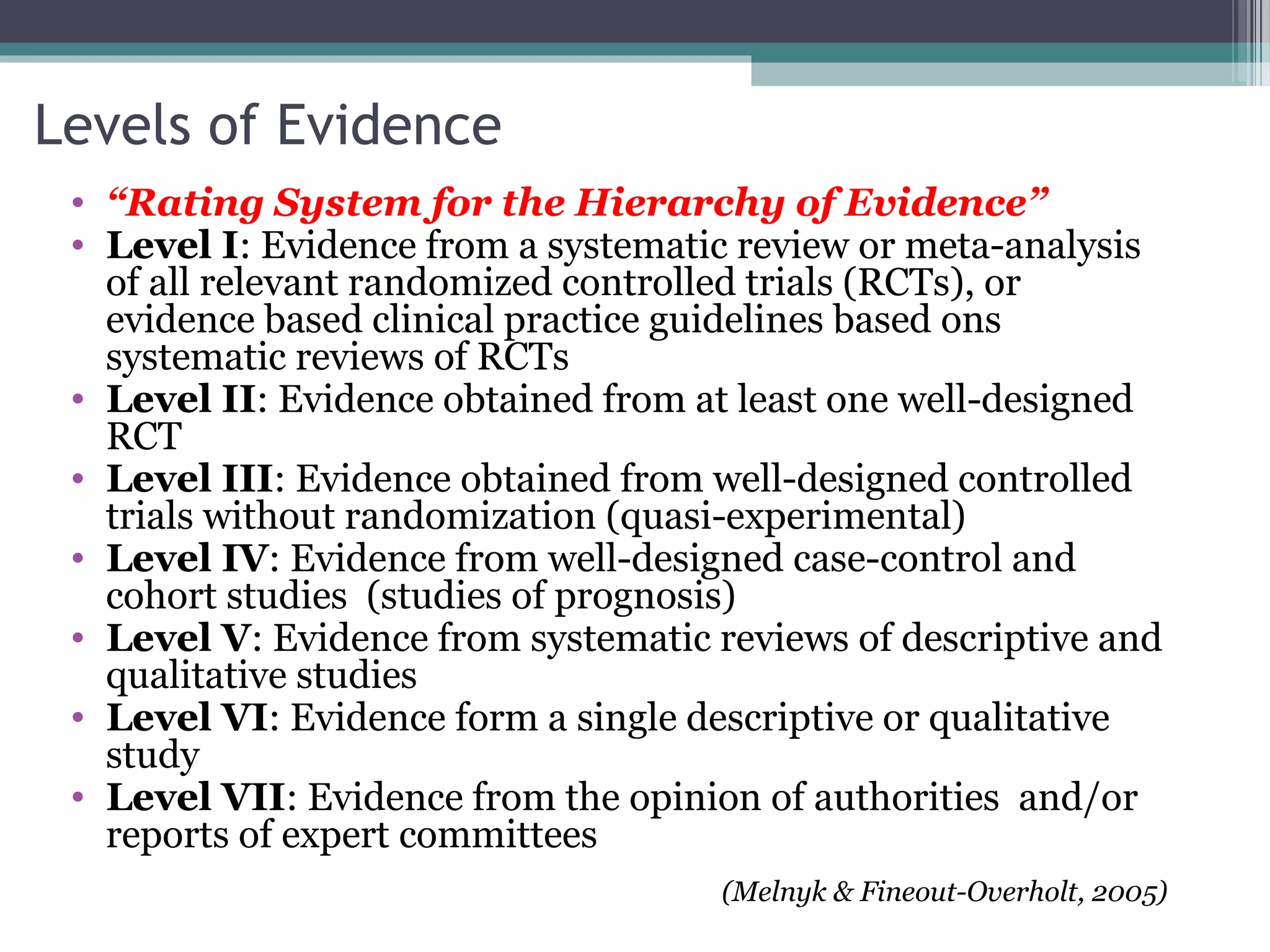

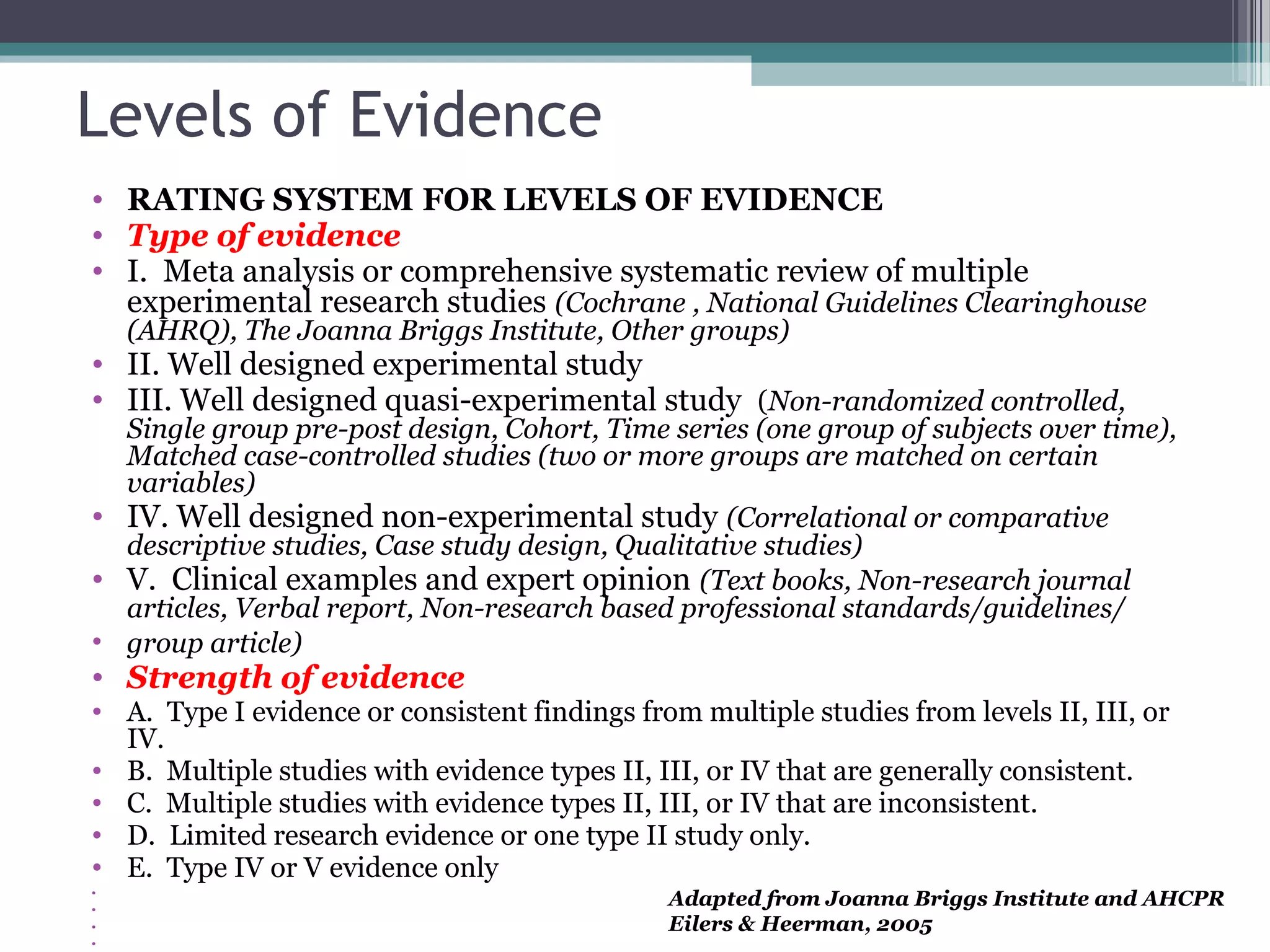

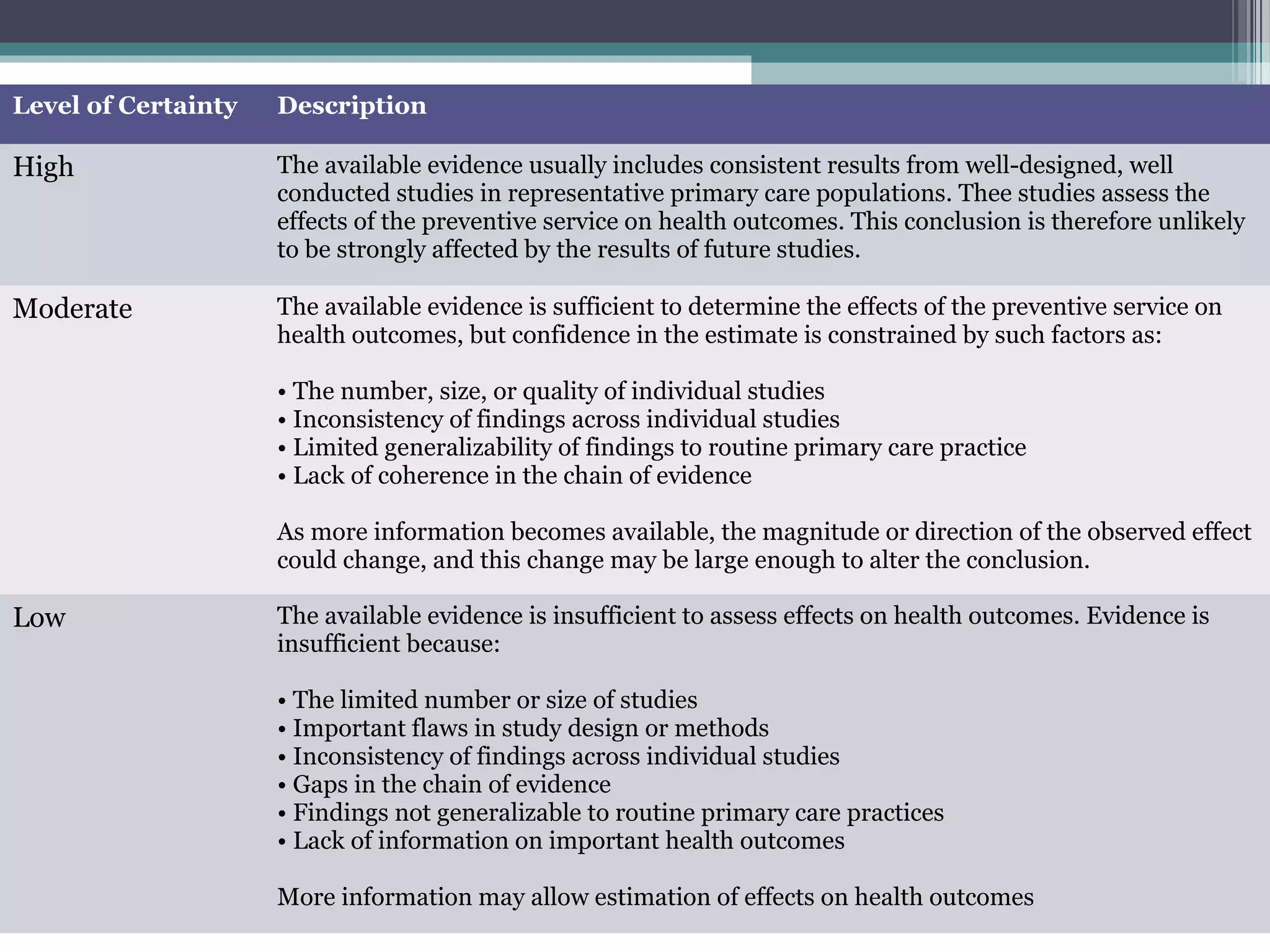

The document discusses the critical appraisal of evidence-based findings. It defines critical appraisal as assessing the strength and quality of scientific evidence to judge how applicable that evidence is to healthcare decision making. The strength of evidence depends on factors such as the quality, quantity, and consistency of the underlying research. Evidence is ranked into levels according to research design, with systematic reviews and randomized controlled trials at the highest levels. Evaluating the quality and applicability of evidence involves asking whether the results are valid and whether they can be applied to the target population. Statistical evaluation, such as examining effect sizes, can also aid in appraising evidence.
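As an illustration of the effect-size step, the sketch below computes Cohen's d, one common standardized effect size for comparing two group means. The source does not say which effect-size measure it uses, so the choice of Cohen's d, the function names, and the sample data are all assumptions for illustration only. The size labels follow Cohen's conventional rules of thumb (0.2 small, 0.5 medium, 0.8 large), which are rough guides and should be interpreted in clinical context.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Cohen's d: difference in group means divided by the pooled standard deviation.

    Assumption: two independent groups; stdev() uses the n - 1 denominator.
    """
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_sd = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


def interpret(d):
    """Label |d| using Cohen's conventional benchmarks (rules of thumb only)."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


# Hypothetical outcome scores for two groups (illustrative data, not from the source).
treatment = [5, 6, 7, 8, 9]
control = [3, 4, 5, 6, 7]
d = cohens_d(treatment, control)
print(f"d = {d:.2f} ({interpret(d)})")
```

With these made-up numbers the means differ by 2 and the pooled standard deviation is about 1.58, giving d of roughly 1.26, which the benchmarks would label a large effect. A large effect size alone does not establish that a study is valid; it complements, rather than replaces, the appraisal of design and applicability.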