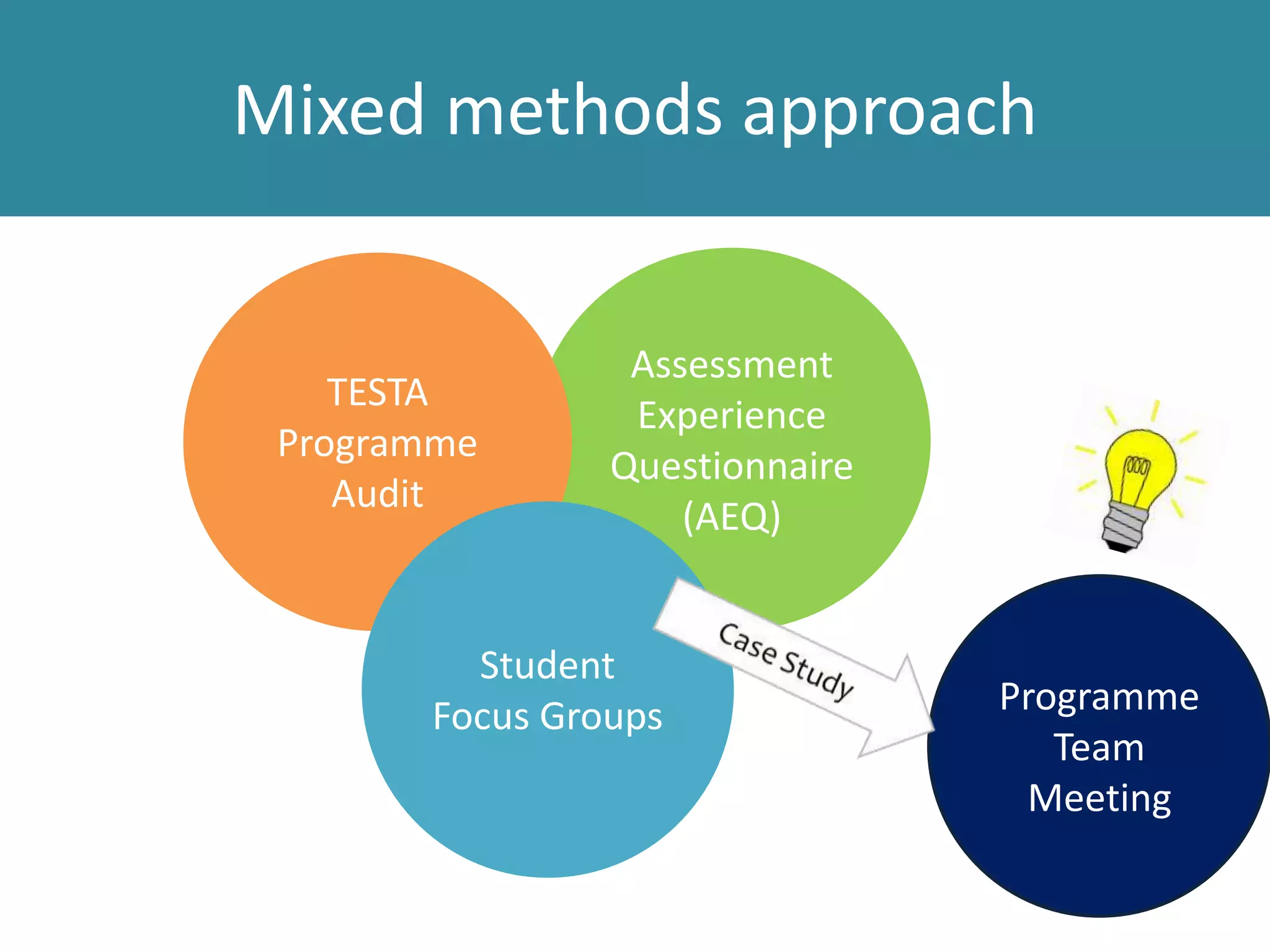

This document summarizes a presentation given by Professor Tansy Jessop on assessment and feedback in medical education. The presentation covered several key topics:

1) The importance of assessment and feedback in driving student learning. Formative assessment and feedback, in particular, are critical for learning but are often underutilized.

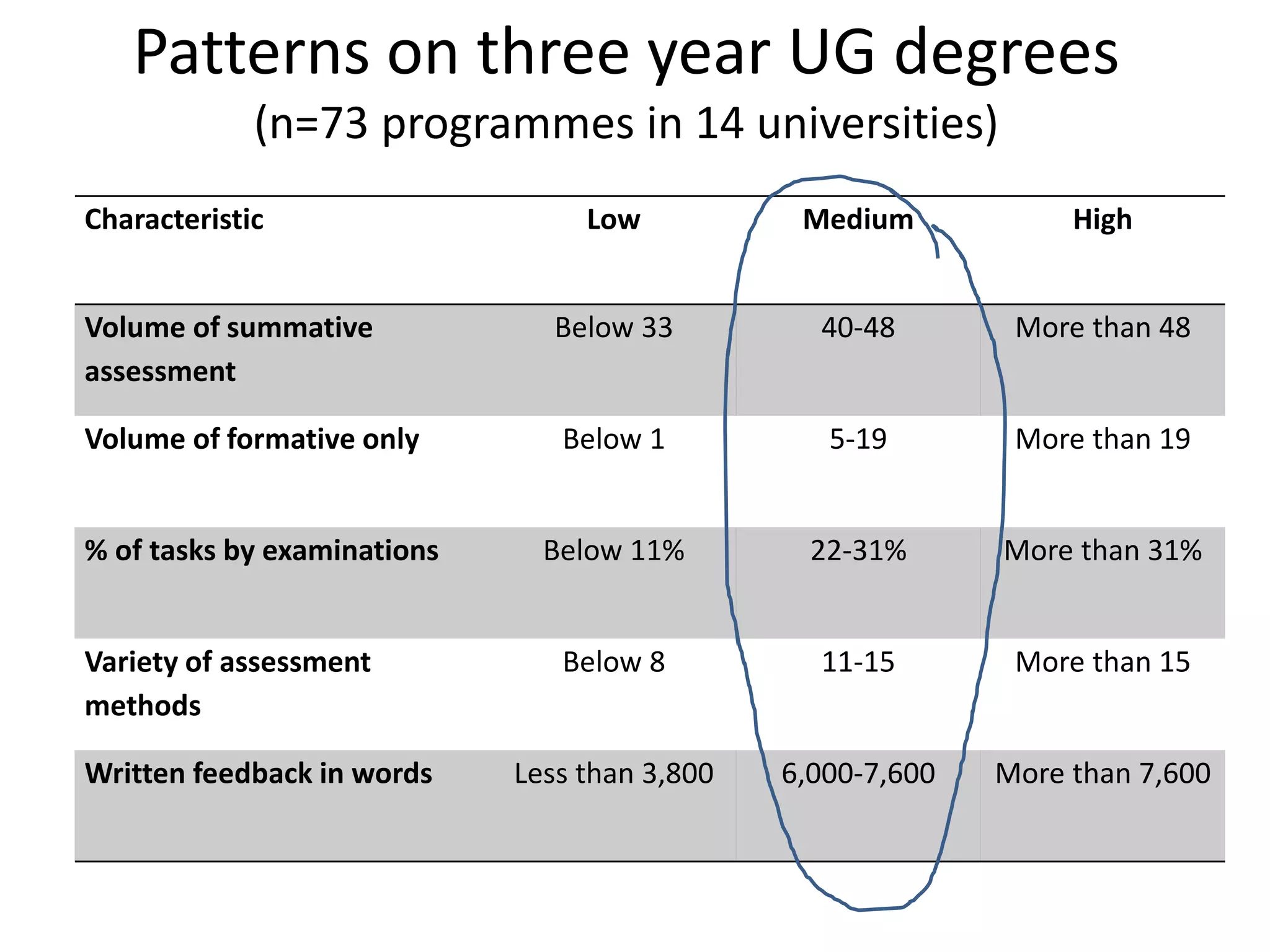

2) Research showing wide variation in assessment patterns across degree programs: some programs rely heavily on high-stakes summative assessment and offer little formative assessment. Such patterns can encourage surface rather than deep learning.

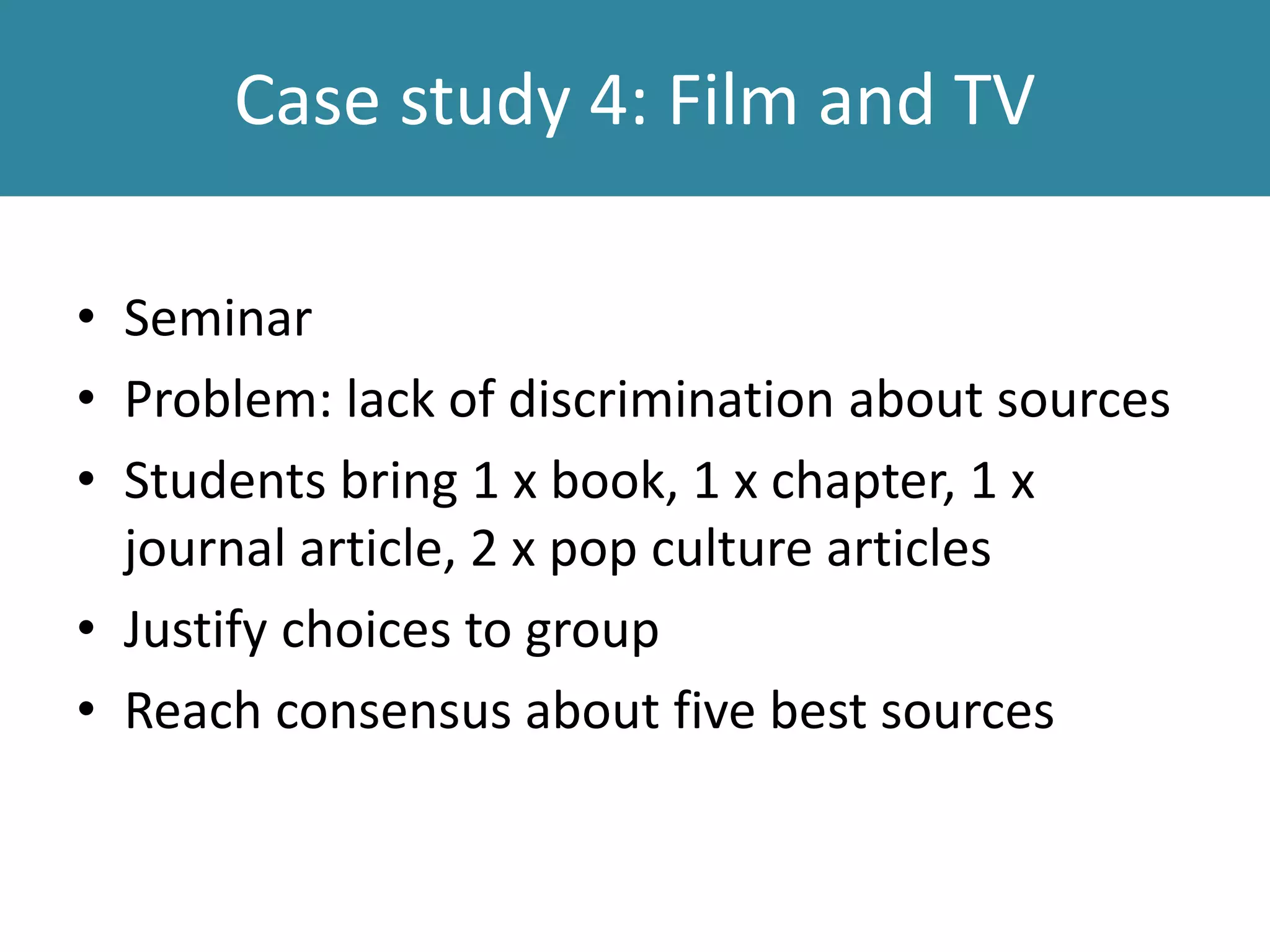

3) Case studies of programs that have successfully integrated formative assessment, emphasizing principles like reducing summative assessment, linking formative and summative tasks, and using low