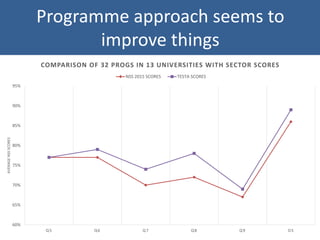

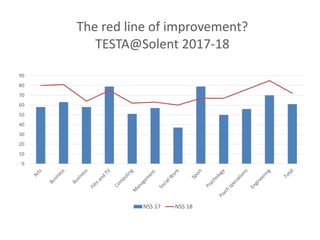

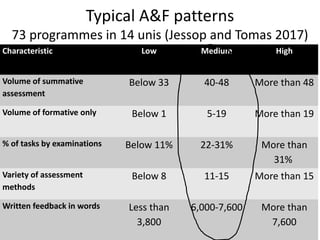

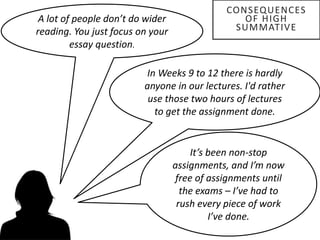

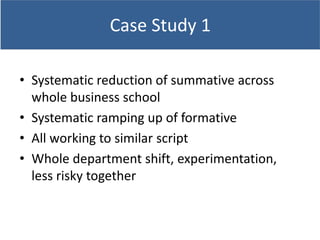

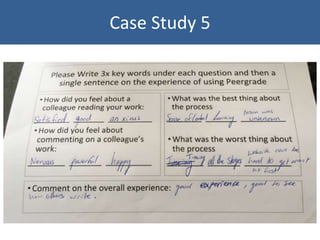

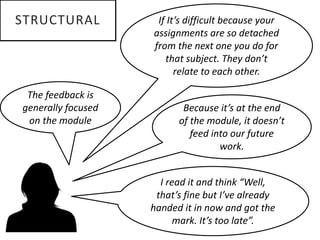

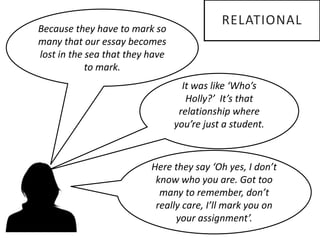

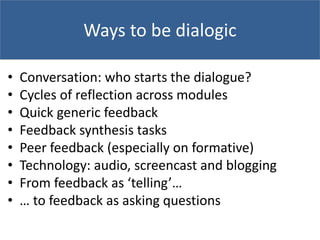

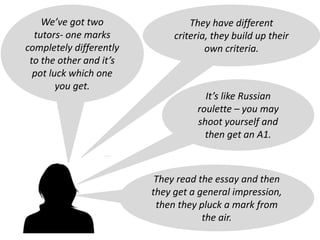

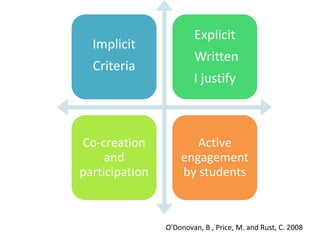

This document summarizes an interactive masterclass on the TESTA (Transforming the Experience of Students Through Assessment) programme approach. The masterclass sets out the rationale for taking a programme-level approach to assessment, including how it addresses modular problems, curriculum problems, and student alienation. The data-gathering methods discussed are a TESTA programme audit, an Assessment Experience Questionnaire, and student focus groups. Key themes covered are high summative assessment loads, feedback that is disconnected from one assignment to the next, and student confusion about assessment goals and standards. Strategies presented to improve assessment include increasing formative assessment, providing more dialogic feedback, and helping students internalize assessment criteria.