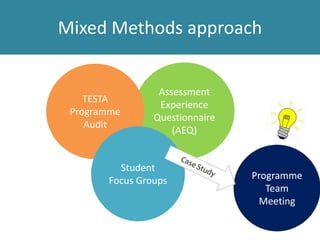

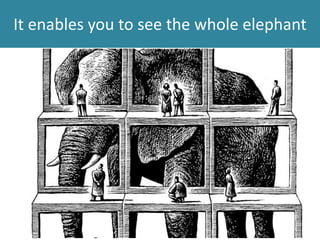

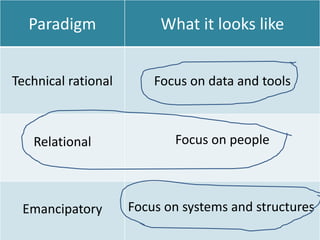

1. The document discusses TESTA (Transforming the Experience of Students Through Assessment), a mixed-methods approach to understanding assessment practices and their impact on student learning.

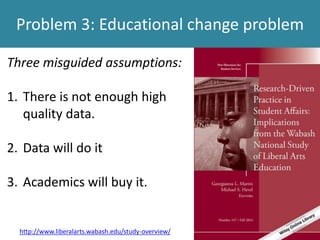

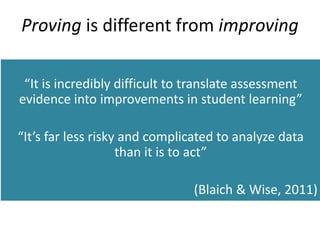

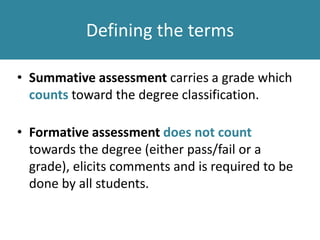

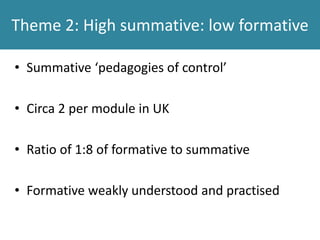

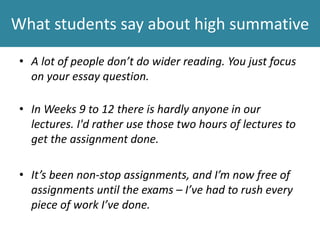

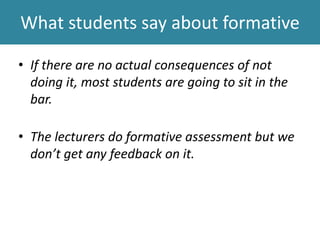

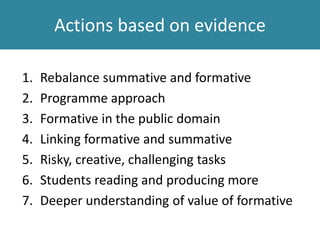

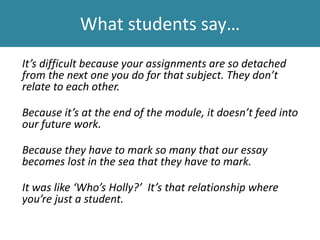

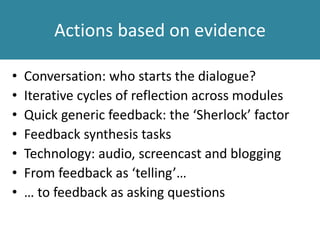

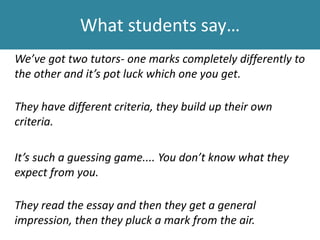

2. TESTA addresses three common problems: variations in assessment that create uncertainty about quality, over-reliance on high-stakes summative assessment at the expense of formative assessment, and a disconnection between feedback and future work.

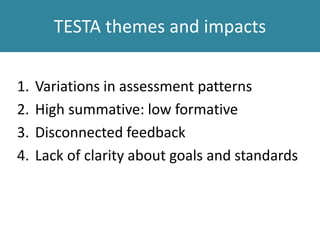

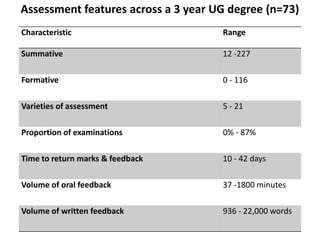

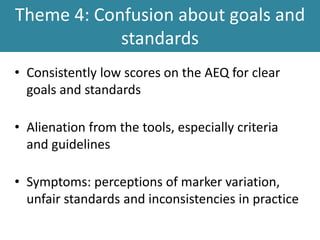

3. The TESTA data highlight four key themes: large variations in assessment patterns between programmes; high levels of summative assessment and low levels of formative assessment; disconnected feedback that does not feed into future work; and student confusion about learning goals and standards, caused by inconsistent practices.