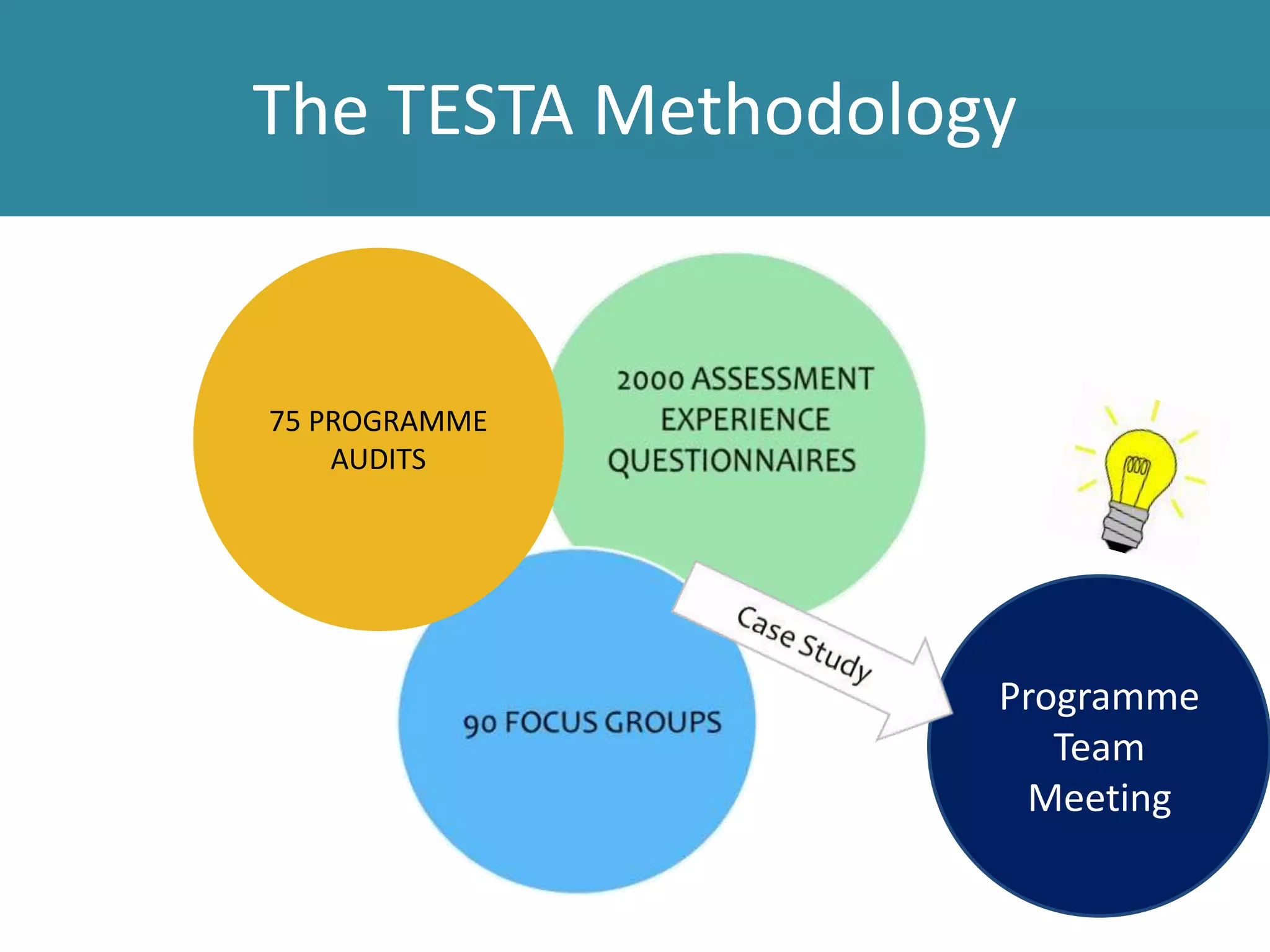

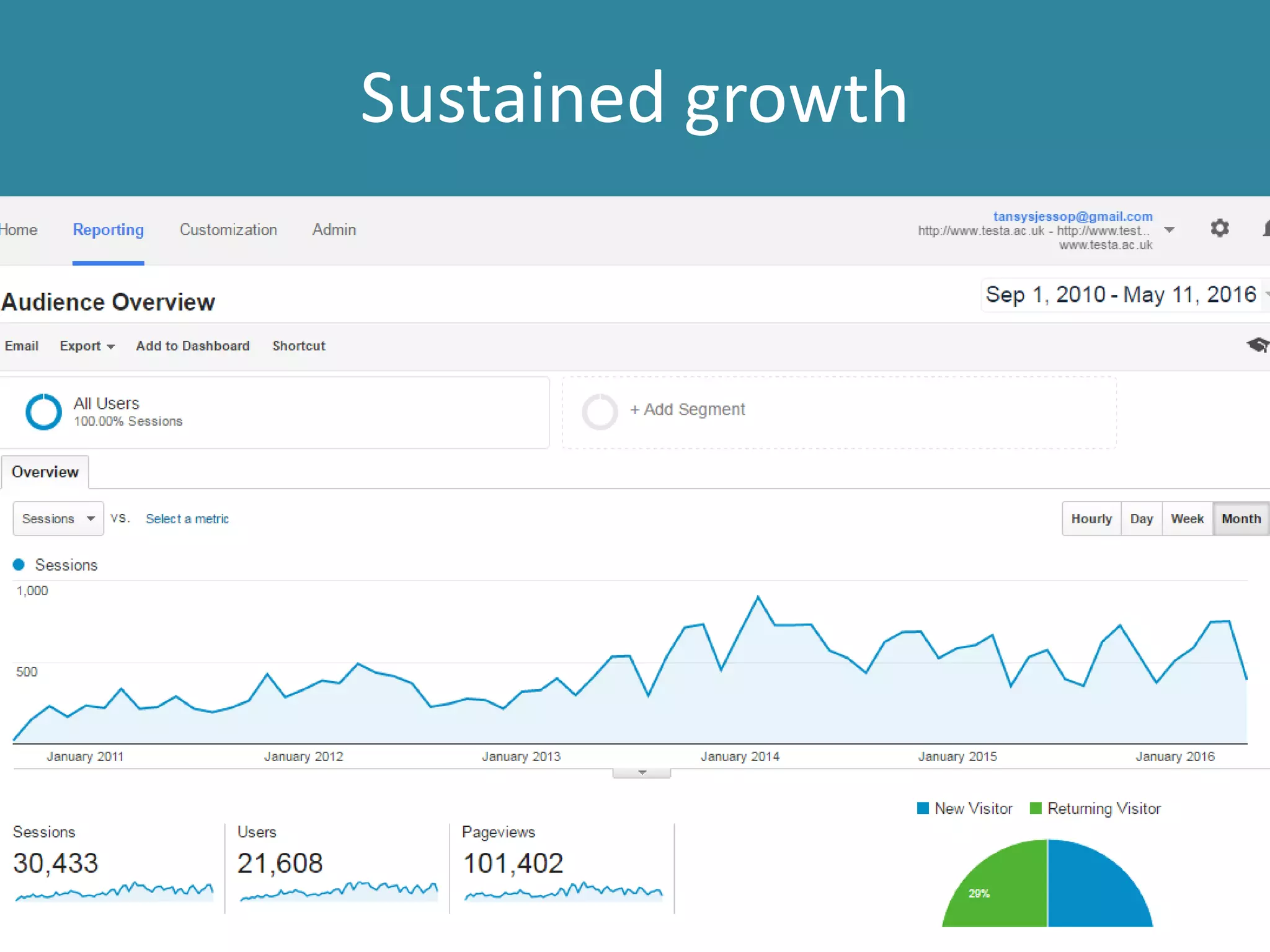

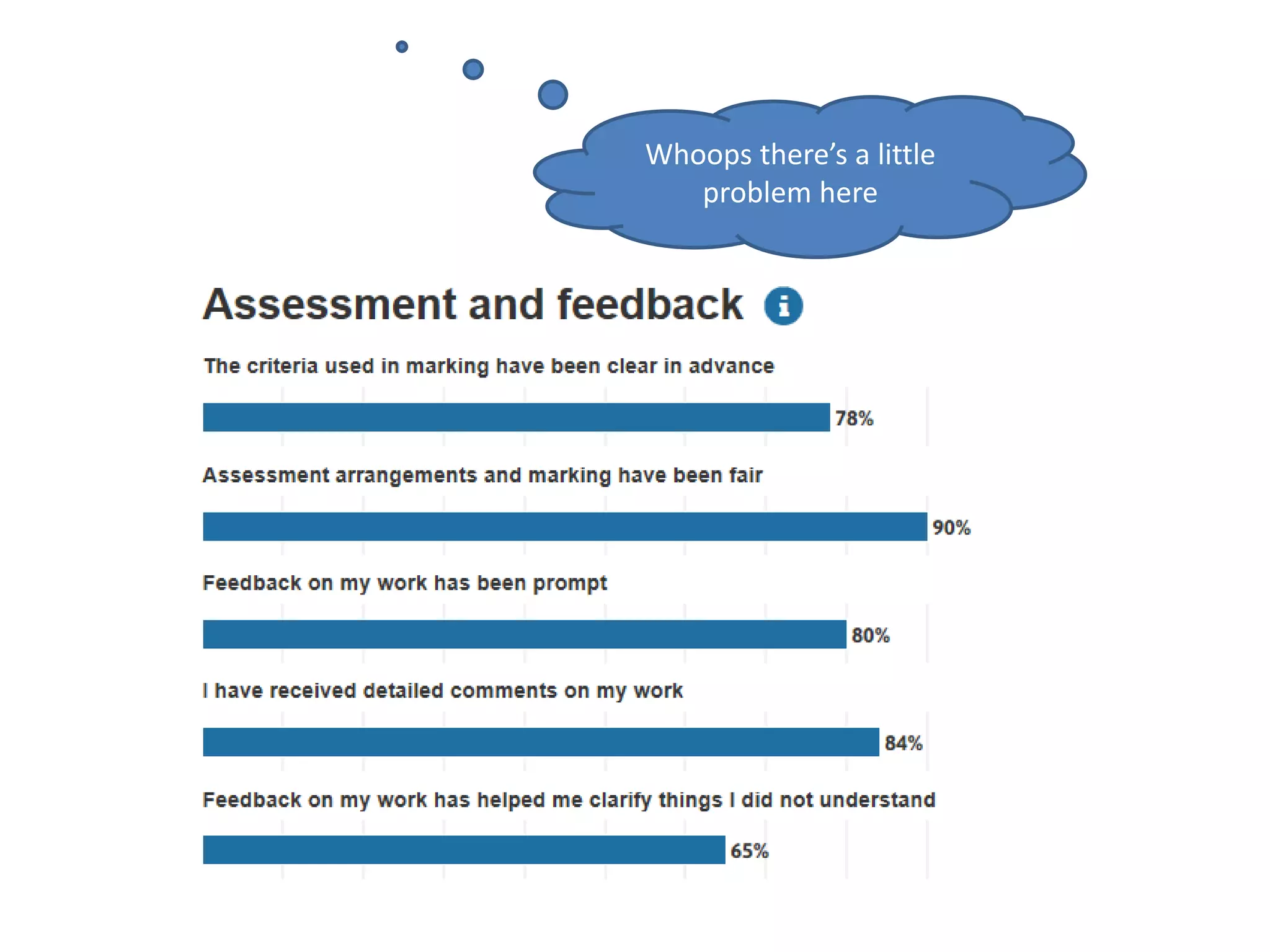

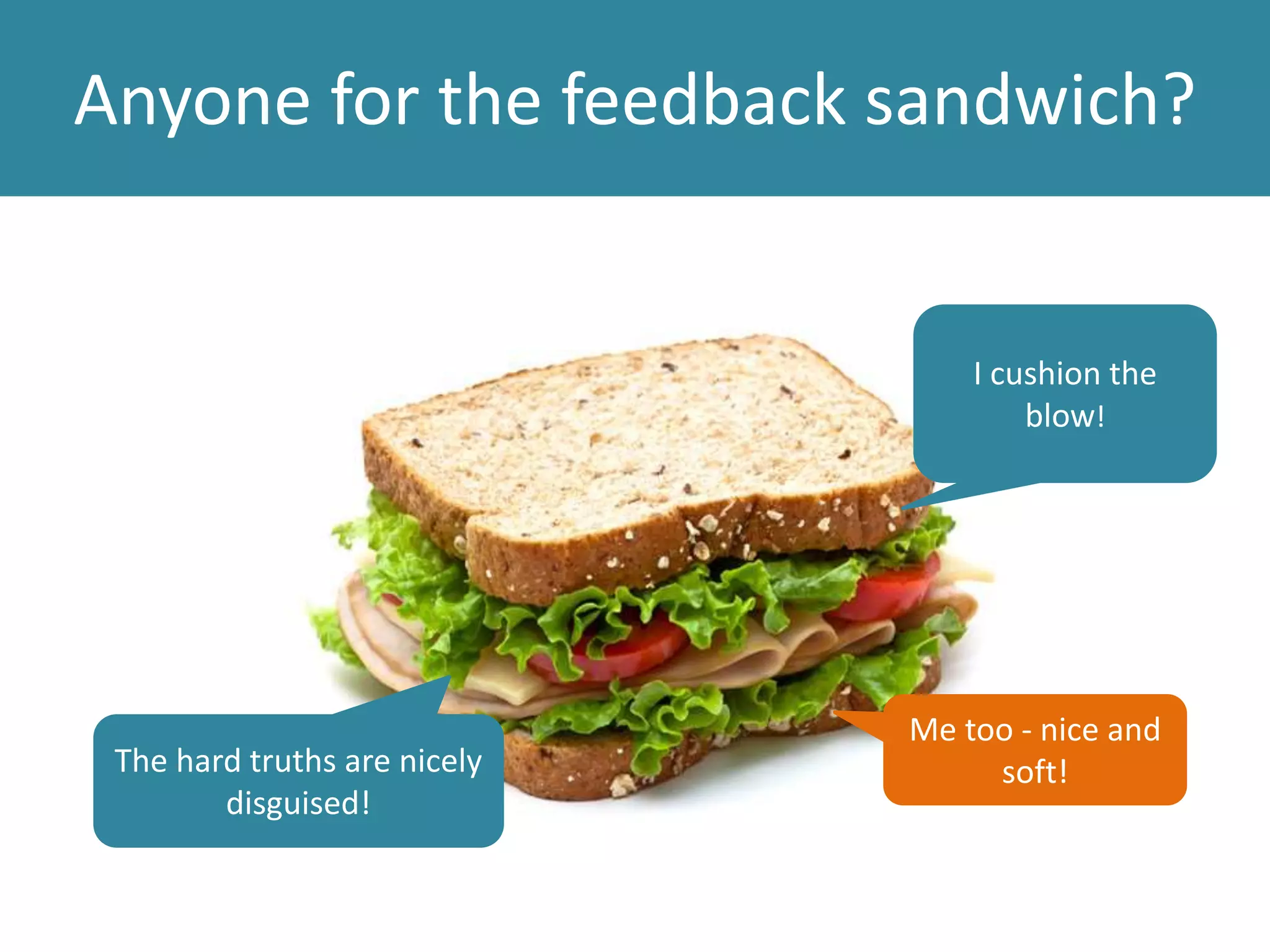

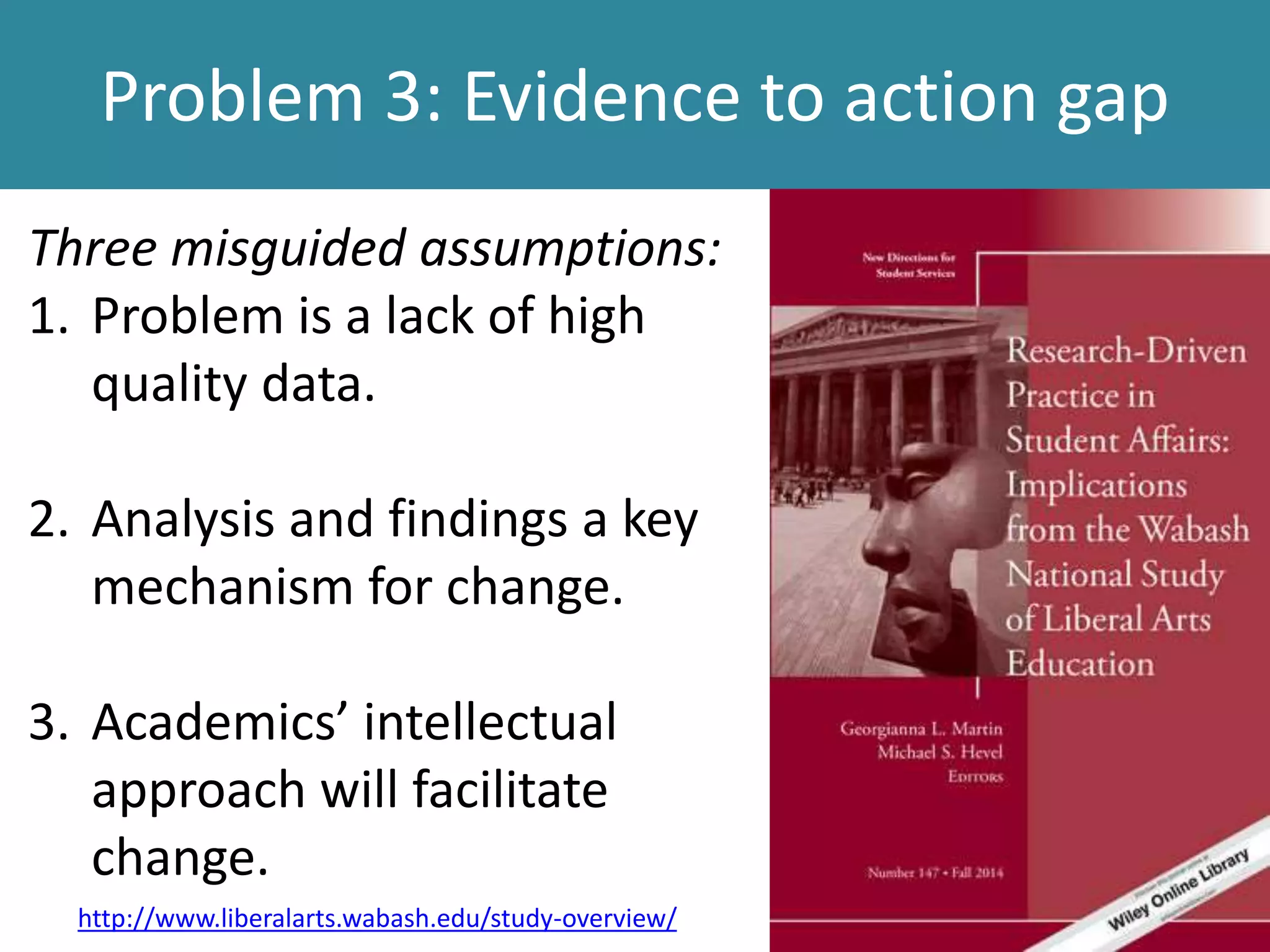

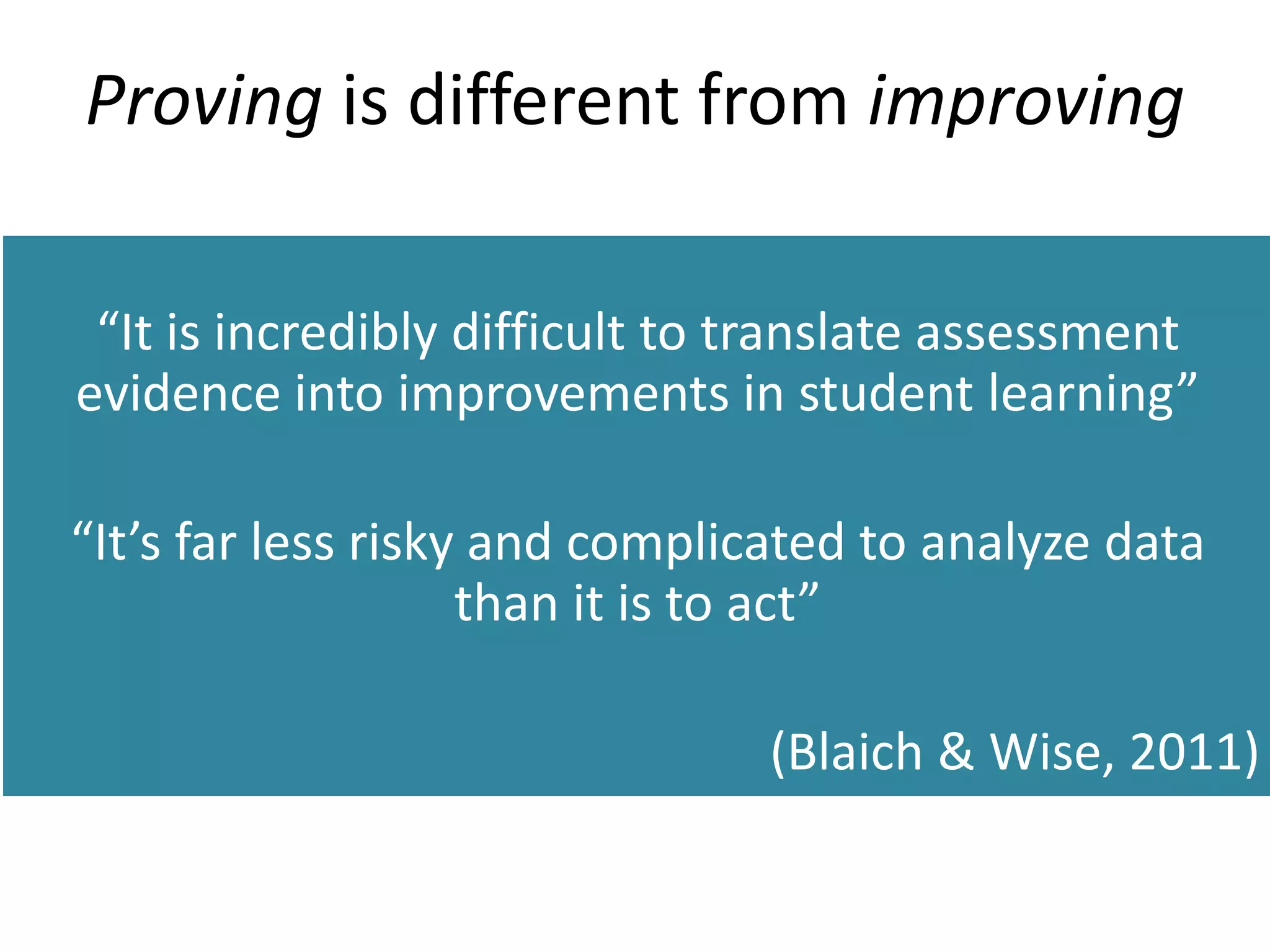

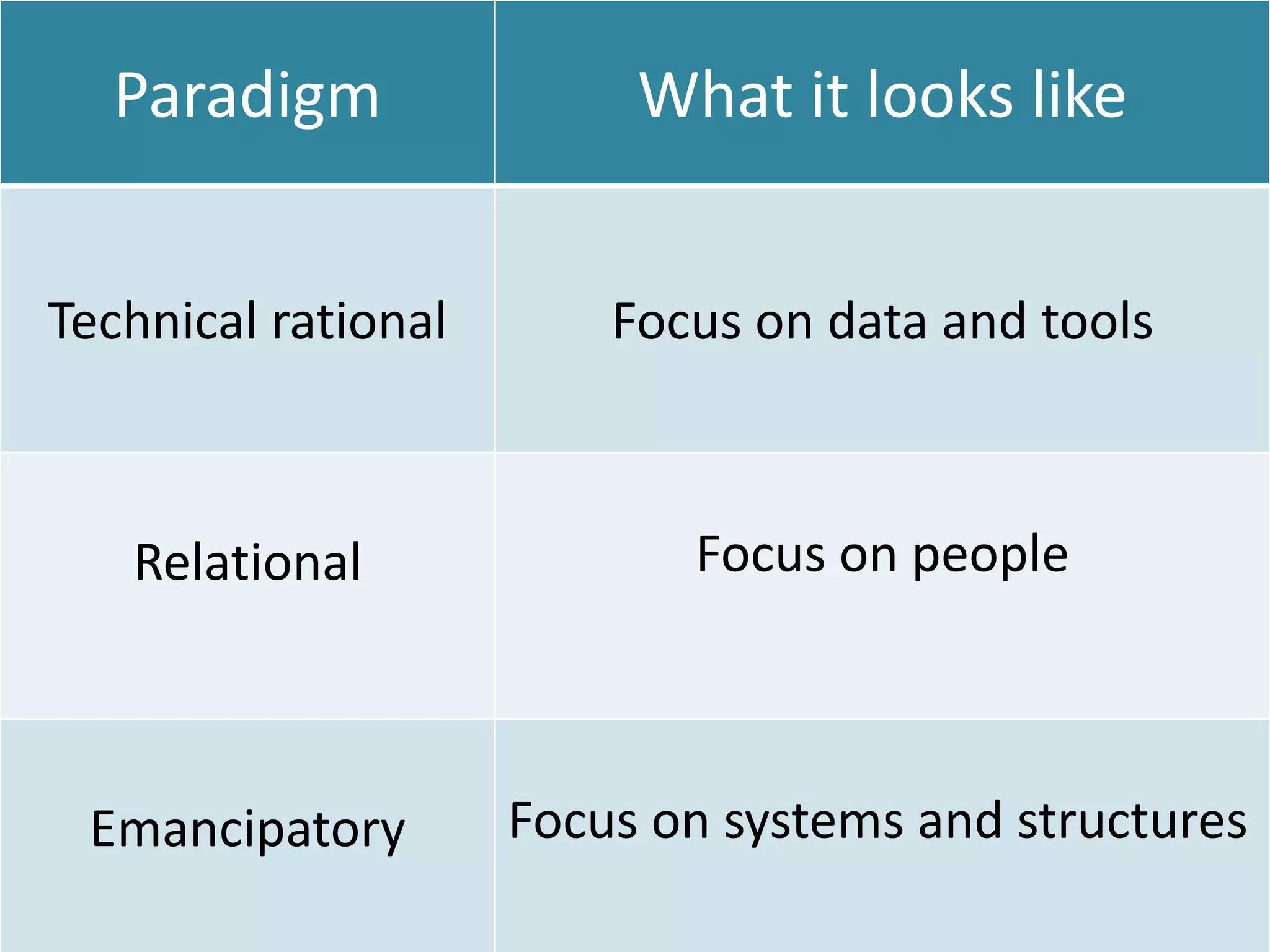

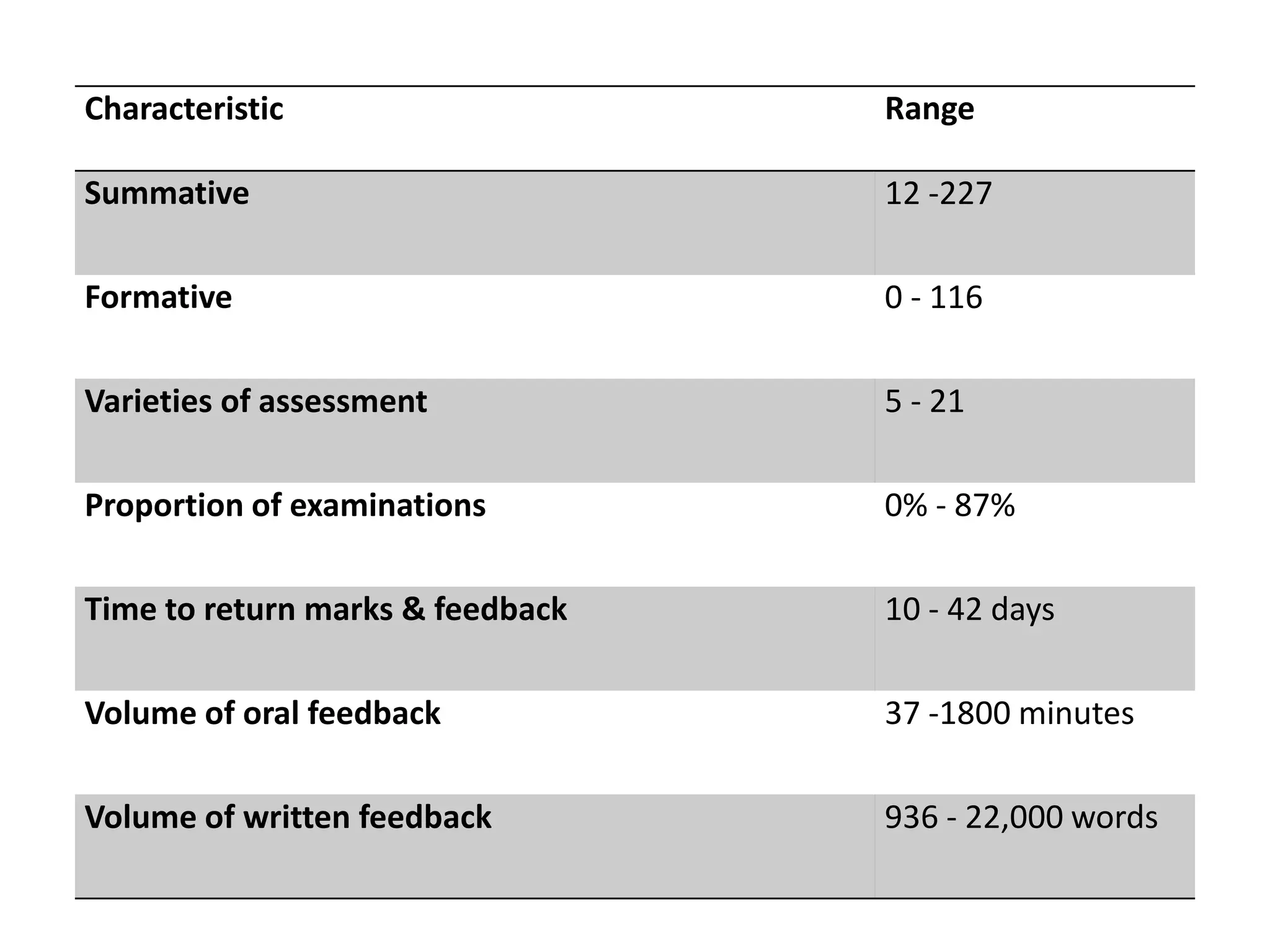

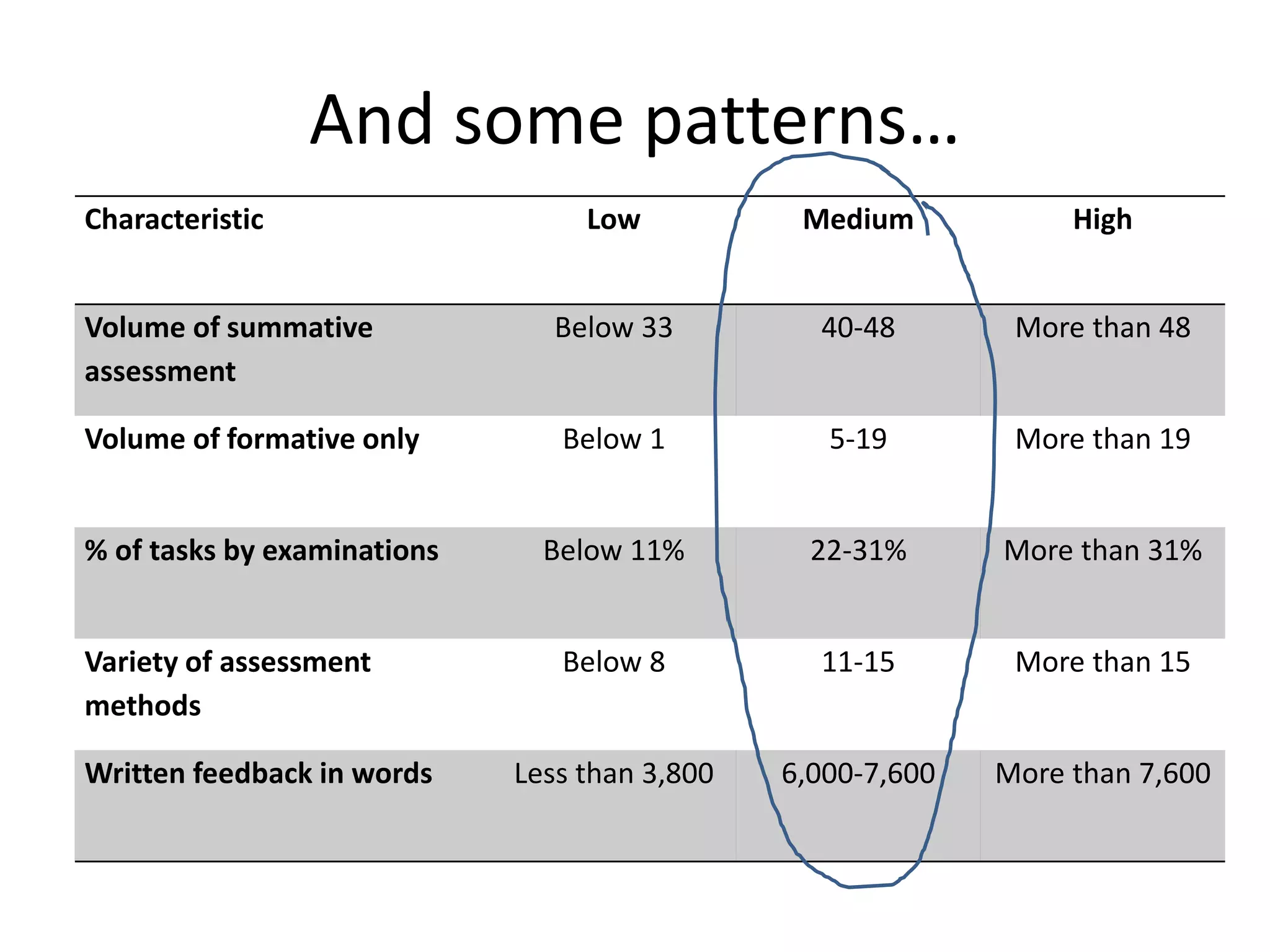

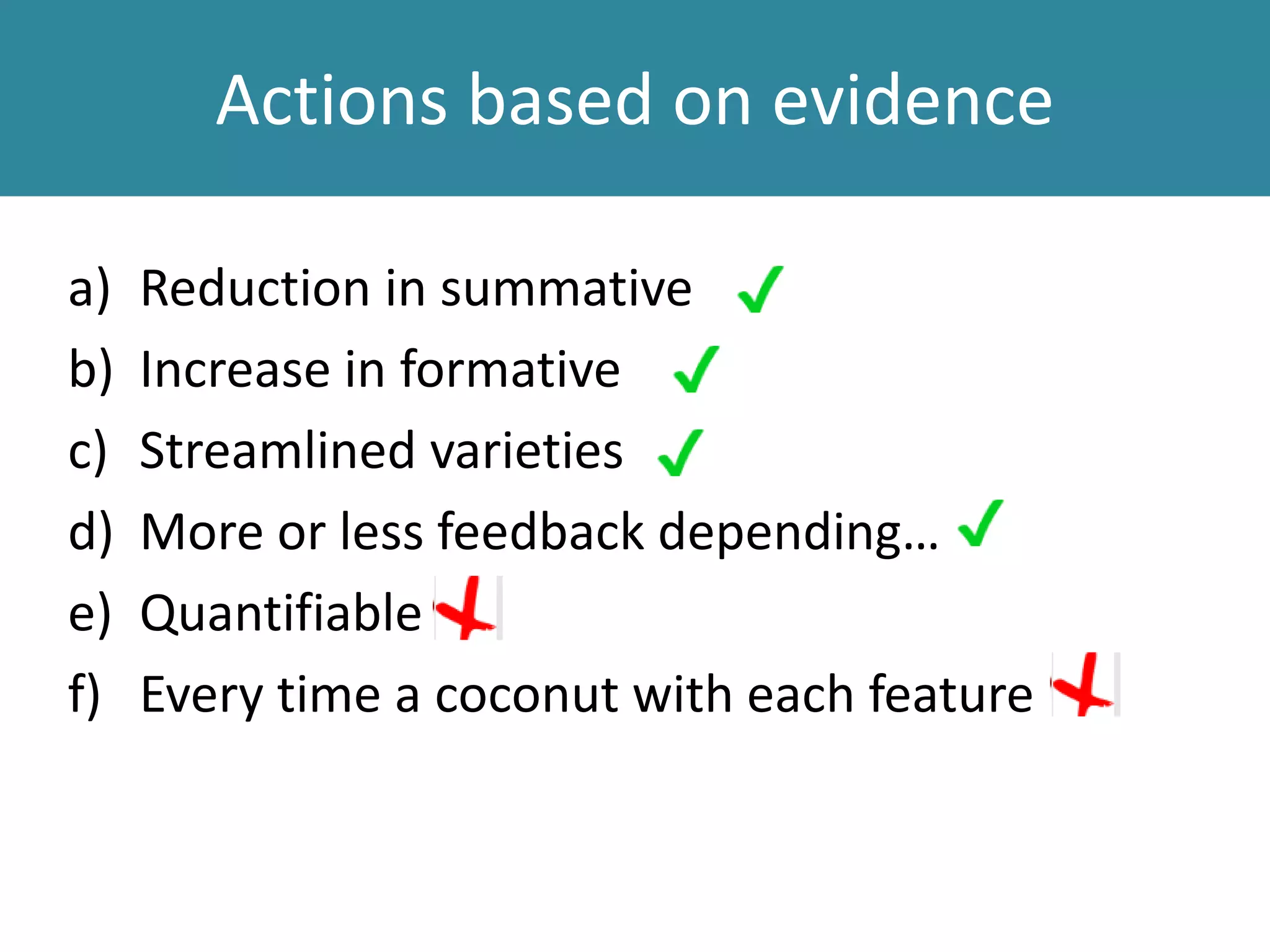

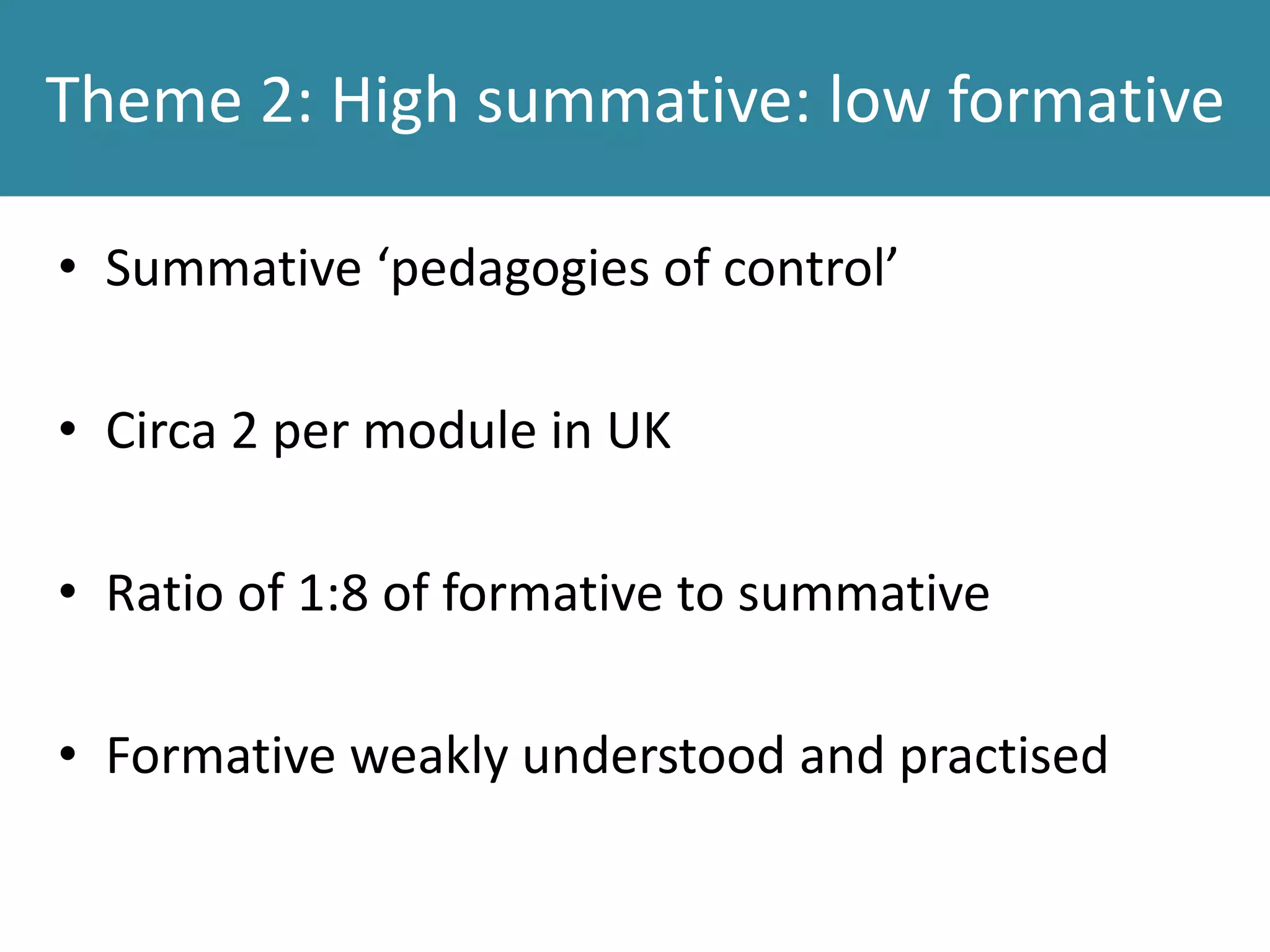

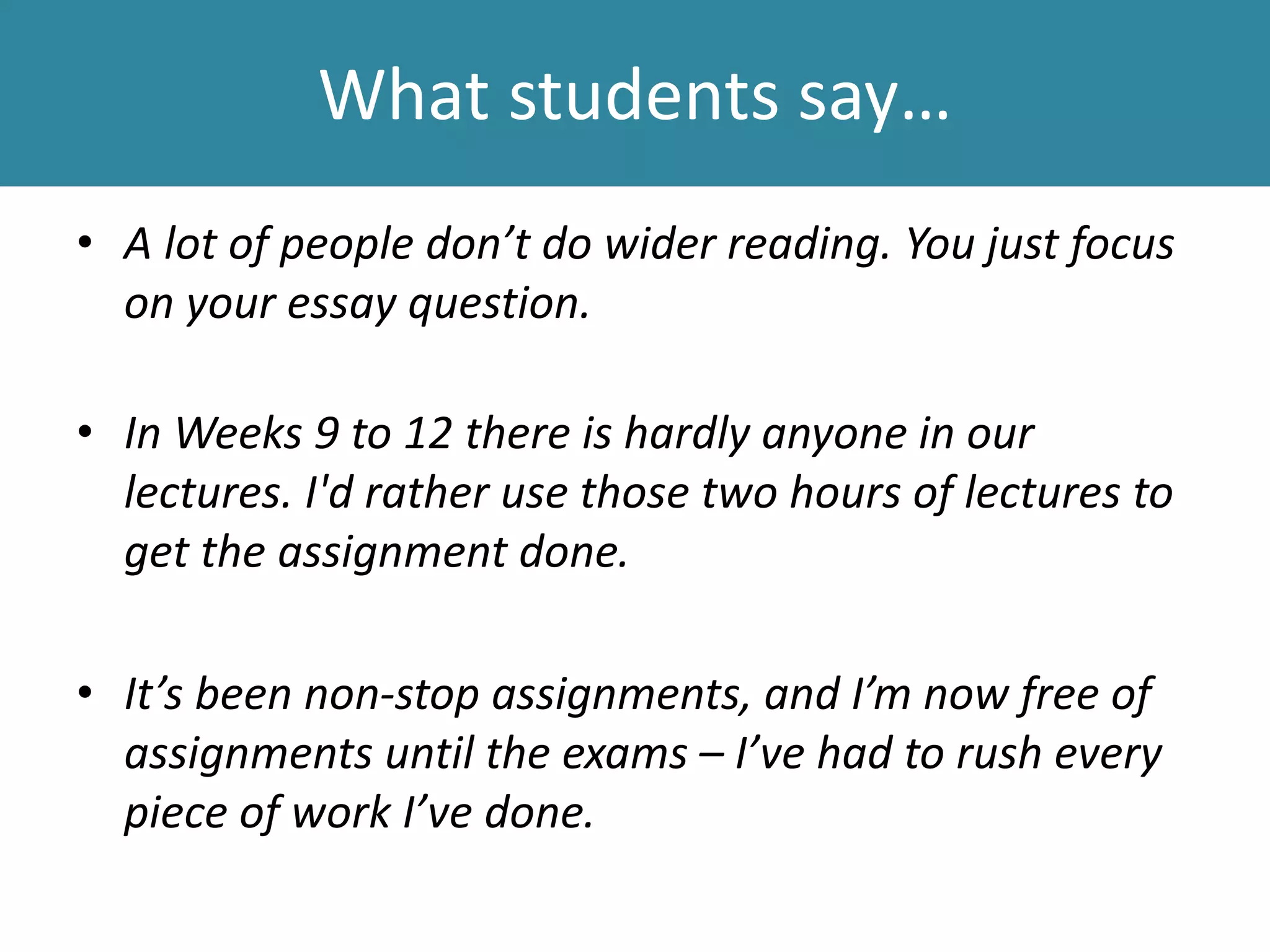

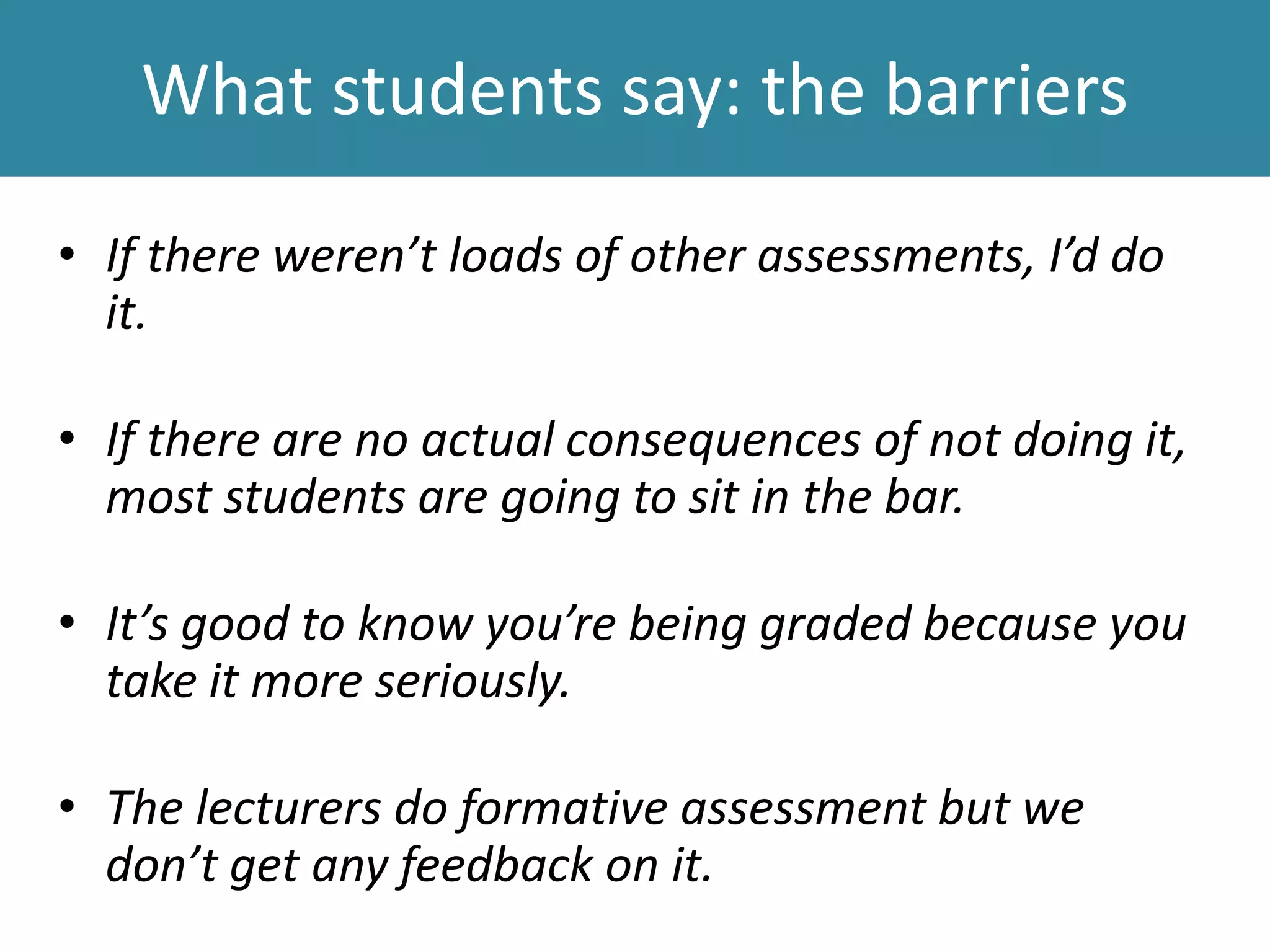

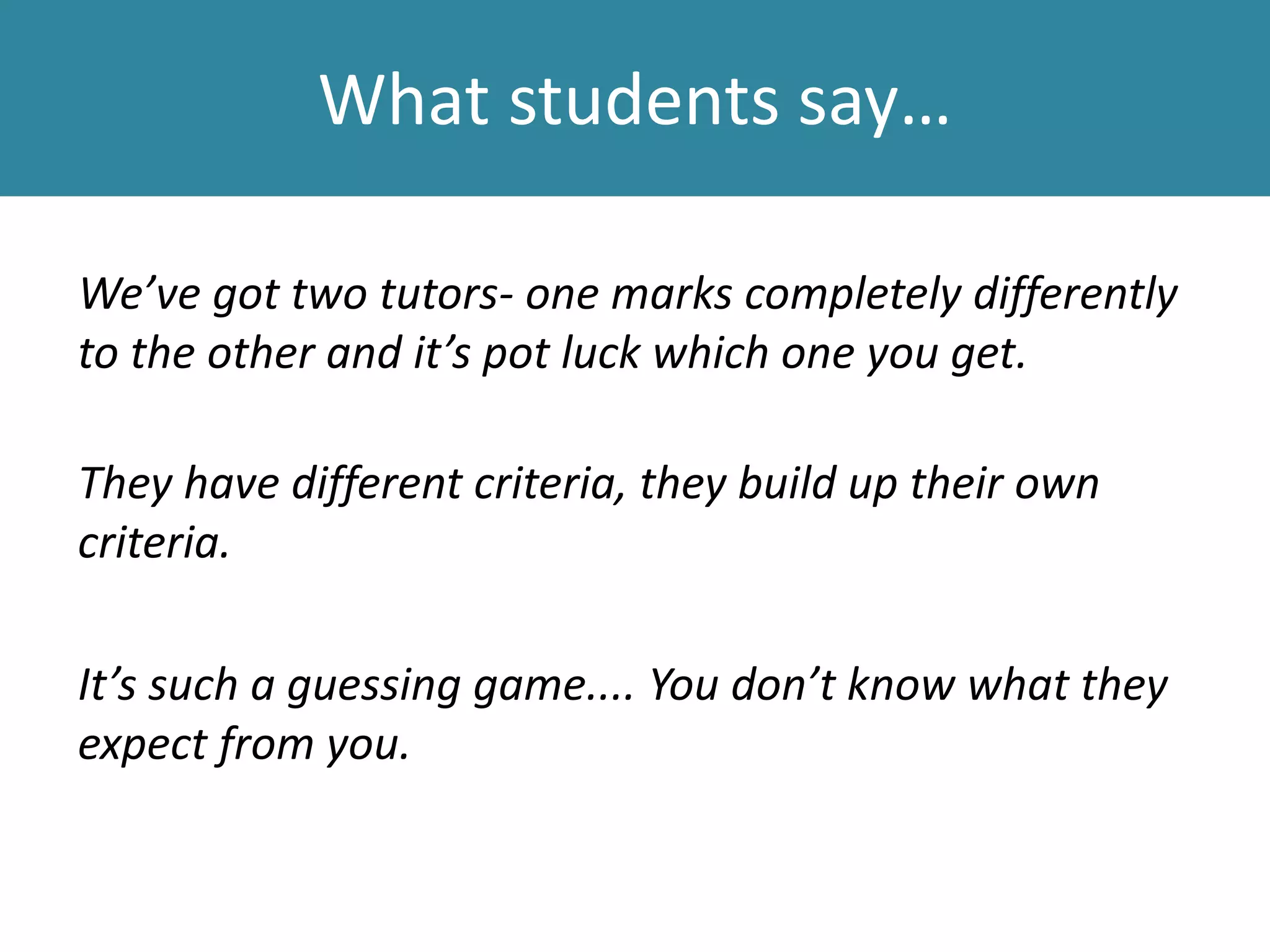

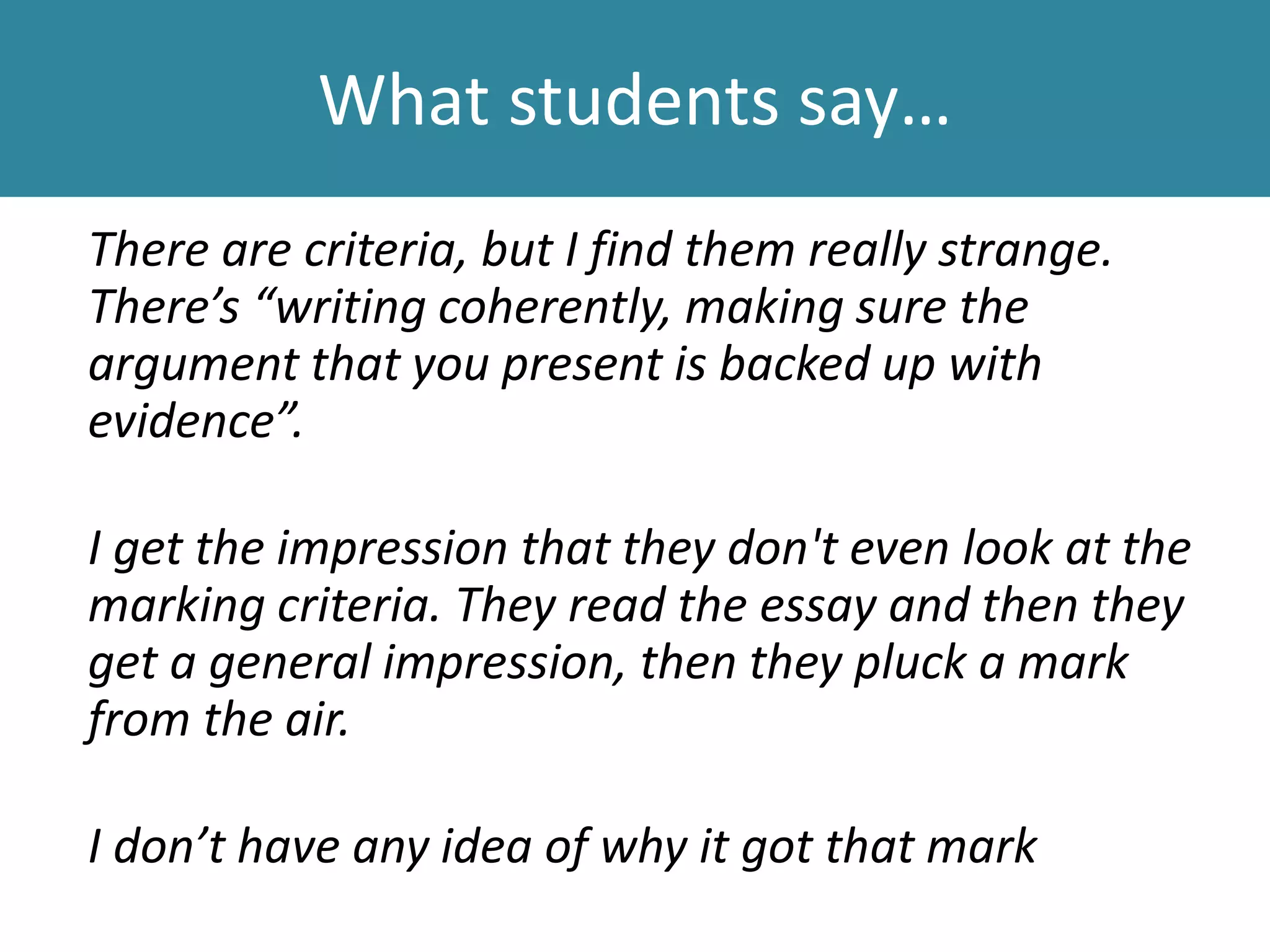

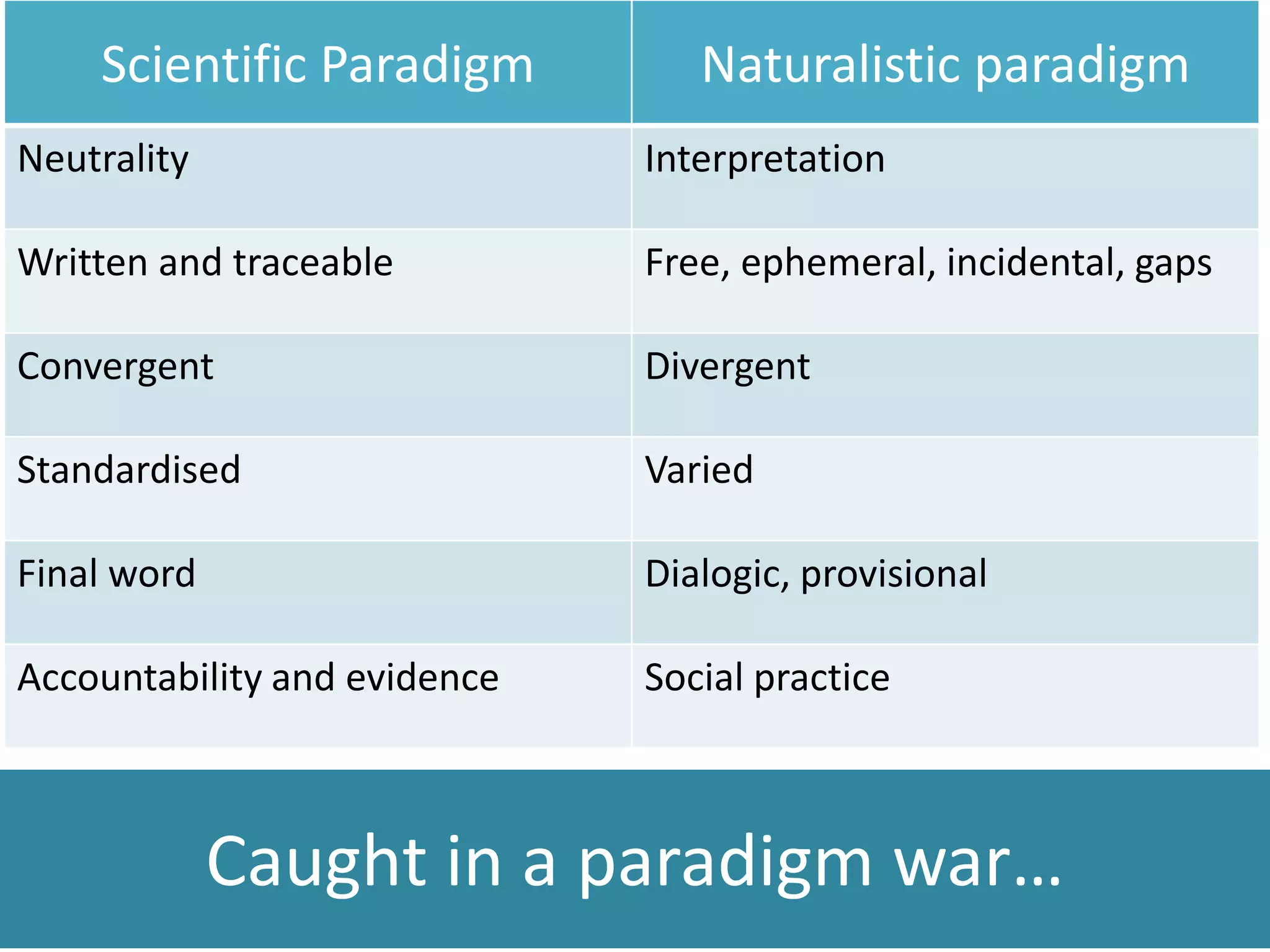

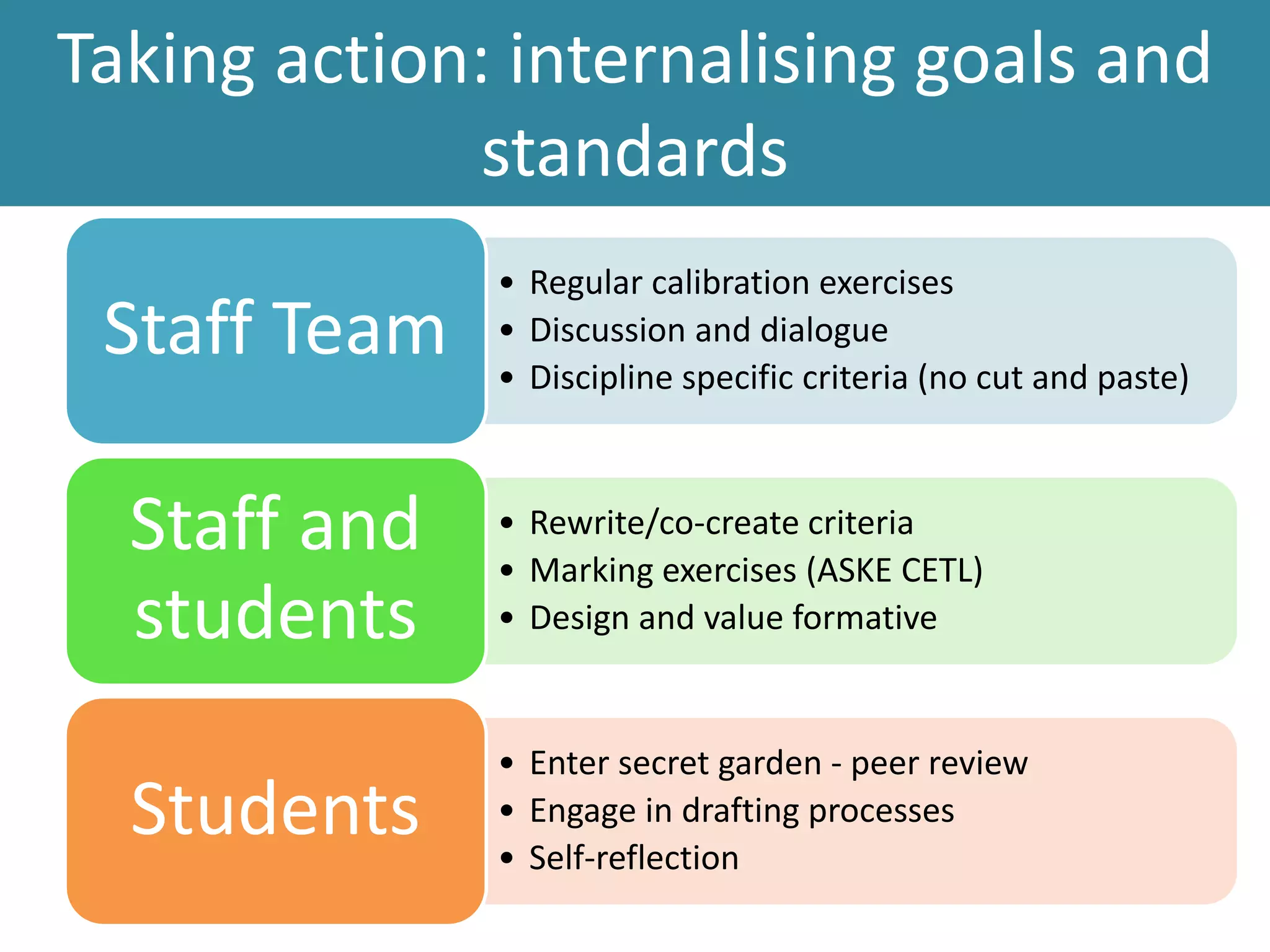

The document discusses the TESTA methodology for transforming assessment and feedback culture in higher education. It emphasizes a shift towards collaborative, holistic curriculum design in place of individualistic approaches. It identifies three main problems: knee-jerk reactions to student feedback, weaknesses in curriculum design, and the gap between evidence and actionable improvement. Its recommendations include rebalancing assessment types, strengthening feedback mechanisms, and building coherence around goals and standards, all with the aim of improving the overall student experience and outcomes.