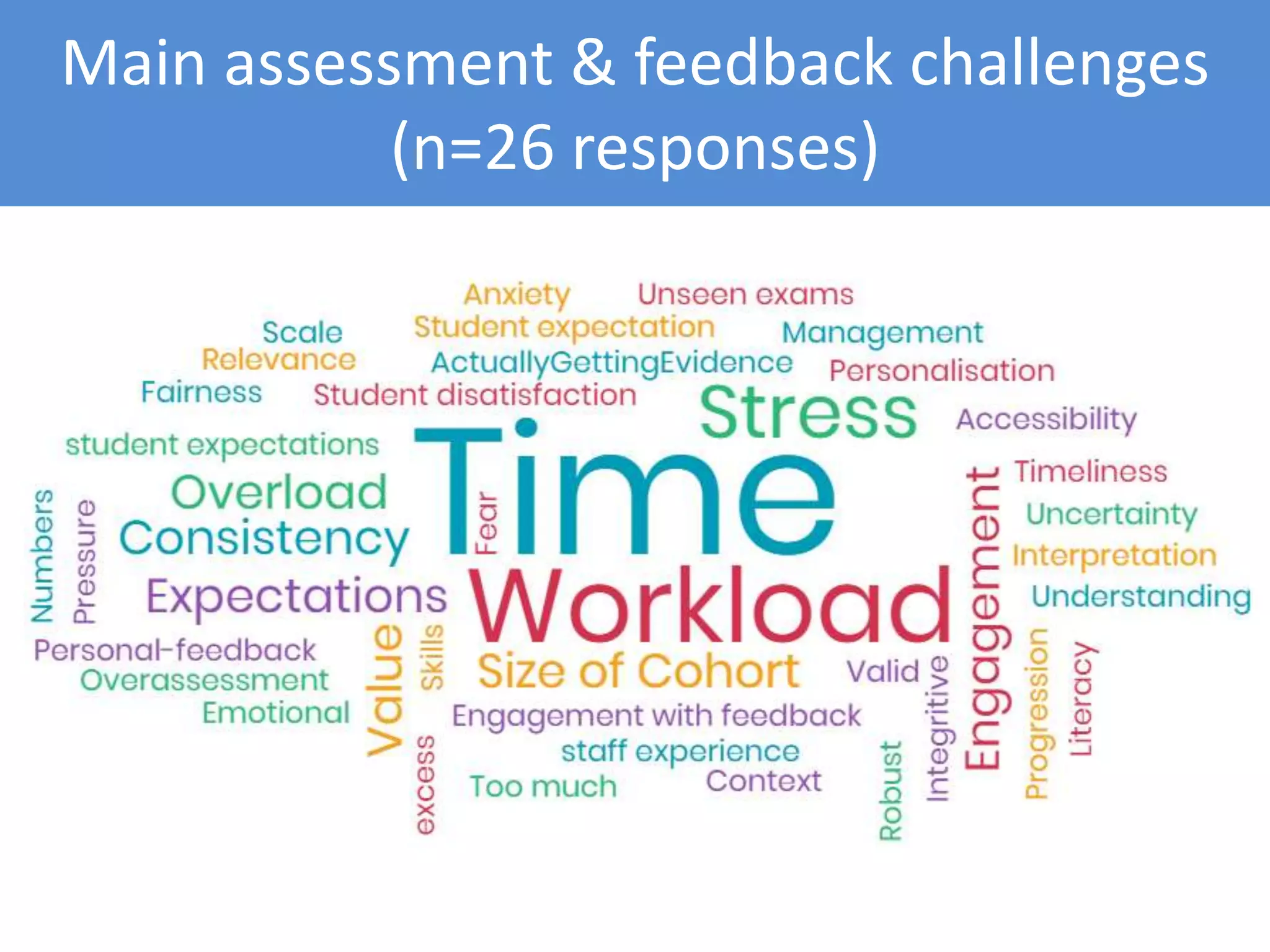

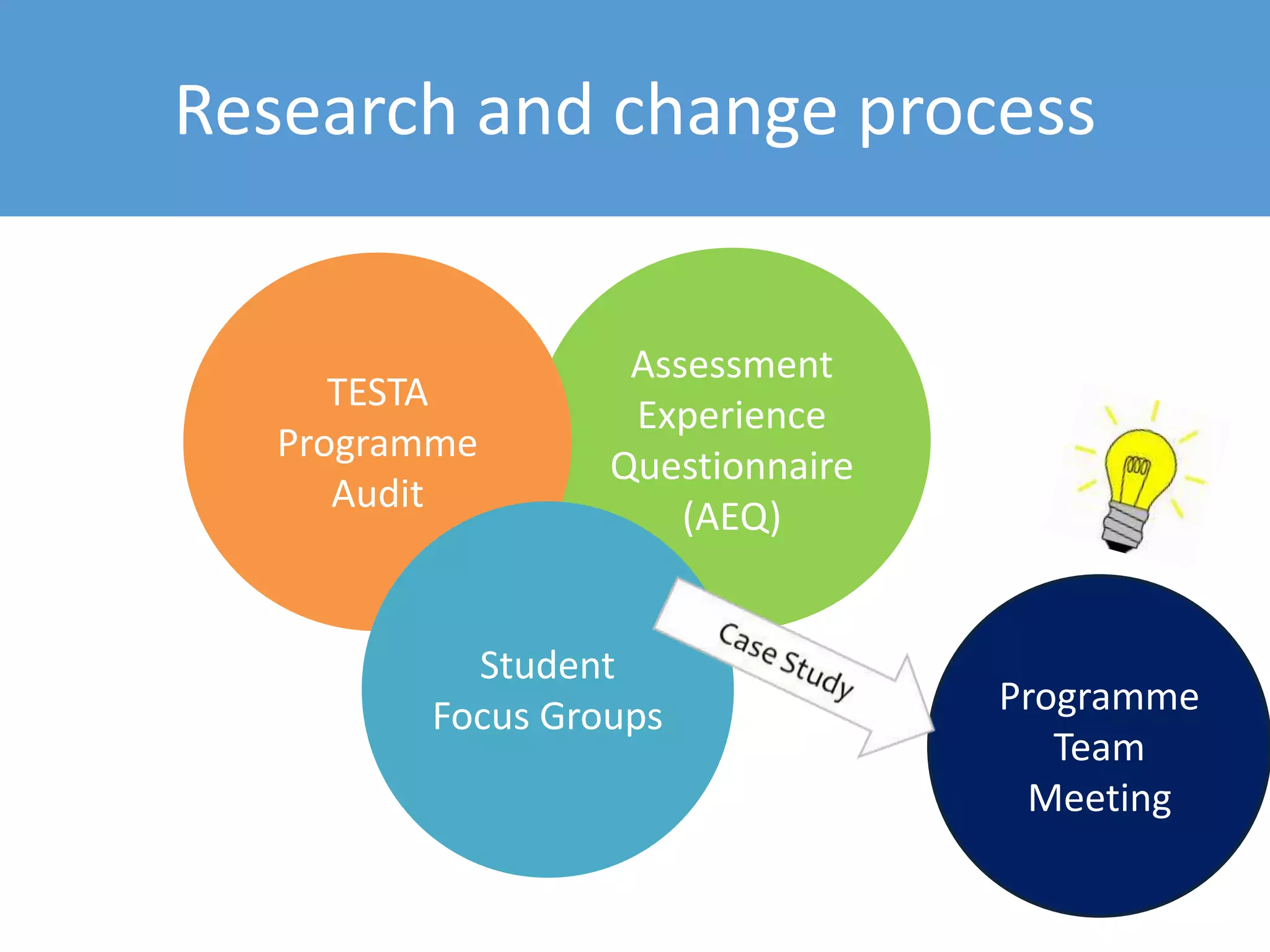

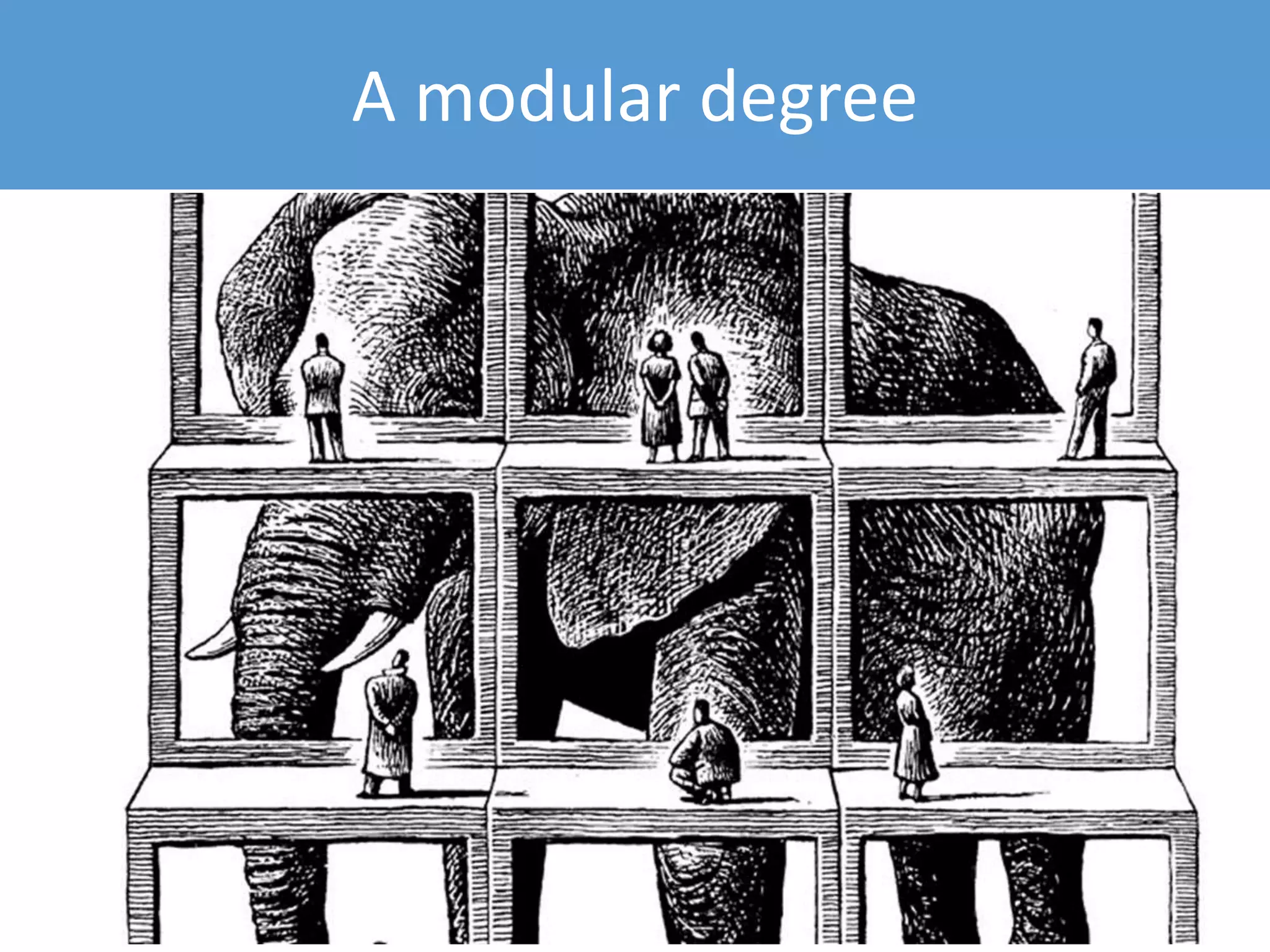

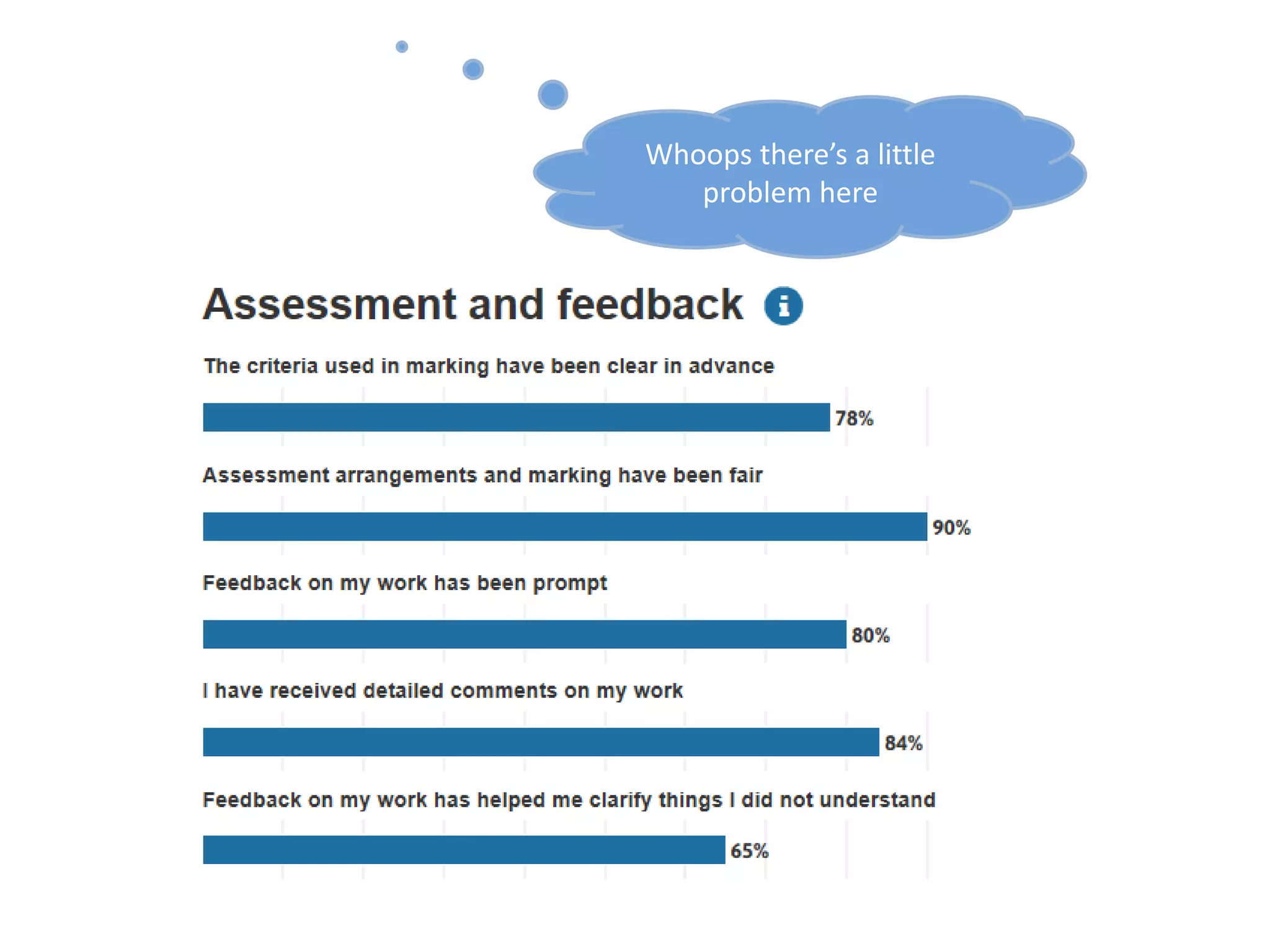

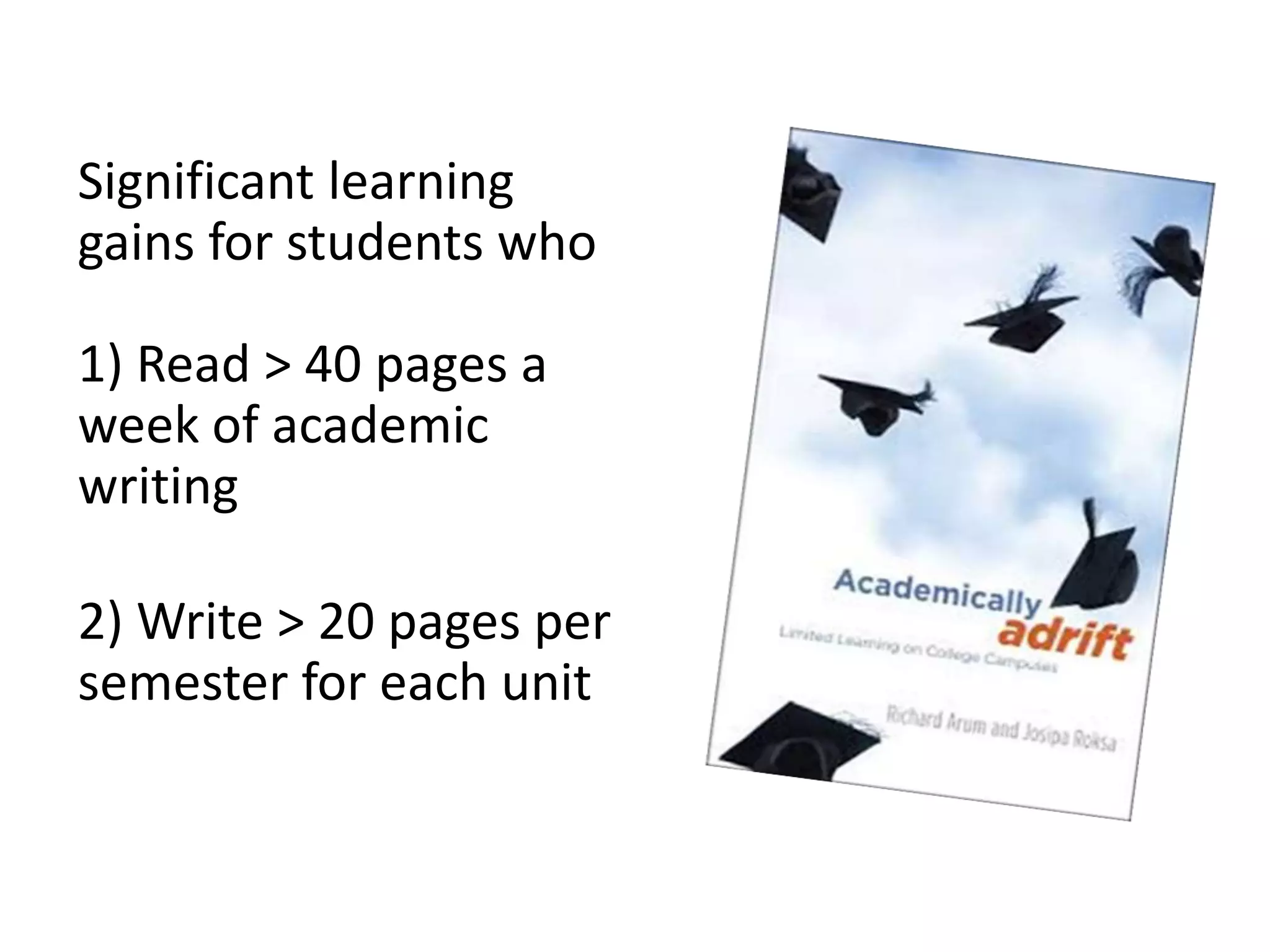

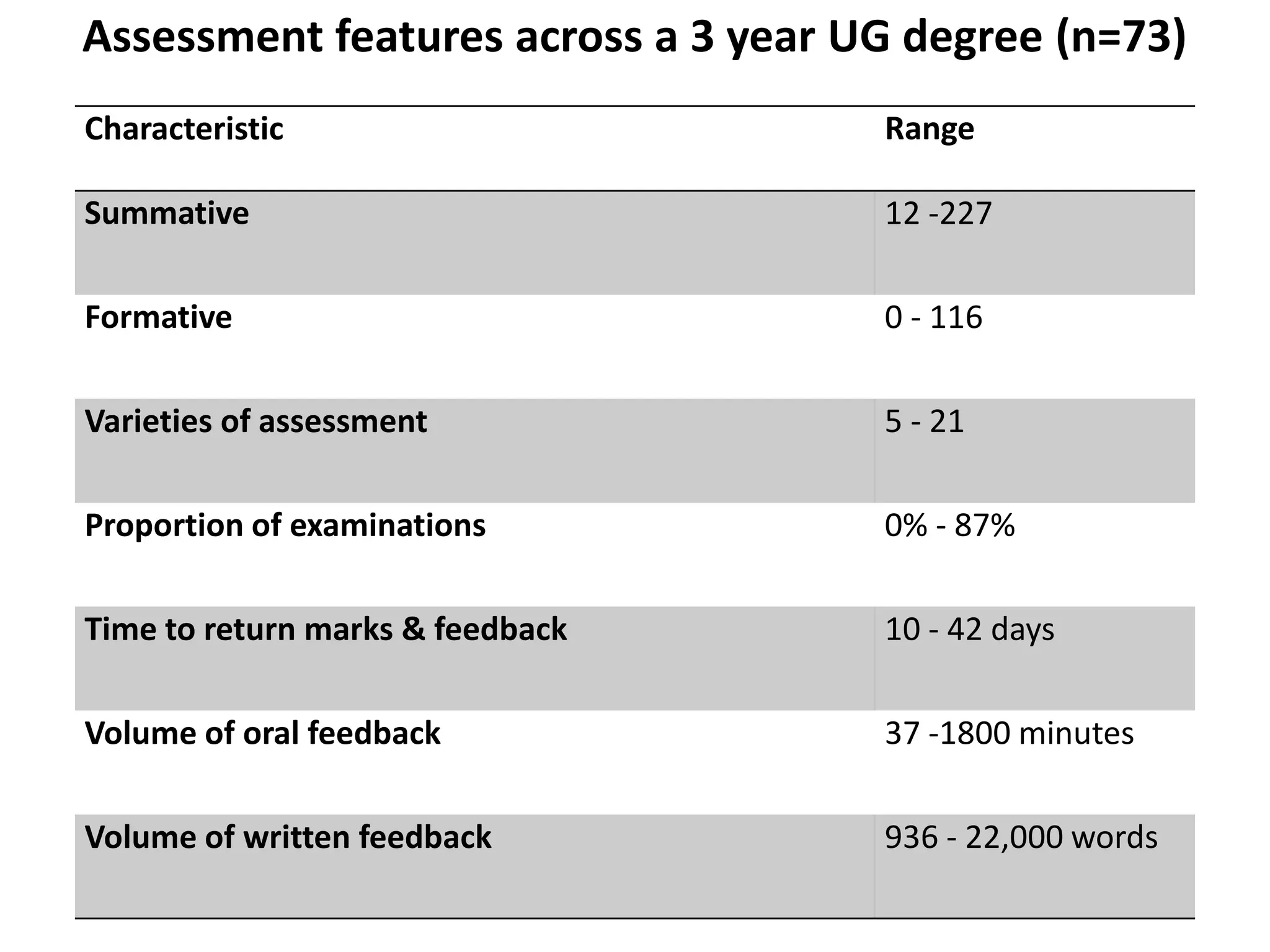

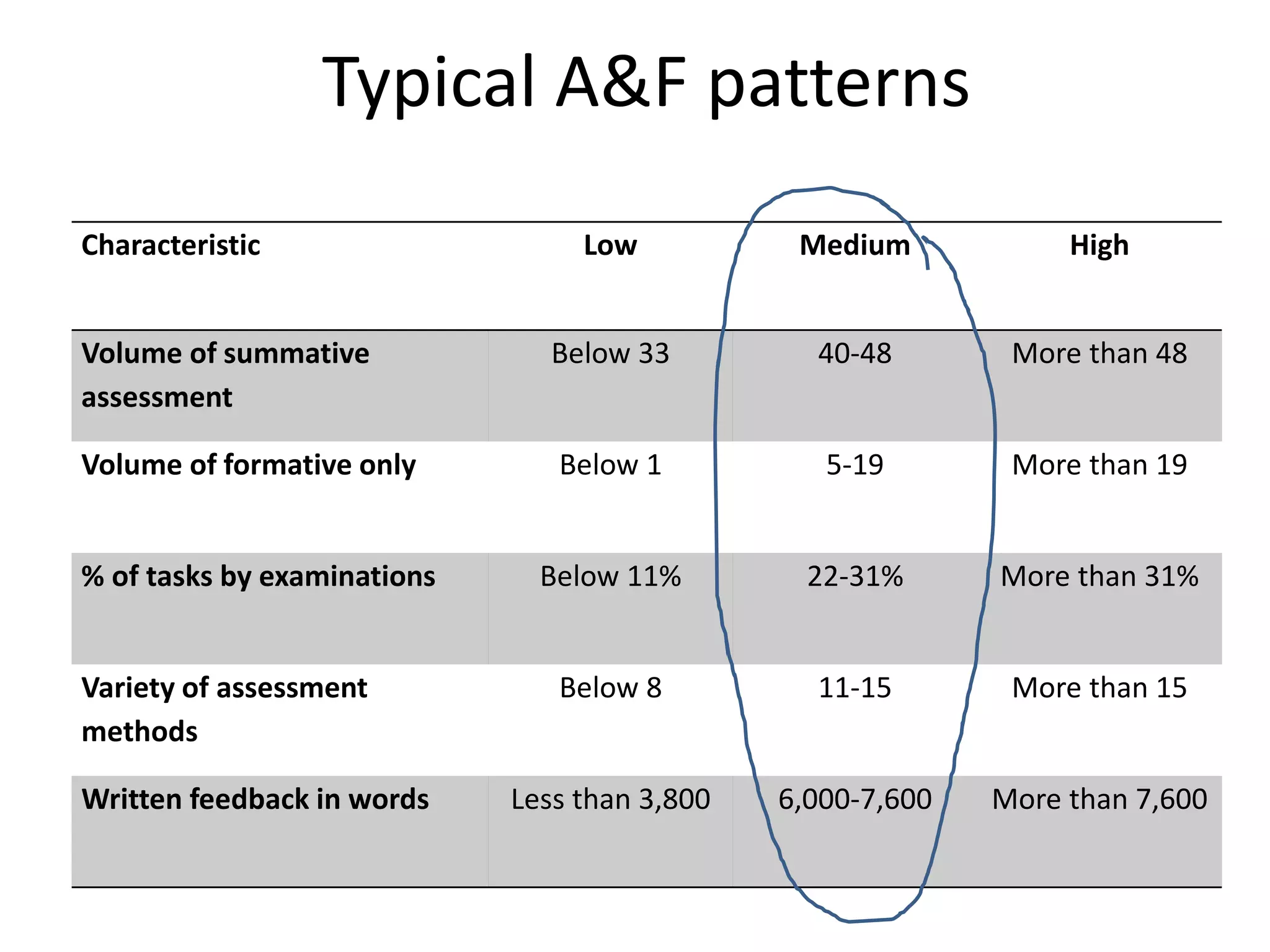

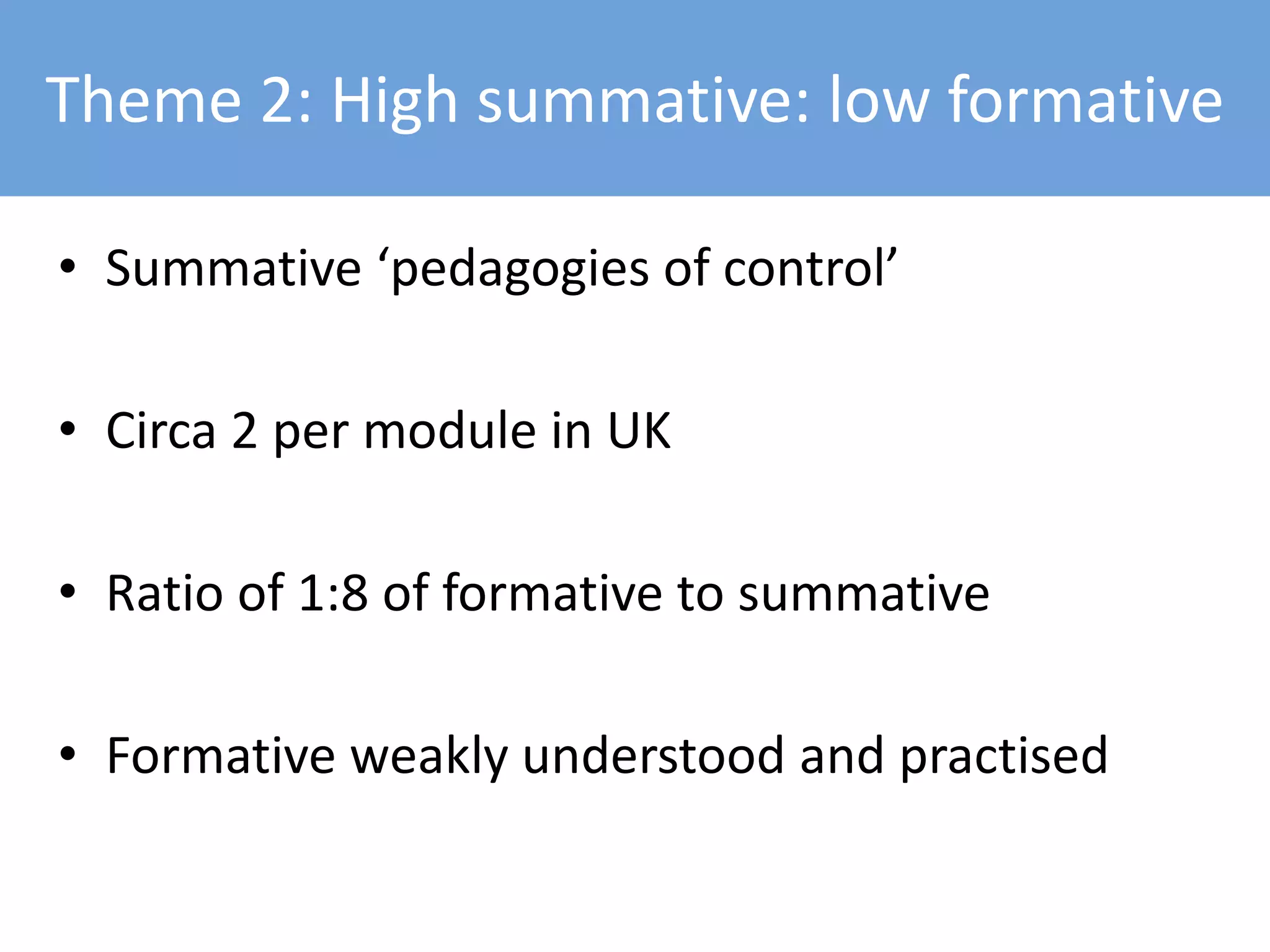

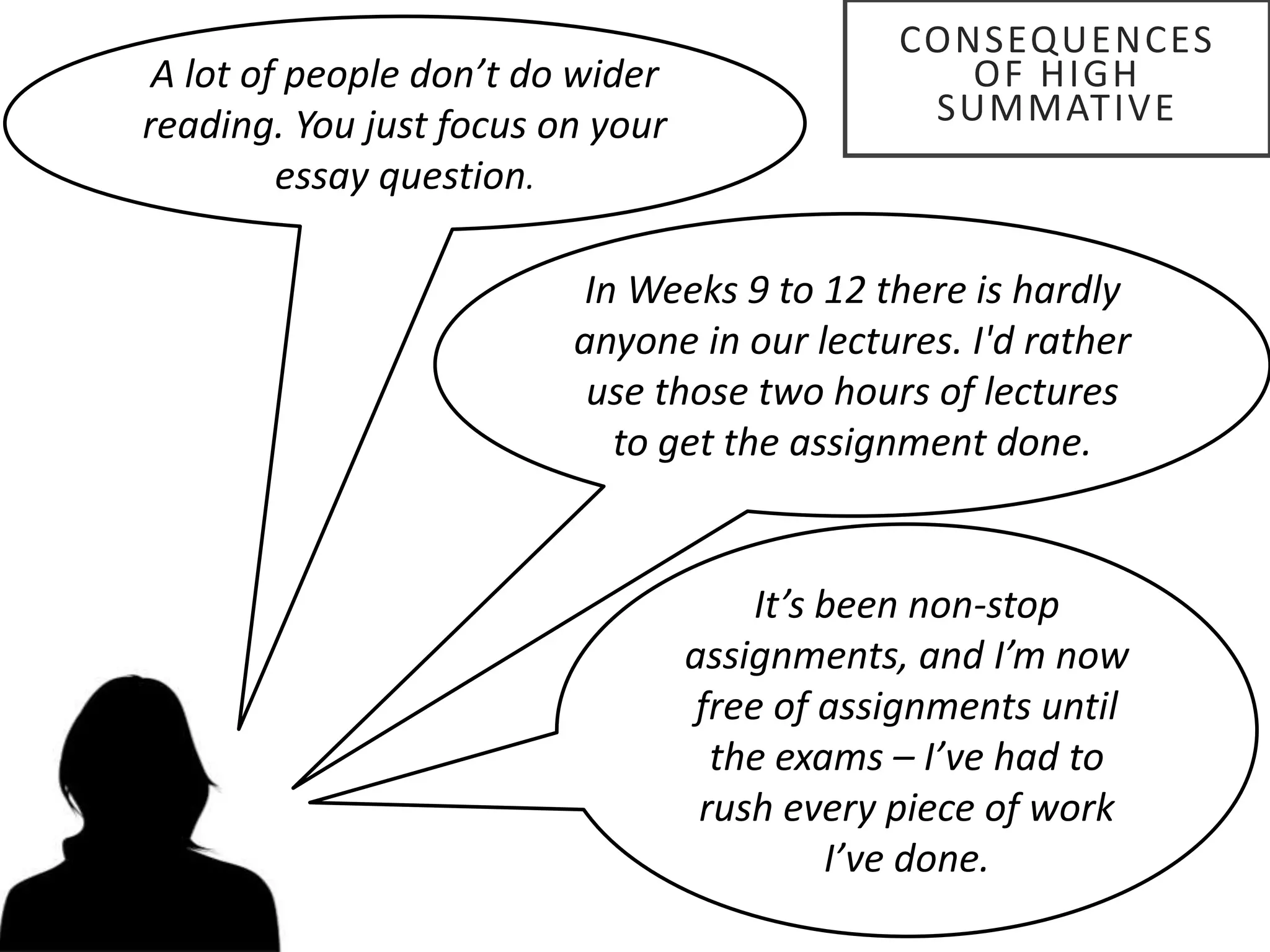

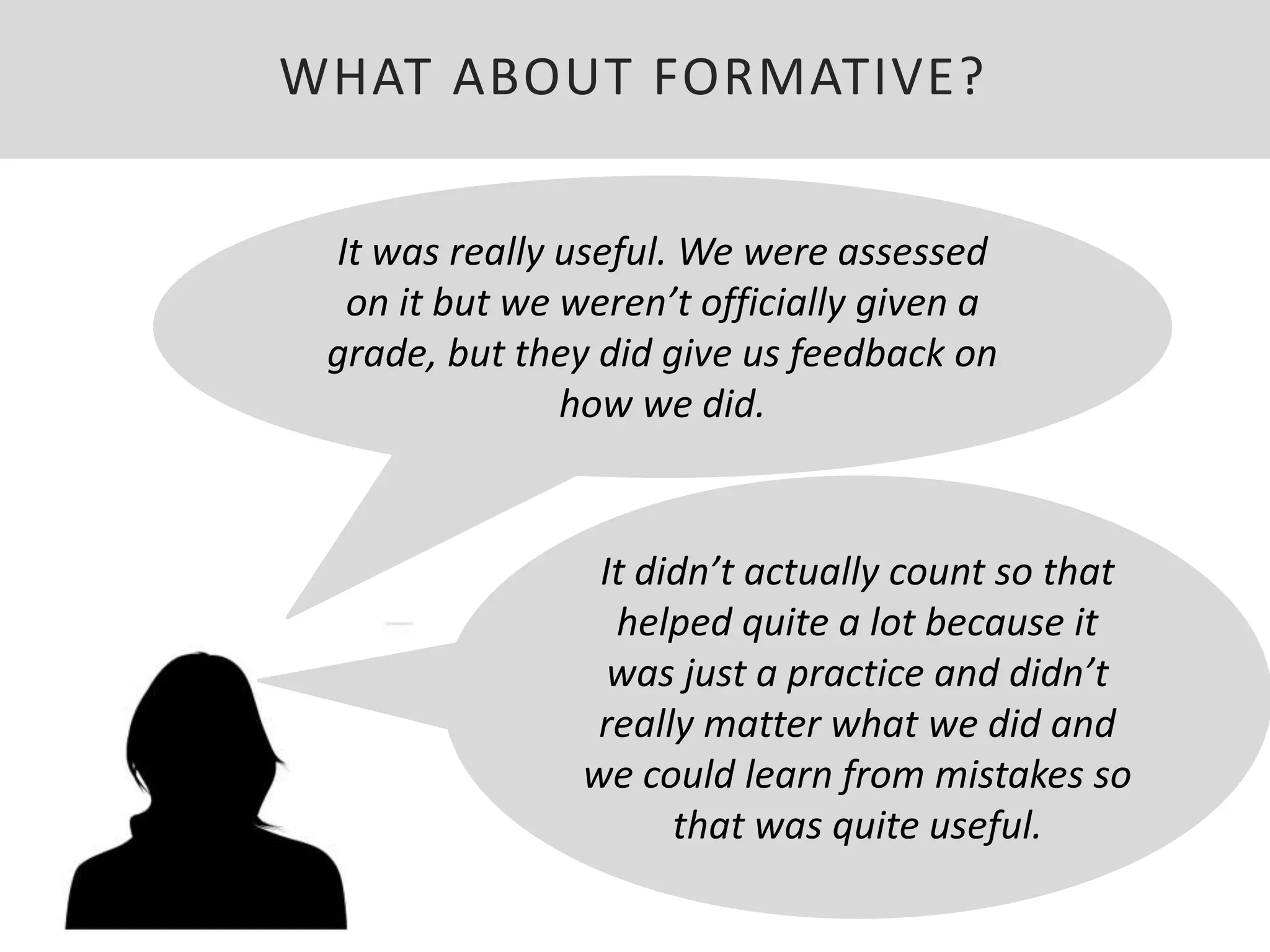

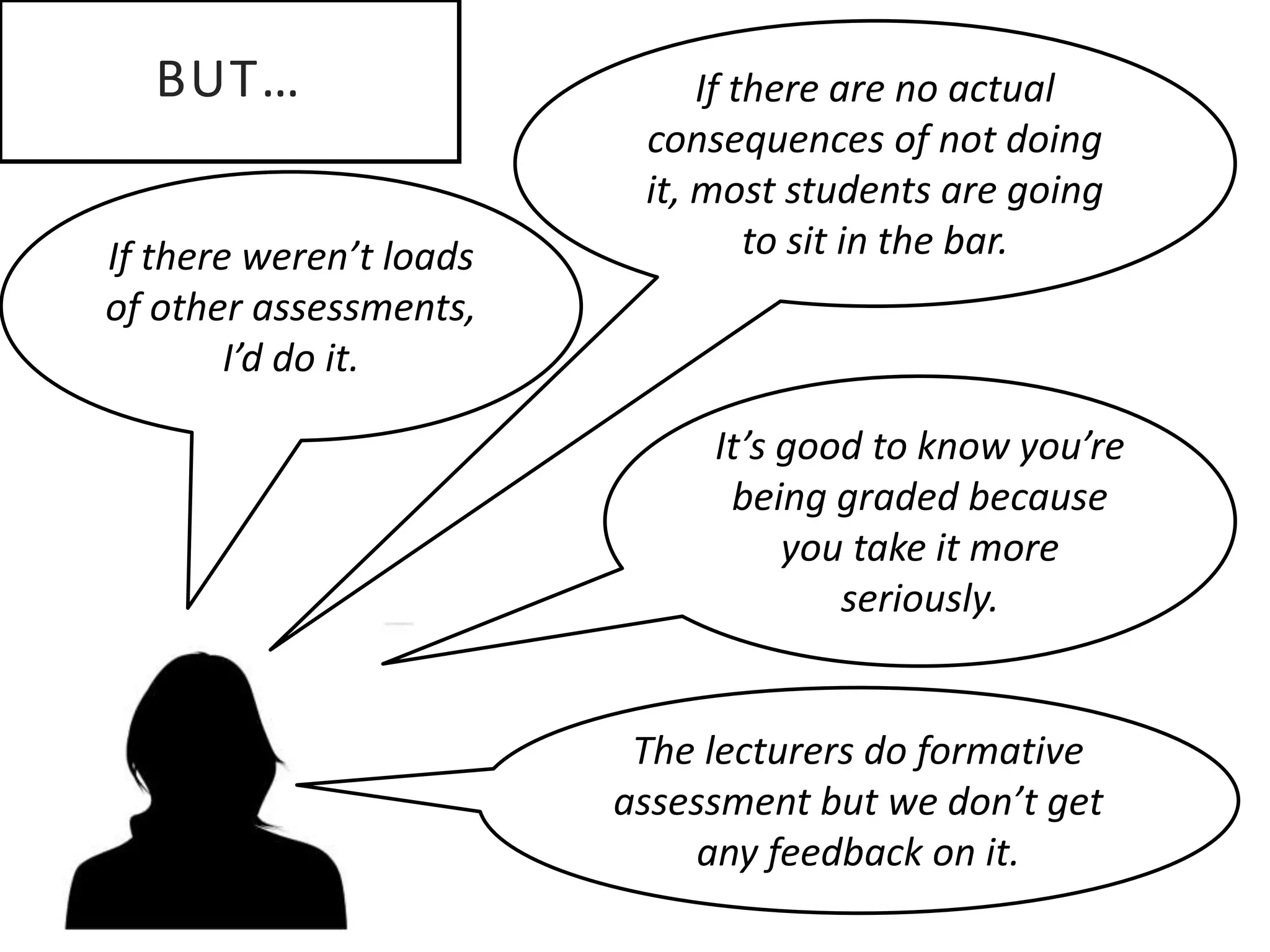

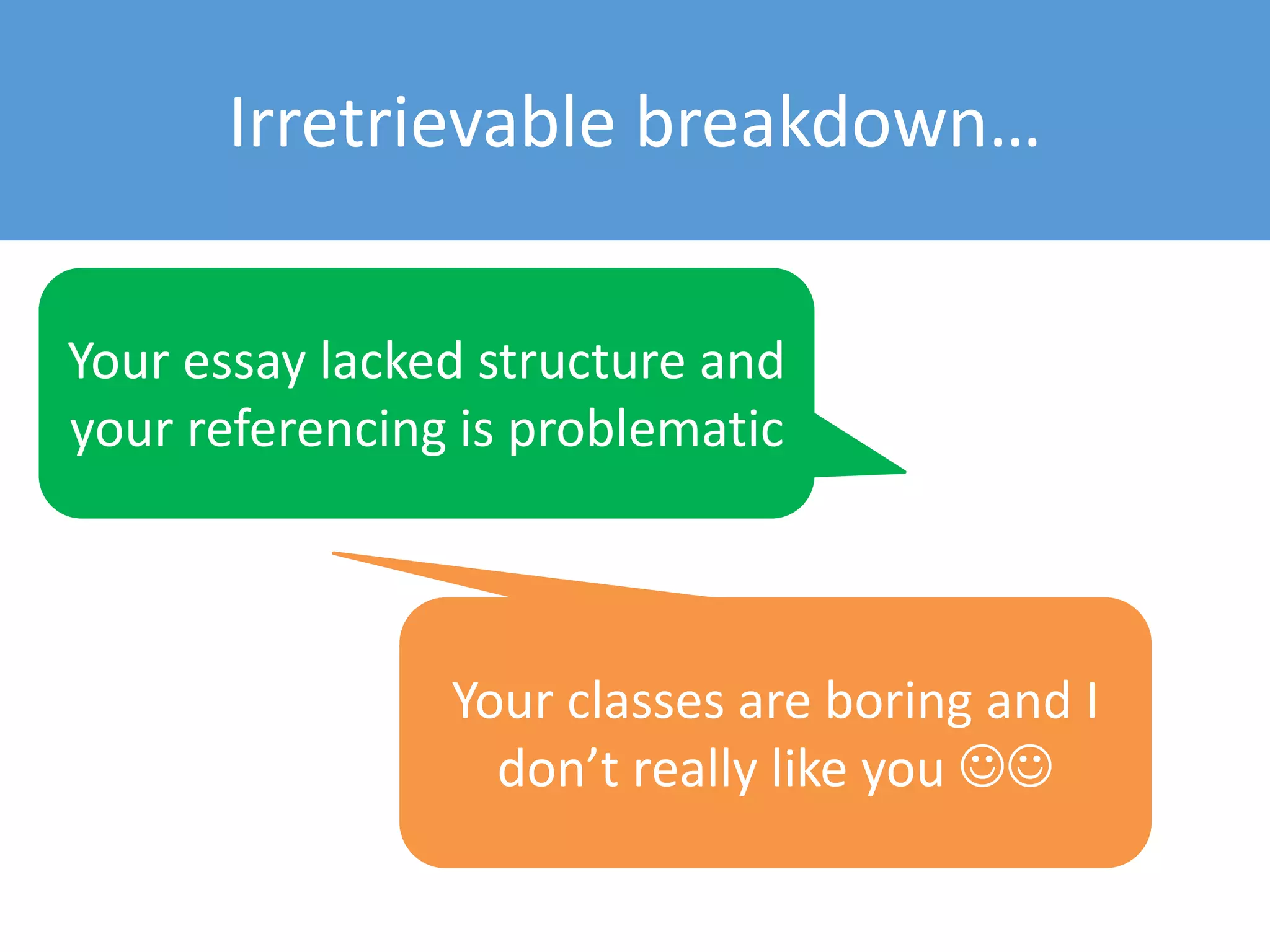

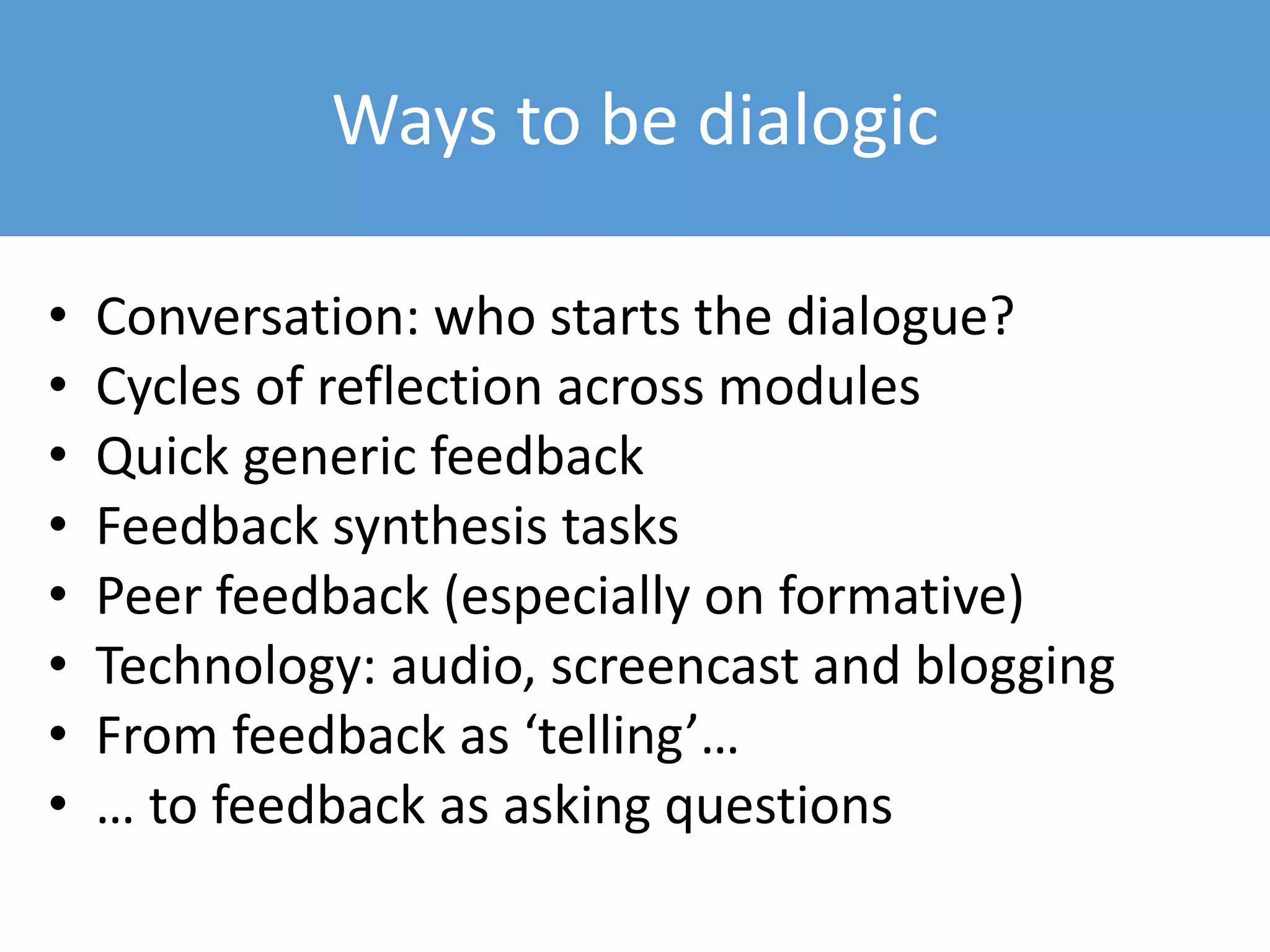

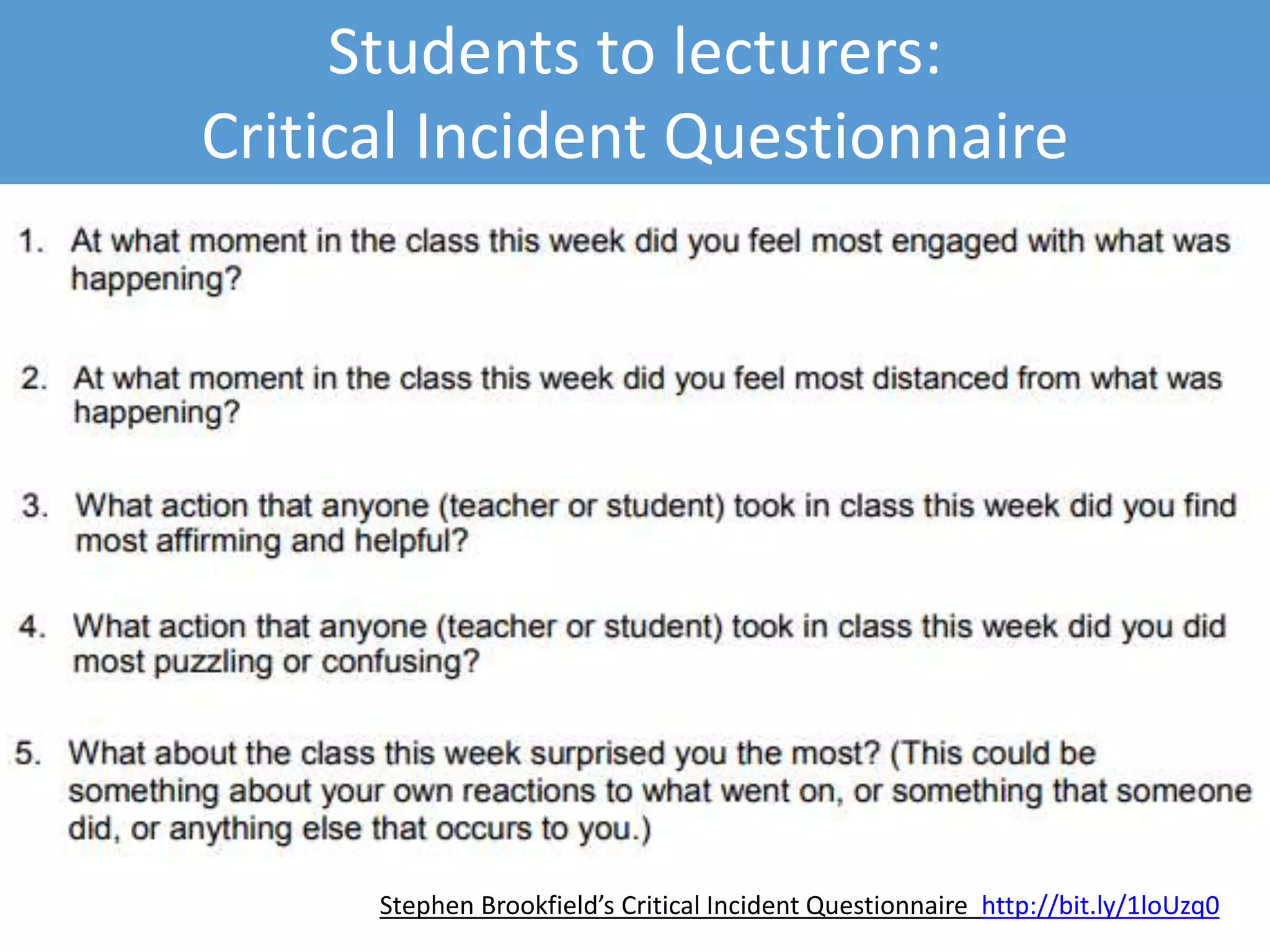

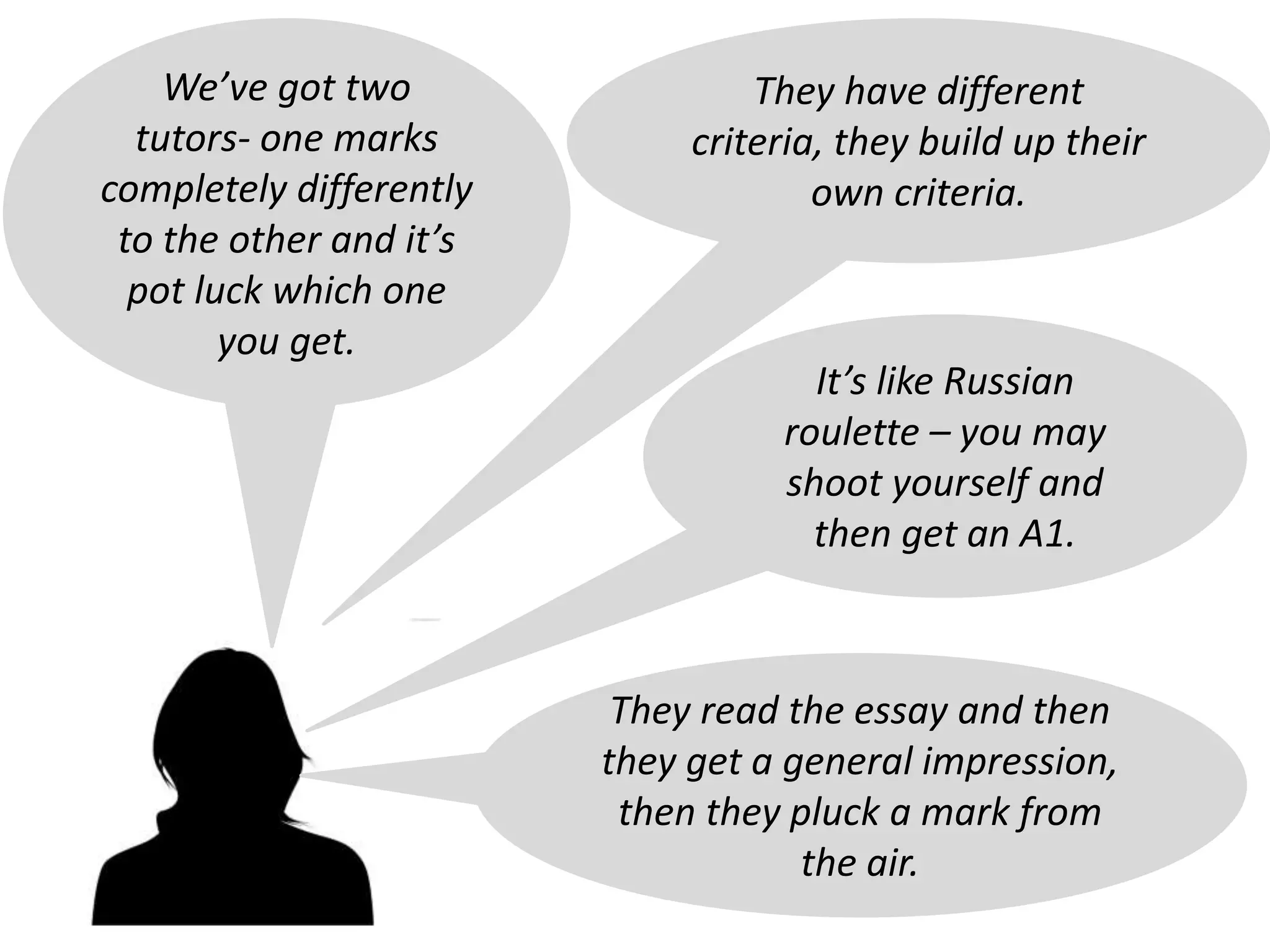

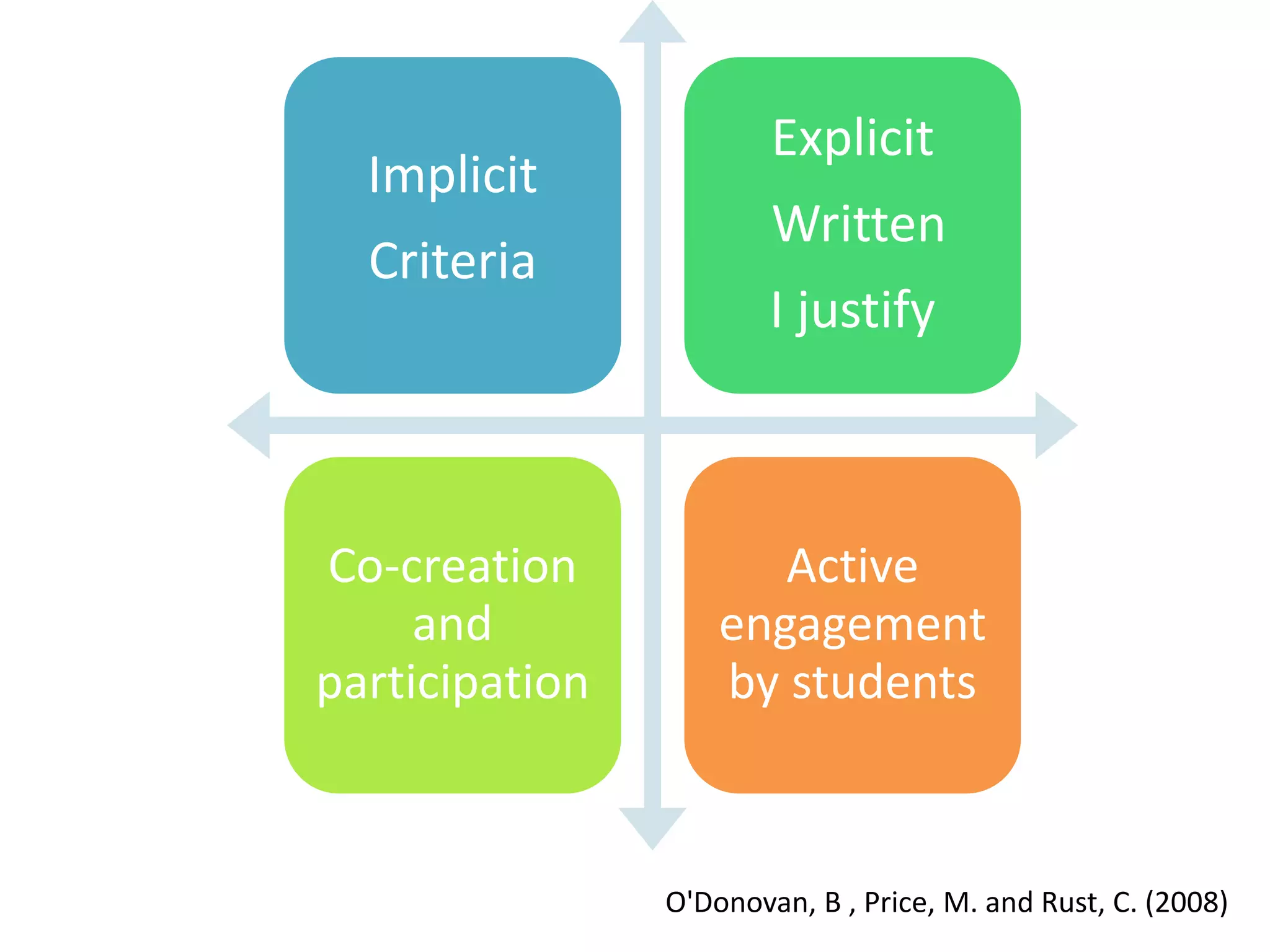

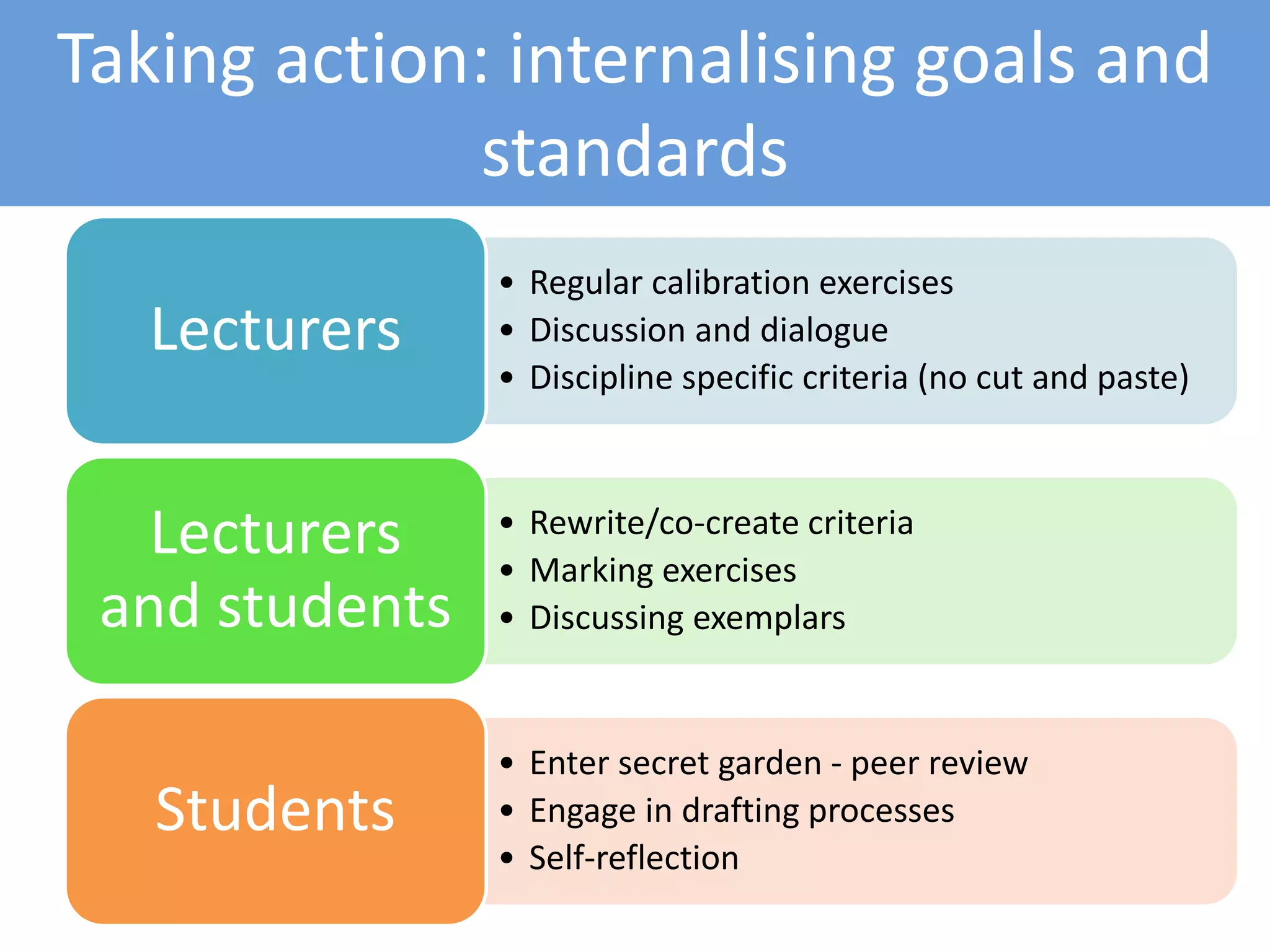

This document summarizes a presentation about TESTA (Transforming the Experience of Students Through Assessment), an assessment program that takes a holistic, program-wide approach. It addresses three common problems in assessment: variation in outcomes whose causes are not understood, challenges with curriculum design, and difficulties with academic reading and writing. The presentation covered TESTA's evidence and its strategies for improving assessment patterns, balancing formative and summative assessment, providing more connected feedback, and clarifying goals and standards to reduce student confusion.